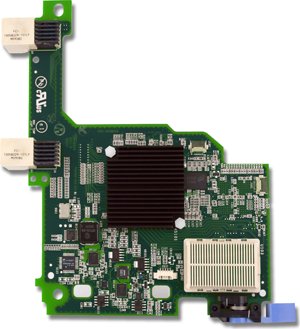

A few weeks ago, IBM and Emulex announced a new blade server adapter for the IBM BladeCenter and IBM System x line, called the "Emulex Virtual Fabric Adapter for IBM BladeCenter" (IBM part # 49Y4235). Frequent readers may recall that I had a "so what" attitude when I blogged about it in October, and that was because I didn't get it. I didn't see what the big deal was about taking a 10Gb pipe and carving it up into 4 "virtual NICs". HP has been doing this for a long time with their FlexNICs (check out VirtualKenneth's blog for a great, detailed look at this technology), so I didn't see the value in what IBM and Emulex were trying to do. But now I understand. Before I get into this, let me remind you what this adapter is. The Emulex Virtual Fabric Adapter (CFFh) for IBM BladeCenter is a dual-port 10 Gb Ethernet card that supports 1 Gbps or 10 Gbps traffic, or up to eight virtual NIC devices.

This adapter hopes to address three key I/O issues:

1. Need for more than two ports per server, with 6-8 recommended for virtualization
2. Need for more than 1Gb of bandwidth, but can't support full 10Gb today
3. Need to prepare for network convergence in the future

"1, 2, 3, 4"

I recently attended an IBM/Emulex partner event, and Emulex presented a unique way to understand the value of the Emulex Virtual Fabric Adapter via the term "1, 2, 3, 4." Let me explain:

"1" – Emulex uses a single-chip architecture for these adapters. (As a non-I/O guy, I'm not sure why this matters; I welcome your comments.)

"2" – Supports two platforms: rack and blade. (Easy enough to understand, but this also emphasizes that a majority of the new IBM System x servers announced this week will have the Virtual Fabric Adapter as "standard".)

"3" – Emulex will have three product models for IBM: one for the blade servers, one for the rack servers, and one integrated into the new eX5 servers.

"4" – There are four modes of operation:

- Legacy 1Gb Ethernet
- 10Gb Ethernet
- Fibre Channel over Ethernet (FCoE)…via software entitlement ($$)
- iSCSI Hardware Acceleration…via software entitlement ($$)

This last point is the key reason I think this product could be of substantial value. The adapter lets a user begin with traditional Ethernet, then grow into 10Gb, FCoE or iSCSI without any physical change; all they need to do is buy a license (for FCoE or iSCSI).
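To make the "no physical change, just a license" idea concrete, here is a minimal Python sketch that models the four modes as capabilities unlocked by entitlements. The names (`Mode`, `available_modes`, and the entitlement keys `"fcoe"` and `"iscsi"`) are hypothetical and are not part of any IBM or Emulex tool; the only rule encoded is the one described above: the Ethernet modes are always available, while FCoE and iSCSI acceleration each require a purchased entitlement.

```python
from enum import Enum


class Mode(Enum):
    """The four operating modes described for the Virtual Fabric Adapter."""
    ETH_1G = "Legacy 1Gb Ethernet"
    ETH_10G = "10Gb Ethernet"
    FCOE = "Fibre Channel over Ethernet (FCoE)"
    ISCSI_HW = "iSCSI Hardware Acceleration"


# Hypothetical entitlement keys: which modes need a purchased license to unlock.
LICENSED_MODES = {
    Mode.FCOE: "fcoe",
    Mode.ISCSI_HW: "iscsi",
}


def available_modes(entitlements: set[str]) -> set[Mode]:
    """Return the modes usable on the same physical card, given purchased entitlements."""
    modes = set()
    for mode in Mode:
        required = LICENSED_MODES.get(mode)
        # Ethernet modes need no license; storage modes need their entitlement key.
        if required is None or required in entitlements:
            modes.add(mode)
    return modes
```

For example, `available_modes(set())` yields only the two Ethernet modes, and adding `"fcoe"` to the entitlements unlocks FCoE on the same card without touching the hardware.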

Modes of operation

The expansion card has two modes of operation: standard physical port mode (pNIC) and virtual NIC (vNIC) mode.

In vNIC mode, each physical port appears to the blade server as four virtual NICs with a default bandwidth of 2.5 Gbps per vNIC. Bandwidth for each vNIC can be configured from 100 Mbps to 10 Gbps, up to a maximum of 10 Gb per virtual port.

In pNIC mode, the expansion card can operate as a standard 10 Gbps or 1 Gbps 2-port Ethernet expansion card.

As previously mentioned, a future entitlement purchase will allow for up to two FCoE ports or two iSCSI ports. The FCoE and iSCSI ports can be used in combination with up to six Ethernet ports in vNIC mode, up to a maximum of eight total virtual ports.
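The port rules above can be captured in a small validation helper. This is a minimal Python sketch under stated assumptions: `VPort` and `validate` are hypothetical names, not part of any IBM, Emulex or BNT management tool; it reads "two FCoE ports or two iSCSI ports" as meaning the two storage types are not mixed; it applies the 100 Mbps to 10 Gbps range to every virtual port; and it does not track which physical port each vNIC lives on.

```python
from dataclasses import dataclass

# Limits quoted in the post; bandwidth units are Mbps.
PHYSICAL_PORTS = 2
VNICS_PER_PORT = 4
MAX_TOTAL_VPORTS = PHYSICAL_PORTS * VNICS_PER_PORT  # eight virtual ports
DEFAULT_VNIC_MBPS = 2_500                           # 2.5 Gbps default
MIN_VNIC_MBPS = 100                                 # 100 Mbps minimum
MAX_VNIC_MBPS = 10_000                              # 10 Gbps maximum
MAX_STORAGE_VPORTS = 2                              # FCoE or iSCSI ports
MAX_ETHERNET_WITH_STORAGE = 6
KINDS = {"ethernet", "fcoe", "iscsi"}


@dataclass
class VPort:
    """A hypothetical model of one virtual port; not an IBM or Emulex API."""
    kind: str                      # "ethernet", "fcoe" or "iscsi"
    mbps: int = DEFAULT_VNIC_MBPS


def validate(vports: list[VPort]) -> list[str]:
    """Return the rule violations for a proposed layout (empty list means OK)."""
    errors = []
    kinds = [p.kind for p in vports]

    for kind in sorted(set(kinds) - KINDS):
        errors.append(f"unknown port type: {kind}")

    if len(vports) > MAX_TOTAL_VPORTS:
        errors.append(f"{len(vports)} virtual ports exceeds {MAX_TOTAL_VPORTS}")

    storage = [k for k in kinds if k in ("fcoe", "iscsi")]
    if len(storage) > MAX_STORAGE_VPORTS:
        errors.append(f"{len(storage)} storage ports exceeds {MAX_STORAGE_VPORTS}")
    if len(set(storage)) > 1:
        # Assumption: "two FCoE ports or two iSCSI ports" means no mixing.
        errors.append("FCoE and iSCSI ports cannot be mixed")

    ethernet = kinds.count("ethernet")
    if storage and ethernet > MAX_ETHERNET_WITH_STORAGE:
        errors.append(
            f"{ethernet} Ethernet ports exceeds {MAX_ETHERNET_WITH_STORAGE} "
            "when storage ports are in use"
        )

    # Assumption: the 100 Mbps to 10 Gbps range applies to every virtual port.
    for i, p in enumerate(vports):
        if not MIN_VNIC_MBPS <= p.mbps <= MAX_VNIC_MBPS:
            errors.append(
                f"port {i}: {p.mbps} Mbps is outside {MIN_VNIC_MBPS}-{MAX_VNIC_MBPS} Mbps"
            )
    return errors
```

For instance, six default Ethernet vNICs plus two FCoE ports passes with an empty list, while swapping one FCoE port for iSCSI reports the mixing error.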

| Mode | IBM Switch Compatibility |
|---|---|
| vNIC | BNT Virtual Fabric Switch |
| pNIC | BNT, IBM Pass-Thru, Cisco Nexus |
| FCoE | BNT or Cisco Nexus |
| iSCSI Acceleration | All IBM 10GbE switches |

I really think the "one card can do all" concept works well for the IBM BladeCenter design, and I think we'll start seeing more and more customers move toward this single-card concept.

Comparison to HP Flex-10

I'll be the first to admit that I'm not a network or storage guy, so I'm not really qualified to compare this offering to HP's Flex-10; however, IBM has created a very clever video that makes some comparisons. Take a few minutes to watch and let me know your thoughts.


I'm not a blade guy, but that IBM video left me annoyed. Right off the bat, IBM's first “point” is that Virtual Fabric supports Ethernet, FCoE, and iSCSI, while HP “only supports Ethernet”. Huh? Since when can't you run iSCSI over ANY Ethernet port? I've run it over Wi-Fi! I gotta believe that's dead wrong.

As for FCoE, didn't HP tell us it's supported?

And Flex-10 allows for real FC support, not just FCoE.

And Flex-10 looks awfully flexible to me.

In short, that video looks like a poorly-executed smear on HP and lacking in facts. What am I missing here?

This video is definitely IBM smearing HP's Flex-10, but it's funny. As of today, Flex-10 does not do FCoE, and its iSCSI capability is not "hardware iSCSI"; however, IBM can't offer either of those capabilities yet either. The FCoE and hardware-assisted iSCSI features will be coming soon (date not announced). Thanks for the comment!

Hardware iSCSI is a red herring. Microsoft's software initiator is faster than any iSCSI HBA in a modern system. And the VMware ESX 4 software iSCSI is solid.

FCoE is also a red herring since no one* uses it yet…

* Yes, I know, someone uses it. But HP will have it by the time it's popular!

So hardware iSCSI is no big deal, but the Emulex adapter supports 1Gb Ethernet. Can HP do that?

The HP Flex-10 adapter connects to either 1 Gb or 10 Gb Ethernet, I believe. I'm not an HP believer, I just read the Virtual Connect for Dummies book on the plane! :-)

That was my poor attempt at humor. Yes, the FlexNIC will do 1Gb or 10Gb, but NOT 10BaseT.

HP will probably do something like this very soon. The real miss, to me, is the Nexus 4000, which only IBM has.

For network guys, it's very important…

You're correct, Steve. The claim that HP doesn't support hardware offload of iSCSI is incorrect: HP's BladeSystem supports hardware iSCSI offload with the QLogic mezz card (QMH4062). The card also supports Virtual Connect.

HP DOES NOT do iSCSI offload on Flex-10. Yes, they have it on their 1Gb QLogic card.

Big question: why is everyone talking about carving up a 10Gb pipe for virtualization? The hypervisors do that for you and give you QoS, isolation, rate limiting…everything you want without the complexity of Flex-10 or the Emulex solution above. Why use two sets of tools and interfaces to carve up the pipe? Keep it simple. Just look at the nightmare of complexity you linked to in VirtualKenneth's blog. Check out Brad Hedlund's blog on this (and you can do it without the Nexus 1000V, too):

http://www.internetworkexpert.org/2010/02/09/hp…

Mark, I didn't say HP did iSCSI offload on Flex-10. I said HP does iSCSI hardware offload using QLogic's QMH4062 which is compatible with Virtual Connect Flex-10 technology.
