Last week, Blade.org invited me to their 3rd Annual Technology Symposium – an online event with speakers from APC, Blade Network Technologies, Emulex, IBM, NetApp, QLogic and Virtensys. Blade.org is a collaborative organization and developer community focused on accelerating the development and adoption of open blade server platforms. This year’s Symposium focused on “the dynamic data center of the future”. While there were many interesting topics (the replay is worth checking out), the one that appealed to me most was “Shared I/O” by Alex Nicolson, VP and CTO of Emulex. Let me explain why.

While many people would (and probably will) argue with me, blade servers are NOT for all workloads. Look at the blade server ecosystem today and the biggest bottleneck you see is the limitation of onboard I/O. Without compromising server slots, the maximum expansion you can achieve on nearly any blade server is 8 I/O ports (6 Ethernet + 2 storage). In addition, blade servers are often limited to 2 expansion cards, so if a customer requires “redundant physical adapters”, the available expansion shrinks even further. Based on these observations, removing the I/O from the server would eliminate this limitation and allow blade servers to be adopted in more environments. This could be accomplished with shared I/O.

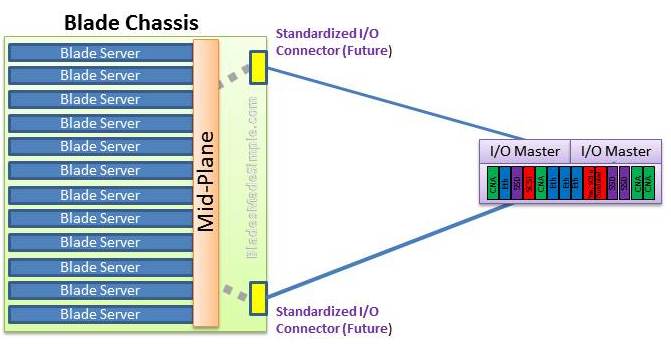

When you stop and think about blade infrastructure design, no matter who the vendor is, it has been the same for the past 9 years. YES, the vendors have come out with better chassis designs that allow for “high-speed” connectivity, but the overall design is still the same: a blade server with CPUs, memory and I/O cards all on one system board. It’s time for blade servers to evolve to a design where I/O is shared. The idea behind Shared I/O is simple: separate the I/O from the server. Instead of having storage adapters inside each blade server, you would have an I/O drawer outside the blade chassis containing the I/O adapters for the blade servers. No more I/O bottlenecks on your blade servers. Your I/O potential is (nearly) unlimited! The advantages to this design include:

- More internal space for blade server design. If the I/O, including the LAN on Motherboard (LOM), were moved off the server, there would be substantial space left for more CPUs, more RAM or even more disks.

- Standardized I/O adapters no matter which blade vendor is used. This is the idea that really excites me. If you could remove the I/O from the blade server, you could have IBM, Dell, HP and even Cisco blades in the same rack using the same I/O adapters. Your investments would be limited to the blade chassis and servers. Not only that, but as blade server architecture changes, you would be able to KEEP the investment you made in your I/O adapters. On the flip side, as I/O adapter speeds increase, you could replace the adapters and keep your servers in place, without having to buy a new adapter for every server.

- Sharing of I/O adapters means FEWER adapters are needed. For this design to be beneficial, the adapters would need to be shareable between servers. This means that 1 storage HBA might provide resources for 6 servers, and as I/O adapter throughput continues to increase, that kind of sharing becomes more desirable. Let’s face it: 10Gb is being discussed (and sold) today, but this time next year 40Gb may be hot, and in 3 years 100Gb may be on the market. Technology will continue to evolve, and if the I/O adapters were separated from the servers, you would be able to share that technology across all of your servers.
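To put rough numbers on the sharing argument, here is a minimal sketch that splits one shared adapter’s nominal link speed evenly across the servers it serves. It assumes the 6-server sharing ratio mentioned above, equal demand from every server, and no protocol overhead; the function name `per_server_share` is purely illustrative.

```python
def per_server_share(link_gbps: float, servers: int) -> float:
    """Nominal bandwidth per server when one adapter's link is split evenly.

    Assumes every server demands bandwidth equally and ignores protocol
    overhead, so real-world throughput would be somewhat lower.
    """
    if servers <= 0:
        raise ValueError("servers must be positive")
    return link_gbps / servers


# Illustrative scenario: one shared adapter serving 6 blade servers.
SERVERS = 6
for speed in (10, 40, 100):
    print(f"{speed}Gb shared by {SERVERS} servers: "
          f"{per_server_share(speed, SERVERS):.2f} Gb each")
```

At 10Gb, each server’s even share is under 2Gb; at 100Gb it is more than 16Gb, which is why faster adapters make sharing more attractive.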

Now, before you start commenting that this is old news and that companies like Xsigo have been offering virtual I/O products for a couple of years, hear me out. The evolution I’m referring to is not a particular vendor providing proprietary options. I imagine a blade ecosystem across all the vendors that allows for a standardized I/O platform, with guidelines for all blade servers to connect to a shared I/O drawer made by any vendor. Yes, this may be an unrealistic Nirvana, but look at USB. All vendors provide USB ports natively on the chassis without any modifications, so why can’t we get to the same point with shared I/O connectivity?

So, what do you think? Am I crazy, or do you think blade server technology will evolve to allow for a separation of I/O? Share your thoughts in the comments below.

Pingback: Tweets that mention Blades Made Simple » Blog Archive » Shared I/O – The Future of Blade Servers? -- Topsy.com

Kevin,

It's a great concept, but a total pipe dream. Part of the advantage of blades to the vendor is the amount of lock-in the chassis creates; nobody is willing to open the I/O architecture, any more than they are willing to open the blade form factor so inter-vendor blade swaps are possible.

Developing a shared I/O architecture would be great, but it wouldn't happen, and if you are going to go that far, why waste time with blades? Using the blade chassis would be a waste in this context; instead, do it on a rack basis. If you could get the vendors to agree on sharing I/O, why not go the extra half step and build standardized racks with integrated power, cooling and I/O that any vendor's blade could plug in to?

Ahem, this smacks of something I “mentioned” to you about 6 months ago as being where I think things will head :) Glad you now agree about the promise this shows!!

I like the vision, Kevin, but knowing the manufacturers like I do, it's not going to happen with the current bunch of blade builders. They want to own the data center, and they quote “good reasons” to keep the blade interconnects proprietary.

It's going to be up to third parties–and market acceptance–to deliver on the vision. If Emulex and QLogic could come up with something to squirt lots of bits into a separate drawer that could then be fanned out to the standard networks, that would be a nice addition to the existing approach.

ATCA, CompactPCI, and other telco standards come close to a shared blade form factor, although I share Joe's cynicism that IBM/HP/Dell/etc. would all adopt a common standard in mainstream x86.

Sun's blade architecture does take one step down Alex's “Shared I/O” path by sending native PCIe from each server blade to a common location where shared I/O is housed, albeit a location that's still inside the enclosure.

Disclaimer: I work for HP.

I completely agree that #ibm #dell #hp and #cisco will most likely never adopt a standardized approach for connecting to a shared I/O infrastructure, however I can always hope! As always, thanks for your support!

Yes, you mentioned virtual I/O via #xsigo; however, my vision is to see standardized I/O connectivity so that any blade vendor could connect to any shared I/O array. I know, it's a pipe dream, but I can always have hope! Thanks for your continued support!

Thanks for the comment. Shared I/O could allow vendors to stack MORE blade servers in a smaller footprint, offering greater server density with less power and space. But, as you say, it is a pipe dream. Maybe one day… Thanks for reading, and for your comments. Your support of BladesMadeSimple.com is greatly appreciated!

Great point – telco standards are the vision of where #bladeservers could end up, if everyone could get along. Yes, Sun's blade server design separates the I/O; however, it's simply a 1U server on its side, so the idea of reducing space, power and cooling goes out the window. They have the right idea though. Thanks for your comments – always good to hear from you!

Pingback: Tweets that mention https://bladesmadesimple.com/2010/06/shared-io-the-future-of-blade-servers/#comment-57497280?utm_source=pingback -- Topsy.com

BTW, Egenera's BladeFrame servers already do this :) But of course, I doubt that this model will be adopted cross-industry….

Perhaps nearer to your vision is Egenera's other approach. We virtualize I/O (NICs, HBAs) using software…. then place the blades on a converged Fabric. We can manipulate that Fabric however you like… and are doing so across multiple HW vendors.

~ Ken (Egenera Inc)

Kevin, your concept is a great one, and certainly not a pipe dream. The benefits of converged infrastructure and virtualization have been proven for servers and networks in the datacenter – however they have not yet been realized for datacenter IO. One of the major reasons for this is that the solutions have been proprietary and based on niche or immature technologies.

Aprius, in contrast, has taken a standards-based approach to developing shared IO for servers. For IO resources, the Aprius system uses off-the-shelf PCIe cards from any vendor, and connected servers run the vendor driver natively. For connectivity, the system utilizes the ubiquitous Ethernet network that is already present in any datacenter architecture. This combination provides architects with tools to preserve the universal PCI software model for servers, as well as the cost and management benefits that come from use of the converged network.

You have highlighted one of the main benefits of a system like this- by removing components (power) and airflow restrictions from the server, the unit of computing can be reduced to the essentials (CPU, Memory, Chipset), while external IO can be allocated and used when and where it's needed, on demand. This enables a very dense, uniform, and efficient compute infrastructure for highly scalable environments.

This concept applies to both blade and rack infrastructures, and all the major server and IO vendors are taking a look at the technology. This could be just what you (and the industry) are looking for.

Kevin, nice article on servers. Thanks for sharing.