UPDATED 11-16-2010

IBM recently announced a new addition to its BladeCenter family: the IBM BladeCenter GPU Expansion blade. This new offering lets a single HS22 host up to four NVIDIA Tesla M2070 or Tesla M2070Q GPUs, each with 448 processing cores. Doing the math, that makes 4,928 processing cores possible in a single 9U IBM BladeCenter H chassis (11 GPUs x 448 cores), or 19,712 processing cores PER RACK with four chassis. With such astonishing numbers, let’s take a deeper look at the IBM BladeCenter GPU Expansion blade.

What’s the Big Deal about GPUs?

Before you can appreciate the “wow” factor of this new GPU Expansion blade, you need a basic understanding of what GPUs (Graphics Processing Units) do. GPUs are designed to perform general-purpose graphical, scientific, and engineering computing, and they allow compute-intensive tasks to be offloaded from the CPU. They are commonly used in fields like atmospheric modeling, data analytics, finance, fluid dynamics, life sciences, and, of course, large-scale graphics rendering. These environments run applications that use large amounts of data that can be broken into smaller chunks and processed at the same time, or in parallel. This is where GPUs come in: they are designed to run parallel operations. The NVIDIA GPUs used on the IBM BladeCenter GPU Expansion blade have 448 cores providing a peak double-precision capacity of 515 Gigaflops (billions of floating point operations per second). In this offering, the CPU of the IBM HS22 blade server and the GPU on the expansion blade work together: the main sequential part of the application runs on the CPU, and the computationally intensive part runs on the GPU.

Details on the IBM BladeCenter GPU Expansion Blade

IBM is offering two flavors of the GPU Expansion blade: one with an NVIDIA Tesla M2070 GPU (IBM part # 46M6740) and one with an NVIDIA Tesla M2070Q (IBM part # 68Y7466). The difference between the two is that the M2070Q adds an NVIDIA Quadro advanced visualization engine in the same GPU (and it probably costs more). For those of you who are familiar with GPUs and care about the speeds and feeds, here’s what the NVIDIA M2070 offers:

- NVIDIA Fermi GPU engine

- 448 processor cores

- 1.15 GHz processor clock speed (1.566 GHz memory clock)

- 6 GB GDDR5 (graphics DDR) memory

- PCIe x16 host interface

- 225W (TDP) power consumption

- Double Precision floating point performance (peak): 515 Gflops

- Single Precision floating point performance (peak): 1.03 Tflops
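The two peak figures in this list can be reproduced from the core count with a little arithmetic. The sketch below is only a back-of-envelope check, and it leans on assumptions that are not in IBM's material: a 1.15 GHz processor clock (NVIDIA's published figure for the M2070), one fused multiply-add (counted as 2 floating point operations) per core per cycle, and double precision running at half the single-precision rate.

```python
# Back-of-envelope peak throughput for one Tesla M2070.
CORES = 448                    # from the spec list above
CORE_CLOCK_GHZ = 1.15          # assumption: NVIDIA's published processor clock
FLOPS_PER_CORE_PER_CYCLE = 2   # assumption: one fused multiply-add = 2 flops
DP_TO_SP_RATIO = 0.5           # assumption: double precision at half the SP rate


def peak_gflops(cores, clock_ghz, flops_per_cycle):
    """Theoretical peak in Gflops: cores x clock (GHz) x flops per cycle."""
    return cores * clock_ghz * flops_per_cycle


single_precision = peak_gflops(CORES, CORE_CLOCK_GHZ, FLOPS_PER_CORE_PER_CYCLE)
double_precision = single_precision * DP_TO_SP_RATIO

print(f"Single precision peak: {single_precision:.0f} Gflops")
print(f"Double precision peak: {double_precision:.0f} Gflops")
```

Under those assumptions the results land on roughly 1.03 Tflops single precision and 515 Gflops double precision, in line with the list. These are theoretical peaks, not what a real application will sustain.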

The IBM BladeCenter GPU Expansion blade attaches to the HS22 blade server to form a “double-wide” (60mm) server. The cool thing is that you can stack up to 4 GPU expansion blades on a single HS22. In this configuration, the topmost expansion unit offers a single CFF-h daughter card (mezzanine) slot for additional I/O usage. An HS22 with 4 GPU Expansion blades takes up 5 of the IBM BladeCenter’s 14 server bays. Doing the math, that means a chassis could hold 2 x HS22 (each with 4 x GPU Expansion Units) and 1 x HS22 (with 3 x GPU Expansion Units), filling all 14 bays. As mentioned above, each GPU Expansion unit provides 448 cores, so a single IBM BladeCenter H could host 4,928 cores (11 expansion units x 448 cores).
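The bay arithmetic is easy to check with a few lines of Python. This is only a sketch: `pack_chassis` is a made-up helper that greedily gives each HS22 as many expansion units as will fit, and the figure of four chassis per rack is an assumption inferred from the per-rack total quoted at the top of this post.

```python
CHASSIS_BAYS = 14             # server bays in a BladeCenter H
MAX_EXPANSIONS_PER_HS22 = 4   # GPU expansion blades stackable on one HS22
CORES_PER_GPU = 448           # one Tesla M2070 per expansion blade
CHASSIS_PER_RACK = 4          # assumption: four 9U chassis per rack


def pack_chassis(bays=CHASSIS_BAYS, max_exp=MAX_EXPANSIONS_PER_HS22):
    """Greedy fill: each HS22 uses one bay plus one bay per expansion unit.

    Returns a list with the number of expansion units on each HS22.
    """
    layout = []
    while bays >= 2:  # need room for a host blade and at least one GPU
        expansions = min(max_exp, bays - 1)
        layout.append(expansions)
        bays -= expansions + 1
    return layout


layout = pack_chassis()
gpus_per_chassis = sum(layout)
cores_per_chassis = gpus_per_chassis * CORES_PER_GPU
cores_per_rack = cores_per_chassis * CHASSIS_PER_RACK

print("Expansion units per HS22:", layout)
print("GPUs per chassis:", gpus_per_chassis)
print("Cores per chassis:", cores_per_chassis)
print("Cores per rack:", cores_per_rack)
```

The greedy fill uses the fewest host blades, which is what maximizes the GPU count here; a real deployment might well trade some GPUs for more CPUs.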

Compatibility

According to IBM, the IBM BladeCenter GPU Expansion Unit only works with the HS22 blade server. It is, however, compatible with the IBM BladeCenter H and IBM BladeCenter HT models as well as the IBM BladeCenter E chassis. A couple of caveats when working with the IBM BladeCenter E: the expansion unit is supported only in BladeCenter E models 8677-3xx and -4xx, AND the chassis must have the 2,320 watt AC power supplies.

Supported operating systems:

- Windows Server 2008 HPC Edition (64-bit)

- Windows HPC Server 2008 (64-bit)

- Windows 2008 R2 (64-bit)

- Red Hat Enterprise Linux 5 (64-bit)

- SUSE Linux Enterprise Server Edition 11 for x86_64 (64-bit)

For more information on the IBM BladeCenter GPU Expansion blade, please visit IBM’s website at http://www-03.ibm.com/systems/bladecenter/hardware/expansion/gpu.html.

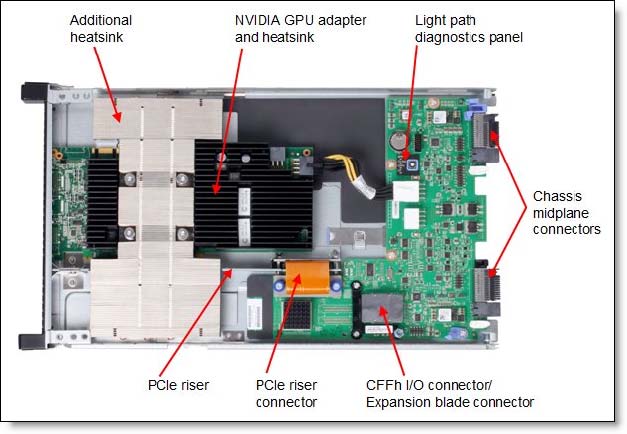

UPDATE: Image and content referenced from IBM’s Redbook titled “IBM BladeCenter GPU Expansion Blade – IBM BladeCenter at-a-glance guide” located at http://www.redbooks.ibm.com/Redbooks.nsf/RedbookAbstracts/tips0798.html?Open.

Thanks for stopping by!

Can you imagine playing games on this thing? :D I WANT ONE!

Wonder if Dell can match this with dual Tesla cards in their M610x blade server? Not sure if two Tesla cards are possible yet. I’m quite sure it was at least going to be possible. That would be a total of 7,168 GPU processing cores in a 10U M1000e chassis.

Another option for Dell would be to put 1-2 HIC-cards (Host Interface Controller) into the M610x and connect each blade to 2-8 Tesla cards in the C410x GPGPU chassis.

If possible, a maxed-out configuration would be a 10U M1000e with eight M610x blades, each equipped with two HIC-cards for eight Tesla cards per M610x. That would require four 3U C410x chassis with a total of 64 Tesla cards. That would be a total of 22U and give access to 28,672 GPU processing cores.

The Dell M610x only has 2 PCIe slots side-by-side and I believe that the Tesla GPUs require a double-wide slot and greater than 200W. The M610x could only support one GPU per server.

Yes, right now there only seem to be dual-slot Tesla graphics cards. The M610x supports two 250W cards or one 300W card according to Dell specs. I might be wrong, but I do remember the promise of single-slot Tesla cards being released. Not sure if that was the version with 448 GPU processing cores, though. And regarding the solution with the C410x, I’m not sure that the M610x can actually use two HIC-cards for a total of eight Teslas (4+4 from the same C410x or 4+4 from two different C410x chassis). It might be a limitation that only allows you to use one HIC-card per server. I’m hoping Chris Christian from Dell (thanks @KongY_Dell) can come here and shed some light on this.

This idea is really interesting, but I am concerned about the practicality of it. There is the cost of the card, then there is the cost of the PCI Express I/O Unit. If we use the 43W4391 as a reference point (http://www-304.ibm.com/shop/americas/webapp/wcs/stores/servlet/default/ProductDisplay?catalogId=-840&storeId=1&langId=-1&dualCurrId=73&categoryId=4611686018425093834&productId=4611686018425636129) at $789.00 retail US, then adding 4 cards would add about $2800 without the cost of the Teslas. I find this availability fantastic, but wouldn’t most people look for a better non-rack system that could accommodate 2 Teslas and come out way ahead in the $$ factor?

Andreas (I work for Dell) - there are no single-wide cards YET, but there will be single-wide options in the near future. They won’t have all the performance of the double-wide cards, but I think the performance ratios will be very good. I think this is one of the major weaknesses of IBM’s offering, as they either can’t support the single-wide cards or have to do major modifications to support them. Also note there is no ATI version either.

We are not able to support the HIC cards yet either, but are working on that. Adding 3 or 4 of these GPU expansion blades doesn’t make a lot of sense, I think, due to the cost and the poor leverage of the chassis infrastructure (you divide the high cost of the chassis over very few blades). The C410x is clearly the superior solution where customers need higher GPU to CPU ratios.

Mike

Mike, thanks for clearing up my guesstimates on the current and future support for single wide Tesla cards and HIC cards on the M610x.

The IBM BladeCenter GPU Expansion Blade comes with the NVIDIA card, so there is no “extra” cost for the card. Thanks for your comment.

The GPU expansion blade is not the one you linked to; that is their “standard” PCIe expansion blade. They have a special one with the GPU integrated, and it doesn’t have a price yet on IBM’s site:

http://www-03.ibm.com/systems/bladecenter/hardware/expansion/gpu.html
