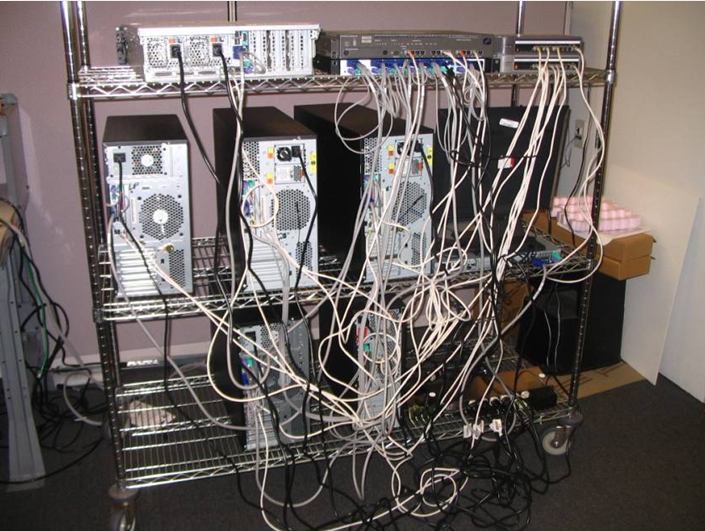

Over the past couple of weeks, I’ve been researching the “ideal” configuration for a home VMware vSphere lab. Ideally, it would be small enough to run a few virtual machines and demonstrate high availability features like vMotion, DRS, HA or even FT. The problem I’ve run into is that I haven’t found a really good site with a detailed list of parts to buy. Some people recommend rack servers, others recommend tower servers, and all of the designs include external switching and storage connectivity. As you can imagine, this leads to a mess.

Sure, it’s probably okay to have a pile of cables, switches and servers generating noise and heat in your home office, but what if you didn’t have to? One of the big value propositions of blade servers is the ability to reduce cabling, management and power complexity, so what if there were a blade infrastructure that was cost-efficient for a home lab and robust enough to serve your virtualization needs? I’m working on finding a blade solution that will solve your lab needs so that I can write about it, but this is where I need your help. I need to know what your ideal lab environment would look like. Take a few minutes and help me out by answering the following questions (either via comments below, or email me at kevin AT bladesmadesimple.com):

- What’s the MINIMUM number of servers you would need for your lab?

- What’s the MINIMUM memory you’d want for your lab?

- What’s the MINIMUM storage you’d need for your lab?

- What’s the MAXIMUM price you’d pay for a bundled blade solution (servers/storage/networking)?

Thanks for taking the time to answer. Feel free to comment about what your needs would be for a Citrix or Microsoft lab as well (no bias here).

Have you looked at Simon Gallagher’s posting on the vTardis?

http://vinf.net/vtardis/

Or Christian Mohn’s lab postings?

http://vninja.net/virtualization/vmware-vsphere-lab-virtual-edition-part-1/

Simon Seagrave also has a bit where people have described their homelab setups:

http://www.techhead.co.uk/tell-us-about-your-server-lab

I agree about the vTardis posts; you can even do it on a single laptop. Be advised that you _need_ an SSD, otherwise the performance is sluggish at best.

Thanks for the links on the #vmware lab. They are good ones that I’ll add to my Helpful Links page soon. I’d really like to find out what the max price someone would pay for a complete solution, as well as what the minimum configs are. Can you give me your thoughts on this? Thanks for reading!

Thanks for the #VMware home lab feedback as well. Is there a max price you’d pay for a “complete” home lab offering? I appreciate you reading!

I guess something between $1500 and $2000 including the SSD, but I could be wrong; prices here in Chile are very different from those in the US or EU.

Hi Kevin

This should be interesting. I have been struggling with this topic myself and have gained a lot of experience (from poor purchases, of course). I think the biggest factor that will influence a home lab strategy is whether you go with a single, heavily spec’d-out host or multiple hosts.

Here’s why:

– with a single host, you can get away without shared storage and external network gear providing VLAN routing. You can use Vyatta for your inter-VLAN routing needs and then virtualize ESX/i and run nested x86 VMs. Plus, with a single host, you can run any number of virtual storage appliances (Uber Celerra, P4000 VSA, OpenFiler) and still use HA/DRS on those virtualized ESX/i clusters. The downside is no FT (hacks are available, but they didn’t work very well for me).

– with multiple hosts, I think you can save some money on the host side. To get a single host with a lot of RAM, you are probably going to pick a low-end Xeon and need registered ECC RAM for 24+ GB. With multiple hosts, you can get away with 8-12 GB of unbuffered RAM, which is a lot cheaper, and non-Xeon CPUs are way cheaper and faster still. The downside is that you need some form of external shared storage, and network gear gets more expensive. Yes, there are home routers that can run DD-WRT on the cheap, but I like hardware switching/VLAN routing, and that isn’t cheap if you want good performance.

It will be interesting to see your hardware selection. FWIW, check out the Cisco SG300-10: for <$300 you get up to 32 routed Layer 3 VLANs in hardware (with TCAMs! yay) across 10 gigabit ports!

Thanks for putting a lot of thought into my question about #vmware home labs. The information you’ve provided is definitely helpful. Just curious – what is the max you’d pay for a packaged home lab offering? Thanks for reading!

Tough question to answer, Kevin; it depends on your audience. I’m pursuing a VCDX level of certification and have invested about $4K into my lab over the last 12 months, usually in $400-600 spurts. I definitely couldn’t have afforded it all up front. I would think the package would have to start around $1500 to reach a wider audience, with upgrade packages in $500 increments.

What do you think about my home lab? ;) It’s blade based and has SAN storage. :)

http://blog.tschokko.de/wp-content/uploads/2009/07/homelab1.jpg

Kind regards

Tschokko

That’s not a home lab! That’s a home datacenter!

Okay, you’re absolutely right… it’s a little datacenter. But what do you think about an HP C3000 chassis? Insert three BL460c G1 servers, one of them connected to an SB40c storage blade. Place six SFF disks into the storage blade and install OpenFiler, FreeNAS, etc. onto the dedicated storage blade server. Put a GbE2c interconnect in the back and you have a compact and affordable blade-based virtualization environment with iSCSI. You can enhance the system by adding a SAN switch interconnect module: extend every blade server with an Emulex mezzanine card and install OpenSolaris/Solaris Express onto the storage blade server. Enable COMSTAR and provision your storage through Fibre Channel. :) That’s it!

Lovin’ it, a little outside my price range though :)

Blades sound cool, but who can wire and dedicate 16+ amps for a fully loaded blade chassis in a home environment? Use a half- or third-populated chassis? That does not make sense; just go for towers.

Of course, the same electrical concern applies to multiple rack/tower installations, but that’s too much for homes anyway.
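The 16+ amp concern comes down to current = power ÷ voltage (I = P / V). Here is a minimal Python sketch, assuming a hypothetical 3,000 W chassis draw on a 120 V, 15 A household circuit. The 80% continuous-load factor is a common North American rule of thumb, not a universal rule, and the calculation ignores power factor and PSU efficiency. Plug in your own chassis’s measured or nameplate draw.

```python
def amps_drawn(watts: float, volts: float) -> float:
    """Approximate current draw using I = P / V (ignores power factor)."""
    if volts <= 0:
        raise ValueError("volts must be positive")
    return watts / volts


def fits_on_circuit(watts: float, volts: float, breaker_amps: float,
                    continuous_fraction: float = 0.8) -> bool:
    """Return True if the load stays within the usable share of a breaker.

    continuous_fraction=0.8 is an assumed rule of thumb for continuous loads;
    check your local electrical code before relying on it.
    """
    return amps_drawn(watts, volts) <= breaker_amps * continuous_fraction


if __name__ == "__main__":
    # Hypothetical figures: a 3,000 W blade chassis on a 120 V / 15 A circuit.
    print(round(amps_drawn(3000, 120), 1))   # 25.0
    print(fits_on_circuit(3000, 120, 15))    # False
    # The same load at 240 V draws half the current.
    print(round(amps_drawn(3000, 240), 1))   # 12.5
```

Even at 240 V, a hypothetical 3,000 W chassis still wants a circuit to itself, which is the commenter’s point about towers.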

- 2x DL380 G6 (96 GB each)
- ~6 TB SATA, ~1 TB SAS and 256 GB SSD behind Nexenta
- APC Smart-UPS 1500
- Cisco 3750G
- Cisco 2921 ISR
- 2x Cisco 1141 APs

Everything fits into a half-height rack. Full disclosure: I work from home 85% of the time, so this isn’t my “home lab”; it’s my only lab.

IMO, you either care about your vocation enough to invest heavily in yourself, or you should find a new vocation.

I am doing the very same thing, well sort of.

I have a C7000 enclosure with many blades, G1 through G7.

I have dedicated one G1 blade with a partner SB40c holding 6x 300 GB 10k SAS disks (RAID 10) and installed OpenFiler on it. My hope is to use this as shared iSCSI storage for Xen servers in a pool in the same enclosure; however, I am getting poor I/O (30-40Mb/s).

Any tips?

For a home lab, price is very important. I would love to have a blade enclosure with low power and noise that doesn’t need dedicated AC.

I have built up the following collection over the past couple of years:

- Storage: custom-built 20-bay server running StarWind iSCSI on Windows Storage Server 2008 with file deduplication
- ESX: 1x Dell PE 2900 with 48 GB RAM and SAS/SATA/SSD disks, plus 2x Dell PE 110 with 16 GB RAM running iSCSI
- XenServer: 2x Dell PE 110 with 16 GB RAM running iSCSI
- Hyper-V: 2x Dell PE 110 with 16 GB RAM running iSCSI
- Firewall: ASA 5505
- Switches: Dell PC 6624, Cisco 2960

The Dell PE T110 is a great platform. It can run any hypervisor, and I can even add a GPU to it to test RemoteFX. Sometimes you can get a deal for around $350 and buy memory from Newegg, so you can have an X3430 with 16 GB RAM for $500.

I am running XenDesktop, View and vWorkspace on them, and it’s lots of fun. The main issues are power and noise at home. My wife does not like how much we are paying for power :)
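A rough annual power bill estimate is average draw in watts × hours ÷ 1000, which gives kWh, multiplied by your electricity rate. Below is a minimal Python sketch. The 570 W average draw and $0.12/kWh price are hypothetical placeholders, not measurements from this lab. Real bills also depend on tiered or time-of-use rates, which this flat-rate calculation ignores.

```python
HOURS_PER_YEAR = 24 * 365  # ignores leap years


def annual_energy_kwh(avg_watts: float, hours: float = HOURS_PER_YEAR) -> float:
    """Energy used by a constant average load over `hours`, in kilowatt-hours."""
    return avg_watts * hours / 1000


def annual_cost(avg_watts: float, price_per_kwh: float,
                hours: float = HOURS_PER_YEAR) -> float:
    """Cost of running a load for `hours` at a flat (assumed) electricity price."""
    return annual_energy_kwh(avg_watts, hours) * price_per_kwh


if __name__ == "__main__":
    # Hypothetical lab: 570 W average draw, running 24x7, at $0.12 per kWh.
    lab_watts = 570
    print(round(annual_energy_kwh(lab_watts)))      # 4993
    print(round(annual_cost(lab_watts, 0.12), 2))   # 599.18
```

Measuring the real average draw with an inline power meter gives a far better input than summing nameplate ratings, which are usually well above typical idle draw.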

I don’t have an SB40c storage blade, but I transferred data between two servers within the C7000 chassis at over 100 MByte/s.

Can you describe your RAID config, what kind of file system you run on OpenFiler, which interconnect modules you installed and what your network configuration is? All of these components can impact your iSCSI performance.

Kind regards.

Tschokko
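The two throughput figures in this thread use different units: 30-40Mb/s in the question and 100 MByte/s in the reply. Since a byte is eight bits, converting both to MB/s makes them comparable with the raw line rate of a single Gigabit Ethernet link (1000 Mb/s, or 125 MB/s). The Python sketch below is only an illustration. It ignores Ethernet, TCP/IP and iSCSI protocol overhead, so practical ceilings are somewhat lower. It also cannot tell us whether the original poster meant megabits or megabytes, so it shows both readings.

```python
BITS_PER_BYTE = 8


def mbit_to_mbyte(megabits_per_s: float) -> float:
    """Convert megabits per second to megabytes per second."""
    return megabits_per_s / BITS_PER_BYTE


def link_utilization(mbyte_per_s: float, link_mbit_per_s: float = 1000) -> float:
    """Fraction of a link's raw line rate used by a throughput given in MB/s."""
    return mbyte_per_s / mbit_to_mbyte(link_mbit_per_s)


if __name__ == "__main__":
    print(mbit_to_mbyte(1000))                              # 125.0 (raw GbE rate, MB/s)
    print(round(link_utilization(100), 2))                  # 0.8  (100 MB/s on GbE)
    print(round(link_utilization(40), 2))                   # 0.32 (if "40" meant MB/s)
    print(round(link_utilization(mbit_to_mbyte(40)), 2))    # 0.04 (if it meant Mb/s)
```

Under either reading, the storage blade is well short of what a single gigabit path can carry, which is why the RAID, file system and interconnect questions above are the right place to start.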

I’m definitely a fan of blade servers. Currently, I have an HP ML370 G5 with two quad-core processors and 16 GB of RAM, and an HP ML110 G4 with a single dual-core processor and 2.5 GB of RAM. I just use the local storage with the HP VSA for iSCSI.

I have now added an HP C3000 blade chassis to my lab, but unfortunately it’s not very useful yet. My plan is to add a few blades and Virtual Connect Flex-10 modules, and then add either an MSA1000 or just jump to an EVA4400.

It may take a while; these items are not cheap.

What does your power bill look like?
