Dell appears to be first to the market today with complete details on its Nehalem EX blade server, the PowerEdge M910. Based on the Nehalem EX technology (aka the Intel Xeon 7500 series), the server offers quite a lot of horsepower in a small, full-height blade server footprint.

Some details about the server:

- uses Intel Xeon 7500 or 6500 CPUs

- has support for up to 512GB using 32 x 16GB DIMMs

- comes standard with two embedded Broadcom NetXtreme II Dual Port 5709S Gigabit Ethernet NICs with failover and load balancing

- has two 2.5″ Hot-Swappable SAS/Solid State Drives

- has 4 available I/O mezzanine card slots

- comes with a Matrox G200eW w/ 8MB memory standard

- can function on 2 CPUs with access to all 32 DIMM slots

Dell (finally) Offers Some Innovation

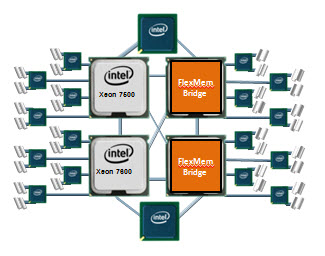

I commented a few weeks ago that Dell and innovate were rarely used in the same sentence; however, with today’s announcement, I’ll have to retract that statement. Before I elaborate on what I’m referring to, let me do some quick education. The design of the Nehalem architecture allows for each processor (CPU) to have access to a dedicated bank of memory along with its own memory controller. The only downside to this is that if a CPU is not installed, the attached memory banks are not usable. THIS is where Dell is offering some innovation. Today Dell announced the “FlexMem Bridge” technology. This technology is simple in concept: it allows the memory of a CPU socket that is not populated to still be used. In essence, Dell is using technology that bridges the memory banks across unpopulated CPU sockets to the rest of the server’s populated CPUs.

With this technology, a user could start off with only 2 CPUs and still have access to 32 memory DIMMs. Then, over time, if more CPUs are needed, they simply remove the FlexMem Bridge adapters from the CPU sockets and replace them with CPUs. They would then have a 4 CPU x 32 DIMM blade server.
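To make the arithmetic concrete, here is a rough Python sketch of how the usable DIMM count changes with and without the bridges. This is only a toy model, not anything from Dell: the function names are made up for illustration, and it assumes the 32 DIMM slots are split evenly across the 4 sockets (8 per socket) and that every slot holds a 16GB DIMM.

```python
# Toy model of DIMM accessibility on a 4-socket, 32-DIMM blade.
# Assumptions (not from Dell documentation): slots are split evenly
# across sockets, and all names below are hypothetical.
TOTAL_SOCKETS = 4
TOTAL_DIMM_SLOTS = 32
MAX_DIMM_GB = 16
DIMMS_PER_SOCKET = TOTAL_DIMM_SLOTS // TOTAL_SOCKETS  # 8, assumed even split


def usable_dimm_slots(cpus_installed: int, bridges_installed: int = 0) -> int:
    """Return how many DIMM slots are reachable.

    A socket's memory bank counts as usable if the socket holds either a
    CPU or a FlexMem Bridge that hands the bank to a populated socket.
    """
    if cpus_installed < 1:
        raise ValueError("at least one CPU is required")
    if bridges_installed < 0:
        raise ValueError("bridge count cannot be negative")
    if cpus_installed + bridges_installed > TOTAL_SOCKETS:
        raise ValueError("more CPUs and bridges than sockets")
    return (cpus_installed + bridges_installed) * DIMMS_PER_SOCKET


def max_memory_gb(cpus_installed: int, bridges_installed: int = 0) -> int:
    """Maximum memory in GB, assuming every usable slot holds a 16GB DIMM."""
    return usable_dimm_slots(cpus_installed, bridges_installed) * MAX_DIMM_GB


if __name__ == "__main__":
    print(usable_dimm_slots(2))      # 2 CPUs, 2 empty sockets
    print(usable_dimm_slots(2, 2))   # 2 CPUs plus 2 FlexMem Bridges
    print(max_memory_gb(2, 2))       # capacity of the bridged 2-CPU setup
    print(max_memory_gb(4))          # capacity of the full 4-CPU setup
```

Under those assumptions, a 2 CPU blade without bridges would only reach half of the DIMM slots, while the same blade with two bridges reaches all 32, the same as a fully populated 4 CPU configuration.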

Congrats to Dell. Very cool idea. The Dell PowerEdge M910 is available to order today from the Dell.com website.

Let me know what you guys think.

Kevin,

thanks for the great summary.

one quick correction is that M910 has 4 IO mezz card slots, not 3.

It is also the only blade i know of that has the capability to support dual (redundant) SD cards to provide failover for embedded hypervisors.

Finally, the FlexMem Bridge also connects the 2nd QPI link between the 2 processors, so you get full bandwidth between procs in a 2 socket configuration.

Mike

IBM also released the HX5 blade today, as well as x5 versions of their rack mount servers. These use the 7500 series processors.

Thanks for the comment – just posted technical details on the #ibm HX5. Appreciate you reading!

new #dell M910: Thanks, Mike – I've updated the page to reflect the #dell M910 has 4 I/O Mezzanine slots. The dual SD cards are interesting, but I didn't want to add it to the summary (for now.) Appreciate the continued support!

thanks kevin,

to me the dual SD/failsafe hypervisor is another example of innovation that solves real customer concerns/painpoints. you'd be amazed how many customers we talked to when we first put the SD cards in for emb. hypervisors were concerned about this as a single point of failure, so we addressed it uniquely. another example of innovating to solve real customer problems/concerns. here is a paper on it if you are interested

http://www.dell.com/downloads/global/products/p…

Got block diagram on this?

The 7500 only has 4 memory channels period.

The only way I can see this working is if each CPU socket gets access to only half of the SMI Channels.

In a 2P config each proc gets 4 channels, 2 local and 2 off the remote socket.

But in a 4P config this would mean each processor now only has 1/2 the total bandwidth of an Intel reference design. So I get twice the CPU power, the same memory as before but now my memory bandwidth is cut in half? That basically means you cripple the memory performance when you “upgrade” to 4P.

Based on your overhead shot, it also looks like the physical connections would be uneven in a 2P design. Though it's not a huge deal, the “local” DIMM slots are a certain trace distance from the CPU, but the “remote” DIMM slots are about double that, since you have to go through the other socket first. Wouldn't this lead to slight differences in how long it takes the data to flow from DIMM to CPU (electrically, which is why this probably matters less)?
