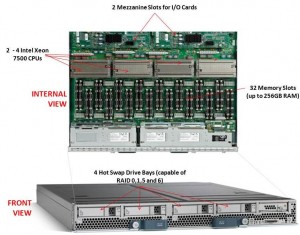

Cisco recently announced their first blade offering with the Intel Xeon 7500 processor, known as the “Cisco UCS B440-M1 High-Performance Blade Server.” This new blade is a full-width blade that offers 2 – 4 Xeon 7500 processors and 32 memory slots, for up to 256GB RAM, as well as 4 hot-swap drive bays. Since the server is a full-width blade, it will have the capability to handle 2 dual-port mezzanine cards for up to 40 Gbps I/O per blade.

Each Cisco UCS 5108 Blade Server Chassis can house up to four B440 M1 servers (maximum 160 per Unified Computing System).

How Does It Compare to the Competition?

Since I like to talk about all of the major blade server vendors, I thought I’d take a look at how the new Cisco B440 M1 compares to IBM and Dell. (HP has not yet announced their Intel Xeon 7500 offering.)

Processor Offering

Both Cisco and Dell offer models with 2 – 4 Xeon 7500 CPUs as standard. The vendors differ in the processor speeds they offer: Dell has 9 processor speed offerings, Cisco hasn’t released its speeds, and IBM’s BladeCenter HX5 blade server will have 5 processor speed offerings initially. Of the 3 vendors’ blades, however, IBM’s is the only one designed to scale from 2 CPUs to 4 CPUs by connecting 2 x HX5 blade servers. Along with this comes IBM’s “FlexNode” technology, which lets users split the 4 processor blade system back into 2 x 2 processor systems at specific points during the day. Although not announced, and purely my speculation, IBM’s design also leads to a possible future capability of connecting 4 x 2 processor HX5’s for an 8-way design. Since each of the vendors offers up to 4 x Xeon 7500’s, I’m going to give the advantage in this category to IBM for that scalability. WINNER: IBM

Memory Capacity

Both IBM and Cisco are offering 32 DIMM slots with their blade solutions; however, they are not certifying the use of 16GB DIMMs, only 4GB and 8GB DIMMs, so their offerings only scale to 256GB of RAM. Dell claims to offer 512GB of memory capacity on the PowerEdge 11G M910 blade server; however, that is using 16GB DIMMs. Realistically, I think the M910 would only be used with 8GB DIMMs, so Dell’s design would equal IBM’s and Cisco’s. I’m not sure who has the money to buy 16GB DIMMs, but if they do – WINNER: Dell (or a TIE)
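The capacity figures above are just DIMM slots multiplied by DIMM size. Here is a minimal Python sketch of that arithmetic; the function name `max_memory_gb` is my own, and the only inputs are the 32 slots and the 4GB, 8GB and 16GB DIMM sizes quoted above.

```python
def max_memory_gb(dimm_slots: int, dimm_size_gb: int) -> int:
    """Maximum RAM when every DIMM slot holds a DIMM of the same size."""
    return dimm_slots * dimm_size_gb


DIMM_SLOTS = 32  # slot count quoted in the article

# Capacity for each DIMM size mentioned in the article.
capacities = {size: max_memory_gb(DIMM_SLOTS, size) for size in (4, 8, 16)}

for size, total in capacities.items():
    print(f"{DIMM_SLOTS} x {size}GB DIMMs = {total}GB")
```

With 8GB DIMMs all three blades land on 256GB; only the 16GB case produces Dell’s 512GB figure.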

Server Density

As previously mentioned, Cisco’s B440-M1 blade server is a “full-width” blade, so 4 will fit into a 6U high UCS 5100 chassis. Theoretically, you could fit 7 x UCS 5100 blade chassis into a rack, which would equal a total of 28 x B440-M1’s per 42U rack.

Overall, Cisco’s new offering is a nice addition to their existing blade portfolio. While IBM has some interesting innovation in CPU scalability and Dell appears to have the overall advantage in server density, Cisco leads on the management front.

Dell’s PowerEdge 11G M910 blade server is a “full-height” blade, so 8 will fit into a 10U high M1000e chassis. This means that 4 x M1000e chassis would fit into a 42U rack, so 32 x Dell PowerEdge M910 blade servers should fit into a 42U rack.

IBM’s BladeCenter HX5 blade server is a single slot blade server; however, as a 4 processor blade it takes up 2 server slots. The BladeCenter H has 14 server slots, so the IBM solution can hold 7 x 4 processor HX5 blade servers per chassis. Since the chassis is 9U high, you can only fit 4 into a 42U rack, so you would be able to fit a total of 28 IBM HX5 (4 processor) servers into a 42U rack.

WINNER: Dell
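For anyone who wants to check the rack math, here is a small Python sketch of the calculation used above: whole chassis per 42U rack, times 4 processor servers per chassis. The helper name `servers_per_rack` is my own, and it assumes the entire rack is available for chassis, ignoring power, cooling and top-of-rack switches.

```python
RACK_UNITS = 42  # standard rack height used in the article


def servers_per_rack(chassis_height_u: int, servers_per_chassis: int,
                     rack_units: int = RACK_UNITS) -> int:
    """Whole chassis that fit in the rack, times servers per chassis."""
    chassis_per_rack = rack_units // chassis_height_u
    return chassis_per_rack * servers_per_chassis


# (chassis height in U, 4 processor servers per chassis), from the article.
vendors = {
    "Cisco B440-M1": (6, 4),
    "Dell M910": (10, 8),
    "IBM HX5 (4 processor)": (9, 14 // 2),  # 14 slots, 2 slots per server
}

density = {name: servers_per_rack(height, count)
           for name, (height, count) in vendors.items()}

for name, total in density.items():
    print(f"{name}: {total} servers per {RACK_UNITS}U rack")
```

Note that floor division is what hurts IBM and Dell here: a 9U or 10U chassis leaves 6U or 2U of the rack unused, while 7 x 6U fills all 42U.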

Management

The final category I’ll look at is management. Both Dell and IBM have management controllers built into their chassis, so managing a lot of chassis, as in the maximum server / rack scenarios described above, could add some additional burden. Cisco’s design, however, allows management to be performed through the UCS 6100 Fabric Interconnect modules. In fact, up to 40 chassis could be managed by 1 pair of 6100’s. There are additional features this design offers, but for the sake of this discussion, I’m calling WINNER: Cisco.
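To put rough numbers on that, here is a short Python sketch that takes the 160 B440 M1 servers one pair of 6100’s can cover (40 chassis x 4 blades) and counts how many Dell or IBM chassis it would take to hold the same number of 4 processor servers. The names are my own, and the sketch only counts chassis; it assumes nothing about how each vendor’s management software groups them.

```python
import math

CHASSIS_PER_6100_PAIR = 40  # from the article

# 4 processor servers per chassis, from the density section above.
SERVERS_PER_CHASSIS = {
    "Cisco UCS 5108": 4,
    "Dell M1000e": 8,
    "IBM BladeCenter H": 7,
}

# Servers covered by one pair of 6100's: 40 chassis x 4 blades.
target_servers = CHASSIS_PER_6100_PAIR * SERVERS_PER_CHASSIS["Cisco UCS 5108"]


def chassis_needed(servers: int, per_chassis: int) -> int:
    """Chassis required to hold the given number of servers."""
    return math.ceil(servers / per_chassis)


chassis_counts = {name: chassis_needed(target_servers, per_chassis)
                  for name, per_chassis in SERVERS_PER_CHASSIS.items()}

print(f"Target: {target_servers} servers")
for name, count in chassis_counts.items():
    print(f"{name}: {count} chassis")
```

The 160 figure matches the per-system maximum quoted earlier; the Dell and IBM chassis counts are the number of chassis-level controllers that would sit behind whatever console manages them.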

Cisco’s UCS B440 M1 is expected to ship in the June time frame. Pricing is not yet available. For more information, please visit Cisco’s UCS web site at http://www.cisco.com/en/US/products/ps10921/index.html.

any news about HP ?

No info on #hp Nehalem EX blades yet. Maybe soon. Thanks for reading!

kevin,

I understand it is impossible to do winner/loser for this kind of thing as it really comes down to what an individual customer wants/needs. but i just have some comments:

1) Processor – real stretch to give IBM an advantage here:

– IBM doesn't offer the most attractive 6500 series procs (the 8 core) and they don't offer any 130W 7500 skus even in a 2S configuration. IBM talks about wanting to play in high memory 2S use cases, but they dont have the processor offerings to do that.

– i dont see how you take IBM connecting 2 HX5's together with a single point of failure (the “lego”) to create a REALLY expensive 4S as an advantage. M910 scales from 2S to 4S just by removing the FlexMem bridges and adding procs. you end up with the same thing, but IBM takes a much more expensive, complex, and failure prone path to get there.

– Cisco doesn't offer 6500 series that i can see and i don't see them offering a 2S configuration. this is a major disadvantage

2) Memory

– don't be so sure about 16GB dimms not being used by customers, the cost is dropping dramatically on them as well as with 8GB dimms.

– not sure why cisco isn't talking about 16GB, but lack of 16GB support for IBM is due to them having to use VLP memory. the tradeoff to IBM requiring VLP memory in order to cram everything in is cost premium of the memory itself and also ability to hit the high capacity points.

3) Density

– more than pure servers/rack, the advantage M910 density has is that you get more leverage of the chassis & IO infrastructure, which lowers cost per unit.

4) Management

-first of all, doubt you'll run into anyone with 40 chassis on a pair of 6100s. cisco just came out with a new 8 port FEX for their chassis, so clearly they got pushback that the 4 uplinks they had previously was oversubscribed. the more uplinks you use out of their FEX, the lower the scope of management of the 6100.

-IBM, Dell, HP… everyone has 1:many consoles which can manage way more than 320 servers (rack/blade/tower).

-i'd be skeptical about something that is supposed to be super easy to manage, but requires you to go to a week of school to learn how to use.

In addition to these excellent points from mike, I thought you would have remembered the FlexMem capability of the Dell M910 that you posted about a couple of days earlier. Being able to use all 32 DIMM slots while only using 2 CPUs really makes the M910 stand out compared to the Cisco and IBM offerings in regard to RAM per CPU ratio.

Great points vs my opinions on #cisco 's new blade, B440-M1, although #dell slanted. I appreciate the comments.

I dunno Mike.

The “lego” is a passive, solid bridge, with no components. It's considerably LESS likely to fail than the Dell non-redundant backplane design..or any system board component for that matter. You can try to “spin” it as you like, but from an engineering perspective, it's a simple solid connector.

Flexmem is a proprietary ASIC that Dell HAD to develop, since your model is starting with a more expensive 4S design, and only populating 2S, rather than the less expensive, flexible building block approach of HX5. Want more sockets? Attach two blades together. Want more memory? Add an extra blade. Dell has to START big, and use proprietary technology to “reduce down”. Also, that design is going to incur a latency hit..we'll see how much when benchmarks release.

16GB DIMM cost is still exorbitant. Customers are far more likely to use 4GB or 8GB. An 8GB + MAX5 blade still costs far less than a blade full of 16GB.

Also note that on Nehalem-EX, you MUST have 8 DIMMS per CPU to get max memory performance. Anything less cuts bandwidth down dramatically. Why? Because you have 2 memory controllers per socket, and 4 channels per controller.

The 7500 is a 4-way system. It only has enough QPI’s for 4 sockets. So you give a win based on speculation that it is incapable of. The 6500 is the same socket as the 7500 but with QPI for a two socket system. Loser: IBM for depending on an expansion chassis. TIE: Dell & Cisco

Density: “Theoretically” is nice – but do you have enough power for that many chassis per rack?

Memory: Tie. Dell allows the use of cheaper UDIMM’s – The 16GB RDIMM’s require more thorough testing. 32 DIMM slots are 32 DIMM slots.

To Mike’s comment on management. It takes a week to unlearn all the bad habits.