On 2/25/2010, Tolly.com announced a new test report comparing network bandwidth scalability between the HP BladeSystem c7000 with BL460 G6 servers and the Cisco UCS 5100 with B200 servers, and the results were interesting. The report tested 6 HP blades with a single Flex-10 Module against 6 Cisco blades using their Fabric Extender plus a single Fabric Interconnect. I’m not going to restate what the report says (you can download it directly for that); instead, I’m going to highlight the results. It is important to note that the report was “commissioned by Hewlett-Packard Dev. Co, L.P.”

Result #1: HP BladeSystem c7000 with a Flex-10 Module Measured More Aggregate Server Throughput (Gbps) than Cisco UCS with a Fabric Extender Connected to a Fabric Interconnect in a Physical-to-Physical Comparison

>When 4 physical servers were tested, Cisco achieved an aggregate throughput of 36.59 Gbps vs. HP’s 35.83 Gbps (WINNER: Cisco)

>When 6 physical servers were tested, Cisco achieved an aggregate throughput of 27.37 Gbps vs. HP’s 53.65 Gbps, a difference of 26.28 Gbps (WINNER: HP)
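
As a quick arithmetic check on the physical-to-physical numbers, the short Python sketch below recomputes the HP-minus-Cisco gap and the average throughput per server from the figures quoted above. The per-server averages are my own division of the aggregate figures, not numbers published in the report, and the names (`RESULTS`, `summarize`) are just illustrative.

```python
# Aggregate throughput (Gbps) from the physical-to-physical results quoted above.
RESULTS = {
    4: {"HP": 35.83, "Cisco": 36.59},
    6: {"HP": 53.65, "Cisco": 27.37},
}


def summarize(results):
    """Return the HP-minus-Cisco gap and per-server averages, rounded to 2 decimals.

    Per-server figures are simple division of the aggregate numbers,
    not values reported by Tolly.
    """
    summary = {}
    for servers, r in results.items():
        summary[servers] = {
            "gap": round(r["HP"] - r["Cisco"], 2),
            "hp_per_server": round(r["HP"] / servers, 2),
            "cisco_per_server": round(r["Cisco"] / servers, 2),
        }
    return summary


for servers, s in summarize(RESULTS).items():
    print(f"{servers} servers: {s}")
```

Per server, HP stays close to 9 Gbps at both 4 and 6 servers, while Cisco’s average roughly halves, from about 9.15 Gbps to about 4.56 Gbps.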

Result #2: HP BladeSystem c7000 with a Flex-10 Module Measured More Aggregate Server Throughput (Gbps) than Cisco UCS with a Fabric Extender Connected to a Fabric Interconnect in a Virtual-to-Virtual Comparison

>Testing 2 servers, each running 8 Red Hat Linux virtual machines on VMware, showed that HP achieved an aggregate throughput of 16.42 Gbps vs. Cisco UCS’s 16.70 Gbps (WINNER: Cisco).

These results were obtained with each of the 2 x Cisco B200 blade servers mapped to a dedicated 10Gb uplink port on the Fabric Extender (FEX). When the 2 x Cisco B200 blade servers were configured to share the same 10Gb uplink port on the FEX, the aggregate throughput on the Cisco UCS dropped to 9.10 Gbps.
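
To put the shared-uplink result in perspective, a one-function sketch expresses the drop as a percentage. This only reworks the two Cisco numbers reported above; `percent_drop` is a hypothetical helper, not anything from the report.

```python
def percent_drop(before_gbps: float, after_gbps: float) -> float:
    """Relative throughput loss as a percentage of the 'before' figure."""
    return (before_gbps - after_gbps) / before_gbps * 100


# Cisco UCS virtual-to-virtual results quoted above (Gbps).
DEDICATED_UPLINKS = 16.70  # each B200 on its own 10Gb FEX uplink
SHARED_UPLINK = 9.10       # both B200s on the same 10Gb FEX uplink

print(f"{percent_drop(DEDICATED_UPLINKS, SHARED_UPLINK):.1f}% lower")
```

That works out to roughly a 45% reduction, which also leaves the shared-uplink Cisco result 7.32 Gbps below HP’s 16.42 Gbps.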

A few points to note about these findings:

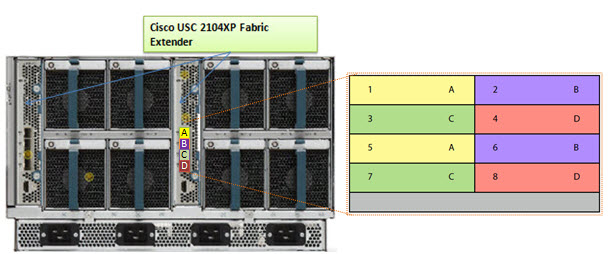

a) The HP Flex-10 Module has 8 x 10Gb uplinks, whereas the Cisco Fabric Extender (FEX) has 4 x 10Gb uplinks.

b) Cisco’s FEX design maps the 8 blade servers onto the FEX’s 4 external ports at a 2:1 ratio (2 blades per external FEX port). The current Cisco UCS design requires each server to be “pinned”, or statically assigned, to its FEX uplink. This works well with up to 4 blade servers, but beyond 4, servers start sharing an uplink, which can cause bandwidth contention.
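
To illustrate why pinning matters, here is a simplified Python model of 8 blade slots statically pinned to 4 FEX uplinks at 2:1. The slot-to-uplink mapping (a simple round-robin, so slots 1 and 5 share uplink 1) and the assumption that a busy uplink’s 10Gb is split evenly among its active blades are mine, for illustration only; they are not taken from the report or from Cisco’s documented pinning rules, and the function names are hypothetical. The sketch shows per-blade ceilings, not the measured throughput.

```python
from collections import Counter

FEX_UPLINKS = 4      # external 10Gb ports on the FEX
UPLINK_GBPS = 10.0   # nominal line rate of one uplink


def pinned_uplink(slot: int) -> int:
    """Hypothetical static pinning: blade slots 1-8 onto uplinks 1-4 (2:1)."""
    return (slot - 1) % FEX_UPLINKS + 1


def per_server_ceiling(active_slots: list[int]) -> dict[int, float]:
    """Upper bound per blade, assuming each uplink's bandwidth is split evenly
    among the active blades pinned to it. A pinned blade never borrows
    capacity from another (possibly idle) uplink."""
    blades_per_uplink = Counter(pinned_uplink(s) for s in active_slots)
    return {s: UPLINK_GBPS / blades_per_uplink[pinned_uplink(s)] for s in active_slots}


print(per_server_ceiling([1, 2, 3, 4]))  # {1: 10.0, 2: 10.0, 3: 10.0, 4: 10.0}
print(per_server_ceiling([1, 5]))        # {1: 5.0, 5: 5.0}
```

With blades 1 and 5 active, both land on uplink 1 in this model and each is capped at 5 Gbps while uplinks 2 through 4 sit idle: a pinned blade cannot borrow bandwidth from an unused uplink.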

Furthermore, it’s important to understand that the UCS blade infrastructure design does not allow traffic from Server 1 to reach Server 2 without leaving the FEX: it travels up to the Fabric Interconnect (top of the picture), then back down to the FEX and on to the server. This design is a potential cause of the drop in aggregate throughput from 16.70 Gbps to 9.10 Gbps shown above.
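
The hairpin path can be sketched by counting how many flows cross each uplink in each direction. This hypothetical model assumes, as described above, that the FEX does no local switching, so every blade-to-blade flow goes up on the sender’s pinned uplink and comes back down on the receiver’s; it also assumes the two blades send to each other at the same time, which the report may or may not have done. It counts flows only and does not predict Gbps.

```python
from collections import Counter


def uplink_load(pinning: dict[str, int], flows: list[tuple[str, str]]) -> Counter:
    """Count flows crossing each (uplink, direction) of the FEX.

    Assumes no local switching in the FEX: each flow leaves on the sender's
    pinned uplink ("up"), is switched by the Fabric Interconnect, and returns
    on the receiver's pinned uplink ("down").
    """
    load: Counter = Counter()
    for src, dst in flows:
        load[(pinning[src], "up")] += 1
        load[(pinning[dst], "down")] += 1
    return load


# Two blades sending to each other simultaneously (hypothetical traffic pattern).
flows = [("blade1", "blade2"), ("blade2", "blade1")]
dedicated = uplink_load({"blade1": 1, "blade2": 2}, flows)
shared = uplink_load({"blade1": 1, "blade2": 1}, flows)

print(max(dedicated.values()))  # 1
print(max(shared.values()))     # 2
```

With dedicated uplinks, each direction of each uplink carries one flow; when both blades share an uplink, each direction of that single 10Gb link carries both flows. That is consistent with, though not proof of, the drop from 16.70 Gbps to 9.10 Gbps.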

One of the “Bottom Line” conclusions of this report states, “throughput degradation on the Cisco UCS caused by bandwidth contention is a cause of concern for customers considering the use of UCS in a virtual server environment.” However, I encourage you to take a few minutes, download the full report from the Tolly.com website, and draw your own conclusions.

Let me know your thoughts about this report – leave a comment below.

Disclaimer: This report was brought to my attention while I was attending the HP Tech Day event, where airfare, accommodations and meals are being provided by HP; however, the content being blogged is solely my opinion and does not in any way express the opinions of HP.