Wow. HP continues its “blade everything” campaign from 2007 with a new offering today – HP Integrity Superdome 2 on blade servers. Touting a message of a “Mission-Critical Converged Infrastructure,” HP is combining the mission-critical Superdome 2 architecture with the successful, scalable blade architecture.

Monthly Archives: April 2010

Will LIGHT Replace Cables in Blade Servers?

Part of the Technology Behind Lightfleet's Optical Interconnect Technology (courtesy Lightfleet.com)

CNET.com recently reported that for the past 7 years, a company called Lightfleet has been working on a way to replace the cabling and switches used in blade environments with light, and in fact has already delivered a prototype to Microsoft Labs.

Behind the Scenes at the InfoWorld Blade Shoot-Out

Daniel Bowers, at HP, recently posted some behind-the-scenes info on what HP used for the InfoWorld Blade Shoot-Out, which I posted on a few weeks ago (check it out here) and in which HP’s blade design took 1st place. According to Daniel, HP’s final config consisted of:

- a c7000 enclosure

- 4 ProLiant BL460c G6 server blades (loaded with VMware ESX) built with 6-core Xeon processors and 8GB LVDIMMs

- 2 additional BL460c G6’s with StorageWorks SB40c storage blades for shared storage

- a Virtual Connect Flex-10 module

- a 4Gb fibre switch

Jump over to Daniel’s HP blog to read the full article, including how HP almost didn’t compete in the Shoot-Out and how they controlled another c7000 enclosure 3,000 miles away (Hawaii to Houston).

Daniel’s HP blog is located at http://www.communities.hp.com/online/blogs/eyeonblades/archive/2010/04/13/behind-the-scenes-at-the-infoworld-blade-shoot-out.aspx

IBM Announces New Blade Servers with POWER7 (UPDATED)

UPDATED 4/14/2010 – IBM announced today their newest blade servers using the POWER7 processor. The BladeCenter PS700, PS701 and PS702 servers are IBM’s latest addition to the blade server family, following last month’s announcement of the BladeCenter HX5 server, based on the Nehalem EX processor. The POWER7 processor-based PS700, PS701 and PS702 blades support AIX, IBM i, and Linux operating systems. (For Windows operating systems, stick with the HS22 or the HX5.) For those of you not familiar with the POWER processor, the POWER7 processor is a 64-bit, 4 core with 256KB L2 cache per core and 4MB L3 cache per core. Today’s announcement reflects IBM’s new naming schema as well. Instead of being labeled “JS” blades like in the past, the new POWER family blade servers will be titled “PS” – for Power Systems. Finally – a naming schema that makes sense. (Will someone explain what IBM’s “LS” blades stand for??) Included in today’s announcement are the PS700, PS701 and PS702 blades. Let’s review each.

IBM BladeCenter PS700

The PS700 blade server is a single socket, single wide 4-core 3.0GHz POWER7 processor-based server that has the following:

- 8 DDR3 memory slots (available memory sizes are 4GB, 1066MHz or 8GB, 800MHz)

- 2 onboard 1Gb Ethernet ports

- integrated SAS controller supporting RAID levels 0,1 or 10

- 2 onboard disk drives (SAS or Solid State Drives)

- one PCIe CIOv expansion card slot

- one PCIe CFFh expansion card slot

The PS700 is supported in the BladeCenter E, H, HT and S chassis. (Note, support in the BladeCenter E requires an Advanced Management Module and a minimum of two 2000 watt power supplies.)

IBM BladeCenter PS701

The PS701 blade server is a single socket, single wide 8-core 3.0GHz POWER7 processor-based server that has the following:

- 16 DDR3 memory slots (available memory sizes are 4GB, 1066MHz or 8GB, 800MHz)

- 2 onboard 1Gb Ethernet ports

- integrated SAS controller supporting RAID levels 0,1 or 10

- 1 onboard disk drive (SAS or Solid State Drive)

- one PCIe CIOv expansion card slot

- one PCIe CFFh expansion card slot

The PS701 is supported in the BladeCenter H, HT and S chassis only.

IBM BladeCenter PS702

The PS702 blade server is a dual socket, double-wide 16-core (via 2 x 8-core CPUs) 3.0GHz POWER7 processor-based server that has the following:

- 32 DDR3 memory slots (available memory sizes are 4GB, 1066MHz or 8GB, 800MHz)

- 4 onboard 1Gb Ethernet ports

- integrated SAS controller supporting RAID levels 0,1 or 10

- 2 onboard disk drives (SAS or Solid State Drives)

- 2 PCIe CIOv expansion card slots

- 2 PCIe CFFh expansion card slots

The PS702 is supported in the BladeCenter H, HT and S chassis only.
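To put the memory slot counts above in perspective, here is a quick sketch of the total memory each PS blade would hold with each listed DIMM size. It assumes every slot can be filled with the same DIMM size, which the spec lists above do not confirm; the names `DIMM_SLOTS`, `DIMM_OPTIONS` and `max_memory_gb` are made up for this illustration.

```python
# DIMM slot counts taken from the spec lists above.
DIMM_SLOTS = {"PS700": 8, "PS701": 16, "PS702": 32}

# Available DIMM sizes (GB) mapped to their listed speed (MHz).
DIMM_OPTIONS = {4: 1066, 8: 800}


def max_memory_gb(model: str, dimm_size_gb: int) -> int:
    """Total memory if every slot holds a DIMM of the given size.

    Assumption: all slots can be populated with identical DIMMs.
    """
    if dimm_size_gb not in DIMM_OPTIONS:
        raise ValueError(f"unsupported DIMM size: {dimm_size_gb}GB")
    return DIMM_SLOTS[model] * dimm_size_gb


for model in DIMM_SLOTS:
    for size, speed in DIMM_OPTIONS.items():
        total = max_memory_gb(model, size)
        print(f"{model}: {total}GB using {size}GB DIMMs at {speed}MHz")
```

Note the trade-off the listed options imply: the larger 8GB DIMMs double the capacity but run at the slower 800MHz speed.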

For more technical details on the PS blade servers, please visit IBM’s redbook page at: http://www.redbooks.ibm.com/redpieces/abstracts/redp4655.html?Open

Rumour? New 8 Port Cisco Fabric Extender for UCS

I recently heard a rumour that Cisco was coming out with an 8 port Fabric Extender (FEX) for the UCS 5108, so I thought I’d take some time to see what this would look like. NOTE: this is purely speculation, I have no definitive information from Cisco so this may be false info.

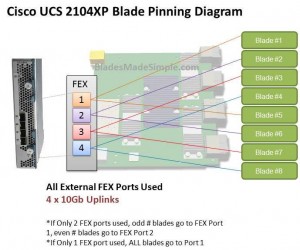

Before we discuss the 8 port FEX, let’s take a look at the 4 port UCS 2140XP FEX and how the blade servers connect, or “pin” to them. The diagram below shows a single FEX. A single UCS 2140XP FEX has 4 x 10Gb uplinks to the 6100 Fabric Interconnect Module. The UCS 5108 chassis has 2 FEX per chassis, so each server would have a 10Gb connection per FEX. However, as you can see, the server shares that 10Gb connection with another blade server. I’m not an I/O guy, so I can’t say whether or not having 2 servers connect to the same 10Gb uplink port would cause problems, but simple logic would tell me that two items competing for the same resource “could” cause contention. If you decide to only connect 2 of the 4 external FEX ports, then you have all of the “odd #” blade servers connecting to port 1 and all of the “even #” blade servers connecting to port 2. Now you are looking at 4 servers contending for 1 uplink port. Of course, if you only connect 1 external uplink, then you are looking at all 8 servers using 1 uplink port.
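The sharing described above can be sketched in a few lines of Python. This is only an illustration: it assumes blades are pinned to uplinks round-robin by slot number, which matches the odd/even split described for 2 uplinks but is my assumption for the 4 uplink case. The function name `pin_blades` is made up for this example.

```python
from collections import defaultdict

BLADE_SLOTS = 8  # blade servers per chassis, as described in the text


def pin_blades(connected_uplinks: int) -> dict[int, list[int]]:
    """Map each connected FEX uplink port to the blade slots sharing it.

    Assumption: slots are assigned to uplinks round-robin by slot number.
    """
    if connected_uplinks not in (1, 2, 4):
        raise ValueError("this sketch only covers 1, 2 or 4 connected uplinks")
    pinning = defaultdict(list)
    for slot in range(1, BLADE_SLOTS + 1):
        port = (slot - 1) % connected_uplinks + 1
        pinning[port].append(slot)
    return dict(pinning)


for uplinks in (4, 2, 1):
    for port, slots in pin_blades(uplinks).items():
        print(f"{uplinks} uplink(s): port {port} carries blades {slots}")
```

With 4 uplinks each port carries 2 blades, with 2 uplinks each carries 4, and with a single uplink all 8 blades share one 10Gb port.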

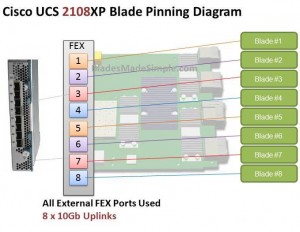

Introducing the 8 Port Fabric Extender (FEX)

I’ve looked around and can’t confirm if this product is really coming or not, but I’ve heard a rumour that there is going to be an 8 port version of the UCS 2100 series Fabric Extender. I’d imagine it would be the UCS 2180XP Fabric Extender and the diagram below shows what I picture it would look like. The biggest advantage I see of this design would be that each server would have a dedicated uplink port to the Fabric Interconnect. That being said, if the existing 20 and 40 port Fabric Interconnects remain, this 8 port FEX design would quickly eat up the available ports on the Fabric Interconnect switches since the FEX ports directly connect to the Fabric Interconnect ports. So – does this mean there is also a larger 6100 series Fabric Interconnect on the way? I don’t know, but it definitely seems possible.
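To see how quickly an 8 port FEX would eat up Fabric Interconnect ports, here is a rough sketch. It assumes each FEX in a chassis connects to its own Fabric Interconnect, and that every Fabric Interconnect port is free for FEX uplinks (in practice some would be needed for upstream network connections). The function name `max_chassis` is hypothetical.

```python
def max_chassis(fi_ports: int, uplinks_per_fex: int) -> int:
    """Chassis one Fabric Interconnect can serve if every FEX uplink is cabled.

    Assumptions: one FEX per chassis attaches to this Fabric Interconnect,
    and no ports are reserved for anything other than FEX uplinks.
    """
    if uplinks_per_fex < 1:
        raise ValueError("a FEX needs at least one uplink")
    return fi_ports // uplinks_per_fex


for fi_ports in (20, 40):
    for uplinks in (4, 8):
        chassis = max_chassis(fi_ports, uplinks)
        print(f"{fi_ports}-port FI, {uplinks} uplinks per FEX: {chassis} chassis")
```

Under these assumptions, a fully cabled 8 port FEX would cut the chassis count per Fabric Interconnect in half compared to a fully cabled 4 port FEX.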

What do you think of this rumoured new offering? Does having a 1:1 blade server to uplink port matter or is this just more

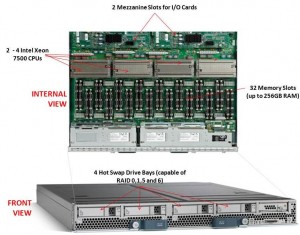

New Cisco Blade Server: B440-M1

Cisco recently announced their first blade offering with the Intel Xeon 7500 processor, known as the “Cisco UCS B440-M1 High-Performance Blade Server.” This new blade is a full-width blade that offers 2 – 4 Xeon 7500 processors and 32 memory slots, for up to 256GB RAM, as well as 4 hot-swap drive bays. Since the server is a full-width blade, it will have the capability to handle 2 dual-port mezzanine cards for up to 40 Gbps I/O per blade.

Each Cisco UCS 5108 Blade Server Chassis can house up to four B440 M1 servers (maximum 160 per Unified Computing System).

How Does It Compare to the Competition?

Since I like to talk about all of the major blade server vendors, I thought I’d take a look at how the new Cisco B440 M1 compares to IBM and Dell. (HP has not yet announced their Intel Xeon 7500 offering.)

Processor Offering

Both Cisco and Dell offer models with 2 – 4 Xeon 7500 CPUs as standard. They each have variations on speeds – Dell has 9 processor speed offerings; Cisco hasn’t released their speeds and IBM’s BladeCenter HX5 blade server will have 5 processor speed offerings initially. Of the 3 vendors’ blades, however, IBM’s blade server is the only one that is designed to scale from 2 CPUs to 4 CPUs by connecting 2 x HX5 blade servers. Along with this comes their “FlexNode” technology that enables users to split the 4 processor blade system back into 2 x 2 processor systems at specific points during the day. Although not announced, and purely my speculation, IBM’s design also leads to a possible future capability of connecting 4 x 2 processor HX5’s for an 8-way design. Since each of the vendors offers up to 4 x Xeon 7500’s, I’m going to give the advantage in this category to IBM. WINNER: IBM

Memory Capacity

Both IBM and Cisco are offering 32 DIMM slots with their blade solutions; however, they are not certifying the use of 16GB DIMMs – only 4GB and 8GB DIMMs – so their offerings only scale to 256GB of RAM. Dell claims to offer 512GB of memory capacity on their PowerEdge 11G M910 blade server; however, that is using 16GB DIMMs. Realistically, I think the M910 would only be used with 8GB DIMMs, so Dell’s design would equal IBM and Cisco’s. I’m not sure who has the money to buy 16GB DIMMs, but if they do – WINNER: Dell (or a TIE)

Server Density

As previously mentioned, Cisco’s B440-M1 blade server is a “full-width” blade, so 4 will fit into a 6U high UCS5100 chassis. Theoretically, you could fit 7 x UCS5100 blade chassis into a rack, which would equal a total of 28 x B440-M1’s per 42U rack.

Overall, Cisco’s new offering is a nice addition to their existing blade portfolio. While IBM has some interesting innovation in CPU scalability and Dell appears to have the overall advantage in server density, Cisco leads on the management front.

Dell’s PowerEdge 11G M910 blade server is a “full-height” blade, so 8 will fit into a 10U high M1000e chassis. This means that 4 x M1000e chassis would fit into a 42U rack, so 32 x Dell PowerEdge M910 blade servers should fit into a 42U rack.

IBM’s BladeCenter HX5 blade server is a single slot blade server; however, as a 4 processor blade it takes up 2 server slots. The BladeCenter H has 14 server slots, so that makes the IBM solution capable of holding 7 x 4 processor HX5 blade servers per chassis. Since the chassis is 9U high, you can only fit 4 into a 42U rack, so you would be able to fit a total of 28 IBM HX5 (4 processor) servers into a 42U rack.

WINNER: Dell
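The rack math in this category can be double-checked with a short sketch. It uses only the chassis heights and servers-per-chassis figures quoted above, and assumes the whole 42U rack is available for blade chassis (no space set aside for switches or power distribution). The class and field names are made up for this example.

```python
from dataclasses import dataclass

RACK_UNITS = 42  # rack height used throughout the comparison


@dataclass(frozen=True)
class BladeOption:
    name: str
    chassis_height_u: int
    servers_per_chassis: int  # 4 processor servers per chassis

    def chassis_per_rack(self) -> int:
        # Only whole chassis fit, so round down.
        return RACK_UNITS // self.chassis_height_u

    def servers_per_rack(self) -> int:
        return self.chassis_per_rack() * self.servers_per_chassis


OPTIONS = [
    BladeOption("Cisco B440-M1", chassis_height_u=6, servers_per_chassis=4),
    BladeOption("Dell M910", chassis_height_u=10, servers_per_chassis=8),
    BladeOption("IBM HX5 (4 processor)", chassis_height_u=9, servers_per_chassis=7),
]

for option in OPTIONS:
    print(
        f"{option.name}: {option.chassis_per_rack()} chassis, "
        f"{option.servers_per_rack()} servers per {RACK_UNITS}U rack"
    )
```

Cisco is the only one of the three that fills the rack completely (7 x 6U), while Dell and IBM each leave a few rack units unused.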

Management

The final category I’ll look at is the management. Both Dell and IBM have management controllers built into their chassis, so management of a lot of chassis as described above in the maximum server / rack scenarios could add some additional burden. Cisco’s design, however, allows for the management to be performed through the UCS 6100 Fabric Interconnect modules. In fact, up to 40 chassis could be managed by 1 pair of 6100’s. There are additional features this design offers, but for the sake of this discussion, I’m calling WINNER: Cisco.

Cisco’s UCS B440 M1 is expected to ship in the June time frame. Pricing is not yet available. For more information, please visit Cisco’s UCS web site at http://www.cisco.com/en/US/products/ps10921/index.html.