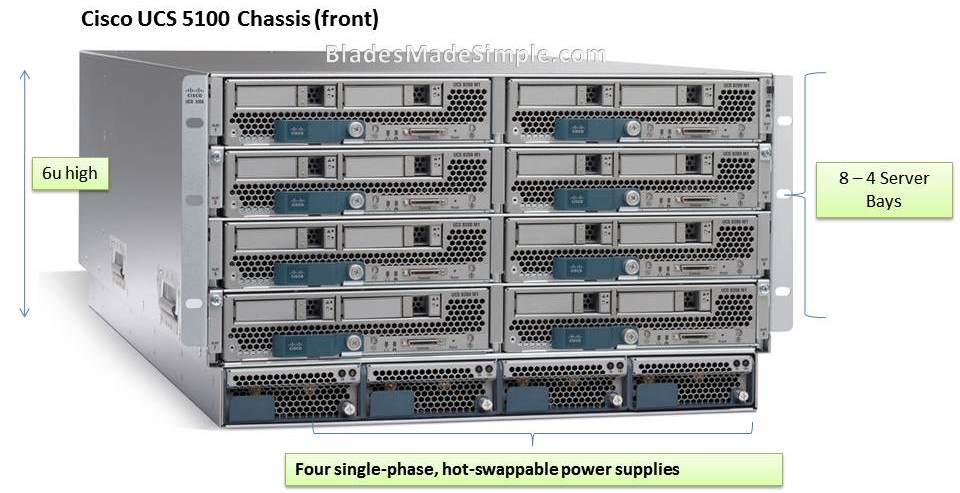

Cisco’s Unified Computing System (UCS) is a bit unique in that the chassis is only a small component of the overall offering. Cisco’s UCS is a “system” of components that consists of blade servers, blade chassis, fabric extenders and fabric interconnects. The blade chassis is called the UCS 5100. It is a 6 rack unit (6u) tall chassis that can hold anywhere from 4 to 8 blade servers, depending on the blade form factor. The chassis comes with 4 front-accessible, hot-swappable 2500W single-phase power supplies that are 92 percent efficient and support non-redundant, N+1 redundant, and grid-redundant configurations.
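To put the power supply figures in perspective, here is a rough Python sketch of how much output each redundancy mode leaves you with. It takes the 4 x 2500W and 92 percent numbers from above. It also assumes that N+1 holds one supply in reserve and that grid-redundant splits the four supplies 2+2 across two AC feeds. Those splits are assumptions about how the modes divide the supplies, not Cisco's sizing method, so treat the results as ballpark figures.

```python
# Rough sketch: usable output power of a UCS 5100 chassis per redundancy mode.
# Figures from the text: 4 x 2500 W supplies at 92 percent efficiency.
# Assumption: N+1 keeps one supply in reserve; grid-redundant splits the
# supplies 2+2 across two AC feeds so either feed alone can carry the load.

PSU_WATTS = 2500
PSU_COUNT = 4
EFFICIENCY = 0.92


def usable_watts(mode: str) -> int:
    """Output power available while still honoring the redundancy mode."""
    if mode == "non-redundant":
        active = PSU_COUNT          # every supply carries load
    elif mode == "n+1":
        active = PSU_COUNT - 1      # one supply held in reserve
    elif mode == "grid":
        active = PSU_COUNT // 2     # survive the loss of a whole feed
    else:
        raise ValueError(f"unknown mode: {mode!r}")
    return active * PSU_WATTS


def input_watts(output_watts: float) -> float:
    """AC input needed to deliver a given output at the stated efficiency."""
    return output_watts / EFFICIENCY


for mode in ("non-redundant", "n+1", "grid"):
    out = usable_watts(mode)
    print(f"{mode}: {out} W usable, about {input_watts(out):.0f} W drawn at that load")
```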

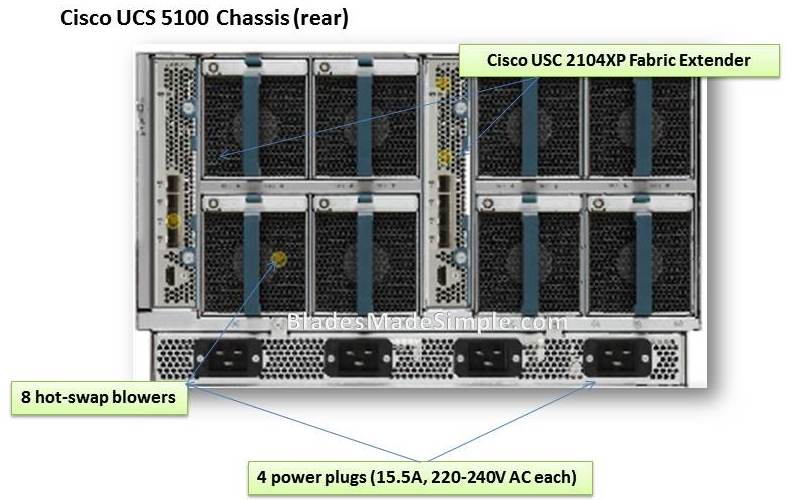

The rear of the UCS 5100 chassis offers 4 hot-swap blowers and 4 power plug connectors requiring 15.5A at 220-240V AC. There is also a pair of redundant fabric extenders, and this is where Cisco’s design differs from everyone else. These “fabric extenders”, known as the UCS 2104XP Fabric Extender, simply extend the reach of the onboard 10Gb or Converged Network Adapter (CNA) I/O fabric from the blade server bays to the management console, known as the fabric interconnect. I previously blogged that there were rumours at one time of an 8-port version of the fabric extender; however, to date I have not seen any proof of this. The UCS 2104XP Fabric Extender provides 4 x 10Gb uplinks, so if you have 8 blade servers, you would theoretically be looking at a 2:1 ratio (8 blade servers to 4 uplinks). There have been several comments and blog posts on the functionality of the fabric extender, including the infamous Tolly Report, which drew responses from the Tolly Group, Cisco employees and HP employees. In summary, though, the 4 x 10Gb uplinks are adequate for handling all the I/O that a maximum of 8 blade servers can throw at them. Yes, you can put two in for redundant pathways as well. The fabric extenders connect into the brains of the solution: the fabric interconnect.
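The 2:1 figure is simple division, but it is worth seeing how it changes as you cable fewer uplinks. This Python sketch assumes each blade has one 10Gb path into a given fabric extender and that the 2104XP is cabled with 1 to 4 of its uplinks. It looks at one fabric extender only and ignores the second.

```python
# Sketch: oversubscription ratio on a single UCS 2104XP fabric extender.
# Assumption: each blade has one 10Gb path to this FEX, and the FEX is
# cabled with between 1 and 4 of its 10Gb uplinks.
from fractions import Fraction

BLADE_LINK_GBPS = 10
UPLINK_GBPS = 10
MAX_UPLINKS = 4  # the 2104XP provides 4 x 10Gb uplinks


def oversubscription(blades: int, uplinks: int) -> Fraction:
    """Ratio of blade-facing bandwidth to uplink bandwidth (2 means 2:1)."""
    if not 1 <= uplinks <= MAX_UPLINKS:
        raise ValueError("a 2104XP has between 1 and 4 uplinks")
    downstream = blades * BLADE_LINK_GBPS
    upstream = uplinks * UPLINK_GBPS
    return Fraction(downstream, upstream)


for uplinks in (4, 2, 1):
    ratio = oversubscription(8, uplinks)
    print(f"8 blades over {uplinks} uplink(s): {ratio.numerator}:{ratio.denominator}")
```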

The function of the UCS 6100 fabric interconnect is to connect ALL of the UCS 5100 chassis to the network and storage fabrics. The Cisco UCS 6100 series fabric interconnect currently comes in two flavors: a 20-port model (UCS 6120XP) and a 40-port model (UCS 6140XP). A 20-port interconnect could connect 5 x UCS 5100 chassis’ fabric extenders (4 ports x 5 chassis = 20), all the way up to 20 x UCS 5100 chassis (1 port per fabric extender). This last example doesn’t seem ideal, as you would be running up to 8 blade servers’ worth of 10Gb I/O traffic up a single 10Gb uplink – but, who knows – I’m not a networking guy, so I’ll have to leave those comments to the experts. Personally, I think that it’s a bunch of marketing fluff…
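The port math for the fabric interconnect can be sketched the same way. This assumes every fixed port on the interconnect is used for fabric extender links. In a real deployment some ports are needed for uplinks to the LAN, so the chassis counts here are upper bounds. It also assumes each blade gets an equal share of its fabric extender's links.

```python
# Sketch: how many chassis one fabric interconnect can attach, given how many
# ports each chassis's fabric extender uses. Port counts come from the text.
# Assumption: every fixed port is used for FEX links; in practice some ports
# also go to LAN uplinks, so real chassis counts will be lower.

FI_PORTS = {"UCS 6120XP": 20, "UCS 6140XP": 40}
BLADES_PER_CHASSIS = 8
LINK_GBPS = 10


def max_chassis(model: str, links_per_fex: int) -> int:
    """Chassis that fit when each one's FEX consumes links_per_fex ports."""
    return FI_PORTS[model] // links_per_fex


def gbps_per_blade_per_fex(links_per_fex: int) -> float:
    """Equal share of one FEX's uplink bandwidth for each of the 8 blades."""
    return links_per_fex * LINK_GBPS / BLADES_PER_CHASSIS


for model in FI_PORTS:
    for links in (4, 2, 1):
        print(f"{model}, {links} link(s) per FEX: {max_chassis(model, links)} chassis, "
              f"{gbps_per_blade_per_fex(links)} Gb per blade through this FEX")
```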

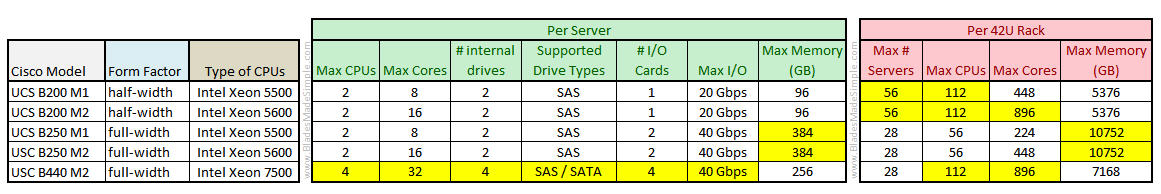

When we look at the sheer number of blade servers that you can fit into a 42u rack, we see that Cisco can fit a maximum of 7 chassis (6u tall each) into a rack. The stats below provide a good comparison between the different server offerings from Cisco.
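The rack density works out as follows. The sketch assumes a half-width blade population means 8 blades per chassis and a full-width population means 4, which are the two ends of the 4-to-8 range quoted for the chassis. It also assumes the rack holds nothing but chassis. If the fabric interconnects or PDUs share the rack, pass a smaller `rack_u`.

```python
# Sketch: blade density per 42u rack, from the figures in the text
# (6u chassis, 4 to 8 blades per chassis depending on form factor).
# Assumption: half-width blades fill 8 slots and full-width blades 4, and the
# rack holds only chassis (no fabric interconnects, PDUs or patch panels).

RACK_U = 42
CHASSIS_U = 6
BLADES_PER_CHASSIS = {"half-width": 8, "full-width": 4}


def chassis_per_rack(rack_u: int = RACK_U) -> int:
    return rack_u // CHASSIS_U


def blades_per_rack(form_factor: str, rack_u: int = RACK_U) -> int:
    return chassis_per_rack(rack_u) * BLADES_PER_CHASSIS[form_factor]


for form_factor in BLADES_PER_CHASSIS:
    print(f"{form_factor}: {blades_per_rack(form_factor)} blades "
          f"in {chassis_per_rack()} chassis")
```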

A few things to point out:

- there are no AMD options (Intel only)

- half-width blade servers have 1 x I/O card, whereas full-width blade servers have 2 x I/O cards

I/O Card Options

Cisco offers 4 different I/O network card options for its blade servers:

- Cisco UCS 82598KR-CI 10 Gigabit Ethernet Adapter – based on the Intel 82598 10 Gigabit Ethernet controller, which is designed for efficient high-performance Ethernet transport.

- Cisco UCS M71KR-E Emulex Converged Network Adapter – uses an Intel 82598 10 Gigabit Ethernet controller for network traffic and an Emulex 4-Gbps Fibre Channel controller for Fibre Channel traffic all on the same mezzanine card.

- Cisco UCS M71KR-Q QLogic Converged Network Adapter – uses an Intel 82598 10 Gigabit Ethernet controller for network traffic and a QLogic 4-Gbps Fibre Channel controller for Fibre Channel traffic, all on the same mezzanine card.

- Cisco UCS M81KR Virtual Interface Card – a dual-port 10 Gigabit Ethernet mezzanine card that supports up to 128 virtual interfaces that can be dynamically configured so that both their interface type (network interface card [NIC] or host bus adapter [HBA]) and identity (MAC address and worldwide name [WWN]) are established using just-in-time provisioning.
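To illustrate what just-in-time provisioning on the M81KR means, here is a hypothetical Python model. It describes a card that accepts up to 128 virtual interfaces, each typed as a NIC or an HBA and given a MAC or WWN identity when it is created. The class and method names are invented for illustration and are not a Cisco API. The only real-world rules the model enforces are the 128-interface limit quoted above and the standard lengths of a MAC address (6 octets) and a WWN (8 octets).

```python
# Hypothetical model of the M81KR behaviour described above: up to 128
# virtual interfaces, each given a type (NIC or HBA) and an identity
# (MAC or WWN) at provisioning time. Not a Cisco API.
import string
from dataclasses import dataclass, field
from enum import Enum

MAX_VIFS = 128


class VifType(Enum):
    NIC = "nic"  # identity is a 48-bit MAC address (6 octets)
    HBA = "hba"  # identity is a 64-bit worldwide name (8 octets)


IDENTITY_OCTETS = {VifType.NIC: 6, VifType.HBA: 8}


@dataclass
class VirtualInterface:
    name: str
    kind: VifType
    identity: str


@dataclass
class VirtualInterfaceCard:
    vifs: list = field(default_factory=list)

    def provision(self, name: str, kind: VifType, identity: str) -> VirtualInterface:
        """Create a virtual interface on demand after validating its identity."""
        if len(self.vifs) >= MAX_VIFS:
            raise RuntimeError(f"card already has {MAX_VIFS} virtual interfaces")
        octets = identity.split(":")
        well_formed = all(
            len(o) == 2 and all(c in string.hexdigits for c in o) for o in octets
        )
        if len(octets) != IDENTITY_OCTETS[kind] or not well_formed:
            raise ValueError(f"{identity!r} is not a valid {kind.value} identity")
        vif = VirtualInterface(name, kind, identity.lower())
        self.vifs.append(vif)
        return vif


card = VirtualInterfaceCard()
card.provision("vnic-eth0", VifType.NIC, "00:25:B5:00:00:01")
card.provision("vhba-fc0", VifType.HBA, "20:00:00:25:B5:00:00:01")
```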

Fellow blogger, Sean McGee, has written up a nice post on the Cisco UCS B-Series I/O Card Options. I recommend you go read it (after you finish this post). You can find Sean’s article here.

(For more details on these card options, please visit http://www.cisco.com/en/US/products/ps10280/products_data_sheets_list.html)

As you may notice, there are no card options that are Fibre Channel only or InfiniBand. This is part of Cisco’s UCS strategy: the network and storage traffic travel over the same cable from the blade server through the fabric extender to the fabric interconnect, where the traffic is separated into network fabrics and storage fabrics. With this design, Cisco requires at most 8 cables per blade chassis (4 from each fabric extender) and as few as 2 (1 per fabric extender). Compared to a traditional server environment using multiple 1Gb Ethernet and 4Gb Fibre Channel connections per server, that is a huge savings in cables.
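The cable savings are easy to quantify. In this sketch the UCS numbers come straight from the paragraph above. The traditional server profile (4 x 1Gb Ethernet plus 2 x 4Gb Fibre Channel per server) is an illustrative assumption rather than a specific configuration, and power cables are left out on both sides.

```python
# Sketch of the cable arithmetic above. UCS figures come from the text
# (2 fabric extenders per chassis, 1 to 4 cables each). The "traditional"
# server profile (4 x 1Gb Ethernet + 2 x 4Gb Fibre Channel per server) is an
# illustrative assumption. Power cables are ignored.


def ucs_chassis_cables(links_per_fex: int, fex_per_chassis: int = 2) -> int:
    if not 1 <= links_per_fex <= 4:
        raise ValueError("each fabric extender uses 1 to 4 cables")
    return fex_per_chassis * links_per_fex


def traditional_cables(servers: int, eth_ports: int = 4, fc_ports: int = 2) -> int:
    return servers * (eth_ports + fc_ports)


servers = 8
print(f"UCS chassis: {ucs_chassis_cables(1)} to {ucs_chassis_cables(4)} cables")
print(f"{servers} traditional servers: {traditional_cables(servers)} cables")
```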

Chassis Switch Options

As I have previously mentioned, the architecture of Cisco’s UCS blade environment takes an approach of “extending” the I/O connectivity from the blades to the fabric interconnect. With this design, there are no “switches”, therefore there are no switch options.

Server Management

Cisco’s blade infrastructure management lies within the Cisco UCS 6100 fabric interconnect. The base management software, called UCS Manager, is the central point of management for the entire UCS environment. It manages the UCS system, including the blades, the chassis, and the network (both LAN and SAN) – configuration, environmentals, etc. Take a few minutes to look at the UCS Manager software in this short video:

While the UCS Manager is rich in features, it does have the following limitations:

• (Hardware) Templates can NOT be shared across systems

• Available MAC addresses are scoped per UCS Manager instance (not across the Enterprise)

• Available WWN addresses are scoped per UCS Manager instance (not across the Enterprise)

• Available UUIDs are scoped per UCS Manager instance (not across the Enterprise)
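Why does per-instance scoping matter? The hypothetical sketch below models two UCS Manager instances that each define the same MAC pool range. Since neither knows what the other has handed out, they assign identical addresses. A single enterprise-wide pool avoids the problem. `MacPool` and `collisions` are invented for illustration and do not reflect how UCS Manager actually allocates addresses.

```python
# Hypothetical illustration of per-instance MAC pool scoping. Two UCS Manager
# instances that each define the same pool range hand out the same addresses,
# because neither knows what the other has assigned. A single shared pool
# avoids this. MacPool is an illustrative assumption, not UCS Manager code.
from collections import Counter


class MacPool:
    def __init__(self, prefix: str, size: int):
        # prefix supplies the first four octets, e.g. "00:25:B5:00"
        if not 0 < size <= 0x10000:
            raise ValueError("pool size must fit in the last two octets")
        self.prefix = prefix
        self.size = size
        self.next_index = 0

    def allocate(self) -> str:
        if self.next_index >= self.size:
            raise RuntimeError("MAC pool exhausted")
        n = self.next_index
        self.next_index += 1
        return f"{self.prefix}:{n >> 8:02X}:{n & 0xFF:02X}"


def collisions(*assignments: list) -> set:
    """MAC addresses handed out by more than one instance."""
    counts = Counter(mac for macs in assignments for mac in set(macs))
    return {mac for mac, seen in counts.items() if seen > 1}


# Two independent instances, each with its own copy of the same range.
site_a, site_b = MacPool("00:25:B5:00", 4), MacPool("00:25:B5:00", 4)
a = [site_a.allocate() for _ in range(4)]
b = [site_b.allocate() for _ in range(4)]
print("per-instance pools collide on:", sorted(collisions(a, b)))

# One shared pool consulted by both instances.
shared = MacPool("00:25:B5:00", 8)
c = [shared.allocate() for _ in range(4)]
d = [shared.allocate() for _ in range(4)]
print("shared pool collisions:", collisions(c, d))
```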

Bottom line – the UCS Manager is limited to each UCS Manager instance (one pair of fabric interconnects), and most of the features are manual steps. Never fear, however. Cisco offers BMC’s BladeLogic, which adds the following:

• UCS template creation and editing

• Cross-UCS template management

• Cross-UCS MAC and WWN Management

• Local disk provisioning for UCS

• SAN provisioning for UCS

• ESX provisioning for UCS

• Consolidated UCS operator and management action

• Manages UCS resources, VMs, guest OS, and business applications

The catch, however, is that the BMC BladeLogic is an extra cost. How much – I’m not sure, but it adds something… Cisco has a really good simulator that highlights what you can do with the UCS Manager software, so if you are interested, take a few minutes to watch. There is no narration, just a walk-through of the UCS Manager. I also recommend you view in full screen:

So let me know what you think. Is there anything I’m missing – anything else you would like to see on this? Let me know in the comments below. Make sure to keep an eye on this site as I’ll be posting information on Dell, HP and IBM in the following weeks.

“Oo-rah” (that’s for @jonisick)

Instead of “the UCS Manager is limited to each UCS chassis”, I think you mean “the UCS Manager is limited to each UCS Manager instance”. A nice thing about UCS architecture is cross-chassis management capability for servers plugged into the same Interconnect without management stacking connects between chassis.

And to be fair (or “not bleak” in Joe Onisick lingo), CLI scripting via UCS Manager does provide some “native” (non-BladeLogic) capability for automation.

Er, that didn’t sound right either. How about “the UCS Manager is limited to one pair of fabric interconnects”.

And I forgot my disclaimer – I work for HP.

Dan – thanks for the comment. I got the phrasing for the #Cisco UCS management from a BMC presentation from last year. I think the point is that it is limited per UCS Manager session (not across all of the enterprises). Honestly, I’d welcome any links to a more detailed blog post that highlights what UCS Manager can do. I’m trying to “keep things simple” not do a technical deep dive. I’ve updated the post to reflect this. Thanks for the feedback!

Thanks for the post Kevin. I’m looking forward to hearing more information on other vendors’ blade offerings.

Do you have a link to the Cisco UCS Manager simulator or is it only available privately?

Kevin, a BMC presentation is intended to sell their product, so it highlights the points where they add value and the ‘limitations’ they fix. They’ve got a great product, but even without it, UCSM natively:

– Manages deployment of all network settings, BIOS settings, hardware addressing, and boot settings/targets. Basically, all hardware functionality and settings from the server itself to the access-layer switching.

– Provides pool, policy and template functionality, allowing a design-once, deploy-many server architecture.

– Provides a central point of firmware management for the entire compute system.

– Does this for the architectural limit of 40 chassis / 320 servers. You could argue that scaling to the max is not realistic, but in some cases it actually is. Hitting the 40-chassis max still provides 20G of bandwidth to 8 blades; that’s over 2G per blade, which is more than enough for some applications and not enough for others. Even if you only scale to 10 chassis, 80 servers with one point of management is a big deal, and if you pack those with 20 VMs each, that’s everything you need for 1600 servers.

– Is extensible through the XML API to tie into any DC management suite a customer chooses, free or otherwise, and/or can be fully scripted.
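The scaling numbers in the comment above can be reproduced with a little Python. This sketch assumes 8 blades per chassis, 10Gb links, and both fabric extenders carrying traffic. At the 40-chassis limit, each fabric extender has a single link.

```python
# Sketch of the scaling arithmetic in the comment above. Assumptions: 8 blades
# per chassis, 10Gb links, and both fabric extenders carrying traffic.
LINK_GBPS = 10
BLADES_PER_CHASSIS = 8


def gbps_per_blade(links_per_fex: int, fex_per_chassis: int = 2) -> float:
    """Chassis uplink bandwidth divided evenly across its blades."""
    return links_per_fex * fex_per_chassis * LINK_GBPS / BLADES_PER_CHASSIS


def workloads(chassis: int, vms_per_blade: int) -> int:
    """Total VMs when every blade in every chassis hosts vms_per_blade."""
    return chassis * BLADES_PER_CHASSIS * vms_per_blade


print("40 chassis:", 40 * BLADES_PER_CHASSIS, "blades,", gbps_per_blade(1), "Gb per blade")
print("10 chassis at 20 VMs per blade:", workloads(10, 20), "workloads")
```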

The management system of UCS is one of the crown jewels of the system. I’ve not had a single server admin who does day to day tactical tasks that wasn’t extremely impressed by it once they had a chance to get their hands on it and I’ve put it in the hands of hundreds of admins worldwide.

My bleak comment was in regard to the way this post states a series of facts, followed by a negative comment. It’s like a pro/con with no pros. If the Dell, IBM, and HP posts follow suit, there’s nothing wrong with that.

Kevin,

I’m not sure if anybody is interested in this, but VMware has announced that the company’s direction is squarely focused on ESXi (VMware vSphere Hypervisor) and not its bigger/older brother, ESX. In this case it is often attractive to run ESXi from an embedded USB key, which is not an option in the Cisco blade portfolio. The alternatives would be spinning disks or boot-from-SAN. My complaint with boot-from-SAN is that it is a bit more complex, and it may require additional licensing from your SAN vendor (think IBM DSx000 partition licensing).

Just food for thought,

John

John,

You’re correct: Cisco blades do not have an embedded USB or flash key from which to boot ESXi, whereas other blade options do. In the past this has been a very attractive option for the lightweight ESXi kernel. VMware will also be moving forward with only ESXi deployments, as you state.

Two new options are available for ESXi boot, one of which is boot from SAN. This option works well but may be more complex than some customers prefer. The other option available as of 4.1 is PXE boot. This will prove to be a very popular option moving forward because it allows network boot of diskless blades with no OS state on the blade itself. It also allows central management of a single OS image for security, stability, and simplicity. Lastly it removes the SSD/USB failure point from the blade. PXE is supported on UCS.

Like the idea for the series. I hope you will go into more depth on the servers from Dell, HP and IBM, which cover a much wider spectrum than the blade offerings from Cisco.

I noticed two errors:

* You say “4 hot-swap blowers” in the text. Should obviously be eight.

* In the server chart the B200 M2 and B250 M2 should have 12 cores in the “Max Cores”-column instead of 16.

Keep up the good work.

Perhaps you should include a “Max Threads”-column in the server chart as well since it’s quite relevant with the new and much improved HT from Intel.

Kevin, you state that a second FEX can be added “for redundant pathways” but in fact a second FEX can be added for extra BANDWIDTH as well as redundancy. The fabric extenders (FEXes) are active/active, not active/passive as the Tolly Group would have you believe. That provides 80Gb of bandwidth per chassis or 10Gb/blade when 8 blades are installed.
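The difference between the two readings of fabric extender behaviour is easy to quantify. This sketch uses the figures from the post (4 x 10Gb uplinks per fabric extender, 2 fabric extenders, 8 blades) and simply counts how many fabric extenders are forwarding traffic.

```python
# Sketch contrasting the two readings of FEX behaviour discussed above.
# Figures: 4 x 10Gb uplinks per FEX, 2 FEXes, 8 blades per chassis.
UPLINKS_PER_FEX = 4
LINK_GBPS = 10
BLADES = 8


def chassis_gbps(active_fexes: int, uplinks_per_fex: int = UPLINKS_PER_FEX) -> int:
    """Uplink bandwidth available when active_fexes are forwarding traffic."""
    return active_fexes * uplinks_per_fex * LINK_GBPS


for label, active in (("active/passive", 1), ("active/active", 2)):
    total = chassis_gbps(active)
    print(f"{label}: {total} Gb per chassis, {total / BLADES} Gb per blade")
```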

Disclaimer: I work for Cisco but my opinions are my own.

The link to the #Cisco UCS Manager simulator is at the end of the video at the bottom of the post. Thanks for reading!

PXE boot on #Cisco UCS for #VMware ESXi is a great idea. Thanks for bringing that up, Joe. I didn’t realize PXE boot was possible with vSphere 4.1. Thanks for supporting this site, and for all of your comments. Hope this wasn’t too bleak ;)

Guys – be careful there. PXE boot ESXi for INSTALL is supported. PXE boot of ESXi as a stateless boot device still is NOT supported at this time.
