A white paper released today by Dell shows that the Dell M1000e blade chassis infrastructure offers significant power savings compared to equivalent HP and IBM blade environments. The results were also audited by an outside firm, Enterprise Management Associates (http://www.enterprisemanagement.com). After the controversy over the Tolly Group report comparing HP and Cisco, I decided to take the time to investigate these findings more closely.

The Dell Technical White Paper titled “Power Efficiency Comparison of Enterprise-Class Blade Servers and Enclosures” was written by the Dell Server Performance Analysis Team. The team's job is to run competitive comparisons for internal use; however, Dell decided to publish the findings of this report externally because the results were unexpected. The team used the industry-standard SPECpower_ssj2008 benchmark to compare the power draw and performance per watt of blade solutions from Dell, HP and IBM. SPECpower_ssj2008, created by the Standard Performance Evaluation Corporation (SPEC), is the first industry-standard benchmark that evaluates the power and performance characteristics of volume server class and multi-node class computers. According to the white paper, the benchmark was chosen to establish a level playing field for examining the true power efficiency of the Tier 1 blade server providers using identical configurations.
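For readers who haven't run SPECpower_ssj2008: as I understand SPEC's published run rules, the benchmark steps the workload through ten target loads (100% down to 10%) and then an "active idle" period, and the headline score is total throughput divided by total average power across all of those measurement points, so a higher ssj_ops/watt is better. Here is a minimal Python sketch of that roll-up; the function name and the sample numbers are placeholders of my own, not values from the white paper.

```python
def overall_ssj_ops_per_watt(ssj_ops, avg_watts, active_idle_watts):
    """Overall SPECpower_ssj2008-style score: total ssj_ops / total average watts.

    ssj_ops and avg_watts hold one entry per target load (normally ten:
    100%, 90%, ..., 10%). Active idle is measured too, but it does no work,
    so it adds to the power total while contributing zero ssj_ops.
    """
    if len(ssj_ops) != len(avg_watts):
        raise ValueError("need exactly one power reading per target load")
    total_ops = sum(ssj_ops)
    total_watts = sum(avg_watts) + active_idle_watts
    return total_ops / total_watts


if __name__ == "__main__":
    # Placeholder measurements for an imaginary server, NOT data from the white paper.
    ops = [300_000 - 30_000 * i for i in range(10)]  # 100% .. 10% target load
    watts = [260.0 - 14.0 * i for i in range(10)]    # average power at each load
    print(round(overall_ssj_ops_per_watt(ops, watts, active_idle_watts=95.0), 1))
```

Because active idle adds power but no throughput, a system with a high idle draw is penalized in the overall score, which is one reason the idle results matter as much as the loaded ones.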

What Was Tested

Each blade chassis was fully populated with blade servers running a pair of Intel Xeon X5670 CPUs. The Dell configuration used 16 x M610 blade servers, the HP configuration used 16 x BL460c G6 blade servers, and the IBM configuration used 14 x HS22 blade servers, since the IBM BladeCenter H holds a maximum of 14 servers. Each server was configured with 6 x 4GB DIMMs (24GB total) and 2 x 73GB 15k SAS drives, running Microsoft Windows Server 2008 R2 Enterprise. Each chassis used the maximum number of power supplies (Dell: 6, HP: 6, IBM: 4) and was populated with a pair of Ethernet pass-thru modules in the first two I/O bays.
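To keep the three setups straight, here is the tested hardware expressed as a small Python data structure, with per-chassis totals derived from the per-blade figures above. The class and field names are my own invention for illustration; the counts come from the configuration described in this post.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChassisConfig:
    """One tested chassis. Class and field names are hypothetical, for illustration."""
    vendor: str
    chassis: str
    blade: str
    blades: int
    power_supplies: int
    cpus_per_blade: int = 2   # dual Intel Xeon X5670
    dimms_per_blade: int = 6  # 6 x 4GB DIMMs per blade
    gb_per_dimm: int = 4

    def total_cpus(self) -> int:
        return self.blades * self.cpus_per_blade

    def total_memory_gb(self) -> int:
        return self.blades * self.dimms_per_blade * self.gb_per_dimm


CONFIGS = [
    ChassisConfig("Dell", "M1000e", "M610", blades=16, power_supplies=6),
    ChassisConfig("HP", "C7000", "BL460c G6", blades=16, power_supplies=6),
    ChassisConfig("IBM", "BladeCenter H", "HS22", blades=14, power_supplies=4),
]

if __name__ == "__main__":
    for c in CONFIGS:
        print(f"{c.vendor} {c.chassis}: {c.blades} x {c.blade}, "
              f"{c.total_cpus()} CPUs, {c.total_memory_gb()} GB RAM, "
              f"{c.power_supplies} PSUs")
```

The totals make the one asymmetry in the test explicit: the IBM chassis holds 14 blades rather than 16, so whole-chassis power figures compare 28 CPUs and 336GB of RAM against 32 CPUs and 384GB.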

Summary of the Findings

I don’t want to rewrite the 48-page technical white paper, so I’ll summarize the results.

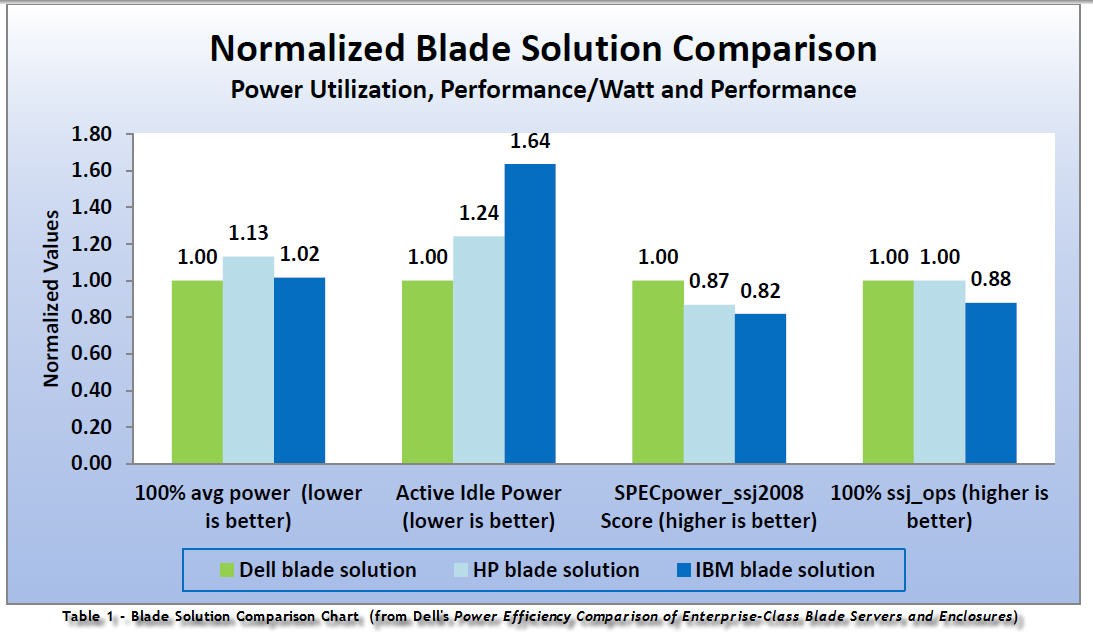

- While running the CPUs at 40 – 60% utilization, Dell’s chassis used 13 – 17% less power than the HP C7000 with 16 x BL460c G6 servers

- While running the CPUs at 40 – 60% utilization, Dell’s chassis used 19 – 20% less power than the IBM BladeCenter H with 14 x HS22s

- At idle, Dell’s chassis used 24% less power than the HP C7000 with 16 x BL460c G6 servers

- At idle, Dell’s chassis used 63.6% less power than the IBM BladeCenter H with 14 x HS22s
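A note on reading those percentages: "X% less power" is measured against the competitor's draw, so it is not the same as the competitor using X% more. The short Python sketch below makes the arithmetic explicit; the function names are my own, and the wattages in the example are hypothetical round numbers, not measurements from the white paper.

```python
def percent_less(dell_watts: float, other_watts: float) -> float:
    """Dell's saving expressed as a percentage of the competitor's draw."""
    return (other_watts - dell_watts) / other_watts * 100.0


def percent_more(dell_watts: float, other_watts: float) -> float:
    """The competitor's extra draw expressed as a percentage of Dell's draw."""
    return (other_watts - dell_watts) / dell_watts * 100.0


def competitor_multiple(percent_saved: float) -> float:
    """How many times Dell's draw the competitor uses, given 'X% less'."""
    return 1.0 / (1.0 - percent_saved / 100.0)


if __name__ == "__main__":
    # Hypothetical idle readings chosen to reproduce a 24% saving.
    dell, hp = 760.0, 1000.0
    print(f"{percent_less(dell, hp):.1f}% less")  # 24.0% less
    print(f"{percent_more(dell, hp):.1f}% more")  # 31.6% more
    # A 63.6% saving means the competitor draws roughly 2.75x Dell's power.
    print(f"{competitor_multiple(63.6):.2f}x")    # 2.75x
```

So the 63.6% idle figure versus IBM implies that, at idle, the BladeCenter H drew a bit under three times what the M1000e drew.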

Following a review of the findings, I had the opportunity to interview Robert Bradfield, Dell’s Senior Product Manager for Blade Marketing, and ask him some questions about the study.

Question – “Why wasn’t Cisco’s UCS included in this test?”

Answer – The Dell testing team didn’t have the right servers. They do have a Cisco UCS, but they don’t have the UCS blade servers that would be equivalent to the BL460c G6 or the HS22.

Question – “Why did you use pass-thru modules for the design, and why only two?”

Answer – Dell wanted to create a level playing field. Each vendor has similar network switches, but there are differences, and Dell did not want those differences to affect the testing at all, so they chose pass-thru modules. The same reasoning explains why they used only two: with Dell having 6 I/O bays, HP having 8 and IBM having 8, it would have been challenging to create an equal environment for measuring power accurately.

Question – “How long did it take to run these tests?”

Answer – It took a few weeks. Dell placed all 3 blade chassis side by side but ran the tests on only one chassis at a time, so that the test in progress had the team’s full focus. In fact, the two chassis not being tested were powered off entirely, because the testing team wanted to ensure there were no thermal variations.

Question – “Were the systems on a bench, or did you have them racked?”

Answer – All 3 chassis were racked, each in its own rack. They were properly cooled, with perforated doors and vented floor panels. In fact, the temperatures never varied by more than 1 degree between the enclosures.

Question – “Why do you think the Dell design offered the lowest power in these tests?”

Answer – Three factors contribute to the Dell M1000e chassis drawing less power than the HP and IBM offerings. The first is the 2700W Platinum certified power supply. It is more efficient than previous power supplies and now ships as the standard power supply in the M1000e chassis. However, truth be told, the difference between “Platinum” certified and “Gold” certified is only 2 – 3%, so it accounts for very little of the power savings seen in the white paper. The second is the technology in the Dell M1000e fans. Dell has patent-pending fan control algorithms that help improve fan efficiency. From what I understand, this technology helps ensure that the fans never rev up to “high”. (If you are interested in reading about the patent-pending fan control technology, pour yourself a cup of coffee and read all about it at the U.S. Patent Office website, application number 20100087965.) Another interesting fact is that the fans used in the Dell M1000e are balanced by the manufacturer to ensure proper rotation. The process is similar to the way your car tires are balanced: there are one or two small weights on each fan. (You can verify this if you own a Dell M1000e.) Ultimately, though, it comes down to the overall architecture of the Dell M1000e chassis, which is designed for efficient laminar airflow. In fact, per Robert Bradfield, the power saved in one year by the Dell M1000e as tested in this white paper, compared with the IBM BladeCenter H, would be enough to power a single U.S. home for a year.
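Two of the numbers in that answer are easy to sanity-check with back-of-the-envelope arithmetic: how much a Gold-to-Platinum power supply upgrade can save, and how large a continuous power saving has to be to match a household's annual consumption. The Python sketch below assumes a fixed DC load; the 4000W load, the 92% and 94% efficiencies, and the 10,000 kWh household figure are placeholders I picked for the example, not numbers from Dell or from any certification program.

```python
HOURS_PER_YEAR = 24 * 365  # 8760 hours; ignores leap years


def wall_watts(dc_load_watts: float, efficiency: float) -> float:
    """AC power drawn at the wall to deliver dc_load_watts at a given PSU efficiency."""
    return dc_load_watts / efficiency


def psu_saving_fraction(old_eff: float, new_eff: float) -> float:
    """Fractional drop in wall power from a more efficient PSU (independent of load)."""
    return 1.0 - old_eff / new_eff


def annual_kwh(delta_watts: float) -> float:
    """Energy saved in a year by a constant reduction of delta_watts."""
    return delta_watts * HOURS_PER_YEAR / 1000.0


def break_even_watts(household_kwh_per_year: float) -> float:
    """Continuous saving needed to equal one household's yearly consumption."""
    return household_kwh_per_year * 1000.0 / HOURS_PER_YEAR


if __name__ == "__main__":
    load = 4000.0                 # hypothetical DC load for a full chassis
    gold, platinum = 0.92, 0.94   # illustrative efficiencies only
    g, p = wall_watts(load, gold), wall_watts(load, platinum)
    print(f"{g:.0f} W vs {p:.0f} W at the wall")
    print(f"{psu_saving_fraction(gold, platinum):.1%} less wall power")
    print(f"{annual_kwh(g - p):.0f} kWh saved per year")
    # Placeholder household figure; look up a current number before relying on it.
    print(f"{break_even_watts(10_000):.0f} W saved around the clock")
```

Whatever efficiencies you plug in, the saving works out to 1 - old/new, so a two-point efficiency gain in the 90% range trims wall power by only about 2%, which fits the point that the power supplies alone cannot explain the double-digit differences.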

I encourage you, the reader, to review this Technical White Paper (Power Efficiency Comparison of Enterprise-Class Blade Servers and Enclosures) for yourself and form your own opinion. I looked for things like the use of solid state drives or power-efficient memory DIMMs, but the results appear to be legitimate. However, I know there will be critics, so voice your thoughts in the comments below. I promise you Dell is watching to see what you think…

Great post. I am using the blade enclosure and I am very happy that I chose it! I will definitely read this white paper.


I like that Dell used a third-party benchmark geared toward power, and I like that Dell shares lots of info on configs & setup. Kudos to the paper authors (listed as John Beckett and Robert Bradfield).

I generally don’t like SPECpower for blades, and these results partly demonstrate why. If you compare the numbers Dell reports here for the M1000e versus published PowerEdge R610 / R710 results, then this paper from Dell proves that Dell PowerEdge rack servers are around 15% more power efficient than equivalent Dell blade servers. I don’t think that’s generally true. I attribute this nonsensical result to biases in the benchmark that skew against multi-node blades (and this skew would have different impacts on Dell, IBM, and HP results).

I’ll voice two beefs about the specific configs tested, hoping they spark more disclosures:

* There are some holes in the claim of a like-for-like hardware configuration, and older HP hardware was used. For example, different DIMM models were used (which can actually have a big impact), older HP firmware was used, and HP’s Platinum Efficiency power supplies were not used.

* Some key power efficiency settings were left off on the HP gear (or if they were enabled, they weren’t disclosed). Some examples on the server side: “Persistent Keyboard and Mouse” was enabled in iLO on the servers, and Wake-on-LAN was likely left enabled.

One other oddity: The reported ambient temperature was lower when the Dell equipment was tested versus the HP and IBM equipment.

Ack, I forgot the disclosure: I work for HP. Sorry, Kevin.

RE: #HP vs #Dell – Power Testing on the chassis. Thanks for the positive yet constructive comments, Dan, but I don’t see where the spec.org benchmark shows #dell rack servers as being more power efficient. In fact, I see the equivalent R610 showing a SPECpower_ssj2008 = 2,938 vs 2,530 with the M610 (lower is better). You can see the details of that here: http://www.spec.org/power_ssj2008/results/res2010q2/power_ssj2008-20100504-00258.txt

As for the like-for-like comments, I don’t see where different DIMMs were used. Page 25 of the white paper shows the same type, speed, timing/latency and number of RAM modules across each server. Dell and IBM used Samsung DIMMs, whereas HP’s were Micron. My hands-on experience leads me to believe that this was OEM memory, meaning they used IBM and HP part-number memory DIMMs and these types are what showed up. (This is my speculation, since I wasn’t involved in the testing.)

Regarding the statement of firmware – that’s a point that I’ll have to refer to HP on. I’m glad you bring it up, as I’m looking for holes as well.

The point about the testing not using the HP Platinum efficiency power supplies came up during my interview with Dell. The reason was that HP wasn’t shipping those power supplies at the time of testing; however, Dell DID use HP’s Gold certified power supplies. My understanding is that the difference between “Gold certified” and “Platinum certified” power supplies is about 2 – 3% in efficiency. That does not account for the double-digit difference between HP’s and Dell’s results shown in the white paper.

Regarding the ambient temperatures for each chassis, Dell told me: “In terms of the ambient temperature for the benchmark tests, the range is between 22.9C and 24.9C” – Andre Fuochi (Dell Public Relations).

Great comments, Dan. I hope to hear from IBM and Cisco as well.

Glad to hear the #Dell chassis is working for you. As a user, do you agree it is as power efficient as the report shows? Thanks for reading!


Hi Daniel,

Thanks for the constructive review comments. Let me address your concerns:

- SPECpower_ssj2008 is just as applicable to multi-node results as to single-node results. HP, in fact, has published 11 multi-node SPECpower results, including 16- and 32-blade configurations in the C7000 enclosure, the same model tested in this study. Our 16-blade M610/M1000e published SPEC result (3094 ssj_ops/watt) is actually slightly more efficient than comparable 1U and 2U Dell rack servers (3004 and 3034 respectively).

- Regarding memory differences: the quantity, rank, CAS latency, and frequency were identical across all three solutions. We felt obligated to use the DIMMs shipped to us by HP and IBM respectively, since different OEMs often use different suppliers.

- As for firmware and power supply components, the latest revisions of both were used when the hardware was purchased/assembled for testing. As Kevin said, the HP chassis contained Gold efficiency power supplies, because that was the highest efficiency available for purchase at the time.

- The power efficiency settings for Dell, HP, and IBM were determined by examining their respective SPECpower publications on http://www.spec.org. Neither of the two settings you mentioned was changed in the ProLiant BL-series SPEC publications, so they were not modified for this study.

The ambient temperature variance was minimal (< 1 deg C difference between Dell and HP), so this does not have a material impact on the results.

Thanks again; I appreciate the scrutiny, and worked hard to ensure as close to an "apples-to-apples" comparison as possible.

John Beckett

Dell Inc.

John, thanks for replying. I stand corrected on my 15% number; I hadn’t seen Dell’s new M610 results on spec.org until you pointed it out.

My issue with the DIMMs is that variability within the same DIMM architecture (say, vendor-to-vendor) will affect results. I’ve seen that the Samsung DIMMs and the Hitachi drive used here are (coincidentally) better with respect to power in this benchmark than the others.

I believe that combining these “small”, “minor” and “negligible” differences, or simply picking a different config, will yield notably different results. However I acknowledge that’s up to HP (/IBM) to demonstrate!

My general unease with SPECpower for blades remains. The negligible difference between the posted M610/M1000e and PE710 results is at odds with claims that blade servers are typically more power efficient than ‘equivalent’ stand-alone servers.

Hey Kevin. I was able to track down a few thoughts on this lab test from Michael S. Talplacido, WW Product Manager, IBM BladeCenter.

“Based on the lab test conducted by Dell, the testing was done to examine, on a level playing field, the true power efficiency of Dell, HP, & IBM.

However, the test has significant flaws in the selection of configuration components that skewed the results in favor of Dell. While IBM does not contest the methodology used, the test missed important information reflecting updates to the IBM chassis design, such as the upgrade of the BladeCenter H’s PSU from 2900W to 2980W with 95% efficiency.

IBM’s portfolio includes a variety of data center options that meet different customer needs, and this flexibility, along with backwards-compatibility functionality, is what differentiates IBM from Dell and HP, who both have very limited selections of chassis and blade options. The Dell test selected the BladeCenter H chassis, which is designed primarily for high-performance IT environments. A more relevant chassis model that fits the I/O requirements of the testing (which used only 1G pass-through modules) is the BladeCenter E, which is more energy efficient because of its lighter architecture.

One other factor to consider is the discrepancy between HP/Dell and IBM in terms of max number of blades (16 for HP & Dell, and 14 for IBM). Because of this, the absolute maximum compute performance of an individual chassis for both HP and Dell is higher than IBM, and the per blade power consumption will be lower for HP and Dell because the usage is amortized/distributed across more blades. IBM focused on providing more chassis offerings for the datacenter, BladeCenter E and H, to allow clients to optimize for density and power efficiency based on their specific environments and application/workload needs.”

Thanks for giving us a platform for discussion!

Cliff Kinard, IBM Social Media Manager System x
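IBM's amortization argument, and Kevin's question about it, come down to how shared chassis overhead is counted. Here is a toy Python model under a deliberately simplified assumption: each chassis has a fixed shared overhead (fans, power supply losses, management and I/O modules) plus an identical draw per blade. The function names and the 600W and 250W figures are hypothetical and are not taken from any of the three vendors.

```python
def chassis_watts(shared_overhead_watts: float, watts_per_blade: float, blades: int) -> float:
    """Total chassis draw under the toy model: fixed overhead plus per-blade load."""
    return shared_overhead_watts + watts_per_blade * blades


def per_blade_watts(shared_overhead_watts: float, watts_per_blade: float, blades: int) -> float:
    """Chassis draw divided evenly across the installed blades."""
    if blades <= 0:
        raise ValueError("blades must be positive")
    return chassis_watts(shared_overhead_watts, watts_per_blade, blades) / blades


if __name__ == "__main__":
    overhead, blade = 600.0, 250.0  # hypothetical watts
    for n in (14, 16):
        total = chassis_watts(overhead, blade, n)
        each = per_blade_watts(overhead, blade, n)
        print(f"{n} blades: {total:.0f} W per chassis, {each:.1f} W per blade")
```

In this model the 14-blade chassis draws less in total but more per blade, because the same overhead is spread across fewer servers. Which comparison is "fair" therefore depends on whether you normalize per chassis or per blade.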


Glad to hear you are happy with the #Dell M1000e chassis. I appreciate the comment, and thanks for reading!

Cliff – thanks for your comments regarding #Dell’s power efficiency study. While I can’t speak on Dell’s behalf, I believe the #IBM power supply upgrades were not yet released at the time Dell performed the test. Even if they were, I wouldn’t expect the additional efficiency built into those power supplies to make up the 20% difference between the IBM and Dell offerings.

On your second point, while it is true that Dell could have used the BladeCenter E chassis, they were comparing “apples-to-apples” as closely as possible. As you know, neither Dell nor HP offers a blade chassis with only two redundant I/O fabrics, so it wouldn’t have been an equal or fair test for Dell to have used the BladeCenter E chassis.

Regarding your last point, I fail to see how fewer servers (IBM’s 14 versus Dell’s 16) would result in lower power efficiency. Can you help me understand that point?

Thanks for your feedback – your comments are very helpful in keeping my posts honest!


The absence of a Cisco server is very suspect.

The Cisco B200 is equivalent to the servers tested and has been available since UCS was introduced. What blades would they have if they didn’t have these?

They should not claim to be the most efficient in the world unless they test all vendors first; they can only say they are more efficient than IBM and HP.
