Okay, I’ll be the first to admit it: I’m a geek. I’m not an uber-geek, but I’m a geek. When things get slow, I like digging around in the U.S. patent archives for hints about what might be coming next in the blade server marketplace. My latest search uncovered a few “interesting” patents assigned to International Business Machines Corporation, better known as IBM.

Liquid Cooled Blades?

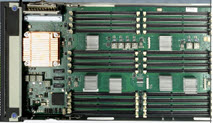

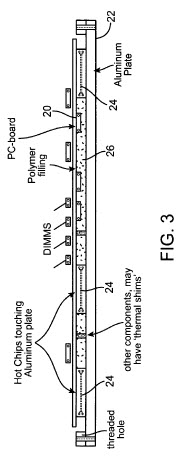

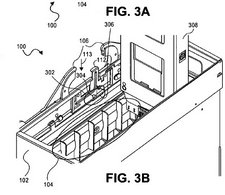

United States Patent #7552758, titled “Method for high-density packaging and cooling of high-powered compute and storage server blades” (published 6/29/2009), may be IBM’s clever way of disguising a method to liquid-cool blade servers.

According to the patent, the invention is “A system for removing heat from server blades, comprising: a server rack enclosure, the server rack enclosure enclosing: a liquid distribution manifold; a plurality of cold blades attached to the liquid distribution manifold, wherein liquid is circulated through the liquid distribution manifold and the cold blades; and at least one server blade attached to each of the cold blades, wherein the server blade includes a base portion, the base portion is a heat-conducting aluminum plate, the base portion is positioned directly onto the cold blade, and contact blocks penetrate the aluminum plate and make contact with corresponding contact points of the cold blades.”

You can read the full patent at http://www.freepatentsonline.com/7552758.html

New Storage Blade?

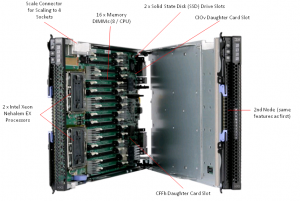

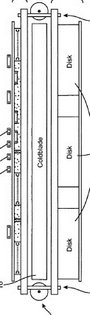

Another search turned up a patent for a “hard disk enclosure blade” (patent # 7499271), published on 3/3/2009. IBM seems to have been working on this design for a few years, since it dates back to 2006. It appears to be a “double-wide” enclosure that accepts eight disk drives.

This is an interesting idea if the goal is to use it inside a standard BladeCenter chassis: it would be like having the local storage of an IBM BladeCenter S, but in an IBM BladeCenter E or IBM BladeCenter H. On the other hand, it could be the invention behind the storage modules of the IBM BladeCenter S. You can read more about this invention at http://www.freepatentsonline.com/7499271.html.

New IBM BladeCenter Chassis?

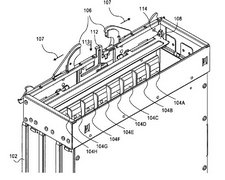

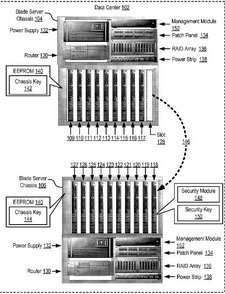

The final invention I uncovered is very mysterious to me. Titled “Securing Blade Servers in a Data Center,” patent application # 20100024001 shows a new concept from IBM encompassing a blade server chassis, a router, a patch panel, a RAID array, a power strip and blade servers, all inside a single enclosure, or “Data Center.” Note that this has not yet been granted as a patent; it’s still a patent application. Filed on 7/25/2008 and published as an application on 1/28/2010, it lists the following abstract: “Securing blade servers in a data center, the data center including a plurality of blade servers installed in a plurality of blade server chassis, the blade servers and chassis connected for data communications to a management module, each blade server chassis including a chassis key, where securing blade servers includes: prior to enabling user-level operation of the blade server, receiving, by a security module, from the management module, a chassis key for the blade server chassis in which the blade server is installed; determining, by the security module, whether the chassis key matches a security key stored on the blade server; if the chassis key matches the security key, enabling, by the security module, user-level operation of the blade server; and if the chassis key does not match the security key, disabling, by the security module, operation of the blade server.” I’ve tried a few times to decipher what this patent is really for, but I haven’t had any luck. I encourage you to head over to http://www.freepatentsonline.com/y2010/0024001.html and take a look. If it makes sense to you, leave me a comment.
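The abstract reads like a boot-time handshake, so spelling it out as code may make it easier to parse. Here is a minimal, hypothetical Python model of the check as the abstract describes it. The `ManagementModule`, `BladeServer` and `secure_blade` names, the dictionary of chassis keys, and the use of `hmac.compare_digest` for a constant-time comparison are all assumptions made for illustration, not anything taken from IBM’s filing.

```python
import hmac
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class ManagementModule:
    """Hypothetical stand-in for the management module: maps chassis IDs to chassis keys."""
    chassis_keys: Dict[str, bytes]

    def get_chassis_key(self, chassis_id: str) -> Optional[bytes]:
        # Hand the security module the key for the chassis the blade sits in, if known.
        return self.chassis_keys.get(chassis_id)


@dataclass
class BladeServer:
    """Hypothetical blade: carries its own stored security key and knows its current chassis."""
    security_key: bytes
    chassis_id: str
    user_level_enabled: bool = False
    operational: bool = True


def secure_blade(blade: BladeServer, mm: ManagementModule) -> bool:
    """Hypothetical security-module check, run before user-level operation is enabled.

    Returns True (and enables user-level operation) if the chassis key matches the
    blade's stored security key; otherwise disables the blade and returns False.
    """
    chassis_key = mm.get_chassis_key(blade.chassis_id)
    if chassis_key is not None and hmac.compare_digest(chassis_key, blade.security_key):
        blade.user_level_enabled = True
        return True
    # Key missing or mismatched: disable operation of the blade.
    blade.user_level_enabled = False
    blade.operational = False
    return False


if __name__ == "__main__":
    mm = ManagementModule({"chassis-A": b"key-A"})
    keyed = BladeServer(security_key=b"key-A", chassis_id="chassis-A")
    moved = BladeServer(security_key=b"key-A", chassis_id="chassis-B")
    print(secure_blade(keyed, mm), secure_blade(moved, mm))
```

Read this way, a blade pulled from the chassis it was keyed to and installed in a different chassis would be refused user-level operation, so a stolen or misplaced blade would be useless elsewhere. That is only one possible reading of the abstract, though.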

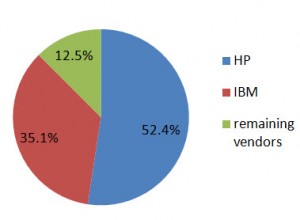

While this was nothing more than a casual attempt at finding the next big thing before it’s announced, I came away amazed at how many patents IBM holds just for blade servers. I hope to do a similar exercise for HP, Dell and Cisco in the near future, after tomorrow’s Westmere announcements.

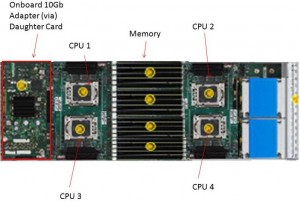

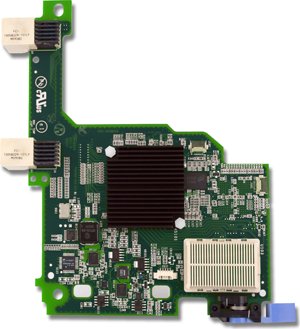

A few weeks ago, IBM and Emulex announced a new blade server adapter for the IBM BladeCenter and IBM System x line, called the “Emulex Virtual Fabric Adapter for IBM BladeCenter” (IBM part # 49Y4235). Frequent readers may recall that I had a “so what” attitude when I