As I’m talking with customers about rack or blade servers, I always start with industry trends. I make sure they understand the things to be aware of with Intel Xeon SP processors, what’s new with AMD EPYC processors and NVMe drives, and I wrap it up by discussing Storage Class Memory. If you aren’t familiar with Storage Class Memory, you’d better start learning about it, because it is a game changer.

Before I begin, let me set expectations. The goal of this post is to enlighten you, not to make you a technical expert. I urge you to go do research on Storage Class Memory, Persistent Memory and NVDIMMs with your O/S or Hypervisor of choice to get very deep tech guides on how this technology can be used. With that said, let’s dig in.

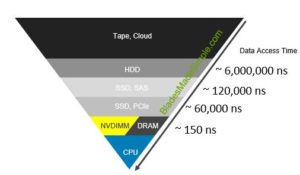

Low Latency

First – the basics. Storage Class Memory (SCM), also known as Persistent Memory, is simply traditional memory with NAND flash attached along with a power source to ensure that data is not lost in the event of a power failure or blue/purple screen crash. One of the key advantages of SCM is that it provides much lower latency than SSD or NVMe drives. In fact, NVMe drives have an average latency of 60,000 nanoseconds (half the latency of SSDs) while SCM has a latency of ~150 nanoseconds! That’s not a typo; it’s that much faster than NVMe, so it’s ideal for workloads that thrive on low latency. More on that in a minute.
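For a sense of scale, here is a quick back-of-the-envelope sketch in Python. It uses only the latency figures quoted above. The SSD value is an assumption derived from the "half of the latency of SSDs" remark, and the constant and function names are made up for illustration.

```python
# Latency figures quoted in this post, in nanoseconds.
NVME_LATENCY_NS = 60_000
SCM_LATENCY_NS = 150

# Assumption: "half of the latency of SSDs" puts SSDs at roughly twice the NVMe figure.
SSD_LATENCY_NS = 2 * NVME_LATENCY_NS


def speedup(slower_ns, faster_ns):
    """Return how many times lower the faster latency is than the slower one."""
    return slower_ns / faster_ns


print(f"SCM vs NVMe: {speedup(NVME_LATENCY_NS, SCM_LATENCY_NS):.0f}x lower latency")
print(f"SCM vs SSD:  {speedup(SSD_LATENCY_NS, SCM_LATENCY_NS):.0f}x lower latency")
```

Taking the quoted averages at face value, that works out to roughly 400 times lower latency than NVMe. Real-world numbers will vary by device and workload.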

Application Adoption

Some of you may be thinking “this isn’t new” and you’d be right. Storage Class Memory isn’t new. It’s been available for a few years in the form of NVDIMMs (Non-Volatile Dual Inline Memory Modules); however, the software ecosystem was not available to support it. That changed when Red Hat and Microsoft started supporting it in RHEL 7.3 and Windows Server 2016, and most recently VMware announced support for Persistent Memory with vSphere 6.7. Beyond the O/S and hypervisor layer, SCM can work directly with applications, as demonstrated by Microsoft with SQL Server 2016. Now that we have software vendors starting to come on board, this technology is almost ready for prime time. There’s one more thing to take into consideration.

Capacity

(updated 6/27/18 at 6:30 pm Eastern)

Currently, NVDIMMs are offered in 16GB increments by memory manufacturers. Even if the blade server vendors allowed half of the memory slots (12) to be used in a two-processor Intel Xeon SP system, that would only give 192GB of capacity. This is not enough to hold entire databases, but it is ideal for logs, cache or metadata.

So why is this technology a big deal? Answer: Intel. The Intel Xeon SP CPU will get a refresh (version 2) sometime next year. Along with that, we will see the introduction of the Intel Optane DIMM, Intel’s proprietary version of Storage Class Memory (note you may hear it called Apache Pass, or AP, which was a previous code name). Optane DIMMs are rumored to come in capacities starting at 128GB and ranging up to 512GB. This means that those systems with 12 slots would support a minimum of 12 x 128GB (1.5TB – thanks Howard Marks for catching my previous math error) of SCM.

Now we have a storage footprint that could host entire databases or even virtual machine farms. This opens up a whole new set of considerations (imagine vMotioning VMs out of persistent memory and the NIC bandwidth required…). With the low latency and large capacities, Storage Class Memory will become a new storage target for architects to utilize, changing the way we do business in the near future. But there are some gotchas.
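The capacity math above is easy to check with a short Python sketch. The slot count and module sizes come from this post (the Optane sizes are rumored). The 10 Gb/s and 25 Gb/s link speeds and the function names are assumptions added for illustration, and the transfer estimate ignores protocol overhead and mixes binary and decimal units, so treat it as a rough order of magnitude only.

```python
SLOTS = 12  # half of the DIMM slots in a two-processor Xeon SP system, per the post


def total_gb(slots, module_gb):
    """Total SCM capacity in GB for a given number of slots and module size."""
    return slots * module_gb


def transfer_seconds(capacity_gb, link_gbps):
    """Idealized seconds to move capacity_gb over a link of link_gbps gigabits/s.

    Assumes a fully saturated link with no protocol overhead (hypothetical).
    """
    return capacity_gb * 8 / link_gbps


modules = [
    ("16GB NVDIMM", 16),
    ("128GB Optane DIMM (rumored)", 128),
    ("512GB Optane DIMM (rumored)", 512),
]
for label, module_gb in modules:
    gb = total_gb(SLOTS, module_gb)
    print(f"{SLOTS} x {label}: {gb} GB ({gb / 1024:g} TB)")

# Hypothetical NIC speeds, not taken from the post.
for gbps in (10, 25):
    minutes = transfer_seconds(total_gb(SLOTS, 128), gbps) / 60
    print(f"Moving 12 x 128GB over {gbps} Gb/s: roughly {minutes:.0f} minutes")
```

Twelve 16GB modules give the 192GB mentioned above, and twelve 128GB modules give 1536GB, which is the 1.5TB figure. Even under ideal conditions, draining that much persistent memory across a single link takes minutes, which is why the NIC bandwidth question matters.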

Gotchas

When I first heard about SCM, I was pumped (still am) but shortly after, I started realizing it is a technology that is still in its infancy. First – how do you protect it? With SSDs, we have RAID, so how do we ensure the data inside of the SCM space doesn’t get lost? Answer – the same way we do with NVMe. As you know, NVMe drives don’t use RAID controllers, but connect directly into the PCIe infrastructure, so we have to rely on software RAID to protect them. I imagine we’ll have to do the same with SCM. Honestly, I haven’t researched this enough, but I would have to imagine Red Hat and Microsoft have protection options in their use cases.

The O/S providers may offer a way to protect the data from being lost, but I haven’t seen any way to prevent the data from walking away. With SCM using NAND flash to hold that data in the event of a power loss, there is a security risk that someone could find a way to capture that data and use it maliciously. The answer to this concern would be encryption, but we don’t yet have that option with SCM. Keeping in mind SCM is still in the early adoption phase with most organizations, I imagine we’ll see hardware vendors provide some sort of encryption in the future, but for now, be aware this is a concern and something to plan for.

Lastly, in most cases, using NVDIMMs requires a regular DIMM (no LRDIMMs) in the adjacent memory slot. Of course, check with your server manufacturer on this, but regardless, that could impact your server architecture design, so make sure you plan accordingly.

Summary

In conclusion, this is a very exciting technology that impacts server, storage and network teams across organizations. It is clear SCM technology is still in its infancy, but it will continue to grow and will become a mainstream technology in server builds in upcoming years. Make sure you invest in a blade server vendor that has the ability to support Storage Class Memory and do your homework to see how SCM can help your organization.

Kevin Houston is the founder and Editor-in-Chief of BladesMadeSimple.com. He has over 21 years of experience in the x86 server marketplace. Since 1997 Kevin has worked at several resellers in the Atlanta area, and has a vast array of competitive x86 server knowledge and certifications as well as an in-depth understanding of VMware and Citrix virtualization. Kevin has been at Dell EMC for 7 years as the Chief Technical Architect supporting the Central Enterprise market.
