Chassis Overview

Dell’s blade platform relies on the M1000e Blade Chassis. Like Cisco, Dell offers a single chassis design for its blade portfolio. The chassis is 10 rack units tall (17.5″) and holds up to 16 half height or 8 full height servers, or any combination of the two. The front of the chassis provides two USB keyboard/mouse connections and one video connection (these ports require the optional integrated Avocent iKVM switch) for local “crash cart” console connections that can be switched between blades.

Also standard is an LCD control panel with an interactive graphical display that offers an initial configuration wizard and provides local server blade, enclosure, and module information and troubleshooting. The chassis also has a power button that lets the user shut off power to the entire enclosure.

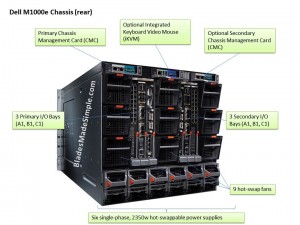

Taking a look at the rear of the chassis, we see that the Dell M1000e holds up to 6 redundant, hot plug power supplies (2350 – 2700 watts each) and 9 redundant fan modules. The 2700 watt power supplies are Dell’s newest addition to the chassis and, according to a recent study performed by Dell, offer higher efficiency.
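
To make those supply counts concrete, here is a minimal Python sketch of a power budget. It assumes usable capacity is simply the number of non-reserve supplies times the rated output, and the policy labels ("grid", "n+1", "none") are hypothetical names for this example only. Real budgets are calculated by the CMC and also depend on input voltage and efficiency.

```python
MAX_PSUS = 6  # the M1000e holds up to six power supplies


def usable_power_watts(psu_count: int, psu_watts: int, policy: str) -> int:
    """Estimate usable power for a PSU configuration (simplified model).

    policy (hypothetical labels for this sketch):
      "grid" - half the supplies are held in reserve, e.g. 3+3
      "n+1"  - one supply is held in reserve
      "none" - every supply contributes
    """
    if not 1 <= psu_count <= MAX_PSUS:
        raise ValueError(f"psu_count must be between 1 and {MAX_PSUS}")
    if policy == "grid":
        if psu_count % 2:
            raise ValueError("grid redundancy needs an even number of supplies")
        active = psu_count // 2
    elif policy == "n+1":
        active = psu_count - 1
    elif policy == "none":
        active = psu_count
    else:
        raise ValueError(f"unknown policy: {policy!r}")
    return active * psu_watts


print(usable_power_watts(6, 2700, "grid"))  # 3 active supplies
print(usable_power_watts(6, 2350, "n+1"))   # 5 active supplies
```

For example, six 2700 watt supplies in a 3+3 configuration leave about 8100 W usable, while the same six supplies with N+1 redundancy leave about 13500 W.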

The M1000e allows up to six I/O modules to be installed, providing three redundant fabrics. I’ll cover the I/O module offerings later in this post.

The chassis comes standard with a Chassis Management Controller (CMC) that provides a single management window for inventory, configuration, monitoring and alerting for the chassis and all of its components. There is also a slot for an optional secondary CMC for redundancy. The CMC can be connected to another Dell chassis’ CMC, consolidating management connections and reducing the number of ports consumed on external switches.

Between the primary and secondary CMC slots is a slot for the optional Integrated KVM (iKVM) module. The iKVM provides local keyboard, video and mouse connectivity into the blade chassis and its blade servers. The iKVM also contains a dedicated RJ45 port with an Analog Console Interface (ACI) that is compatible with most Avocent switches, letting a single port on an external Avocent KVM switch serve all 16 blade servers within a Dell M1000e chassis.

Server Review

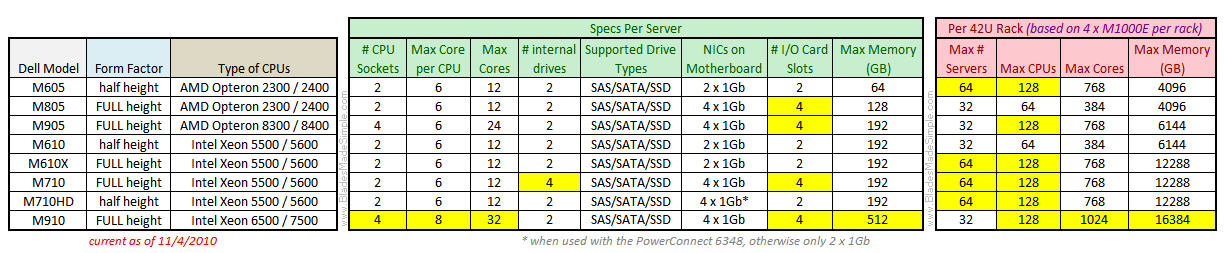

Dell offers a full range of servers in half height and full height form factors, with a full height server taking up 2 half height server bays. Dell offers both AMD- and Intel-based blades in its portfolio: AMD-based model numbers end in “5” and Intel-based model numbers end in “0”.
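
The bay arithmetic and the naming convention can both be captured in a few lines of Python. This is a sketch built only on the rules stated above: a full height blade counts as two of the 16 half height bays, and the last digit of the model number indicates the CPU vendor. The helper names are hypothetical, and letter suffixes such as the “x” in M610x or “HD” in M710HD are ignored.

```python
import re

TOTAL_HALF_HEIGHT_BAYS = 16  # 8 columns, each holding 2 half height blades


def fits_in_chassis(half_height: int, full_height: int) -> bool:
    """A full height blade consumes two half height bays (one full column)."""
    if half_height < 0 or full_height < 0:
        raise ValueError("blade counts must be non-negative")
    return half_height + 2 * full_height <= TOTAL_HALF_HEIGHT_BAYS


def cpu_vendor(model: str) -> str:
    """Apply the naming convention: ending in 5 means AMD, ending in 0 means Intel."""
    match = re.fullmatch(r"M(\d+)([A-Za-z]*)", model.strip(), flags=re.IGNORECASE)
    if match is None:
        raise ValueError(f"unrecognised model name: {model!r}")
    last_digit = match.group(1)[-1]  # ignore any letter suffix
    if last_digit == "5":
        return "AMD"
    if last_digit == "0":
        return "Intel"
    return "unknown"


print(fits_in_chassis(6, 5))   # 6 + 2 * 5 = 16 bays
print(cpu_vendor("M710HD"))
```

Because each full height blade fills an entire column of two half height bays, checking `half + 2 * full <= 16` is enough to tell whether a given mix fits.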

Looking across the entire spectrum of blade server offerings, the M910 offers the most, with the highest CPU count, core count and memory capacity in the Dell blade server family. This blade server also currently holds the #1 VMware VMmark score for a 16 core blade server, although I’m sure it will get trumped soon. An advantage that I think Dell blade servers have is that they provide 4 I/O card slots on their full height servers. This is a big deal to me because it allows redundant mezzanine (daughter) cards for each fabric (for more on I/O connectivity see below). While that means twice the mezzanine card cost compared to their competitors, it can provide peace of mind for users looking for high redundancy. At the same time, having 4 I/O card slots gives users the ability to gain more I/O ports per server.

In the list of Dell blade server offerings are a few specialized servers – the M610x and the M710HD. The M610x offers local PCI Express expansion on the server (not via an expansion blade), while the M710HD offers greater memory density along with a unique modular LAN-on-Motherboard expansion card called the “Network Daughter Card”. I’ve written about these servers in a previous post, so I encourage you to take a few minutes to read that post if you are interested.

I/O Card Options

Dell offers a wide variety of daughter cards, aka “mezzanine cards”, for their blade servers. Let’s take a quick look at what is offered across the three major I/O categories – Ethernet, Fibre and Infiniband.

Ethernet Cards

- Broadcom NetXtreme II 5709 Dual Port Ethernet Mezzanine Card with TOE and iSCSI Offload

- Broadcom NetXtreme II 5709 Quad Port Ethernet Mezzanine Card

- Intel Gigabit ET Quad Port Mezzanine Card with Virtualization technology and iSCSI Acceleration

- Broadcom NetXtreme II 57711 Dual Port 10Gb Ethernet Mezzanine Card with TOE and iSCSI Offload

- Intel Ethernet X520 10Gb Dual Port –x/k Mezzanine Card

- Mellanox ConnectX-2 VPI Dual Port 10Gb Low Latency Ethernet -x/k Mezzanine Card

- Emulex OCM10102FM 10 Gb Fibre Channel over Ethernet Mezz Card

- Qlogic QME8142 10Gb Fibre Channel over Ethernet Adapter

Fibre Cards

- Emulex LPe1105-M 4Gb Fibre Channel I/O Card

- QLogic QME2472 4Gb Fibre Channel I/O Card

- Emulex LPe1205-M 8Gb Fibre Channel I/O Card

- QLogic QME2572 8Gb Fibre Channel I/O Card

Infiniband Cards

- Mellanox ConnectX-2 Dual-Port Quad Data Rate and Dual Data Rate (QDR & DDR) InfiniBand Host Channel Adapters (HCAs)

- Mellanox ConnectX-2 VPI Dual Port 10Gb Low Latency Ethernet -x/k Mezzanine Card

A key point to realize is that each mezzanine card requires an I/O module to connect to. Each card contains at least two ports: one goes to the I/O module in one bay and the other goes to the I/O module in the other bay of the same fabric. (More on this below.) Certain mezzanine cards work only with certain switch modules, so make sure to review the details of each card to understand which switch is compatible with the I/O card you want to use.

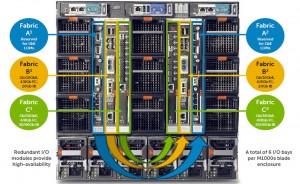

Chassis I/O Switch Options

One of the most challenging components of any blade server architecture is understanding how the I/O modules, or switches, work within a blade infrastructure. The concept is quite simple: for an I/O port on a blade server to get outside of the chassis into the network or storage fabric, there must be a module in the chassis that matches that specific card. It is important to understand that each I/O port is hardwired inside the M1000e chassis to an I/O bay. On two port cards, port 0 goes to I/O Module Bay 1 and port 1 goes to I/O Module Bay 2. On four port cards, the even-numbered ports (0 and 2) go to I/O Module Bay 1 and the odd-numbered ports (1 and 3) go to I/O Module Bay 2. This is an important point to understand. If you have a dual port card but only put an I/O module in one of the two I/O bays, only 1 of the 2 ports on the card will be lit up AND you have no redundant path, so it’s always best practice to put I/O modules in both bays of the I/O fabric.
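
The wiring rule above is simple enough to express directly. This Python sketch assumes only what is described here: within a fabric, even-numbered card ports go to bay 1 and odd-numbered ports go to bay 2. The function names are illustrative and are not part of any Dell tool.

```python
def bay_for_port(port: int) -> int:
    """Even-numbered ports land in I/O bay 1 of the fabric, odd-numbered in bay 2."""
    if port < 0:
        raise ValueError("port numbers start at 0")
    return 1 if port % 2 == 0 else 2


def lit_ports(port_count: int, installed_bays: set[int]) -> list[int]:
    """Return the card ports that have an installed I/O module to connect to."""
    return [p for p in range(port_count) if bay_for_port(p) in installed_bays]


def is_redundant(installed_bays: set[int]) -> bool:
    """A fabric only has a redundant path when both of its bays are populated."""
    return {1, 2} <= installed_bays


# Dual port card with a module in bay 1 only: one port lit, no redundancy.
print(lit_ports(2, {1}), is_redundant({1}))
```

Running the same check for a quad port card with only bay 1 populated shows that ports 0 and 2 light up while ports 1 and 3 stay dark.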

For example, the NICs that reside on the blade motherboard need to have Ethernet modules in I/O bays A1 and A2.

The mezzanine cards in slots 1 and 3 need a related I/O module in I/O bays B1 and B2, so if you put an Ethernet card in Mezzanine Slot 1 and/or 3, you’d need an Ethernet module in I/O bays B1 and B2.

As you can imagine, the same applies for the mezzanine cards in slots 2 and 4, which map to C1 and C2.

The images shown above reflect Dell’s full height server offerings. If half height servers are used, only mezzanine slots 1 and 2 are connected.
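
Putting the motherboard and mezzanine mappings together, a small lookup table shows which pair of I/O bays a given card needs. This sketch encodes only the mappings described above (onboard NICs to fabric A, mezzanine slots 1 and 3 to fabric B, slots 2 and 4 to fabric C); the `required_bays` helper is hypothetical.

```python
# Card location -> fabric, per the mappings described in this post.
FABRIC_FOR_SLOT = {"LOM": "A", 1: "B", 2: "C", 3: "B", 4: "C"}


def required_bays(slot, full_height: bool = True) -> tuple[str, str]:
    """Return the pair of I/O bays a card in `slot` connects to.

    `slot` is "LOM" for the onboard NICs or a mezzanine slot number 1-4.
    """
    if not full_height and slot in (3, 4):
        raise ValueError("half height blades only have mezzanine slots 1 and 2")
    try:
        fabric = FABRIC_FOR_SLOT[slot]
    except KeyError:
        raise ValueError(f"unknown card location: {slot!r}") from None
    return (f"{fabric}1", f"{fabric}2")


for slot in ("LOM", 1, 2, 3, 4):
    print(slot, required_bays(slot))
```

On a full height blade, slots 1 and 3 both land in fabric B, which is what allows a redundant pair of mezzanine cards per fabric.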

When we review the I/O module offerings from Dell, we see there is quite a list of Ethernet, Fibre and InfiniBand devices available to work with the mezzanine cards listed above. I/O modules come in two types: switches and pass-thru modules. Dell M1000e Blade Chassis I/O switches function the same way as external switches: they provide a consolidated switched connection into a fabric. For a fully loaded blade chassis, you could use a single uplink per I/O module to connect your servers into each fabric. As with an external switch, you often have fewer uplinks than internal connections.

In comparison, a pass-thru module provides no switching, only a direct one-to-one connection from the port on the blade server to a port on an external switch. For example, if you populated the Dell M1000e with 16 servers, wanted network connectivity and redundancy for the NICs on the motherboard of each blade server, and put Ethernet pass-thru modules in I/O Bays A1 and A2, you would need 32 ports available on your external network switch fabric (16 from A1 and 16 from A2). In summary, switches = fewer cable connections to your external fabric.
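
The cabling difference between the two module types comes down to simple arithmetic. In this Python sketch, a pass-thru module exposes one external port per blade port routed to it, while a switch exposes only its uplinks. The uplink count is an assumed parameter, since it depends on how you choose to cable each switch, and the function names are hypothetical.

```python
def passthru_external_ports(blades: int, bays: int = 2, ports_per_blade_per_bay: int = 1) -> int:
    """Pass-thru: every internal blade port is cabled one-to-one to an external switch port."""
    return blades * bays * ports_per_blade_per_bay


def switch_external_ports(uplinks_per_switch: int, bays: int = 2) -> int:
    """Switch module: only the uplinks are cabled out, regardless of how many blades are installed."""
    return uplinks_per_switch * bays


print(passthru_external_ports(16))  # onboard NICs, pass-thru modules in A1 and A2
print(switch_external_ports(1))     # one uplink per switch in A1 and A2
```

With 16 blades and pass-thru modules in A1 and A2 this gives the 32 external ports from the example above; with a switch in each bay and one uplink apiece, it drops to 2.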

Here’s a list of what Dell offers:

Ethernet Modules

- Dell 16 Port Gigabit Ethernet Pass-Through

- Cisco Catalyst Blade Switch 3032

- Cisco Catalyst Blade Switch 3130G – Stackable Managed Gigabit Ethernet Switch

- Cisco Catalyst Blade Switch 3130X – Stackable Managed 1Gb/10Gb Uplink Ethernet Switch

- Dell PowerConnect M6220 – High Performance Layer 3, Managed Gigabit Ethernet Switch with resilient stacking and 10Gigabit Ethernet capabilities

- Dell PowerConnect M6348 – 48 Port Gigabit Ethernet

- Dell 10Gb Ethernet Pass-Through I/O Module

- Dell PowerConnect M8024 – All 10Gb Ethernet performance and bandwidth Layer 3 Managed Switch

Fibre Modules

- 4Gb Fibre Channel Pass-Through Module

- Dell 8/4Gbps FC SAN Module

- Brocade® M5424 – 8Gb Fibre Channel Module

Infiniband Modules

- Mellanox M2401G – Dual Data Rate (DDR) InfiniBand Switch

- Mellanox M3601Q – Quad Data Rate (QDR) InfiniBand Switch

A few things to point out about these offerings.

a) The Dell PowerConnect M6348 is classified as a “48 Port Gigabit Ethernet switch”, providing 32 internal GbE ports (2 per blade) and 16 external fixed 10/100/1000Mb Ethernet ports. All 32 internal ports are used ONLY with the quad port GbE mezzanine cards (Broadcom 5709 or Intel ET 82572); if dual port GbE cards are used, only half of the switch’s internal ports will be used. It can also be used in Fabric A with the M710HD and its quad port Broadcom 5709C NDC. (Thanks to Andreas Erson for this point.)

b) If you are connecting to a converged fabric, e.g. a Cisco Nexus 5000, use the Emulex OCM10102FM 10 Gb Fibre Channel over Ethernet Mezz Card or the QLogic QME8142 10Gb Fibre Channel over Ethernet Adapter along with the Dell 10Gb Ethernet Pass-Through I/O Module.

c) New addition to the previous post: the Mellanox M3601Q QDR InfiniBand switch is a dual-width I/O module occupying both the B- and C-fabric slots.
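
Note a) follows from the port-to-bay wiring described earlier: a quad port card sends two ports to each bay of its fabric, while a dual port card sends only one. A minimal Python sketch of that arithmetic, assuming one GbE card per half height blade in the fabric the M6348 sits in (the function name is hypothetical):

```python
M6348_INTERNAL_PORTS = 32  # 2 per blade across 16 half height blades


def m6348_internal_ports_used(blades: int, ports_per_card: int) -> int:
    """Internal M6348 ports in use when each blade has one GbE card in this fabric.

    Even-numbered card ports go to bay 1 and odd-numbered ports to bay 2,
    so each switch sees half of every card's ports.
    """
    if ports_per_card not in (2, 4):
        raise ValueError("expected a dual or quad port GbE card")
    if not 0 <= blades <= 16:
        raise ValueError("the chassis holds at most 16 half height blades")
    return blades * (ports_per_card // 2)


for ports in (4, 2):
    used = m6348_internal_ports_used(16, ports)
    print(f"{ports}-port cards: {used} of {M6348_INTERNAL_PORTS} internal ports used")
```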

Server Management

Dell’s chassis management is controlled by the onboard Chassis Management Controller (CMC). The CMC provides multiple systems management functions for the Dell M1000e, including the enclosure’s network and security settings, I/O module and iDRAC network settings, and power redundancy and power ceiling settings. There are a ton of features available in the Dell CMC console, so I thought I would put together a video of the different screenshots. This video covers all areas of the console, is based on version 3.0, and was taken from my work lab, so I’ve blacked out some data.

A Review in Pictures of the Dell Chassis Management Controller (CMC)

Here’s the YouTube link for those of you without Flash: http://www.youtube.com/watch?v=4uCGHw8jK3M&hd=1

A Review in Pictures of the Dell blade iDRAC

Once you get beyond the CMC, you also have the ability to access the onboard management console on the individual blades, called the iDRAC (short for “Integrated Dell Remote Access Controller”). The features found here are specific to managing and monitoring the individual blade, but the iDRAC is also the gateway to launching a remote console session, which is covered in the next section.

Here’s the YouTube link for those of you without Flash: http://www.youtube.com/watch?v=gjOsPorqhcw&hd=1

A Review in Pictures of the Dell blade Remote Console

Finally, if you launch a remote console session from the iDRAC, you have complete remote access. Think of an RDP session, but for blade servers. Take a look, it’s pretty interesting. With newer blade servers (PowerEdge 11G models), you can launch the Remote Console from the CMC, a nice addition. My videos were made with older blade servers (M600s), so I didn’t have any screenshots, but my friend from Dell, Scott Hanson (@DellServerGeek), gave me some to point to:

Here are a couple of screenshots:

From the Chassis Overview – http://www.twitpic.com/33vv87

From the Server Overview – http://www.twitpic.com/33vvj2

From the Individual Server Overview – http://www.twitpic.com/33vvz0

Here’s the YouTube link for those of you without Flash: http://www.youtube.com/watch?v=h6S9nuPv7XE&hd=1

So that’s it. For those of you who have been waiting the past few months for me to finish, let me know what you think. Is there anything I’m missing – anything else you would like to see on this? Let me know in the comments below. Make sure to keep an eye on this site as I’ll be posting information on HP and IBM in the following weeks (months?).

The M610x is listed as a half height blade in the table, but the M610x is a full height blade.

URL for QLogic QME8142 mezzanine:

http://www.dell.com/us/en/enterprise/networking/hba-qlogic-qme8142-ethernet/pd.aspx?refid=hba-qlogic-qme8142-ethernet&s=biz&cs=555

URL for Emulex OCm10102-F-M mezzanine:

http://www.dell.com/us/en/enterprise/networking/emulex-ocm10102-f-m/pd.aspx?refid=emulex-ocm10102-f-m&s=biz&cs=555

I would include a link to this very informative pdf about the available mezzanines and corresponding I/O Modules:

http://www.dell.com/downloads/global/products/pedge/en/blade_io_solutions_guide_v1.2_jn.pdf

The M6348 special note “a)” should include a mention that it can also be used in Fabric A when using M710HD with its quad port Broadcom 5709C NDC.

I would add a “c)” to point out that the Infiniband QDR Mellanox M3601Q is a dual-width I/O Module occupying both B- and C-fabric slots.

Regarding connecting with Remote Console I’m quite sure that most M1000e admins launch it from the CMC and not from the iDRAC. And you can also gain entrance to the web-GUIs for the various I/O Modules directly from the CMC.

I also think it is worth noting that the PSUs support 3+3 (AC Redundancy).

Finally, I think something should be said about the future of the M1000e. The chassis has been built to be ready for the next wave of I/O speeds and is fully capable of a total of 80Gbit/s per mezzanine using 4+4 lanes of 10GBASE-KR. That means a dual port 40Gbit/s Ethernet mezzanine, and even an 8-port (more likely 4-port) 10Gbit/s Ethernet mezzanine, would be doable if controllers and I/O Modules were made available to handle such mezzanines.

Pardon my previous comments but I really enjoy your blog posts and this series particularly is in my opinion your best work so far. Hats off to you…

I updated the #dell server comparison page to reflect that the M610x is a full height. I appreciate the comments!

I added the links to these #dell mezzanine cards to the post. Thanks for the comments!

Great feedback – I’ve updated the notes you recommended. I wasn’t sure about the Remote Console access from the CMC – my systems, including M600’s don’t offer that capability, but if it is an offering with the 11G servers, I’d love to know about it. Thanks for all your feedback. Glad to make the changes.

Kevin, the support is definitely there on 11G servers. I’m requesting help from Dell to sort out whether you need 11G with the new Lifecycle Controller to launch it directly from the CMC or whether it’s an iDRAC-version issue.

Yes, one of the new features is the ability to launch Remote Console directly from the CMC interface. However, as you alluded to, it requires 11G and above systems.

Feel free to use in the blog post. If you need other screencaptures, just let me know.

Thanks, @DellServerGeek #dell – I’ve made changes to the blog post (https://bladesmadesimple.com/2010/11/dell-m1000e/). Thanks for your support!

Hey Kevin,

Peter Tsai here – I’m replacing Scott Hanson as Dell’s Systems Management Evangelist. I’ve put together a demo of the CMC 3.0 interface that walks through the menus and features of the CMC. You can find it at the following location:

http://www.youtube.com/watch?v=tI3wJIzKPQY
