After teasing the public at Dell Technologies World, Dell EMC has finally released details of its next-generation modular architecture: the PowerEdge MX. This generation of modular infrastructure is designed to give you investment protection for years to come. In this post, you’ll get a good overview of Dell EMC’s new blade server architecture, so grab a cup of coffee and enjoy this first look.

I’ve been waiting since the fall of 2016 to talk about this new addition to Dell EMC’s modular family, so I’m excited to share the details. I’ll break it down by showing the chassis, then I’ll review the sled options, and I’ll wrap it up with the I/O architecture. I’ll save the management for another post, but here’s a hint: google “OpenManage Enterprise” and you’ll find out a lot about what the management looks like for PowerEdge MX.

Lastly, as you read through this, remember this is an architecture that is designed to last for 3+ generations to come. If you don’t see something, it doesn’t mean it will never be available; it just may take time. Also, this product will be live and in person at the Dell EMC booth at VMworld, so make sure you go see it (it’ll be in the back right of the big Dell EMC booth in the center of the show floor).

Chassis

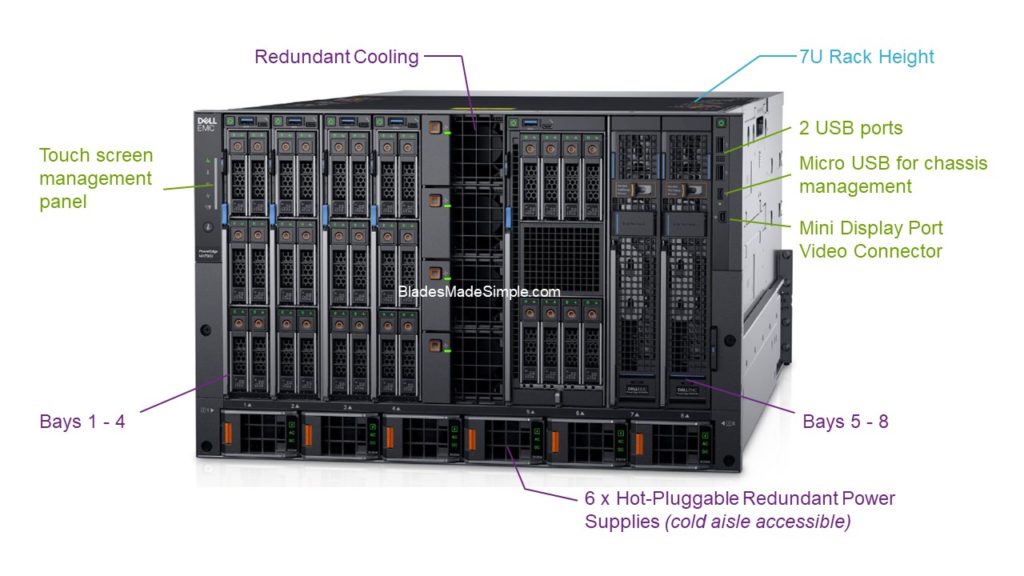

As with any modular (or blade server) infrastructure, the chassis is the primary piece of the architecture. It houses the compute, storage and I/O and offers a centralized method of managing. The chassis for the PowerEdge MX architecture is the MX7000. It is 7 rack units high and can house up to 8 sleds. The front of the chassis provides USB port access for managing via crash cart (keyboard, video, mouse) as well as a touch screen panel that simplifies setting up the chassis or identifying issues while in front of the chassis. The MX7000 also provides cold-aisle accessibility to the power supplies – a change from the PowerEdge M1000e chassis. Along with the 8 sled bays, you’ll find 4 high-speed fans designed to help with cooling the I/O modules in the rear of the chassis (more on those below.)

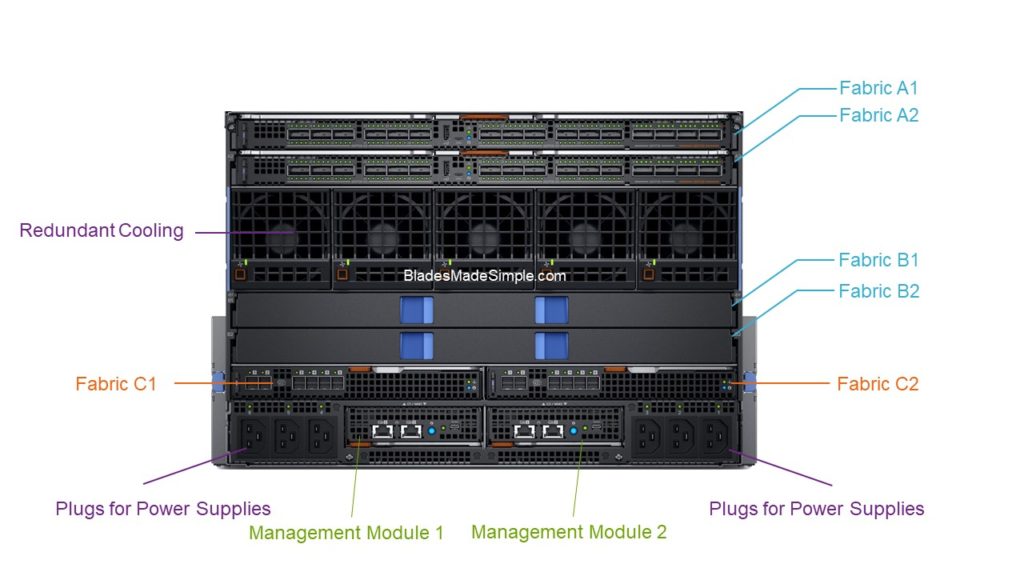

The rear of the MX7000 chassis includes 3 redundant I/O fabrics, 2 management modules, 6 cooling fans and power supply plugs.

Fabric A is designed for networking traffic and is redundant, split into Fabric A1 and A2. Each server is connected to Fabric A via a network mezzanine card. If additional networking is required, Fabric B is available to handle the extra traffic and is likewise redundant, with Fabric B1 and B2. To use this fabric, a server needs a network mezzanine card in the slot supporting Fabric B. Fabric C is different in that it supports SAS or Fibre Channel storage only; it does not support any networking modules. To use this fabric, a server needs a SAS, RAID or Fibre Channel HBA mezzanine card.
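
To make the fabric rules above a little more concrete, here is a minimal Python sketch of which mezzanine card types each fabric accepts and which I/O module slots a sled can reach as a result. The names (`FABRIC_CARDS`, `Sled`, `reachable_modules`) and the card labels are made up for illustration; this is not a Dell EMC tool or API.

```python
from dataclasses import dataclass, field

# Card types each fabric accepts, taken from the description above.
# The string labels are hypothetical, chosen only for this sketch.
FABRIC_CARDS = {
    "A": {"network"},
    "B": {"network"},
    "C": {"sas_hba", "raid", "fc_hba"},
}


@dataclass
class Sled:
    name: str
    # Maps a fabric letter ("A", "B" or "C") to the installed mezzanine card type.
    mezz: dict = field(default_factory=dict)


def reachable_modules(sled):
    """Return the I/O module slots (e.g. "A1", "A2") this sled can reach."""
    modules = []
    for fabric, card in sorted(sled.mezz.items()):
        if card not in FABRIC_CARDS[fabric]:
            raise ValueError(f"{card!r} is not valid for Fabric {fabric}")
        # Every fabric is redundant, so a valid card reaches both halves.
        modules += [f"{fabric}1", f"{fabric}2"]
    return modules


sled = Sled("sled-1", {"A": "network", "C": "sas_hba"})
print(reachable_modules(sled))
```

A sled with no card in the Fabric B slot simply never reaches B1 or B2, which matches the point that Fabric B is only populated when extra networking is needed.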

The management of the MX7000 is provided by redundant management modules that manage the lifecycle of the compute, storage and I/O modules, including firmware and server profiles. As additional MX7000 chassis are added, they can be connected to the lead chassis to give a single management window for lifecycle management of all systems in the architecture. I’ll talk more about the features of the management module in a future blog post.

Compute and Storage Sleds

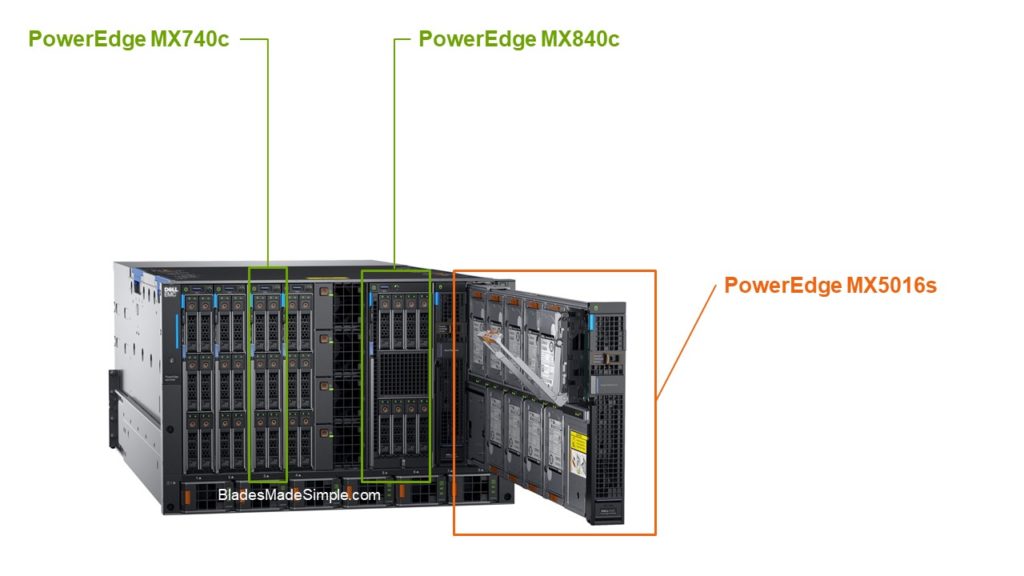

The PowerEdge MX7000 chassis supports two compute sled models (or blade servers) and one storage sled. The PowerEdge MX740c is a full-height, single-width blade server that holds up to two Intel Xeon SP CPUs, 24 DIMMs, two networking mezzanine card slots, one storage mezzanine card slot and six hot-pluggable drives. With six hot-pluggable drive slots, the MX740c is a perfect candidate for a VMware vSAN node.

The second server option is the PowerEdge MX840c. This blade server is full-height, double-width and holds four Intel Xeon SP CPUs, 48 DIMMs, four networking mezzanine card slots, two storage mezzanine card slots and eight hot-pluggable drives. The drive capacity also makes the MX840c a great candidate for vSAN; however, with four processors, this server is better suited to handle your enterprise applications like databases or ERP.
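
If you want to play with how the two compute sleds fill a chassis, the rough Python sketch below adds up bays, CPUs, DIMMs and drives for a mix of sleds. It assumes a double-width sled takes two of the chassis' 8 bays, and it uses the maximum figures quoted above. The names `SLEDS` and `plan` are hypothetical, not part of any Dell EMC tooling.

```python
CHASSIS_BAYS = 8  # the MX7000 houses up to 8 single-width sleds

# Maximum figures per sled as quoted in this post.
# "bays" assumes a double-width sled occupies two single-width bays.
SLEDS = {
    "MX740c": {"bays": 1, "cpus": 2, "dimms": 24, "drives": 6},
    "MX840c": {"bays": 2, "cpus": 4, "dimms": 48, "drives": 8},
}


def plan(sled_models):
    """Sum bays, CPUs, DIMMs and drives for a list of sled model names."""
    totals = {"bays": 0, "cpus": 0, "dimms": 0, "drives": 0}
    for model in sled_models:
        for key, value in SLEDS[model].items():
            totals[key] += value
    if totals["bays"] > CHASSIS_BAYS:
        raise ValueError(f"{totals['bays']} bays needed, only {CHASSIS_BAYS} available")
    return totals


# Two MX840c plus four MX740c exactly fill the chassis.
print(plan(["MX840c"] * 2 + ["MX740c"] * 4))
# {'bays': 8, 'cpus': 16, 'dimms': 192, 'drives': 40}
```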

The PowerEdge MX5016s is a full-height, single-width storage sled supporting 16 hot-pluggable drives (SAS HDD or SSD). The storage in the MX5016s can either be shared among all servers in the chassis or assigned to specific servers. The connectivity depends upon the type of storage mezzanine card installed in the server. If the MX5016s is shared with the entire chassis, servers needing access require a SAS HBA. Additionally, a clustered file system is required (to make sure the servers play nice with the storage). If a server needs a few drives from an MX5016s sled, a RAID controller (PERC) is required in the server’s storage mezzanine card slot. Keep in mind, the MX5016s is completely optional, but it provides flexible options to add local storage to the blade servers inside the MX7000.
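
The two ways of consuming MX5016s storage boil down to a small lookup, sketched here in Python. The mode names `"shared"` and `"assigned"` and the function `storage_requirements` are invented for this example. The `clustered_fs: False` entry only reflects that no clustered file system requirement is mentioned for the assigned case, so treat it as an assumption.

```python
def storage_requirements(mode):
    """Return what a server needs to use MX5016s drives in the given mode."""
    if mode == "shared":
        # Sled shared with the entire chassis: SAS HBA plus a clustered file system.
        return {"mezz_card": "SAS HBA", "clustered_fs": True}
    if mode == "assigned":
        # Specific drives assigned to one server: RAID controller (PERC).
        # No clustered file system requirement is mentioned for this case (assumption).
        return {"mezz_card": "RAID controller (PERC)", "clustered_fs": False}
    raise ValueError(f"unknown mode: {mode!r}")


for mode in ("shared", "assigned"):
    print(mode, storage_requirements(mode))
```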

I/O Architecture

I previously mentioned that the MX7000 has 3 I/O fabrics: two for networking, one for storage. I’ll start with the storage fabric first.

As discussed above, Fabric C is the storage fabric. As with all the fabrics within the MX7000, Fabric C offers redundant pathways with two I/O modules (designated as C1 and C2). Fabric C provides blade servers with connectivity to the storage sleds via a SAS switch, or it can offer connectivity to external Fibre Channel storage arrays.

Fabrics A and B are designed for networking traffic. Like Fabric C, both are redundant, with each fabric split into two halves: A1 and A2, and B1 and B2. Blade server connectivity is based on traditional blade networking: place a NIC in the corresponding fabric network mezzanine slot, then place I/O modules into the respective fabric (e.g. blade server > NIC in Mezz A > Fabric A1 and A2). With this design, Fabric B is only needed if additional network ports are required. The networking architecture described above may be traditional, but the physical connectivity is a game changer.

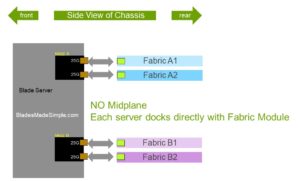

No Midplane

Dell EMC’s PowerEdge M1000e architecture uses a server mezzanine card to switch design, but there is a midplane that all traffic traverses. When it was designed in 2008, the M1000e was built to handle 40G Ethernet, but 40G is quickly being replaced with 100G/200G, so the M1000e is no longer suited to handle future networking. The MX7000 network architecture is different from the M1000e in that it has no midplane. No midplane means a new way of connectivity for blade servers. The MX architecture provides direct server-to-switch connectivity: each server’s networking mezzanine card docks directly with each I/O module. This removes the kind of future networking barrier seen in the M1000e. As new networking technologies (like Gen-Z) are released, simply change the NIC on each server and replace the network switch module.

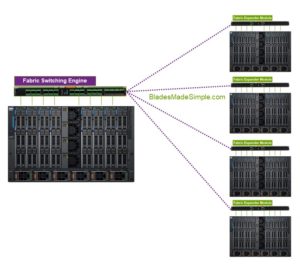

Scalable Fabric Architecture

The PowerEdge MX network architecture offers a new high-speed, scalable design that is ideal for mid- to large-scale environments. Traditional blade server architectures involve switch modules in every blade chassis, which may lead to complexity or higher costs. PowerEdge MX changes this by eliminating the need for switches in every chassis and replacing them with “expander modules.”

There are two components for a scalable fabric architecture: a Fabric Switch Engine (FSE) and a Fabric Expander Module (FEM). In the scalable fabric design, a Fabric Switch Engine is placed within a single MX7000 chassis. The best way to think of the Fabric Switch Engine is as a top of rack network switch. The FSE will provide all the switching inside of the stack. As additional MX7000 chassis are added to the environment, a Fabric Expander Module is installed in the networking fabric. The FEM connects all the blade server NICs inside the MX7000 to the FSE with a single 200Gb cable (2 for redundancy) with no oversubscription. The FEM does not do any switching, nor does it have an O/S or firmware – so it’s one less thing to update in the lifecycle management. If you are wondering about the latency of a server communicating to other servers with this architecture, don’t be. The overall latency of any server to any server is less than 600ns.
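
The “no oversubscription” claim is simple arithmetic: the total server-facing bandwidth behind a FEM should not exceed its uplink to the FSE. Here is a small Python sketch of that check. The 8 servers and the 200Gb uplink come from this post, but the 25 Gb/s per-server link speed is an assumption made for the example, and `oversubscription` is a hypothetical helper.

```python
def oversubscription(servers, gbps_per_server, uplink_gbps):
    """Ratio of server-facing bandwidth to uplink bandwidth (1.0 or less means none)."""
    return (servers * gbps_per_server) / uplink_gbps


# 8 sleds per chassis and a 200Gb FEM-to-FSE cable are from the article;
# 25 Gb/s per server link is an assumed figure, not stated in the article.
ratio = oversubscription(servers=8, gbps_per_server=25, uplink_gbps=200)
print(ratio)  # 1.0
```

Under that assumption the ratio comes out at exactly 1.0. Doubling the per-server speed to 50 Gb/s on the same uplink would give 2.0, which is why a faster NIC generally means a faster uplink as well.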

As you can imagine, I could write another 10 pages of details about the PowerEdge MX, but I have to save some details for future blog posts. Once the PowerEdge MX becomes “generally available” on September 12, I’ll update my blade server comparison chart, so stay tuned. I’m curious what your thoughts are about this new architecture by Dell EMC. Leave me your thoughts in the comments below.

Kevin Houston is the founder and Editor-in-Chief of BladesMadeSimple.com. He has 20 years of experience in the x86 server marketplace. Since 1997 Kevin has worked at several resellers in the Atlanta area, and has a vast array of competitive x86 server knowledge and certifications as well as an in-depth understanding of VMware and Citrix virtualization. Kevin has worked at Dell EMC since August 2011 working as a Server Sales Engineer covering the Global Enterprise market from 2011 to 2017 and currently works as a Chief Technical Server Architect supporting the Central Region.
