As expected, HP today announced new blade servers for their BladeSystem lineup, as well as a new converged switch for their chassis. Everyone expected updates to the BL460 and BL490, the BL2x220c and even the BL680 blade servers, but the BL620 G7 blade server was a surprise (at least to me). Before I highlight the announcements, I have to preface this by saying I don’t have a lot of technical information yet. I attended the press conference at HP Tech Forum 2010 in Las Vegas, but I didn’t get the press kit in advance. I’ll update this post with links to the spec sheets as they become available.

The Details

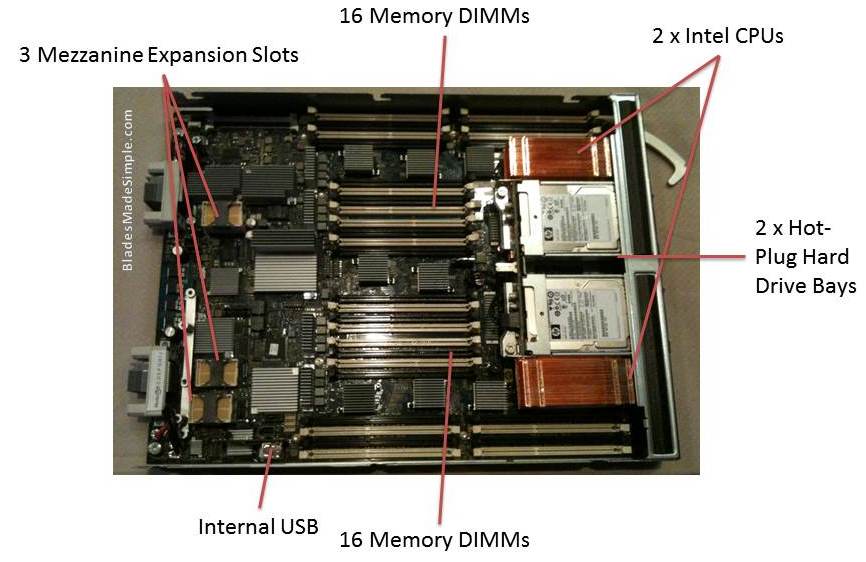

First up: the BL620 G7

The BL620 G7 is a full-height blade server with 2 CPU sockets designed to handle the Intel Xeon 7500 (and possibly the 6500) CPUs. It has 32 DIMM slots, 2 hot-plug hard drive bays and 3 mezzanine expansion slots.

BL680 G7

The BL680 G7 is an upgrade to the previous generation; however, the 7th generation is a double-wide server, so a c7000 BladeSystem chassis holds up to 4 of them. This server’s claim to fame is that it will hold 1 terabyte (1TB) of RAM. To put this into perspective, the Library of Congress’s entire library is 6TB of data, so you could put the entire library on 6 of these BL680 G7s!

“FlexFabric” I/O Onboard

Each of the Generation 7 (G7) servers comes with “FlexFabric” I/O on the motherboard of the blade server. These are NEW NC551i Dual Port FlexFabric 10Gb Converged Network Adapters (CNAs) that support stateless TCP/IP offload, TCP Offload Engine (TOE), Fibre Channel over Ethernet (FCoE) and iSCSI protocols.

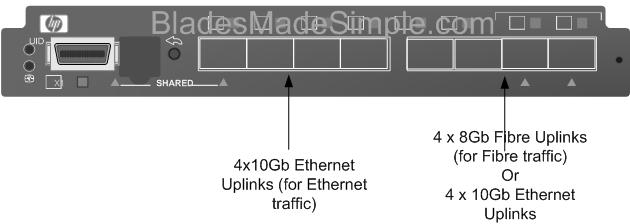

Virtual Connect FlexFabric 10Gb/24-Port Module

The final “big” announcement on the blade server front is a converged fabric switch that fits inside the blade chassis. Called the Virtual Connect FlexFabric 10Gb/24-Port Module, it is designed to let you split the Ethernet fabric and the Fibre Channel fabric at the switch module level, inside the blade chassis, INSTEAD OF at the top-of-rack switch.

You may recall that I previously blogged about this as being a rumour, but now it is true.

The image on the left was my rendition of what it would look like.

And here are the actual images.

HP believes that converged technology is ready for the edge of the network, but that it is not yet mature enough to go into the datacenter (not ready for multi-hop, end to end). When the technology matures and business needs dictate, they’ll offer a converged network offering for the datacenter core.

What do you think about these new blade offerings? Let me know, I always enjoy your comments.

Disclaimer: airfare, accommodations and some meals are being provided by HP; however, the content being blogged is solely my opinion and does not in any way express the opinions of HP.

Kevin,

Do you know if the “FlexFabric” Onboard I/O supports the “FlexNIC” functionality?

Why does the full height BL620 blade only have (2) Xenon 7500 sockets and (16) DIMM slots? Seems a little disappointing, doesn't it? Especially when other vendors (such as Cisco) already have a full height blade with (4) Xenon 7500 sockets and (32) DIMMs. So basically Cisco can fit the same # of Xenon 7500 sockets in one chassis in 4 fewer RUs. Right?

Cheers,

Brad

Kevin,

-BL620 looks like it has 32 DIMM slots, not 16. i've seen where they say it has more memory capacity than any other 2S server, which isn't the case since M910 would have the same capacity (plus the flexibility of 2S or 4S). think it shows pretty clearly the benefit of FlexMem Bridge, since they had to design a singular 2S-only product & a separate 4S.

-BL680 is a puzzler, can't imagine many customers are going to go for that form factor, which eats up so much of the chassis. the per-server cost of the chassis infrastructure is going to be very expensive. if you need crazy levels of memory expansion, IBM's got them beat also.

-i don't see any native FC ports on the new VC flexfabric switch, they look to be all ethernet ports. if true, they are going to have to do FCoE passthru to some ToR switch. since FCoE can only go through a single hop, seems like there would be interop issues with this. another curious choice to me.

Brad – the Intel processors are branded Xeon, Xenon is a colorless gas. you crazy network guys will get these server terms someday. maybe when i understand congestion notification :-)

the flexfabric NICs do say they have FlexNIC/FlexHBA functionality.

You can put in 4 FC GBICs and get a native FC connection.

The new BL620 and BL680 are not available yet, but HP has released the new BL465 G7 and BL685 G7 to market. Has anyone used them for virtualization?

Mike,

The “FlexFabric” switch has ports that can be either FC or 10GE. HP is not extending FCoE out of the chassis like Cisco has done with UCS. HP still has a lot of catching up to do on that front.

As for the BL620 & M910, both of which are (2) socket *Xeon* 7500 with memory channels running at 1066 MHz, I'll be real curious to see how those systems perform in benchmarks versus a (2) socket *Xeon* 5600 with (48) DIMMs and the memory channel running at 1333 MHz, such as can be done on Cisco UCS B250 M2 blades at a much lower price.

p.s. spell checking? Is that the best you got? :-)

Cheers,

Brad

I'm not sure when the #hp BL680 and BL620 blade servers will become available. I'll check with the HP team at the HP Tech Forum tomorrow, err, today. Thanks for the question. Appreciate your support!

The #hp BL620 does appear to have 32 DIMM slots – good catch! I've updated the blog post to reflect that. I also heard that it has more memory than any other server and I'm a bit confused as well. I'm going to find an HP Expert at the HP Tech Forum later today and get that question addressed.

RE: Your comment on the BL680, to each his own. You know as well as I that there are hundreds of reasons blade servers are used, and there's always a business case for building a blade server design (otherwise, they wouldn't have done it).

The ports on the new module appear to be modular, so you'd plug the type of connector you need into the port you need. I'm going to verify this as well later today with the HP team.

Thanks for your questions, comments and support!

I absolutely KNEW you'd be the first to tweet, Brad! I enjoy getting reality checks from Cisco and Dell (where's IBM??). The onboard CNAs “should” have the Flex10 functionality (being able to carve up the 10Gb bandwidth); however, they did not address the abilities at any level of depth. It was a press release, so they kept it high level. I'm going to find out the answer to that question later today from the HP gurus.

I messed up on my first post: this server has 32 DIMM slots. It still doesn't match up to the Cisco B250 M2 from a memory perspective, but with 3 mezzanine slots AND built-in CNAs, the HP BL620 should offer a lot more I/O than the Cisco B250 M2 (imagine 8 x 10Gb ports on the BL620).
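Kevin's "8 x 10Gb ports" figure works out if every mezzanine slot carries a dual-port 10Gb card on top of the two onboard FlexFabric ports. The dual-port mezzanine assumption is ours, for illustration, not an HP specification. A quick tally:

```python
# Hypothetical tally of 10Gb ports on a BL620 G7.
# Assumption: every mezzanine slot holds a dual-port 10Gb card.
ONBOARD_PORTS = 2     # dual-port NC551i FlexFabric CNA on the motherboard
MEZZ_SLOTS = 3        # three mezzanine expansion slots
PORTS_PER_MEZZ = 2    # assumed dual-port 10Gb mezzanine cards
PORT_SPEED_GB = 10


def total_10gb_ports(onboard: int, mezz_slots: int, ports_per_mezz: int) -> int:
    """Return the number of 10Gb ports on one blade."""
    return onboard + mezz_slots * ports_per_mezz


ports = total_10gb_ports(ONBOARD_PORTS, MEZZ_SLOTS, PORTS_PER_MEZZ)
print(f"{ports} x {PORT_SPEED_GB}Gb ports = {ports * PORT_SPEED_GB}Gb per blade")
```

Each of those ports still needs a matching interconnect module and an upstream port, which is the cost side of the same arithmetic.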

Thanks for your question and comments. Your feedback is always appreciated, and expected ;)

Kevin,

Looks like an overall great set of announcements out of Vegas. I'm curious to get your opinion on the advantages/disadvantages of splitting out FCoE at the blade chassis switch level. Also, what's your feeling on the oversubscription of the FlexFabric module? 4x 10GE and 4x 8Gb FC hard-coded for the chassis seems limited for many deployments (mainly meaning too much FC, not enough FCoE). 32G of FC is a ton at the edge for a single chassis, and 40G of Ethernet could be tight depending on blade config. Would love to hear your thoughts, thanks for keeping me up to date!
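Joe's oversubscription question can be put into numbers. This is a rough sketch assuming nominal line rates, 16 half-height blades each with one 10Gb downlink to the module, and the 4 x 10GE plus 4 x 8Gb FC uplink split Joe describes; these are assumptions for illustration, not HP's published configuration.

```python
# Rough per-module oversubscription for the uplink split described above.
# Assumptions (not HP specs): 16 half-height blades per c7000, one 10Gb
# downlink per blade to each FlexFabric module, nominal line rates, and
# uplinks of 4 x 10GbE plus 4 x 8Gb FC per module.


def oversubscription(blades: int, downlink_gb: float,
                     uplinks: list[tuple[int, float]]) -> float:
    """Ratio of total downlink bandwidth to total uplink bandwidth."""
    downlink_total = blades * downlink_gb
    uplink_total = sum(count * speed for count, speed in uplinks)
    return downlink_total / uplink_total


# 160Gb of downlinks versus 40Gb + 32Gb of uplinks
ratio = oversubscription(16, 10.0, [(4, 10.0), (4, 8.0)])
print(f"{ratio:.2f}:1")
```

Swapping the uplink list (for example, eight 10GbE ports gives 160/80 = 2:1) shows how the ratio moves as ports are reassigned between FC and Ethernet.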

Kevin,

I watched the Mark Potter video that you posted and it seems that they are saying that the 4 ports that you show that can be FC OR Ethernet are the same ports. This is an industry first as far as I know, allowing customers to Wire Once and truly be protocol agnostic, not just between the Ethernet protocols (NFS, iSCSI and FCoE), but also FC. Very cool! It would be nice to know what configurations the FCoE ports can be used for: can they attach to another FCoE switch or to native storage?

Brad:

The new FlexFabric LOMs do support the “FlexNIC” capability. Each port supports 4 physical functions and can adjust the bandwidth from 100Mb to 10Gb dynamically on each of those FlexNICs. Part of the physical function capabilities of the FlexFabric chips is the ability to select Ethernet, FCoE, and/or iSCSI on one of the 2 ports.
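The FlexNIC partitioning Chuck describes can be modeled as a simple validation check on one port. This is a minimal sketch assuming 4 functions per port, a 100Mb minimum, a 100Mb allocation step (the step size is an assumption; it is not stated above) and a 10Gb ceiling on the sum; the function and traffic names are hypothetical, not part of any HP tool.

```python
# Minimal model of carving one 10Gb FlexFabric port into FlexNICs.
# Assumptions for illustration: 4 functions per port, each between 100Mb
# and 10Gb, allocated in 100Mb steps, total not exceeding the port speed.
PORT_MB = 10_000
MIN_MB = 100
STEP_MB = 100        # assumed allocation granularity
MAX_FUNCTIONS = 4


def validate_partition(allocations_mb: dict[str, int]) -> list[str]:
    """Return a list of problems; an empty list means the plan fits."""
    problems = []
    if len(allocations_mb) > MAX_FUNCTIONS:
        problems.append(f"{len(allocations_mb)} functions > {MAX_FUNCTIONS}")
    for name, mb in allocations_mb.items():
        if not MIN_MB <= mb <= PORT_MB:
            problems.append(f"{name}: {mb}Mb outside {MIN_MB}-{PORT_MB}Mb")
        elif mb % STEP_MB:
            problems.append(f"{name}: {mb}Mb not a multiple of {STEP_MB}Mb")
    total = sum(allocations_mb.values())
    if total > PORT_MB:
        problems.append(f"total {total}Mb exceeds {PORT_MB}Mb port")
    return problems


# Hypothetical plan: management, FCoE, vMotion and VM traffic on one port
plan = {"mgmt": 500, "fcoe": 4000, "vmotion": 2000, "vm": 3500}
print(validate_partition(plan) or "plan fits")
```

Returning a list of problems rather than raising on the first one makes it easy to see every reason a proposed split does not fit.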

That's true, FlexFabric isn't extending FCoE outside of the existing standards :) nor does it require Cisco switches in order to take advantage of CNAs in servers.

The BL620c blade will easily outperform the Cisco B250. The higher core count and larger cache on the Xeon 7500 edge out the 5600's higher frequencies on CPU benchmarks. (Published SPEC CPU results bear this out.) Plus, the memory bandwidth will be higher (4 channels per CPU at 1066 MT/s beats 3, even at 1333 MT/s), and memory capacity will be higher (512GB versus 384GB). I/O capacity will be extraordinarily higher.
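The "4 channels at 1066 beats 3 at 1333" claim can be checked on paper. The sketch below computes naive theoretical peak bandwidth per socket, assuming 8-byte-wide channels and ignoring the Xeon 7500's buffered memory design and real-world efficiency; it is a back-of-the-envelope figure, not a benchmark.

```python
# Naive theoretical peak memory bandwidth per socket.
# Assumption: each channel is 64 bits (8 bytes) wide; this ignores the
# Xeon 7500's buffered memory design and real-world efficiency.
BYTES_PER_TRANSFER = 8


def peak_gb_per_s(channels: int, mega_transfers: int) -> float:
    """Peak bandwidth in decimal GB/s for one socket."""
    return channels * mega_transfers * BYTES_PER_TRANSFER / 1000


xeon_7500 = peak_gb_per_s(4, 1066)  # 4 channels at 1066 MT/s
xeon_5600 = peak_gb_per_s(3, 1333)  # 3 channels at 1333 MT/s
print(f"7500: {xeon_7500:.1f} GB/s, 5600: {xeon_5600:.1f} GB/s")
```

On these assumptions the comparison comes out at roughly 34.1 versus 32.0 GB/s per socket, so the extra channel more than offsets the lower transfer rate on paper.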

One interesting note about 1066 MT/s memory: HP actually published separate VMMark results on their 2-socket BL490c G6 server blade with memory at 1066 MT/s and at 1333 MT/s. Memory bandwidth apparently doesn't impact VMMark that much, because the VMMark difference (24.24 versus 24.54) says that the ~6% higher memory bandwidth you get from 1333 MT/s resulted in only a 1% overall improvement.
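The "1% overall improvement" follows directly from the two BL490c G6 VMMark scores quoted above. A one-line check of the relative change:

```python
# Relative change between the two BL490c G6 VMMark scores quoted above
# (24.24 with memory at 1066 MT/s, 24.54 with memory at 1333 MT/s).


def pct_change(old: float, new: float) -> float:
    """Relative change from old to new, in percent."""
    return (new - old) / old * 100


gain = pct_change(24.24, 24.54)
print(f"VMMark gain: {gain:.2f}%")
```

Set against the ~6% bandwidth difference cited above, roughly a fifth of the bandwidth gain shows up in the benchmark score.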

In terms of price/performance, the BL685c G7 will probably easily beat them both, since the 4P capable Opteron 6100 series processors are priced like 2P processors.

Disclaimer: I work for HP.

Daniel,

agree with your performance statement. Dell will be publishing a vmmark score over 37 soon on the M910 that beats the Cisco B250, at a substantially lower price. we also have the #1 Oracle app server score as well for a 2S.

you guys need to look at some of your claims in this announcement that are blatantly wrong:

1) BL620 is NOT “the largest memory footprint of any 2S blade” – M910 in 2S mode has the same 32 DIMM capacity and also has the ability to scale to 4S in the same blade.

2) BL680 is NOT the 1st blade with 1TB memory capacity, IBM HX5+Max5+HX5+Max5 can go beyond that. we may actually have 1TB capacity on M910 by the time BL680 ships :-)

mike

Looking forward to more VMMark scores! Maybe this year we can start doing SPECvirt too.

Thanks for flagging the claims for verification. On the first one, I think you're spot on; the BL620c & M910 share that “largest memory footprint” title for a 2S config. I'll take that back to the teams for review.

I think the second claim is justified. I believe IBM tops out at 640GB because they don't have 16GB VLP DIMMs. So in terms of capacity, BL680c = 1TB > HX5+Max5+HX5+Max5. IBM would have the lead in number of DIMM slots, tho. I'm open to correction.
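The capacity comparison is simply DIMM slots times the largest supported DIMM. This sketch assumes 64 slots with 16GB DIMMs for the BL680c G7 (what 1TB implies) and, for the IBM configuration, 16 slots per HX5 plus 24 per MAX5 limited to 8GB DIMMs, as described above; treat the slot counts as assumptions to verify against current spec sheets.

```python
# Memory capacity = DIMM slots x DIMM size.
# Assumptions: BL680c G7 with 64 slots of 16GB DIMMs (what 1TB implies);
# IBM HX5+MAX5+HX5+MAX5 with 16 slots per HX5 and 24 per MAX5, limited
# to 8GB DIMMs.


def capacity_gb(dimm_slots: int, dimm_gb: int) -> int:
    """Total memory in GB when every slot holds a dimm_gb module."""
    return dimm_slots * dimm_gb


configs = {
    "HP BL680c G7": capacity_gb(64, 16),
    "IBM HX5+MAX5 x2": capacity_gb(2 * (16 + 24), 8),
}
for name, gb in configs.items():
    print(f"{name}: {gb} GB")
```

With 16GB DIMMs the IBM configuration would reach capacity_gb(80, 16) = 1280GB, which is why the answer hinges on DIMM availability rather than slot count.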

agree that splitting out FC at the chassis edge is a good way to go; you are going to see others going this way as well, as it gives you the benefit of convergence without having to replace external switches. technically they shouldn't be able to attach to an external FCoE switch, as FCoE is only for single hop. they should be able to connect to native FCoE storage, but would be good to get a 2nd opinion.

joe,

biggest benefit to me is that you don't have to replace external switches to get the cost/power savings of convergence. seems like as more products like this come out, it would slow nexus adoption, since they give you convergence benefits to the edge of the chassis and can plug into any external switch (or at least impact FCoE licenses on the nexus switches).

chuck,

note that any CNA today presents itself as 2 functions to the OS, but they don't give you the native bandwidth reservation. my take is that the bandwidth reservation is unnecessary in most cases (10Gb is plenty of bandwidth, so why artificially limit bandwidth? you are more likely to be wrong than right). and if you want it, VMware provides you all the capabilities you need in terms of bandwidth reservation (rate limiting) in a much more flexible manner than doing it in hardware. VMware's capabilities in this regard get even better in ESX 4.1 (can manage bandwidth by traffic type up to the vNIC). specifically for virtualization, i don't see a real benefit (in most cases) for hardware NIC partitioning, and i do see a downside in terms of cost, lock-in, and complexity.
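The argument here is that software bandwidth controls can adapt where fixed hardware partitions cannot. The sketch below is a generic proportional-share ("water-filling") allocator for a single 10Gb link, not VMware's actual Network I/O Control algorithm; the traffic types, share values and demands are hypothetical.

```python
# Generic proportional-share ("water-filling") allocation of one link.
# Illustrative model of share-based software QoS, not VMware's algorithm;
# traffic names, shares and demands are hypothetical.


def allocate(link_gb: float, shares: dict[str, int],
             demand_gb: dict[str, float]) -> dict[str, float]:
    """Split link_gb by shares, never giving a type more than it demands."""
    alloc = {name: 0.0 for name in shares}
    active = {n for n in shares if demand_gb.get(n, 0) > 0}
    remaining = link_gb
    while active and remaining > 1e-9:
        total_shares = sum(shares[n] for n in active)
        # Fair slice for each still-hungry traffic type in this round.
        slices = {n: remaining * shares[n] / total_shares for n in active}
        satisfied = {n for n in active if demand_gb[n] - alloc[n] <= slices[n]}
        if not satisfied:
            # Everyone wants more than its slice: hand out slices and stop.
            for n in active:
                alloc[n] += slices[n]
            break
        # Fully serve types whose demand fits, then redistribute the rest.
        for n in satisfied:
            remaining -= demand_gb[n] - alloc[n]
            alloc[n] = demand_gb[n]
        active -= satisfied
    return alloc


shares = {"vm": 100, "vmotion": 50, "nfs": 50}
demand = {"vm": 9.0, "vmotion": 1.0, "nfs": 4.0}
result = allocate(10.0, shares, demand)
print(result)
```

Here vMotion needs only 1Gb, so its unused slice is redistributed to VM and NFS traffic in a 2:1 ratio (6Gb and 3Gb). A fixed hardware split would leave that bandwidth stranded on the lightly used partition.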

“imagine 8 x 10Gb ports on the BL620” – what I'm imagining is the cost of all those 10G Virtual Connect modules to wire up those 3 mezzanine slots (and the cost of the ports I'm attaching them to).

Mike,

I'm still not seeing the benefit there, and you're missing a bit on the Nexus line. Nexus 4000 can push FCoE into an IBM chassis (HP decided not to OEM it). Nexus 5000 alone or combined with 2232 can do FCoE direct to rack mounts, to Nexus 4000, or via pass-through into blades whose vendors chose not to OEM the 4000.

My real question to Kevin is still stated above. Customers aren't going to 'replace external switches' to put in Nexus. They use it to scale out and/or refresh devices.

The reason I'm asking is because the HP split at the chassis is going to require a whole lot more FC and Eth ports without replacing the Edge/Access Layer as Nexus does. I want to know if there is a real architectural benefit I'm missing.

Mike, two things: FCoE standards today support multi-hop; check FC-BB-5. Cisco Nexus 4000 is a current shipping example for IBM blade chassis, and Nexus 7000 support is coming.

Second you're missing the mark on network architecture. SAN is typically designed as Core/Edge and LAN is typically Core/Aggregation/Access (so two tier and three tier.) When you add standard blades (Dell, IBM, HP, Sun) you actually add another LAN tier, and typically another SAN tier, meaning you plug the blade switches into an existing SAN/LAN Edge/Access.

In this architecture if HP is splitting FCoE at the Blade Chassis, the only thing they've converged is the blade switches not the access/edge switches. Nexus design reduces 2xLAN and 2xSAN at the top-of-rack or end-of-row for blades and rack mounts (this isn't FUD or koolaid, it's just what it does.)

Obviously HP made a decision to split FCoE at the chassis. What I'd like to know is: was there technical merit behind the decision, or is it just what they were capable of at square one? It's not an insult; every vendor releases in stages as they develop them.

Joe

Daniel,

“FlexFabric isn't extending FCoE outside of the existing standards” << You might want to blow the dust off that FC-BB-5 standards document and give it another read. All of the tools and specifications for FCoE multi-hop have been there since the standard was ratified in June 2009.

Cheers,

Brad

(Cisco)

Joe,

even cisco classifies integrated blade switches as Access layer

http://www.cisco.com/en/US/prod/collateral/swit…

and that is how most customers i talk to use them. they are not another layer of switching prior to the access layer. for SAN, many customers treat them as edge switches; it depends on the implementation, but many customers connect blade FC switches into directors also.

i see the HP implementation (and others will do similar things, I'm sure) as pushing the functionality that the Nexus 5K delivers into the chassis, making it much more cost effective & letting customers who haven't or won't deploy nexus get the benefits of convergence.

the Nexus 4K to 5K combination IBM has is about the most expensive implementation you could do just to get FCoE to ToR.

i don't know HP's technical capabilities, but i'm sure they could have brought (or even can bring) FCoE out; they chose to focus on the ability to break out native FC at the back, as that is the solution resonating best with customers due to cost, interop, legacy protection…

joe,

to me, switches like HP's do replace the access/edge like Nexus. they do the exact same thing (functionally) as a nexus 5K w/ FIP license does. if customers want to scale out with 5K, they still can, they just don't need to get the FIP license/FC modules. and this gives them the ability to scale out network ports with catalyst or other switches if they don't want to go with Nexus, while still converging at the server port & access/edge.

Mike, there is no more interop or legacy protection in this implementation than with Nexus, and additionally you're locked into FC and Ethernet ports at the chassis level, which limits scalability to multi-hop FCoE. Nexus 5K is modular and allows the ports to be used in several combinations of FC/Eth, including all Ethernet/FCoE with no native FC if it isn't needed now or in the future.

joe,

the legacy protection/interop benefit is for customers who have not adopted or will not adopt nexus; this gives them a path to the benefits of convergence at the server & edge. not everyone is planning on or wants to adopt nexus. this kind of product lets customers with catalyst or other brands of switches (or even nexus without the FIP license) get the benefits of convergence. HP will have to answer whether they can have FCoE transit the switch; i would think that would be possible.

So Mike, what you're saying is it's a better investment to purchase two redundant FCoE-capable FlexFabric switches per (maximum) 16 blade servers than one pair for multiple chassis, and then run fibre cable from those to the aggregation and core layer? You're also talking about the Fibre Channel license, not a FIP license, and it's bundled into the cost of the FC ports (expansion modules).

If that's your thinking then what's the next step for FCoE for these HP customers that don't want Nexus? They either keep FCoE locked into individual chassis or buy an HP/3com mesh FlexFabric FCoE upstream switch and throw away the Fibre ports on each blade chassis when such a device becomes available?

As always, it's just too bloody expensive for what you get. $18,500 for one switch, and you obviously need 2 of them for redundancy. Then you need expensive edge switches that will cost even more.

I can't see why you should spend $37,000 for just one blade chassis just to get rid of a few cables, especially since I can get a 4Gbit Brocade chassis switch for $1,500 now, and an Ethernet pass-through module for $500. That's less than 1/10th of the price, plus I can use regular cheap ProCurve switches and don't need expensive Nexus stuff. I bet most people aren't maxing out 4Gbit FC or a couple of 1Gbit Ethernet ports anyway.
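The price comparison above can be reproduced with the figures quoted in this thread. This is a back-of-the-envelope sketch: the prices are the commenters' numbers rather than official quotes, and it ignores upstream ports, optics and cabling.

```python
# Back-of-the-envelope interconnect cost for one chassis, using prices
# quoted by commenters (not official quotes). Upstream switch ports,
# optics and cables are deliberately left out.


def interconnect_cost(unit_prices: dict[str, int],
                      quantities: dict[str, int]) -> int:
    """Sum unit price x quantity for each interconnect item."""
    return sum(unit_prices[item] * qty for item, qty in quantities.items())


prices = {"flexfabric": 18_500, "brocade_4gb": 1_500, "eth_passthru": 500}

flexfabric_pair = interconnect_cost(prices, {"flexfabric": 2})
budget = interconnect_cost(prices, {"brocade_4gb": 1, "eth_passthru": 1})

print(f"FlexFabric pair: ${flexfabric_pair:,.0f}")
print(f"Budget option:   ${budget:,.0f} "
      f"({budget / flexfabric_pair:.1%} of the FlexFabric pair)")
```

Doubling the budget modules for redundancy brings that side to $4,000, roughly 10.8% of the FlexFabric pair, so the "less than 1/10th" figure holds only for a non-redundant build. At the $14,999 price quoted further down, the FlexFabric pair would be $29,998.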

I'm looking for pictures of the inside of the FlexFabric 10Gb/24 port switch module so I can see who is supplying the Ethernet and FCoE switch chips. Does anyone have a photo?

I don't know what chips #hp is using for the VirtualConnect FlexFabric 10Gb/24 port switch module. It has not been fully announced, so the technical details have not yet been disclosed. Thanks for reading, and appreciate the comment.

I'm not sure what the cost will be for the #hp VirtualConnect FlexFabric module. The value of this offering is having the capability to have as few as 2 connections per blade chassis for all of your I/O needs. Let's be real – blades aren't for everyone, but for those people who could benefit from the values that a blade infrastructure offers, this innovative offering from HP could make a huge difference. Thanks for reading, appreciate your comment.

FlexFabric is an OEM product from QLogic.

http://ir.qlogic.com/phoenix.zhtml?c=85695&p=ir…

It costs $14,999.

“FCoE Port technology” doesn't mean they are the only vendor inside FlexFab. I can’t say anything more than that.

So you're comparing 2 x 4Gb FC + 2 x 1Gb Eth to FlexFabric?

Where is the reasoning in that?

How about this: add up the following costs.

1 x Dual Port 4Gb FC HBA

2 x 4Gb FC Ports on an uplink switch (for example, 1/48th of a Cisco MDS line card + 1/576th of an MDS9513 chassis)

4 x 4Gb SFP+ Transceivers

2 x Fibre Patch Cables

1 Quad Port Ethernet Card

6 x 1Gig Uplink ports (similar to MDS cost analysis above)

6 x Copper Patch Cables

Now multiply this subtotal x 16 for 16 blades

Add the following for the chassis

6 x Eth Pass Through Modules

2 x Fibre Pass Through

Now compare that to:

2 x FlexFab modules

0 x FC/Eth Cards (Included onboard)

4 x 10Gb DAC Cables (Copper Twinax)

16 x 8Gb Fibre SFP+

8 x Fibre Cables

8 x 8Gb MDS Ports (similar analysis as above)

4 x 10Gb Eth ports

And even then, keep in mind the FlexFab solution has 12 cables coming out the back.

The alternate solution has 128.

PER CHASSIS
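The cable counts at the end of the comparison follow directly from the lists. A minimal tally, taking the cable quantities exactly as listed and assuming a fully populated 16-blade chassis:

```python
# Cables leaving one chassis for the two designs listed above.
# Assumption: a fully populated 16-blade chassis; quantities from the lists.
BLADES = 16

# Pass-through design: every blade has its own cables out the back.
per_blade = {"fibre_patch": 2, "copper_patch": 6}
passthrough_cables = BLADES * sum(per_blade.values())

# FlexFabric design: only the module uplinks leave the chassis.
flexfabric_uplinks = {"10gb_dac": 4, "fibre": 8}
flexfabric_cables = sum(flexfabric_uplinks.values())

print(f"pass-through: {passthrough_cables}, FlexFabric: {flexfabric_cables}")
```

The gap grows linearly with blade count on the pass-through side, while the FlexFabric side depends only on how many uplinks are cabled.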

Can you be more specific?

When will FlexFabric support FCoE to the Nexus 5020/5010?

Mike,

I agree with Joe. I don’t get the advantage. Convergence is across the stack, not just in the chassis, and with respect to ROI this is the worst way to go. You’re not saving anything in the rack itself, as every rack still needs 2 IP switches and FC switches: double the number compared with the Nexus 2000 and 5000 series. You admit customers will have 5000s, so isn’t it cheaper to enable the FCoE license and go pass-through in the blades, saving management and rack space and future-proofing the environment, as opposed to ripping and replacing the modules in 12 months to do what you can actually do now?

I’m not anti HP, but this is not a viable step forward compared to other solutions on the market.

Andy