I was once a huge fan of HP’s Virtual Connect Flex-10 10Gb Ethernet Modules, but with the new enhancements in VMware vSphere 5, I don’t think I would recommend them for virtual environments anymore. The ability to divide the two onboard network cards into up to 8 NICs was a great feature, and still is if you have to do physical deployments of servers. I do realize that there is the HP Virtual Connect FlexFabric 10Gb/24-port Module, but I live in the land of iSCSI and NFS, so that is off the table for me.

With vSphere 5.0, VMware improved its Virtual Distributed Switch (VDS) functionality and overall networking ability, so now it’s time to recoup some of that money on the hardware side. The way I see it, most people with a chassis full of blade servers probably already have VMware Enterprise Plus licenses, so they are already entitled to VDS. What you may not have known is that customers with VMware View Premier licenses are also entitled to use VDS. Some of the newest features found in VMware VDS 5 are:

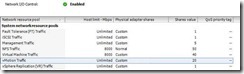

· Support for NetFlow v5

· Port mirroring

· Support for LLDP (not just Cisco!)

· QoS

· Improved priority features for VM traffic

· Network I/O Control (NIOC) for NFS

The last feature is the one that makes me think I don’t need to use HP’s Flex-10 anymore. Network I/O Control (NIOC) allows you to assign shares to the traffic types on your network interfaces, set priorities and limits, and control congestion, all in a dynamic fashion. What I particularly like about NIOC compared to Flex-10 is that it avoids the bandwidth wasted by hard limits. In the VDI world, the workload becomes very bursty. One example can be seen when using vMotion. When I’m performing maintenance work in a virtual environment, it sure would be nice to have more than 2 Gb/s per link to move the desktops off. However, when you have to move 50+ desktops per blade, you have to sit there and wait a while. Of course, with this design you wait, because you wouldn’t want other services to suffer performance problems during the day from a lack of bandwidth.

A typical Flex-10 configuration may break down the onboard NIC (LOM) something like this:

| Bandwidth | vmnic | NIC/Slot | Port | Function |
| --- | --- | --- | --- | --- |
| 500 Mb/s | 0 | LOM | 0A | Management |
| 2 Gb/s | 1 | LOM | 0B | vMotion |
| 3.5 Gb/s | 2 | LOM | 0C | VM Networking |
| 4 Gb/s | 3 | LOM | 0D | Storage (iSCSI/NFS) |
| 500 Mb/s | 4 | LOM | 1A | Management |
| 2 Gb/s | 5 | LOM | 1B | vMotion |
| 3.5 Gb/s | 6 | LOM | 1C | VM Networking |
| 4 Gb/s | 7 | LOM | 1D | Storage (iSCSI/NFS) |
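
To make the hard-limit problem concrete, here is a minimal Python sketch that uses only the per-port numbers from the table above. The names `FLEXNIC_MBPS`, `PORT_CAPACITY_MBPS` and `stranded_mbps` are made up for illustration, and the sketch assumes every active function is already running at its cap.

```python
# Per-port FlexNIC caps from the table above, in Mb/s.
FLEXNIC_MBPS = {
    "Management": 500,
    "vMotion": 2000,
    "VM Networking": 3500,
    "Storage (iSCSI/NFS)": 4000,
}
PORT_CAPACITY_MBPS = 10_000  # one 10Gb LOM port


def stranded_mbps(active):
    """Bandwidth left idle on one port when only `active` functions carry
    traffic, assuming each of them is already at its hard cap."""
    used = sum(FLEXNIC_MBPS[name] for name in active)
    return PORT_CAPACITY_MBPS - used


# The four caps add up to the full port, so nothing is left to borrow from.
print(sum(FLEXNIC_MBPS.values()))
# During a maintenance window where only vMotion is busy:
print(stranded_mbps(["vMotion"]))
```

With only vMotion active, the other three FlexNICs sit idle and their bandwidth cannot be lent out, which is exactly the waiting-around scenario described earlier.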

To get a similar setup with NIOC it may look something like this.

Total shares from above would be: 5 + 50 + 40 + 20 = 115

In this example FT, iSCSI and Replication don’t have to be counted as they will not be used. The shares only kick in if there is contention, and they are only applied if the traffic type exists on the link. I think it would be best practice to limit vMotion traffic, as multiple vMotions kicking off could easily exceed the bandwidth. I think 8000 Mbps would be a reasonable limit with this sort of setup.

Management: 5 shares; (5/115) × 10 Gb/s = 434.78 Mbps

NFS: 50 shares; (50/115) × 10 Gb/s = 4347.83 Mbps

Virtual Machine: 40 shares; (40/115) × 10 Gb/s = 3478.26 Mbps

vMotion: 20 shares; (20/115) × 10 Gb/s = 1739.13 Mbps
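
The arithmetic above can be sketched in a few lines of Python. This is only an illustration of the share math, not how NIOC is implemented: the function name `allocate` and the dictionaries are my own, and as a simplification any bandwidth freed by a limit is not handed back to the other traffic types.

```python
LINK_MBPS = 10_000  # one 10Gb uplink
SHARES = {"Management": 5, "NFS": 50, "Virtual Machine": 40, "vMotion": 20}
LIMITS_MBPS = {"vMotion": 8000}  # the suggested vMotion limit


def allocate(active, shares=SHARES, link=LINK_MBPS, limits=LIMITS_MBPS):
    """Split the link among the traffic types currently present on it,
    in proportion to their shares, then apply any per-type limit."""
    total = sum(shares[t] for t in active)
    result = {}
    for t in active:
        bandwidth = link * shares[t] / total
        cap = limits.get(t)
        result[t] = min(bandwidth, cap) if cap is not None else bandwidth
    return result


# All four traffic types contending: matches the figures above.
for name, mbps in allocate(list(SHARES)).items():
    print(f"{name}: {mbps:.2f} Mbps")

# Only vMotion on the link: it would get everything, so the limit applies.
print(allocate(["vMotion"]))
```

The second call shows the point about shares only counting for traffic that exists on the link: with nothing else active, vMotion is held back only by the 8000 Mbps limit rather than by a fixed 2 Gb/s slice.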

I think the benefits plus the cost savings make it worth moving ahead with a 10Gb design with NIOC. Below are some list prices taken on November 28, 2011. Which one are you going to choose?

Dwayne is the newest Contributor to BladesMadeSimple.com and is the author of IT Blood Pressure (http://itbloodpressure.com/) where he provides tips on Virtual Desktops and gives advice on best practices in the IT industry with a particular focus in Healthcare. In his day job, Dwayne is an Infrastructure Specialist in the Healthcare and Energy Sector in Western Canada.

Great post, Dwayne. Yes, NIOC can be used very successfully to carve up the NICs more dynamically, which is certainly more flexible than Flex-10.

I think the HP 6120G-XG only presents a single 1Gb downlink to the blade, so using this you are limited to 2 x 1Gb with two switches. Flex-10 provides you with 2 x 10GbE.

Julian, it seems you are right about the downlinks. I guess that’s one way of forcing you to buy the Flex-10. No free meal ticket with 10Gb, I guess. I still like the config for VDI, even with Flex-10.

Thanks for your comment

Thanks for sharing Dwayne, good post!

But Julian is right:

“The HP 6120G/XG Blade Switch provides sixteen 1Gb downlinks and four 1Gb copper uplinks, two 1Gb SFP uplinks along with three 10Gb uplinks and a single 10Gb cross-connect”

I have many customers that have deployed FlexFabric with NetIOC. This gives you the best of both worlds.

Advantages:

· Cost savings and flexibility of FlexFabric

o This is doubly important when you have enclosures that mix virtualized and non-virtualized servers

· Simplified cabling

· Dynamic bandwidth management from NetIOC

· Define ESX networking at the cluster level instead of the host level

and more that I can’t think of right now.

Disclaimer: I work for HP, but all opinions are my own

Agree 100%, we shifted away from Flex-10 network bandwidth controls to NetIOC about a year ago. FlexFabric is also a non-starter as we are NFS.

Can’t you just use VAAI to send the vMotion traffic to the storage controller? I think it will give you more performance and will make your environment safer. And HP FlexFabric technology gives you many characteristics that are different from NIC virtualization.
