It should be no surprise that the popularity of accelerators in the datacenter continues to grow. In years past, I’ve written a few blog posts on the GPU options for blade servers, but at that time the options were limited to either small GPUs like the NVIDIA T4 or mezzanine-based GPUs. The solutions available now open up some great options for using GPUs on blade servers. Dell Technologies and Liqid have worked together to deliver multiple GPUs to blade servers using concepts that we won’t see in the market for another few years, so take a few minutes to learn more.

GPU Expansion over PCIe

The original iteration of this design incorporated a large 7U expansion chassis built on PCIe Gen 3.0. The design was innovative, but with Intel’s introduction of PCIe Gen 4.0 support, it needed an update. We now have one.


This design has 5 major components:

- PowerEdge MX7000 chassis from Dell Technologies

- PCIe HBA from Liqid

- 48-Port PCIe Fabric Switch from Liqid

- PCIe Gen 4.0 expansion chassis from Liqid

- Software from Liqid

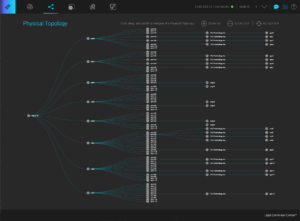

The concept is very interesting: take a PCIe card and put it in one of a blade server’s drive slots. Then connect that PCIe card to a fabric switch that communicates with an expansion chassis providing access to up to 20 devices. Essentially, any blade server can have access to any device. The magic, though, is in the Liqid Command Center software, which orchestrates how the devices are divided up among the blade servers.
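To make the idea concrete, here is a minimal Python sketch of a shared device pool in which any blade can be attached to any free device. It is a toy model under stated assumptions, not the Liqid Command Center API: the class `DevicePool`, its methods, and the device and server names are all hypothetical, and real orchestration also has to deal with PCIe hot-plug, drivers and failures.

```python
from dataclasses import dataclass, field


@dataclass
class DevicePool:
    """Hypothetical model of a fabric-attached device pool (not Liqid's API)."""

    devices: list[str]
    servers: list[str]
    # Maps device name -> server it is currently attached to (absent = free).
    assignments: dict[str, str] = field(default_factory=dict)

    def free_devices(self) -> list[str]:
        """Devices not currently attached to any server, in pool order."""
        return [d for d in self.devices if d not in self.assignments]

    def attach(self, server: str, count: int) -> list[str]:
        """Attach `count` free devices to `server`; any server can reach any device."""
        if server not in self.servers:
            raise ValueError(f"unknown server {server!r}")
        free = self.free_devices()
        if count > len(free):
            raise ValueError(f"only {len(free)} free devices, {count} requested")
        chosen = free[:count]
        for d in chosen:
            self.assignments[d] = server
        return chosen

    def detach_all(self, server: str) -> list[str]:
        """Return every device held by `server` to the free pool."""
        released = [d for d, s in self.assignments.items() if s == server]
        for d in released:
            del self.assignments[d]
        return released

    def devices_of(self, server: str) -> list[str]:
        """Devices currently attached to `server`."""
        return [d for d, s in self.assignments.items() if s == server]


# Hypothetical usage: 20 GPUs shared by 8 blade servers ("sleds").
pool = DevicePool(devices=[f"gpu{i}" for i in range(20)],
                  servers=[f"sled{i}" for i in range(1, 9)])
pool.attach("sled1", 16)   # a large job on one blade
pool.attach("sled2", 4)
pool.detach_all("sled1")   # job finished: its GPUs go back to the pool
pool.attach("sled3", 8)    # another blade picks some of them up
```

The usage lines show the pattern described above: GPUs released by one blade return to the pool and can be handed to another blade without moving any hardware.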

The Components

The Liqid GPU expansion chassis is officially called the “Liqid EX4400 – PCIe Gen 4.0 CDI Device Expansion Chassis”. It is a 4U chassis available in two versions: the EX4420 supports up to 20 Gen4 x8 full-height, full-length (FHFL) single-width devices, and the EX4410 supports up to 10 Gen4 x16 FHFL devices. Note that I didn’t say GPUs, because this chassis is unique: it supports not only GPUs but also FPGAs, NICs and SSD add-in cards. Unlike other GPU options in the market for blade servers, this expansion supports common GPUs like the NVIDIA V100, A100, RTX and T4.
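One way to read the two EX4400 versions is as a lane budget. The short Python calculation below is a sketch: it counts only the device-slot lanes listed above and says nothing about how the chassis is uplinked to the switch. It shows that both models present the same total number of PCIe lanes and simply trade device count for per-device width.

```python
# Device-slot configurations as listed for the EX4400 family:
# model -> (maximum device count, PCIe Gen4 lanes per device)
EX4400_SLOTS = {
    "EX4420": (20, 8),   # up to 20 x8 single-width FHFL devices
    "EX4410": (10, 16),  # up to 10 x16 FHFL devices
}


def slot_lanes(count: int, lanes_per_device: int) -> int:
    """Total PCIe lanes presented by the device slots of one configuration."""
    return count * lanes_per_device


for model, (count, width) in EX4400_SLOTS.items():
    print(f"{model}: {count} devices x{width} = {slot_lanes(count, width)} lanes")
```

Both lines report 160 lanes, so choosing between the versions is a choice between more narrow devices or fewer wide ones.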

The 48-port PCIe switch from Liqid is an essential part of the solution. It provides connectivity between the PCIe HBAs and the PCIe expansion chassis at a bandwidth of 768GB/s (full duplex).
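The 768GB/s figure is consistent with simple PCIe Gen 4.0 arithmetic, under one assumption that is not stated here: that each of the 48 ports is x4. PCIe Gen 4.0 signals at 16 GT/s per lane, which is 2 GB/s per lane per direction before the 128b/130b line encoding is taken into account. A minimal Python sketch of the calculation:

```python
GEN4_GT_PER_S = 16.0   # PCIe 4.0 signalling rate per lane (GT/s)
ENCODING = 128 / 130   # PCIe 3.0 and later use 128b/130b line encoding


def lane_gbytes_per_s(gt_per_s: float, efficiency: float = 1.0) -> float:
    """Per-lane, per-direction bandwidth in GB/s (one transfer = one bit per lane)."""
    return gt_per_s * efficiency / 8


def switch_full_duplex_gbytes(ports: int, lanes_per_port: int, per_lane: float) -> float:
    """Aggregate bandwidth counting both directions of every lane."""
    return ports * lanes_per_port * per_lane * 2


PORTS = 48
LANES_PER_PORT = 4  # ASSUMPTION: x4 ports; the port width is not given in the article

raw = switch_full_duplex_gbytes(PORTS, LANES_PER_PORT, lane_gbytes_per_s(GEN4_GT_PER_S))
effective = switch_full_duplex_gbytes(
    PORTS, LANES_PER_PORT, lane_gbytes_per_s(GEN4_GT_PER_S, ENCODING)
)
print(f"raw: {raw:.0f} GB/s, after 128b/130b encoding: {effective:.1f} GB/s")
```

Under that assumption the headline number matches the raw signalling rate (768 GB/s); usable bandwidth after encoding, and in practice after protocol overhead, is somewhat lower.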

The last piece of the solution is a PCIe HBA (LQD1416) from Liqid. This adapter sits in one of the hot-plug drive slots on the PowerEdge MX blade server. Each blade server that needs access to the Liqid expansion chassis needs one of these adapters, which connects through the fabric switch to the EX4400 expansion chassis.

Once the PCIe devices are connected to the MX7000, the Liqid Matrix software provides the secret sauce that allows dynamic allocation of GPUs to the PowerEdge MX blade servers.


To me, the major benefit of this solution compared to what has been in the market for GPUs on blade servers is flexibility. It can support 20 GPUs spread across 8 servers, all 20 GPUs on a single server, or any combination in between.
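As a rough illustration of the "any combination in between" point, the Python sketch below splits a pool of GPUs across some number of blades as evenly as possible and checks that a hand-written plan fits the pool. The even-split policy, the function names and the defaults (20 GPUs and 8 blades, taken from the numbers above) are assumptions for illustration only; they are not how Liqid Matrix actually assigns devices.

```python
def distribute(gpus: int, servers: int) -> list[int]:
    """Split `gpus` across `servers` as evenly as possible, larger shares first."""
    if gpus < 0 or servers < 1:
        raise ValueError("need gpus >= 0 and servers >= 1")
    base, extra = divmod(gpus, servers)
    # The first `extra` servers get one additional GPU each.
    return [base + 1 if i < extra else base for i in range(servers)]


def is_valid_plan(plan: list[int], pool_size: int = 20, max_servers: int = 8) -> bool:
    """True if the plan uses at most `max_servers` blades and at most `pool_size` GPUs."""
    return (
        len(plan) <= max_servers
        and all(n >= 0 for n in plan)
        and sum(plan) <= pool_size
    )


# The two extremes from the text, plus one uneven mix in between.
for plan in (distribute(20, 8), distribute(20, 1), [8, 4, 4, 2, 1, 1]):
    print(plan, is_valid_plan(plan))
```

Spreading 20 GPUs over 8 blades this way gives four blades three GPUs and four blades two, while a single blade can take all 20; a real orchestrator could apply any other policy as long as the plan fits the pool.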

Conclusion

I think this partnership between Dell Technologies and Liqid is a good example of what future datacenters may look like. This concept of “kinetic expansion” could be ideal for these use cases:

- Bare Metal Cloud as a Service

- Artificial Intelligence

- Media & Entertainment (e.g., supporting AI engineering during the day and video rendering at night)

- Data Center Orchestration with Kubernetes, OpenStack, Slurm, etc.

Kevin Houston is the founder of BladesMadeSimple.com. With over 24 years of experience in the x86 server marketplace, Kevin has a vast array of competitive x86 server knowledge and certifications, as well as an in-depth understanding of VMware virtualization. He has worked at Dell Technologies since August 2011 and is a Principal Engineer supporting the East Enterprise Region. He is also a CTO Ambassador for the Office of the CTO at Dell Technologies. #IWork4Dell


Disclaimer: The views presented in this blog are personal views and may or may not reflect any of the contributors’ employer’s positions. Furthermore, the content is not reviewed, approved or published by any employer. No compensation has been provided for any part of this blog.
