I’ve recently posted some rumours about IBM’s upcoming announcements in their blade server line. Now it is time to let you know some rumours I’m hearing about HP. NOTE: this is purely speculation. I have no definitive information from HP, so this may be false info. That being said, here we go:

Rumour #1: Integration of “CNA” like devices on the motherboard.

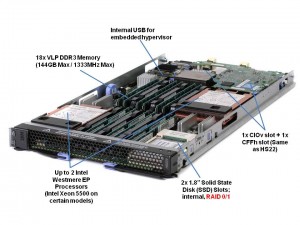

As you may be aware, with the introduction of the “G6”, or Generation 6, of HP’s blade servers, HP added “FlexNICs” onto the servers’ motherboards instead of the 2 x 1Gb NICs that are standard on most of the competition’s blades. FlexNICs allow the user to carve up a 10Gb NIC into 4 virtual NICs when using the Flex-10 Modules inside the chassis. (For a detailed description of Flex-10 technology, check out this HP video.) The idea behind Flex-10 is that 10Gb connectivity lets you do more with fewer NICs.
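To make the “carving” idea concrete, here is a minimal Python sketch of splitting one 10Gb port into up to 4 virtual NICs. This is not HP’s tooling or API. The names `FlexNIC` and `carve_port`, the traffic labels, and the rule that the virtual NICs together cannot exceed the 10Gb of the physical port are all my own assumptions for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List

PORT_CAPACITY_GB = 10.0  # one physical 10Gb port
MAX_FLEXNICS = 4         # a 10Gb NIC is carved into at most 4 virtual NICs


@dataclass
class FlexNIC:
    name: str            # hypothetical label for the virtual NIC
    bandwidth_gb: float  # share of the 10Gb port given to this virtual NIC


def carve_port(allocations: Dict[str, float]) -> List[FlexNIC]:
    """Split one 10Gb port into virtual NICs (illustrative rules only)."""
    if not 1 <= len(allocations) <= MAX_FLEXNICS:
        raise ValueError("a port holds between 1 and 4 virtual NICs")
    if any(bw <= 0 for bw in allocations.values()):
        raise ValueError("every virtual NIC needs a positive bandwidth")
    # Assumption: the shares together cannot exceed the physical port speed.
    if sum(allocations.values()) > PORT_CAPACITY_GB + 1e-9:
        raise ValueError("allocations exceed the 10Gb port capacity")
    return [FlexNIC(name, bw) for name, bw in allocations.items()]


# Example: four traffic types sharing a single 10Gb port.
nics = carve_port(
    {"management": 0.5, "vmotion": 2.0, "production": 5.5, "backup": 2.0}
)
for nic in nics:
    print(f"{nic.name}: {nic.bandwidth_gb}Gb")
```

The point of the sketch is simply that one physical port does the job that would otherwise take four separate 1Gb NICs, with the bandwidth split chosen by the admin.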

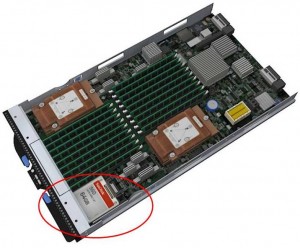

SO, what’s next? Rumour has it that the “G7” servers, expected to be announced on March 16, will have an integrated CNA, or Converged Network Adapter. With a CNA on the motherboard, both the Ethernet and the Fibre traffic will travel over a single integrated device. This is a VERY cool idea, because it could lead to a blade server that eliminates the additional daughter card or mezzanine expansion slots, freeing up valuable real estate for newer Intel CPU architecture.

Rumour #2: Next generation Flex-10 Modules will separate Fibre and Network traffic.

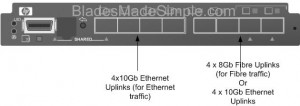

Today, HP’s Flex-10 ONLY handles Ethernet traffic. There is no support for FCoE (Fibre Channel over Ethernet), so if you have a Fibre network, you’ll also have to add a Fibre Switch into your BladeSystem chassis design. If HP does put a CNA that carries both Fibre and Ethernet traffic onto their next generation blade servers, wouldn’t it make sense that there would need to be a module in the BladeSystem chassis that lets both the storage and the Ethernet traffic exit?

I’m hearing that a new version of the Flex-10 Module is coming, very soon, that will allow the Ethernet AND the Fibre traffic to exit out of the switch. (The image to the right shows what it could look like.) The Next Generation Flex-10 switch would have 8 uplink ports: 4 would go to the Ethernet fabric, and the other 4 could either be dedicated to a Fibre fabric OR be used as 4 additional ports to the Ethernet fabric.
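The rumoured port split is easier to picture as a tiny model. The Python sketch below only encodes what I described above (8 uplinks, 4 always Ethernet, the other 4 switched as a group). The names `Fabric` and `uplink_layout` are made up for illustration, and nothing here reflects a confirmed HP product or interface.

```python
from enum import Enum
from typing import List


class Fabric(Enum):
    ETHERNET = "Ethernet"
    FIBRE = "Fibre"


TOTAL_UPLINKS = 8           # rumoured uplink count on the new module
FIXED_ETHERNET_UPLINKS = 4  # rumoured to always go to the Ethernet fabric


def uplink_layout(flexible_ports_as_fibre: bool) -> List[Fabric]:
    """Return the fabric for each of the 8 uplinks, per the rumour."""
    fixed = [Fabric.ETHERNET] * FIXED_ETHERNET_UPLINKS
    # The remaining 4 ports are assumed to switch together, not one by one.
    flexible_fabric = Fabric.FIBRE if flexible_ports_as_fibre else Fabric.ETHERNET
    flexible = [flexible_fabric] * (TOTAL_UPLINKS - FIXED_ETHERNET_UPLINKS)
    return fixed + flexible


# Print both rumoured configurations, port by port.
for as_fibre in (True, False):
    layout = uplink_layout(as_fibre)
    ports = ", ".join(f"{i + 1}:{f.value}" for i, f in enumerate(layout))
    print(f"flexible ports as Fibre={as_fibre} -> {ports}")
```

Either way, the Ethernet and Fibre traffic would be split at the chassis rather than at an upstream switch.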

If this rumour is accurate, it could shake things up in the blade server world. Cisco UCS uses 10Gb Data Center Ethernet (Ethernet plus FCoE). IBM BladeCenter can do either a 10Gb plus Fibre switch fabric (like HP) or a 10Gb Enhanced Ethernet plus FCoE (like Cisco). However, no one currently has a device that splits the Ethernet and Fibre traffic at the blade chassis. If this rumour is true, then we should see it announced around the same time as the G7 blade server (March 16).

That’s all for now. As I come across more rumours, or information about new announcements, I’ll let you know.