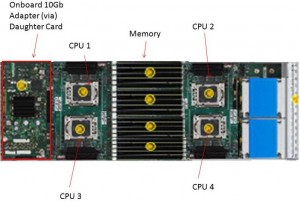

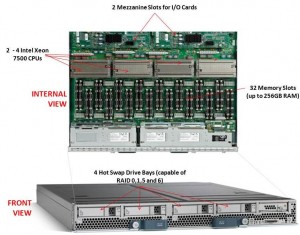

Cisco recently announced their first blade offering with the Intel Xeon 7500 processor, known as the “Cisco UCS B440-M1 High-Performance Blade Server.” This new blade is a full-width blade that offers 2 – 4 Xeon 7500 processors and 32 memory slots, for up to 256GB RAM, as well as 4 hot-swap drive bays. Since the server is a full-width blade, it will have the capability to handle 2 dual-port mezzanine cards for up to 40 Gbps I/O per blade.

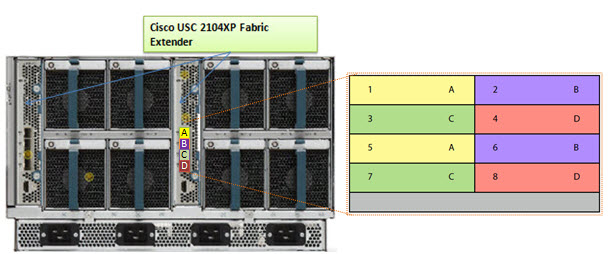

Each Cisco UCS 5108 Blade Server Chassis can house up to four B440 M1 servers (maximum 160 per Unified Computing System).

How Does It Compare to the Competition?

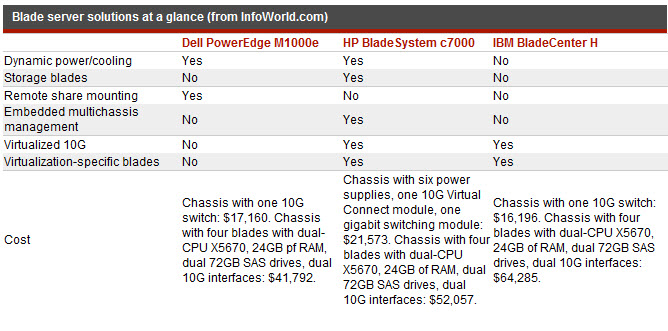

Since I like to talk about all of the major blade server vendors, I thought I’d take a look at how the new Cisco B440 M1 compares to IBM and Dell. (HP has not yet announced their Intel Xeon 7500 offering.)

Processor Offering

Both Cisco and Dell offer models with 2 – 4 Xeon 7500 CPUs as standard. Each vendor varies in processor speeds: Dell has 9 processor speed offerings, Cisco hasn’t released its speeds, and IBM’s BladeCenter HX5 blade server will have 5 processor speed offerings initially. Of the 3 vendors’ blades, however, IBM’s is the only one designed to scale from 2 CPUs to 4 CPUs by connecting 2 x HX5 blade servers. Along with this comes IBM’s “FlexNode” technology, which lets users split the 4 processor blade system back into 2 x 2 processor systems at specific points during the day. Although not announced, and purely my speculation, IBM’s design also leads to a possible future capability of connecting 4 x 2 processor HX5’s for an 8-way design. Since all of the vendors offer up to 4 x Xeon 7500’s, scaling flexibility is the differentiator, so I’m going to give the advantage in this category to IBM. WINNER: IBM

Memory Capacity

Both IBM and Cisco are offering 32 DIMM slots with their blade solutions; however, they are not certifying the use of 16GB DIMMs, only 4GB and 8GB DIMMs, so their offerings only scale to 256GB of RAM. Dell claims to offer 512GB of memory capacity on the PowerEdge 11G M910 blade server, but that requires 16GB DIMMs. Realistically, I think the M910 would only be used with 8GB DIMMs, so Dell’s design would equal IBM’s and Cisco’s. I’m not sure who has the money to buy 16GB DIMMs, but if they do – WINNER: Dell (or a TIE)
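The capacity figures above are simply DIMM slots multiplied by the largest DIMM size in use. Here is a quick sketch of that arithmetic in Python. The function name is my own, and the 32-slot count for the M910 is an assumption inferred from Dell's 512GB claim with 16GB DIMMs.

```python
def max_memory_gb(dimm_slots: int, dimm_size_gb: int) -> int:
    """Maximum RAM in GB when every slot holds a DIMM of the given size."""
    return dimm_slots * dimm_size_gb


# IBM and Cisco: 32 slots, 8GB is the largest certified DIMM.
ibm_cisco_gb = max_memory_gb(32, 8)

# Dell M910: 32 slots is an assumption (512GB / 16GB per DIMM).
dell_16gb = max_memory_gb(32, 16)
dell_8gb = max_memory_gb(32, 8)

print(f"IBM / Cisco with 8GB DIMMs: {ibm_cisco_gb}GB")
print(f"Dell with 16GB DIMMs:       {dell_16gb}GB")
print(f"Dell with 8GB DIMMs:        {dell_8gb}GB")
```

With 8GB DIMMs all three land on the same number, which is why I'm calling this one a possible tie.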

Server Density

As previously mentioned, Cisco’s B440-M1 blade server is a “full-width” blade, so 4 will fit into a 6U high UCS5100 chassis. Theoretically, you could fit 7 x UCS5100 blade chassis into a rack, which would equal a total of 28 x B440-M1’s per 42U rack.

Overall, Cisco’s new offering is a nice addition to their existing blade portfolio. While IBM has some interesting innovation in CPU scalability and Dell appears to have the overall advantage in server density, Cisco leads on the management front.

Dell’s PowerEdge 11G M910 blade server is a “full-height” blade, so 8 will fit into a 10U high M1000e chassis. Since 4 x M1000e chassis fit into a 42U rack, 32 x Dell PowerEdge M910 blade servers should fit into a 42U rack.

IBM’s BladeCenter HX5 blade server is a single slot blade server; however, a 4 processor configuration takes up 2 server slots. The BladeCenter H has 14 server slots, so the IBM solution can hold 7 x 4 processor HX5 blade servers per chassis. Since the chassis is 9U high, you can only fit 4 into a 42U rack, so you would be able to fit a total of 28 IBM HX5 (4 processor) servers into a 42U rack.

WINNER: Dell
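For anyone who wants to check my math, the density numbers above come from whole chassis per rack multiplied by 4 processor servers per chassis. This is a rough sketch in Python using only the figures quoted in this post. The function name is mine, and it assumes the entire 42U is available for chassis, with nothing reserved for switches or power distribution.

```python
def servers_per_rack(rack_u: int, chassis_u: int, servers_per_chassis: int) -> int:
    """Count 4-processor servers in a rack, using whole chassis only."""
    chassis_per_rack = rack_u // chassis_u  # partial chassis don't count
    return chassis_per_rack * servers_per_chassis


RACK_U = 42

# (chassis height in U, 4-processor servers per chassis), as quoted in this post
vendors = {
    "Cisco B440-M1": (6, 4),
    "Dell M910": (10, 8),
    "IBM HX5 (4 processor)": (9, 7),  # 14 slots, 2 slots per 4-processor server
}

density = {
    name: servers_per_rack(RACK_U, chassis_u, per_chassis)
    for name, (chassis_u, per_chassis) in vendors.items()
}

for name, count in density.items():
    print(f"{name}: {count} servers per {RACK_U}U rack")
```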

Management

The final category I’ll look at is management. Both Dell and IBM have management controllers built into their chassis, so managing many chassis, as in the maximum servers-per-rack scenarios above, could add some burden. Cisco’s design, however, allows management to be performed through the UCS 6100 Fabric Interconnect modules. In fact, up to 40 chassis could be managed by 1 pair of 6100’s. There are additional features this design offers, but for the sake of this discussion, I’m calling WINNER: Cisco.
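To put the management difference in rough numbers, here is a small Python sketch that counts how many chassis it takes to hold a given number of 4 processor servers. It treats each Dell or IBM chassis as one management point and each pair of 6100's as one management point for up to 40 Cisco chassis. That is my simplification for illustration, not a vendor figure, and the function names are hypothetical.

```python
import math


def chassis_needed(servers: int, servers_per_chassis: int) -> int:
    """Chassis required to hold the given number of servers."""
    return math.ceil(servers / servers_per_chassis)


def ucs_management_points(chassis: int, chassis_per_pair: int = 40) -> int:
    """Pairs of 6100 Fabric Interconnects needed (40 chassis per pair, per this post)."""
    return math.ceil(chassis / chassis_per_pair)


servers = 160  # the maximum B440 M1 count per Unified Computing System

cisco_chassis = chassis_needed(servers, 4)
dell_chassis = chassis_needed(servers, 8)
ibm_chassis = chassis_needed(servers, 7)

# Simplifying assumption: one management point per Dell or IBM chassis.
print(f"Cisco: {cisco_chassis} chassis, {ucs_management_points(cisco_chassis)} management point(s)")
print(f"Dell:  {dell_chassis} chassis, {dell_chassis} management point(s)")
print(f"IBM:   {ibm_chassis} chassis, {ibm_chassis} management point(s)")
```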

Cisco’s UCS B440 M1 is expected to ship in the June time frame. Pricing is not yet available. For more information, please visit Cisco’s UCS web site at http://www.cisco.com/en/US/products/ps10921/index.html.