Let’s face it. Virtualization is everywhere.

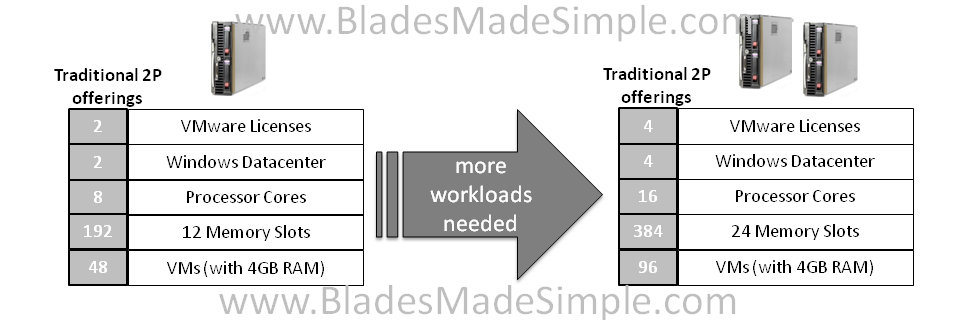

Odds are there is something virtualized in your data center. If not, there soon will be. As more workloads become virtualized, chances are you are going to run out of “capacity” on your virtualization host. When a host’s capacity is exhausted, 99% of the time it is because the host ran out of memory, not CPU. Typically you would have to add another ESX host server when you run out of capacity. When you do this, you are adding more hardware cost AND more virtualization licensing costs. But what if you could simply add memory when you need it instead of buying more hardware? Now you can with Dell’s FlexMem Bridge.


Background

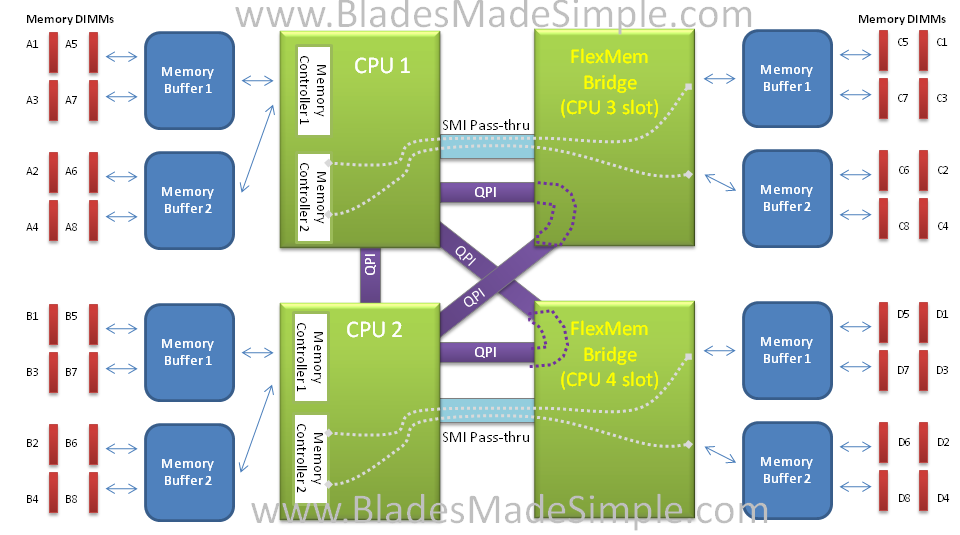

You may recall that I mentioned the FlexMem Bridge technology in a previous post, but I don’t think I did it justice. Before I describe what the FlexMem Bridge technology is, let me provide some background. With the Intel Xeon 7500 CPU (and in fact with all Intel Nehalem architectures), the memory is controlled by a memory controller located on the CPU. Therefore you had to have a CPU in place to access the associated memory DIMMs…up until now. Dell’s innovative approach removes the need to have a CPU in order to access the memory.

Introducing Dell FlexMem Bridge

Dell’s FlexMem Bridge sits in CPU sockets #3 and #4. It connects a memory controller from CPU 1 to the memory DIMMs associated with CPU socket #3, and a memory controller from CPU 2 to the memory DIMMs associated with CPU socket #4.

The FlexMem Bridge does two things:

- It extends the Scalable Memory Interconnects (SMI) from CPU 1 and CPU 2 to the memory subsystem of CPU 3 and CPU 4.

- It reroutes and terminates the second QuickPath Interconnect (QPI) inter-processor communication links, which would otherwise be disconnected in a 2 CPU configuration, to provide optimal performance.

Sometimes it’s easier to view pictures than read descriptions, so take a look at the picture below for a diagram on how this works.

(A special thanks to Mike Roberts from Dell for assistance with the above info.)

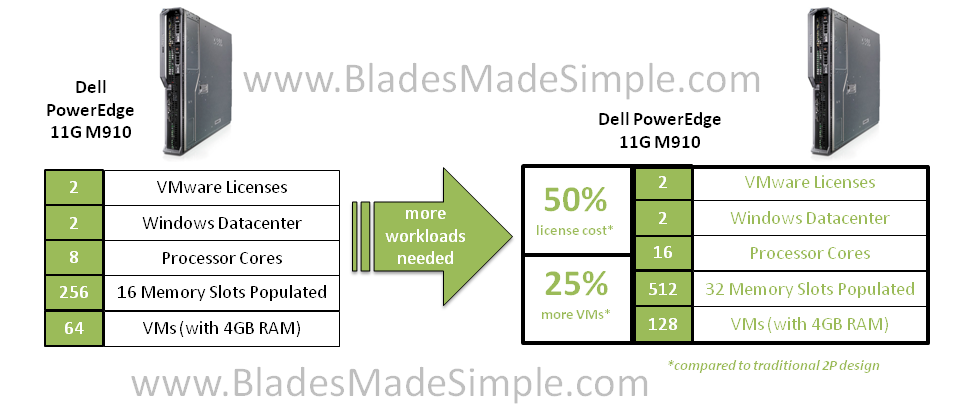

Saving 50% on Virtualization Licensing

So how does this technology from Dell help you save money on virtualization licenses? Simple – with Dell’s FlexMem Bridge technology, you only have to add memory, not more servers, when you need more capacity for VMs. When you add only memory, you’re not increasing your CPU count, therefore your virtualization licensing stays the same. No more buying extra servers just for the memory and no more buying more virtualization licenses. In the future, if you find you have run out of CPU resources for your VMs, you can remove the FlexMem Bridges and replace them with CPUs (for models with the Intel Xeon 7500 CPU only).


Dell FlexMem Bridge is available in the Dell PowerEdge 11G R810, R910 and M910 servers running the Intel Xeon 7500 and 6500 CPUs.
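To make the licensing argument above concrete, here is a minimal Python sketch. It assumes licensing is charged per populated CPU socket and that the alternative to adding memory is buying a second 2 CPU host; both the function name and the scenario are illustrative assumptions, not vendor pricing.

```python
# Illustrative only: assumes one virtualization license per populated CPU socket.
def licensed_sockets(servers: int, cpus_per_server: int) -> int:
    """Return the number of per-socket licenses needed."""
    return servers * cpus_per_server

# Scale-out: buy a second 2 CPU host just to get more memory.
scale_out = licensed_sockets(servers=2, cpus_per_server=2)

# FlexMem Bridge: one host, 2 CPUs, bridges (not CPUs) in sockets 3 and 4.
flexmem = licensed_sockets(servers=1, cpus_per_server=2)

saving = 1 - flexmem / scale_out
print(f"{scale_out} licenses vs {flexmem} licenses: {saving:.0%} saved")
```

Under those assumptions the license count drops from 4 to 2, which is where the 50% figure in the heading comes from. A licensing model that also charges for memory would change the result.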


If the limiting factor is memory and you want to save some money, why not use an extended memory blade like the Cisco B250 M2 with the new EP processors and also save the cost of using the far more expensive EX processors?

<YAWWWN> OK, so Dell managed to get 2X DIMM per socket when Cisco has already given customers 4X DIMM per socket on the B250 blade.

The #Cisco B250 uses slower Westmere CPUs. Also, if concerned about server density you can only get 28 servers in a rack (theoretically), vs 32 #dell servers. Thanks for the comment.

Ahh, a typical #cisco response ;) Yes, Cisco can get more RAM on the B250 blade, however at 4 servers per chassis, the theoretical maximum server density is 28 servers per 42u rack, as opposed to 32 Dell M910 servers. Also – don't miss the point that the Dell servers are also Intel Xeon 7500 boxes, whereas, as you know, the Cisco B250 uses the Westmere EP CPU. As a bonus, you can grow the Dell servers into 4 CPU boxes. Don't get me wrong, Cisco's management message in a large server environment is better, but when it comes to memory and CPU scalability, Dell has the edge right now. Thanks for reading, Brad. Always good to hear from you.
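The density figures quoted above can be reproduced with a short Python sketch. The comment only states 4 servers per Cisco chassis and the 28 vs 32 totals; the chassis heights (6U and 10U) and the 8 blades per Dell chassis used here are assumptions chosen to match those totals.

```python
RACK_U = 42  # rack height from the comment above

def servers_per_rack(chassis_u: int, servers_per_chassis: int,
                     rack_u: int = RACK_U) -> int:
    """Whole chassis that fit in the rack, times servers per chassis."""
    chassis = rack_u // chassis_u
    return chassis * servers_per_chassis

# Assumed: 6U chassis holding 4 full-width B250 blades.
cisco_b250 = servers_per_rack(chassis_u=6, servers_per_chassis=4)

# Assumed: 10U chassis holding 8 full-height M910 blades.
dell_m910 = servers_per_rack(chassis_u=10, servers_per_chassis=8)

print(f"Cisco B250: {cisco_b250} per rack, Dell M910: {dell_m910} per rack")
```

This is a theoretical maximum only; it leaves no rack space for switches, PDUs or storage.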


James, good point, but there are a lot of reasons why products like M910/R810 based on Nehalem EX are better choices than the B250:

1) The memory scalability of EX based products is significantly higher. Nehalem EP based systems, no matter what memory extender technology you use, are capped at 384GB. M910 can do 512GB today and will support 1TB by end of this year.

2) EX gives you up to 8 core processors while EP is capped at 6 cores (and 1/2 the cache). A 2 socket EX based server will outperform any EP based system in database & virtualization benchmarks while providing more scalability.

3) EX based systems have substantial RAS advantages that are critical when you are consolidating or performing high end workloads. Many of these are native to the Intel processor & chipset and are ONLY available in EX (e.g. Machine Check Architecture Recovery helps identify & correct previously uncorrectable errors without bringing the system down). There are a host of others unique to EX here:

http://www.intel.com/Assets/en_US/PDF/whitepape…

Some of them, like Failsafe Embedded Hypervisor (essentially mirroring for the embedded hypervisor), are Dell unique.

4) Price out an M910 vs. B250 (sure, no one buys at list…). You are going to see M910 in a 2S configuration at nearly 1/2 the price of B250 with 192GB memory. Then try it at 384GB and it's even more startling. Note that the 6500 series processors on EX systems are priced to go after the high end 2S space.

so, M910 gives you better performance, significantly better RAS features, at a significantly lower price than B250.

Cisco targeted a real need (high memory 2S server optimized for consolidation) with the B250, they just picked the wrong architecture to build it around. They spent significant $$ (that customers have to pay for) developing memory extender ASICs for EP, when they could have done something much simpler based around EX and provided a much more robust platform.

just my 2 cents, mike

brad,

see my note above. EX products like M910 can scale much higher in memory, perform better, cost less when you scale memory over 96GB, and have superior RAS features vs. EP. EX is clearly the right architecture to base a high end 2S platform on (EP is ideal for mainstream 2S servers). Cisco targeted the right need, but they missed on picking the right platform to base it on.


Great points, Mike. The #Intel RAS features of the Xeon 7500 are something that doesn't get enough attention, so I appreciate you mentioning them as another benefit. As always, thanks for reading!


You need to be careful about memory speed, as it clocks down quickly, and always question performance, because the technology only uses 1 of the memory controllers per processor.

You are right, servers have become very memory constrained, especially in virtualized environments. However, all Nehalem EX processors are capable of addressing 16 DIMMs per processor, so why add the FlexMem Bridge for a 2 processor, 32 DIMM configuration? This will only get you to the industry standard, plus if you add the 3rd and 4th processor you are still limited to 32 DIMMs.

Have you seen IBM's eX5 systems? With their MAX5 memory expansion they can take their 4-socket x3850 X5 up to 96 DIMMs/1.5TB, and their upcoming 2-socket x3690 X5 and HX5 to 64 DIMMs/1TB and 40 DIMMs/320GB, respectively.


RE: the #ibm HX5 blade server, I was one of the first to blog about it (https://bladesmadesimple.com/2010/03/technical-d…). The IBM HX5 blade server is a good offering, however you are not able to put as many servers into a 42u rack as you can with the Dell M910 (28 vs 32), so those users with a need for density will run into problems. Let's also face the reality that the MAX5 expansion adds additional overhead in capital costs that is not seen in the Dell M910. When you combine 2 x HX5's for a 4 processor system, you are nearly doubling the costs when compared to the Dell M910. That being said, I try to be fair in my posts, so look for a more detailed comparison post between the technologies soon. Thanks for reading! Please spread the word, I'm always looking for new readers.

Thanks for the response, Kevin. I will look forward to the comparison!


Just watch out for the 256GB memory limitation on all the VMware licenses except for Enterprise Plus.

When using #Dell FlexMem Bridge, #IBM MAX5 or #cisco 's B250 blade server for large memory with #VMware, licensing is something that needs to be reviewed – you are right! Great point! Thanks for reading and for the comment.


Michelle,

you could do a 32 dimm 2-way only blade, but the beauty of FlexMem Bridge is that you can also easily make a 4-way out of the same blade. and we do it with much less cost and space overhead than IBM. we can also support 16GB dimms while IBM cannot because they had to use special Very Low Profile memory (VLP is also more expensive). so even with M910's 32 dimm slots, we support 512GB today and will be at 1TB in the 2nd half of this year.
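The capacity figures in this comment are simple slot arithmetic, sketched below in Python. The 32 slots and 16GB DIMMs come from the comment; the 32GB DIMM size behind the 1TB figure is an assumption, and the 256GB threshold is the VMware licensing limit as stated by an earlier commenter, not a verified figure.

```python
def capacity_gb(dimm_slots: int, dimm_gb: int) -> int:
    """Total memory when every slot holds a DIMM of the same size."""
    return dimm_slots * dimm_gb

M910_SLOTS = 32  # from the comment above

m910_today = capacity_gb(M910_SLOTS, 16)   # 16GB DIMMs, as stated above
m910_future = capacity_gb(M910_SLOTS, 32)  # assumed 32GB DIMMs for the 1TB claim

# Limit quoted by an earlier commenter for editions below Enterprise Plus.
LICENSE_LIMIT_GB = 256
needs_top_edition = m910_today > LICENSE_LIMIT_GB

print(m910_today, m910_future, needs_top_edition)
```

With 8GB DIMMs the same 32 slots give 256GB, which sits exactly at the limit mentioned earlier in the thread.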

mike

Glenn,

with the EX architecture, memory doesnt drop down in speed when you add more DIMMs, that is why the buffers are there

mike

I certainly would not give Dell the edge.

The FlexMem Bridge technology has a huge flaw…it cuts Nehalem-EX memory bandwidth in half, by only allowing use of one of the two QPI controllers in each socket, and, even more problematic, it increases latency. It also leads to situations where you pay, in power, density, and performance, for sockets you don't need. With HX5, you use logical building blocks to build up. If you only need 2 sockets, you've got 56 blades in a rack, plus an additional 6U for switches, storage, PDUs, etc. With M910, you're stuck at 32 blades per rack, no matter what. And, you're paying more in density, power and performance for unneeded sockets.

Kevin great post and easy to understand description of what Dell is doing. It overall looks great. I'd like to point out a few things for clarity (not comparison.)

You argue in your post that VMware servers are tapped on memory not CPU, then the arguments in the comments for or against the Cisco B250 switch to CPU, and state that EX provides better processing and more cores. Both are true, but if I'm picking an architecture based on memory constraints then processing power is not my issue.

The second point is in regards to Mike Roberts' pricing comparison between Dell and Cisco. That pricing is based on 'List Price' which is not an accurate comparison. Server and network margins are very different, so Cisco's list price will always look higher even though the price the customer ends up paying will be much more similar. When comparing Cisco servers, if you want an accurate comparison use Cisco's MSRP vs. competitive List. This isn't a numbers trick, it's due to pricing complexity in the data center, and variance between hardware types.

The last thing I'd point out is that the B250 memory architecture is going to provide better memory access speeds than the Dell architecture. Dell will be running memory at 833MHz to achieve 192+ GB and accessing the QPI for 3/4 of the memory reads/writes, which will incur 12 nanoseconds each time. Cisco's architecture runs 384GB at 1066MHz and will only access the QPI for 1/2 of the memory; the other half will experience < 6 nanoseconds additional latency due to the architecture.

In any event great post. It sounds like Dell is definitely bringing some solid innovation to the table and it gives users great options overall when trying to maximize the use of a 2 socket server.

RE: the #dell FlexMem Bridge – I cannot address the performance hits of only using 1 memory controller, however your comments on the HX5 are a bit inaccurate. If you only need 2 x Xeon 6500 CPUs on a blade with 16 DIMM slots, then you can put 56 blade servers in a rack vs 32 of Dell's M910, however that would be for workloads that need Xeon 6500 CPU performance only. When that workload stretches to needing more memory, then you have to add the MAX5 memory expansion, which cuts your blade density in half to 28 blade servers in a 42u rack. IBM would win if you simply have a 2 CPU Intel 6500/7500 need. Great points. Look for a comparison between the two offerings very soon here. Thanks for all of your comments! Your comments and thoughts really help everyone.

Great comments. I don't know if the #dell FlexMem bridge only runs at 833Mhz vs #Cisco 's 1066Mhz. Perhaps our Dell friends can clarify that. Anyway you do a great job clarifying. I appreciate the comments and hope you continue to provide your thoughts on future posts. Thanks for reading!

Joe,

thanks for the comments:

1) most virtualized servers are more memory constrained than processor constrained, that is true, EX just gives you more headroom. its just a more scalable architecture for heavy duty, mission critical consolidation

2) whether pricing at list, MSRP, whatever you want, i think you'll see M910 compares really well to B250 while providing a more scalable, higher performance, and more resilient architecture

3) our memory runs at 1066 (depends on the CPU you select) but EX is not like EP where memory steps down in speed. in a 2S configuration, 16 dimms are connected to 1 processor and 16 are on another, so dont see how you have to go over the QPI bus more in our design in a 2S configuration. There is also additional latency added through Cisco's Catalina memory extender ASICs that come into play as well.

bottom line is still that in a 2S configuration, M910 (or any 2S EX system) will outperform a high memory EP product like B250, provides significantly more memory scalability, will be priced competitively (likely significantly less), and provide superior RAS.

Mike

Proteus,

1) in a 2S configuration, we have 2 QPI links between processors (its in kevin's drawing above – 1 is a direct connection and 1 goes through the FlexMem Bridge).

2) not sure where you get a latency adder. FlexMem Bridge is not an active controller, it just connects traces/wires. it is not like cisco's catalina or IBM's node controller in terms of adding latency.

3) the benefit of HX5 is in its granularity, but the base 2S/16 dimm building block isnt very interesting to customers. a 2S/16 dimm EX server would be a very high cost, high power, not a great performance compare vs. EP (HX5 is also very constrained in the CPU bins it can use)- if you are not using the memory or CPU scalability of EX it is not the right product in my opinion. 2 x HX5's together in a 4S config is less dense than M910 and has same memory footprint. HX5 + MAX5 gives you a bit more DIMM slots, but is very costly in terms of the infrastructure and the requirement to use VLP memory (also doesnt support 16GB dimms today). They do have a spot in the ULTRA high memory 4S config that we cant match fully (we can get very close in capacity though because we can use 16GB dimms), but i see the 80 DIMM 4S blade space as being pretty small.

thanks, Mike

Mike, thanks for the clarification on bus speed, good to know Dell's also running at 1066 with the higher density, and I wasn't aware the EX chip provided that functionality.

As far as my statement that the Dell server would use the QPI 3/4 of the time, that's due to the architecture defined above. 1/4 of the memory is owned by each processor or FlexMem Bridge and connected to the rest via the QPI. This means that for any processor to access 3/4 of the memory the QPI will be used. The QPI experiences something close to 12 nanoseconds of additional latency.

As for price I'd save that for a case by case comparison rather than generalization. Under 192GB total per B250 blade anyone would be hard pressed to beat Cisco's memory price because they can use 48x 4GB DIMMs to get there rather than stacking expensive 8 or 16GB DIMMs. Additionally Cisco recently did away with the expanded memory license significantly reducing cost.

You are also correct in regards to Cisco's chip adding latency. it will add approximately 6 nanoseconds of latency to every memory operation as it sits in between the CPU and the DIMMs. This is about half the latency of the QPI. The benefit here is that each processor owns up to 192GB which greatly reduces the need to use Intel's QPI and can speed overall operation.

Last if we're going back to processor speed under the guise of 'headroom' let's not forget the recently announced Cisco B250-M2 (http://www.cisco.com/go/ucs) which supports the same memory architecture as the M1 using 6 core x5600 processors: X5680, X5670, X5650. Correct me if I'm wrong but aren't these the EX processors?

Overall I think the moral of the post was that more dense 2 socket memory configurations were a big requirement in the market and Dell has one great option to fill that gap.

Quick self correction/answer my own question:

Yes Joe you are wrong, the 5600 are still Westmere processors, but they are 6 core.

Joe, thanks for the great discussion

1) on the QPI/Memory/latency – with flexmem bridge, it is just a passthru, so the memory that is connected to it is “owned” by that processor connected to it. doesnt go over QPI. so i think this is a tie. each processor in a 2S configuration “owns” 16 dimms

2) i'm pretty confident M910 will beat B250 in any configuration over 128GB. the premium for the 8GB dimms are largely gone and cisco charges a HUGE premium for memory anyway. soon 16GB will be where 8GB is today in terms of a reasonable premium.

3) dont forget the RAS capabilities of EX also, that is a huge difference that was ignored a lot before, but EX is really differentiated in that area.


Mike,

Good stuff, and agreed, great discussion. It's always nice to be able to separate technology from marketecture.

I'm basing my calculations of when and where the QPI will be used on the diagram in the blog above, which I'm assuming is correct. At a 10,000 foot level, Dell FlexMem Bridge is inserting a passive passthrough module in place of a processor in sockets 3-4 to allow the use of those DIMM slots without requiring the cost/power/etc of the possibly unneeded processors (memory bound applications being the target.)

Because the FlexMem Bridge is passive I'll buy 0-latency or close enough that it doesn't matter, but it is still connecting to processors 1 & 2 across Intel's architecture, which is the QPI.

What I mean is that while Proc 1 owns and addresses memory for socket 1 and 3, the memory for socket 3 is physically attached to socket 3 and only accessible across the physical QPI path. The same would be said for proc 2, which will own and address socket 4's memory but have to use the QPI with additional latency to access it.

This means that for any 1 proc to utilize 3/4 of the memory it will experience QPI latency.

In the Cisco architecture two sockets are able to address a max of 48 DIMMs, 24 per socket. This means that only half the memory must be accessed across the QPI.

For proper comparison we again have to realize that every memory read/write on Cisco's architecture experiences approximately half the QPI latency due to the active Cisco memory expander chip, while Dell will experience 0 additional latency 1/4 of the time and QPI latency 3/4 of the time.
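The competing latency claims in this thread can be compared with a small weighted-average sketch in Python. It uses the commenters' own estimates (about 12 ns per QPI hop, about 6 ns for the Cisco memory extender) and assumes memory accesses are spread evenly over all installed memory and that the two delays simply add. Those simplifications and the function name are assumptions for illustration, not measurements.

```python
QPI_HOP_NS = 12.0   # commenter's estimate of added latency per QPI access
EXTENDER_NS = 6.0   # commenter's estimate for the Cisco memory extender chip

def expected_added_latency(remote_fraction: float,
                           per_access_overhead_ns: float = 0.0,
                           qpi_hop_ns: float = QPI_HOP_NS) -> float:
    """Average extra latency: fixed overhead plus QPI cost on remote accesses."""
    return per_access_overhead_ns + remote_fraction * qpi_hop_ns

# Joe's reading of the Dell design: 3/4 of memory is reached over QPI.
dell_joe = expected_added_latency(remote_fraction=0.75)

# Mike's reading: each CPU is wired directly to half of the memory.
dell_mike = expected_added_latency(remote_fraction=0.5)

# Cisco as described above: extender on every access, half of memory remote.
cisco = expected_added_latency(remote_fraction=0.5,
                               per_access_overhead_ns=EXTENDER_NS)

print(dell_joe, dell_mike, cisco)
```

The outcome depends entirely on which fraction of memory is assumed to sit behind the QPI, which is exactly the point in dispute. A NUMA-aware hypervisor that keeps accesses local would lower the remote fraction for both designs.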

All and all I think the overall performance differences in memory access speeds would be a wash between the two platforms (only significant enough for a vendor bashing self funded test with no real world purpose.)

That being said you've made a strong point in regards to the advantages of the Intel EX (which flex-mem is built on) processors over the EP (which the B250 uses.) If processing is a constraint this will be a consideration.

Mike

Yes, you have a QPI wrap card. However, note that in your diagram, you're only connecting each bank of memory to one memory controller per socket, not two. It's actually very simple to test. Simply run the STREAM benchmark on an HX5 and a Dell 910 blade. The Dell will likely be substantially lower. I would also argue the 2S/16 DIMM (64-128GB) combo is very interesting. Very few blades run beyond that now..128GB+ is usually the realm of large scale-up rack servers (where IBM completely dominates now with the 3850X5..up to 1.5TB on a 4-socket).

joe,

to the QPI comment, want to make sure you understand fully. processor 1 has 1 memory controller that connects via SMI to 2 buffers and a 2nd memory controller that connects via SMI (through the FlexMem Bridge) to 2 more buffers. so it owns and connects directly to the buffers of memory that would have been connected to processor 3 (if it was there) – not through QPI. so 1/2 of the memory is connected directly to processor 1 and 1/2 of the memory is connected directly to processor 2.

I also think EX is advantaged beyond just the processor capabilities (8 vs. 6 core and the cache); there are also memory scalability advantages (512GB vs. 384GB today, will be 1TB for M910 before too long while cisco will always be at 384GB) as well as significant RAS advantages (that is actually the most significant advantage really) native to the EX architecture that B250 will never have.

Proteus,

In a 2 socket configuration we use both memory controllers on the processors (1 controller is directly connected to memory, 1 goes through FlexMem Bridge), same as IBM.

I absolutely agree that 64-128GB is a sweet spot today for most virtualization environments, but that is where EP fits. you are going to take a huge cost and power hit in going with EX for that kind of configuration and the performance of EX is not going to show up. EX is the right product for =>128GB of memory, that is the point where memory cost & power will outstrip the CPU cost/power overhead of EX and also the performance will shine because you are in balance with the high core count and large memory. to me EX is overkill for the <128GB configuration. This is exactly why you see 18 DIMM “virtualization optimized” EP based blades coming to market to address that space. we absolutely see customers looking at 2S blades with 256-512GB as people look to virtualize more intensive applications, that is where M910 shines.

mike

I hate to disagree, but the vast, vast majority of Nehalem-EP based blades I see go out the door with 12 DIMMs and 48GB or 96GB max. Due to the 3-channel EP memory architecture, 16 and 18 DIMM blades incur a massive performance penalty (dropping from 1333 to 800 FSB, or even 533 as in the case of some vendors). For some workloads on lightly loaded systems, it doesn't matter, but for others, it hurts a lot. I see EX based systems and blades as being the way to go. If you're putting 512GB in a box, you're likely NOT doing it with hideously expensive 16GB DIMMs on a blade. The best platform for 512GB+ would be the 3950X5, given its support for 64 DIMMs + 32 with MAX5. HP is nowhere to be found..the 4-socket Magny-Cours based systems won't perform as well with virtualized workloads, and they are late to the party with EX. Dell only has a 32 DIMM solution, which makes 512GB an expensive proposition.

i think we are violently agreeing. EP is the right thing for up to 96GB, they are way more cost effective, power efficient, and will perform = or better than EX. the 18 DIMM EP systems fit up to 144GB, you take a performance hit on memory today to get there, but for some applications they work well. EX fits from 96GB and beyond. the HX5 alone has a very narrow window where it fits (best case 96-128GB of memory – i think most in that range would go for the 18 DIMM EP blade, but depends on customer). i dont know how to price out the IBM blades or racks with max5, but i'd bet M910 would do pretty well in price vs. 2 x HX5 + 2 x MAX5 blades up to 512GB.

mike

I agree that large amounts of memory are better suited to rack systems like the #ibm x3850 x5 or the #dell R910 server platform if a customer doesn't “need” a blade server. The point of these exercises was to highlight the maximum densities that IBM and Dell would provide in their blade offerings, but I think realistically the chances of a customer filling up racks of blade servers simply for memory density are very low.


The Cisco B230 is a better comparison on price and real estate.


Hi everyone, with the latest vSphere 5.0 release, does the new license model hurt Dell’s FlexMem virtualization licensing savings now that it charges for increased memory capacity for VMs? Does this diminish the FlexMem value-add?