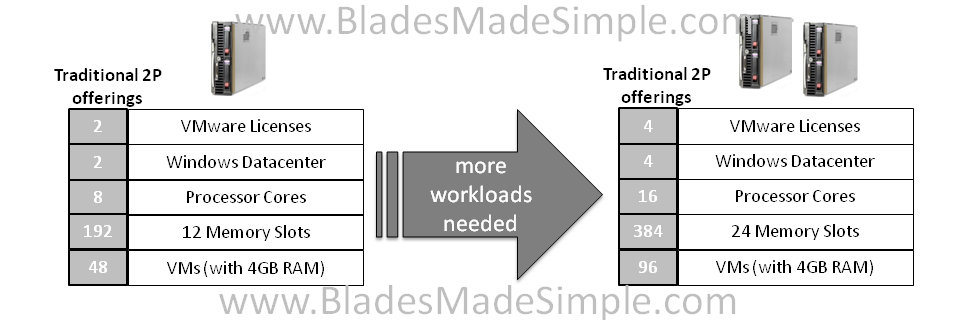

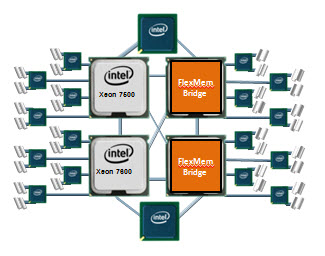

Intel officially announced the Xeon 5600 processor, code-named “Westmere,” today. Cisco, HP and IBM also announced blade servers built on the new processor. The Intel Xeon 5600 offers:

- 32nm process technology with 50% more threads and cache

- Improved energy efficiency with support for 1.35V low power memory
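The “50% more” figure is easy to sanity-check with a little arithmetic. Here is a minimal Python sketch; it assumes the comparison baseline is the previous-generation 4-core part with 8MB of cache, and that HyperThreading exposes two threads per core. The function names are my own, purely for illustration.

```python
def hardware_threads(cores, sockets=1, hyperthreading=True):
    """Hardware threads seen by the OS, assuming 2 threads per core with HyperThreading."""
    threads_per_core = 2 if hyperthreading else 1
    return cores * threads_per_core * sockets


def percent_increase(old, new):
    """Relative increase from old to new, as a percentage."""
    return (new - old) / old * 100


# Assumed baseline: a 4-core processor with 8MB of cache.
print(percent_increase(hardware_threads(4), hardware_threads(6)))  # 50.0 (threads)
print(percent_increase(8, 12))                                     # 50.0 (cache, MB)

# Thread counts for single- and dual-processor blades.
for cores in (4, 6):
    for sockets in (1, 2):
        print(f"{cores} cores x {sockets} CPU(s): {hardware_threads(cores, sockets)} threads")
```

With HyperThreading switched off, pass `hyperthreading=False` and the thread count simply equals the core count.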

There will be 4-core and 6-core offerings. The processor also provides the option of HyperThreading, so you could have up to 8 threads (4-core) or 12 threads (6-core) per processor, or 16 and 24 in a dual-CPU system. This will be a huge advantage for applications that benefit from multiple threads, such as virtualization. Here’s a look at what each vendor has come out with:

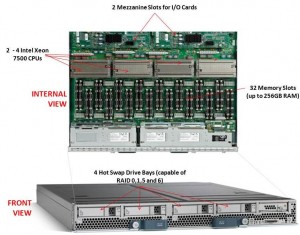

Cisco

The B200 M2 provides Cisco users with the current Xeon 5600 processors. It looks like Cisco will be offering a choice of the following Xeon 5600 processors: Intel Xeon X5670, X5650, E5640, E5620, L5640, or E5506. Because Cisco’s model is a “built-to-order” design, I can’t really provide any part numbers, but knowing what speeds they have should help.

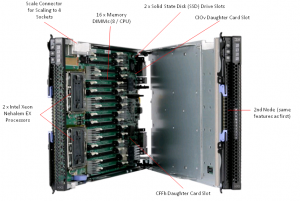

HP

HP is starting off with the Intel Xeon 5600 by refreshing its existing G6 models to include the new processor. The look, feel, and options of the blade servers will remain the same – the only difference will be the processor. According to HP, “the HP ProLiant G6 platform, based on Intel Xeon 5600 processors, includes the HP ProLiant BL280c, BL2x220c, BL460c and BL490c server blades and HP ProLiant WS460c G6 workstation blade for organizations requiring high density and performance in a compact form factor. The latest HP ProLiant G6 platforms will be available worldwide on March 29.” It appears that HP is waiting until March 29 to provide details on its Westmere blade offerings, so don’t go looking for part numbers or pricing on its website.

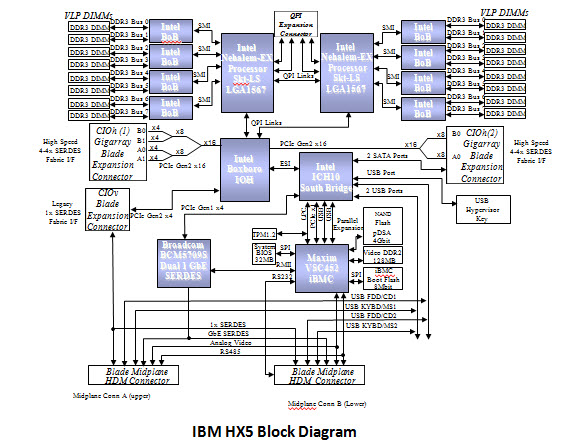

IBM

IBM is continuing to stay ahead of the game with details about its product offerings. It has refreshed the HS22 and HS22V blade servers:

HS22

7870ECU – Express HS22, 2x Xeon 4C X5560 95W 2.80GHz/1333MHz/8MB L2, 4x2GB, O/Bay 2.5in SAS, SR MR10ie

7870G4U – HS22, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870GCU – HS22, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 2.5in SAS, Broadcom 10Gb Gen2 2-port

7870H2U – HS22, Xeon 6C X5650 95W 2.66GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870H4U – HS22, Xeon 6C X5670 95W 2.93GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870H5U – HS22, Xeon 4C X5667 95W 3.06GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870HAU – HS22, Xeon 6C X5650 95W 2.66GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS, Emulex Virtual Fabric Adapter

7870N2U – HS22, Xeon 6C L5640 60W 2.26GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870EGU – Express HS22, 2x Xeon 4C E5630 80W 2.53GHz/1066MHz/12MB, 6x2GB, O/Bay 2.5in SAS

HS22V

7871G2U – HS22V, Xeon 4C E5620 80W 2.40GHz/1066MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871G4U – HS22V, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871GDU – HS22V, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871H4U – HS22V, Xeon 6C X5670 95W 2.93GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871H5U – HS22V, Xeon 4C X5667 95W 3.06GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871HAU – HS22V, Xeon 6C X5650 95W 2.66GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871N2U – HS22V, Xeon 6C L5640 60W 2.26GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871EGU – Express HS22V, 2x Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 6x2GB, O/Bay 1.8in SAS

7871EHU – Express HS22V, 2x Xeon 6C X5660 95W 2.80GHz/1333MHz/12MB, 6x4GB, O/Bay 1.8in SAS
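Since the IBM listings above all follow the same pattern, they are easy to pull into structured fields if you want to sort or compare them. Here is a rough Python sketch; the regular expression and the `parse_config` helper are my own illustration based only on the lines shown in this post, not an official IBM format, so treat the field names as assumptions.

```python
import re

# Pattern inferred from the listings in this post (not an official IBM spec).
CONFIG_RE = re.compile(
    r"^(?P<part>\w+)\s*[–-]\s*"                      # part number, then a dash
    r"(?P<express>Express\s+)?(?P<model>HS22V?),\s*"  # optional "Express", blade model
    r"(?:(?P<cpus>\d+)x\s+)?Xeon\s+(?P<cores>\d+)C\s+(?P<cpu>[A-Z]\d{4})\s+"
    r"(?P<watts>\d+)W\s+(?P<ghz>\d+\.\d+)GHz/(?P<mem_mhz>\d+)MHz/(?P<cache_mb>\d+)MB"
    r"(?:\s+L2)?,\s*"                                 # one listing appends "L2"
    r"(?P<dimms>\d+)x(?P<dimm_gb>\d+)GB"              # memory, e.g. 3x2GB
)


def parse_config(line):
    """Return a dict of fields for one listing line, or None if it does not match."""
    m = CONFIG_RE.match(line.strip())
    if m is None:
        return None
    return {
        "part": m.group("part"),
        "model": m.group("model"),
        "express": m.group("express") is not None,
        "cpus": int(m.group("cpus") or 1),  # no "2x" prefix means one CPU
        "cores_per_cpu": int(m.group("cores")),
        "cpu": m.group("cpu"),
        "watts": int(m.group("watts")),
        "ghz": float(m.group("ghz")),
        "memory_mhz": int(m.group("mem_mhz")),
        "cache_mb": int(m.group("cache_mb")),
        "memory_gb": int(m.group("dimms")) * int(m.group("dimm_gb")),
    }


example = "7871EHU – Express HS22V, 2x Xeon 6C X5660 95W 2.80GHz/1333MHz/12MB, 6x4GB, O/Bay 1.8in SAS"
print(parse_config(example))
```

The trailing options (drive bay, adapters) are left unparsed here, since they vary from line to line.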

I could not find any information on what Dell will be offering from a blade server perspective, so if you have information (that is not confidential), feel free to send it my way.