Revised with corrections 3/1/2011 10:29 a.m. (EST)

Almost a year ago, I wrote an article highlighting the 4 socket blade server offerings. At that time, the offerings were very slim, but over the past 11 months, that blog post has received the most hits, so I figured it’s time to revise the article. In today’s post, I’ll review the 4 socket Intel and AMD blade servers that are currently on the market. Yes, I know I’ll have to revise this again in a few weeks, but I’ll cross that bridge when I get to it.

Tag Archives: PowerEdge M910

Cisco Announces 32 DIMM, 2 Socket Nehalem EX UCS B230-M1 Blade Server

Thanks to fellow blogger M. Sean McGee (http://www.mseanmcgee.com/), I was alerted to the fact that Cisco announced today, Sept. 14, the 13th blade server in the UCS family – the Cisco UCS B230 M1.

This newest addition performs a few tricks that no other vendor has been able to perform.

New Cisco Blade Server: B440-M1

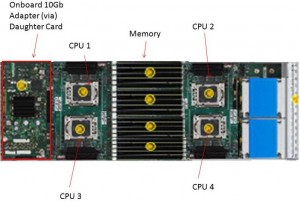

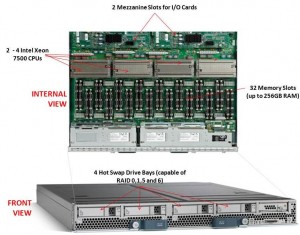

Cisco recently announced their first blade offering with the Intel Xeon 7500 processor, known as the “Cisco UCS B440-M1 High-Performance Blade Server.” This new blade is a full-width blade that offers 2 – 4 Xeon 7500 processors and 32 memory slots, for up to 256GB RAM, as well as 4 hot-swap drive bays. Since the server is a full-width blade, it will have the capability to handle 2 dual-port mezzanine cards for up to 40 Gbps I/O per blade.

Each Cisco UCS 5108 Blade Server Chassis can house up to four B440 M1 servers (maximum 160 per Unified Computing System).

How Does It Compare to the Competition?

Since I like to talk about all of the major blade server vendors, I thought I’d take a look at how the new Cisco B440 M1 compares to IBM and Dell. (HP has not yet announced their Intel Xeon 7500 offering.)

Processor Offering

Both Cisco and Dell offer models with 2 – 4 Xeon 7500 CPUs as standard. They each have variations on speeds – Dell has 9 processor speed offerings; Cisco hasn’t released their speeds and IBM’s BladeCenter HX5 blade server will have 5 processor speed offerings initially. Of the 3 vendors’ blades, however, IBM’s blade server is the only one that is designed to scale from 2 CPUs to 4 CPUs by connecting 2 x HX5 blade servers. Along with this comes their “FlexNode” technology that enables users to split the 4 processor blade system back into 2 x 2 processor systems at specific points during the day. Although not announced, and purely my speculation, IBM’s design also leads to a possible future capability of connecting 4 x 2 processor HX5’s for an 8-way design. Since each of the vendors offers up to 4 x Xeon 7500’s, the scalability of IBM’s design is the differentiator, so I’m going to give the advantage in this category to IBM. WINNER: IBM

Memory Capacity

Both IBM and Cisco are offering 32 DIMM slots with their blade solutions; however, they are not certifying the use of 16GB DIMMs, only 4GB and 8GB DIMMs, so their offerings only scale to 256GB of RAM. Dell claims to offer 512GB of memory capacity on the PowerEdge 11G M910 blade server; however, that is using 16GB DIMMs. Realistically, I think the M910 would only be used with 8GB DIMMs, so Dell’s design would equal IBM and Cisco’s. I’m not sure who has the money to buy 16GB DIMMs, but if they do – WINNER: Dell (or a TIE)
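The memory math above is simple enough to sketch in a few lines of Python. The slot counts and DIMM sizes come straight from the paragraph; the function name and the dictionary labels are just mine for illustration.

```python
# Rough sketch of the memory capacity comparison.
# Slot count and DIMM sizes are the ones quoted in the post;
# max_memory_gb is an illustrative helper, not a vendor tool.

DIMM_SLOTS = 32  # all three vendors offer 32 DIMM slots on a 4 socket blade


def max_memory_gb(dimm_slots: int, dimm_size_gb: int) -> int:
    """Total RAM when every slot holds a DIMM of the given size."""
    return dimm_slots * dimm_size_gb


capacity = {
    "Cisco / IBM (8GB DIMMs, largest certified)": max_memory_gb(DIMM_SLOTS, 8),
    "Dell M910 (8GB DIMMs, the realistic case)": max_memory_gb(DIMM_SLOTS, 8),
    "Dell M910 (16GB DIMMs, the claimed maximum)": max_memory_gb(DIMM_SLOTS, 16),
}

for config, gigabytes in capacity.items():
    print(f"{config}: {gigabytes}GB")
```

With 8GB DIMMs everyone lands on 256GB, which is why I call it a possible tie; only the 16GB DIMM case gets Dell to 512GB.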

Server Density

As previously mentioned, Cisco’s B440-M1 blade server is a “full-width” blade so 4 will fit into a 6U high UCS5100 chassis. Theoretically, you could fit 7 x UCS5100 blade chassis into a rack, which would equal a total of 28 x B440-M1’s per 42U rack.

Overall, Cisco’s new offering is a nice addition to their existing blade portfolio. While IBM has some interesting innovation in CPU scalability and Dell appears to have the overall advantage from a server density, Cisco leads the management front.

Dell’s PowerEdge 11G M910 blade server is a “full-height” blade, so 8 will fit into a 10u high M1000e chassis. This means that 4 x M1000e chassis would fit into a 42u rack, so 32 x Dell PowerEdge M910 blade servers should fit into a 42u rack.

IBM’s BladeCenter HX5 blade server is a single slot blade server, however to make it a 4 processor blade, it would take up 2 server slots. The BladeCenter H has 14 server slots, so that makes the IBM solution capable of holding 7 x 4 processor HX5 blade servers per chassis. Since the chassis is a 9u high chassis, you can only fit 4 into a 42u rack, therefore you would be able to fit a total of 28 IBM HX5 (4 processor) servers into a 42u rack.

WINNER: Dell
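For anyone who wants to check the density numbers, here is a minimal Python sketch of the arithmetic. The chassis heights and slot counts are the ones quoted above; the function name is mine, and the sketch assumes the whole 42U rack is available for chassis (no room set aside for switches or PDUs).

```python
# Back-of-the-envelope rack density, assuming all 42U are usable for chassis.

RACK_UNITS = 42


def servers_per_rack(chassis_height_u: int, servers_per_chassis: int,
                     rack_units: int = RACK_UNITS) -> int:
    """Whole chassis that fit in the rack, times 4 socket servers per chassis."""
    chassis_per_rack = rack_units // chassis_height_u
    return chassis_per_rack * servers_per_chassis


density = {
    # 6U chassis, 4 full-width blades per chassis
    "Cisco B440 M1": servers_per_rack(6, 4),
    # 10U chassis, 8 full-height blades per chassis
    "Dell M910": servers_per_rack(10, 8),
    # 9U chassis, 14 slots, 2 slots per 4 processor HX5
    "IBM HX5 (4 processor)": servers_per_rack(9, 14 // 2),
}

for blade, count in density.items():
    print(f"{blade}: {count} per 42U rack")
```

This gives 28 for Cisco, 32 for Dell and 28 for IBM, matching the figures in the text.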

Management

The final category I’ll look at is the management. Both Dell and IBM have management controllers built into their chassis, so management of a lot of chassis as described above in the maximum server / rack scenarios could add some additional burden. Cisco’s design, however, allows for the management to be performed through the UCS 6100 Fabric Interconnect modules. In fact, up to 40 chassis could be managed by 1 pair of 6100’s. There are additional features this design offers, but for the sake of this discussion, I’m calling WINNER: Cisco.
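To put a rough number on the management burden, the sketch below counts "management points" for a full rack. It is a simplification of my own: one management point per chassis for Dell and IBM, and one per pair of 6100 Fabric Interconnects (up to 40 chassis) for Cisco. The function names are hypothetical, and the chassis-per-rack counts are the ones from the density section.

```python
import math

# Simplified model: how many separate places do you manage a full rack from?
CHASSIS_PER_6100_PAIR = 40  # from the post: up to 40 chassis per pair of 6100's


def per_chassis_management_points(chassis_count: int) -> int:
    """Dell and IBM: the management controller lives in each chassis."""
    return chassis_count


def fabric_interconnect_management_points(chassis_count: int) -> int:
    """Cisco: one pair of 6100's covers up to 40 chassis."""
    return math.ceil(chassis_count / CHASSIS_PER_6100_PAIR)


print("Dell, 4 chassis per rack:", per_chassis_management_points(4))
print("IBM, 4 chassis per rack:", per_chassis_management_points(4))
print("Cisco, 7 chassis per rack:", fabric_interconnect_management_points(7))

# 40 chassis x 4 B440 M1 blades each = the 160 servers per UCS quoted earlier
print("B440 M1 per UCS:", CHASSIS_PER_6100_PAIR * 4)
```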

Cisco’s UCS B440 M1 is expected to ship in the June time frame. Pricing is not yet available. For more information, please visit Cisco’s UCS web site at http://www.cisco.com/en/US/products/ps10921/index.html.

Dell M910 Blade Server – Based on the Nehalem EX

Dell appears to be first to the market today with complete details on their Nehalem EX blade server, the PowerEdge M910. Based on the Nehalem EX technology (aka Intel Xeon 7500 Chipset), the server offers quite a lot of horsepower in a small, full-height blade server footprint.

Some details about the server:

- uses Intel Xeon 7500 or 6500 CPUs

- has support for up to 512GB using 32 x 16GB DIMMs

- comes standard with two embedded Broadcom NetXtreme II Dual Port 5709S Gigabit Ethernet NICs with failover and load balancing

- has two 2.5″ Hot-Swappable SAS/Solid State Drives

- has 4 available I/O mezzanine card slots

- comes with a Matrox G200eW w/ 8MB memory standard

- can function on 2 CPUs with access to all 32 DIMM slots

Dell (finally) Offers Some Innovation

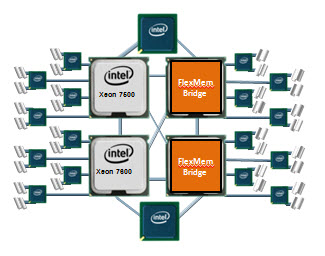

I commented a few weeks ago that Dell and innovate were rarely used in the same sentence, however with today’s announcement, I’ll have to retract that statement. Before I elaborate on what I’m referring to, let me do some quick education. The design of the Nehalem architecture allows for each processor (CPU) to have access to a dedicated bank of memory along with its own memory controller. The only downside to this is that if a CPU is not installed, the attached memory banks are not usable. THIS is where Dell is offering some innovation. Today Dell announced the “FlexMem Bridge” technology. This technology is simple in concept as it allows for the memory of a CPU socket that is not populated to still be used. In essence, Dell’s using technology that bridges the memory banks across un-populated CPU sockets to the rest of the server’s populated CPUs. With this technology, a user could start off with only 2 CPUs and still have access to 32 memory DIMMs. Then, over time, if more CPUs are needed, they simply remove the FlexMem Bridge adapters from the CPU sockets and replace them with CPUs – now they would have a 4 CPU x 32 DIMM blade server.
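Here is a toy Python model of what the FlexMem Bridge buys you. It assumes the 32 DIMM slots are split evenly across the 4 sockets (8 per socket), which is my assumption and not something Dell has confirmed here; the function name is also made up for the example.

```python
# Toy model of usable DIMM slots on a 4 socket, 32 DIMM blade.
# ASSUMPTION: slots are divided evenly, 8 per CPU socket.

SOCKETS = 4
DIMM_SLOTS = 32
SLOTS_PER_SOCKET = DIMM_SLOTS // SOCKETS


def usable_dimm_slots(cpus_installed: int, flexmem_bridge: bool) -> int:
    """DIMM slots the installed CPUs can reach.

    Without a bridge, memory behind an empty socket is unusable.
    With FlexMem Bridge adapters in the empty sockets, all slots are reachable.
    """
    if not 2 <= cpus_installed <= SOCKETS:
        raise ValueError("this sketch only models 2 to 4 installed CPUs")
    if flexmem_bridge:
        return DIMM_SLOTS
    return cpus_installed * SLOTS_PER_SOCKET


print("2 CPUs, no bridge:", usable_dimm_slots(2, flexmem_bridge=False))
print("2 CPUs, FlexMem Bridge:", usable_dimm_slots(2, flexmem_bridge=True))
print("4 CPUs, no bridge needed:", usable_dimm_slots(4, flexmem_bridge=False))
```

Under that even-split assumption, a plain 2 CPU configuration would only see 16 slots, while the bridged 2 CPU configuration sees all 32.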

Congrats to Dell. Very cool idea. The Dell PowerEdge M910 is available to order today from the Dell.com website.

Let me know what you guys think.

4 Socket Blade Servers Density: Vendor Comparison

IMPORTANT NOTE – I updated this blog post on Feb. 28, 2011 with better details. To view the updated blog post, please go to:

https://bladesmadesimple.com/2011/02/4-socket-blade-servers-density-vendor-comparison-2011/

Original Post (March 10, 2010):

As the Intel Nehalem EX processor is a couple of weeks away, I wonder what impact it will have in the blade server market. I’ve been talking about IBM’s HX5 blade server for several months now, so it is very clear that the blade server vendors will be developing blades that will have some iteration of the Xeon 7500 processor. In fact, I’ve had several people confirm on Twitter that HP, Dell and even Cisco will be offering a 4 socket blade after Intel officially announces it on March 30. For today’s post, I wanted to take a look at how the 4 socket blade space will impact the overall capacity of a blade server environment. NOTE: this is purely speculation, I have no definitive information from any of these vendors that is not already public.

Cisco

The Cisco UCS 5108 chassis holds 8 “half-width” B200 blade servers or 4 “full-width” B250 blade servers, so when we guess at what design Cisco will use for a 4 socket Intel Xeon 7500 (Nehalem EX) architecture, I have to place my bet on the full-width form factor. Why? Simply because there is more real estate. The Cisco B250 M1 blade server is known for its large memory capacity, however Cisco could sacrifice some of that extra memory space for a 4 socket, “Cisco B350” blade. This would provide a bit of an issue for customers wanting to implement a complete rack full of these servers, as it would only allow for a total of 28 servers in a 42U rack (7 chassis x 4 servers per chassis.)

On the other hand, Cisco is in a unique position in that their half-width form factor also has extra real estate because they don’t have 2 daughter card slots like their competitors. Perhaps Cisco would create a half-width blade with 4 CPUs (a B300?) With a 42U rack, and using a half-width design, you would be able to get a maximum of 56 blade servers (7 chassis x 8 servers per chassis.)

Dell

The 10U M1000e chassis from Dell can currently handle 16 “half-height” blade servers or 8 “full height” blade servers. I don’t foresee any way that Dell would be able to put 4 CPUs into a half-height blade. There just isn’t enough room. To do this, they would have to sacrifice something, like memory slots or a daughter card expansion slot, which just doesn’t seem like it is worth it. Therefore, I predict that Dell’s 4 socket blade will be a full-height blade server, probably named a PowerEdge M910. With this assumption, you would be able to get 32 blade servers in a 42u rack (4 chassis x 8 blades.)

HP

Similar to Dell, HP’s 10U BladeSystem c7000 chassis can currently handle 16 “half-height” blade servers or 8 “full height” blade servers. I don’t foresee any way that HP would be able to put 4 CPUs into a half-height blade. There just isn’t enough room. To do this, they would have to sacrifice something, like memory slots or a daughter card expansion slot, which just doesn’t seem like it is worth it. Therefore, I predict that HP’s 4 socket blade will be a full-height blade server, probably named a ProLiant BL680 G7 (yes, they’ll skip G6.) With this assumption, you would be able to get 32 blade servers in a 42u rack (4 chassis x 8 blades.)

IBM

Finally, IBM’s 9U BladeCenter H chassis offers up 14 servers. IBM has one size server, called a “single wide.” IBM will also have the ability to combine servers together to form a “double-wide”, which is what is needed for the newly announced IBM BladeCenter HX5. A double-width blade server reduces the IBM BladeCenter’s capacity to 7 servers per chassis. This means that you would be able to put 28 x 4 socket IBM HX5 blade servers into a 42u rack (4 chassis x 7 servers each.)

Summary

In a tie for 1st place, at 32 blade servers in a 42u rack, Dell and HP would have the most blade server density based on their existing full-height blade server design. IBM and Cisco would come in at 3rd place with 28 blade servers in a 42u rack. However, IF Cisco (or HP and Dell for that matter) were able to magically re-design their half-width or half-height servers to hold 4 CPUs, then they would be able to take 1st place for blade density with 56 servers.
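The placings above follow the usual "ties share a place, the next place is skipped" rule. A small Python sketch, using only the predicted servers-per-rack numbers from this post (the function name is mine), shows how the ranking falls out and how it would shift if the speculative half-width Cisco blade existed.

```python
# Competition-style ranking: tied vendors share a place, the next place is skipped.


def rank_by_density(servers_per_rack: dict) -> dict:
    """Map each vendor to its place, highest servers-per-rack first."""
    ordered = sorted(servers_per_rack.items(), key=lambda item: item[1], reverse=True)
    ranks = {}
    for position, (vendor, count) in enumerate(ordered, start=1):
        previous = ordered[position - 2] if position > 1 else None
        if previous is not None and previous[1] == count:
            ranks[vendor] = ranks[previous[0]]  # same count, same place
        else:
            ranks[vendor] = position
    return ranks


# Predicted 4 socket blades per 42U rack, as speculated in this post
predicted = {"Dell": 32, "HP": 32, "IBM": 28, "Cisco": 28}
print(rank_by_density(predicted))

# Purely hypothetical: a half-width 4 CPU Cisco blade (7 chassis x 8 servers)
what_if = dict(predicted, Cisco=56)
print(rank_by_density(what_if))
```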

Yes, I know that there are slim chances that anyone would fill up a rack with 4 socket servers, however I thought this would be a good comparison to make. What are your thoughts? Let me know in the comments below.