Yearly Archives: 2010

HP Tech Day – Day 1 Agenda and Attendees

Today kicks off the HP Blades and Infrastructure Software Tech Day 2010 (aka HP Blades Day). I’ll be updating this site frequently throughout the day, so be sure to check back. You can quickly view all of the HP Tech Day info by clicking on the “Category” tab on the left and choosing “HPTechDay2010.” For live updates, follow me on Twitter @Kevin_Houston.

Here’s our agenda for today (Day 1):

9:10 – 10:00 ISB Overview and Key Data Center Trends 2010

10:00 – 10:30 Nth Generation Computing Presentation

10:45 – 11:45 Virtual Connect

1:00 – 3:00 BladeSystem in the Lab (Overview and Demo) and Insight Software (Overview and Demo)

3:15 – 4:15 Matrix

4:15 – 4:45 Competitive Discussion

5:00 – 5:45 Podcast roundtable with Storage Monkeys

Note: gaps in the times above indicate a break or lunch.

For extensive coverage, make sure you check in on the rest of the attendees’ blogs:

Rich Brambley: http://vmetc.com

Greg Knieremen: http://www.storagemonkeys.com/

Chris Evans: http://thestoragearchitect.com

Simon Seagrave: http://techhead.co.uk

John Obeto: http://absolutelywindows.com

Frank Owen: http://techvirtuoso.com

Martin Macleod: http://www.bladewatch.com/

Steven Foskett: http://blog.fosketts.net/

Devang Panchigar: http://www.storagenerve.com

Disclaimer: airfare, accommodations and meals are being provided by HP; however, the content being blogged is solely my opinion and does not in any way express the opinions of HP.

More HP and IBM Blade Rumours

I wanted to post a few more rumours before I head out to HP in Houston for “HP Blades and Infrastructure Software Tech Day 2010” so that it doesn’t appear that I got the info from HP. NOTE: this is purely speculation. I have no definitive information from HP, so this may be false info.

First off – the HP Rumour:

I’ve caught wind of a secret that may be truth, may be fiction, but I hope to find out for sure from the HP blade team in Houston. The rumour is that HP’s development team currently has a Cisco Nexus Blade Switch Module for the HP BladeSystem in their lab, and they are currently testing it out.

Now, this seems far-fetched, especially with the news of Cisco severing partner ties with HP. However, it seems that news tidbit was talking only about products sold with the HP label but made by Cisco (OEM). HP will continue to sell Cisco Catalyst switches for the HP BladeSystem and even Cisco branded Nexus switches with HP part numbers (see this HP site for details). I have some doubt about this rumour of a Cisco Nexus Switch that would go inside the HP BladeSystem, simply because I am 99% sure that HP is announcing a Flex10 type of BladeSystem switch that will allow converged traffic to be split out, with the Ethernet traffic going to the Ethernet fabric and the Fibre traffic going to the Fibre fabric (check out this rumour blog I posted a few days ago for details). Guess only time will tell.

The IBM Rumour:

A few days ago I posted a rumour blog discussing HP’s next generation adding Converged Network Adapters (CNAs) to the motherboard on the blades (in lieu of the 1Gb or Flex10 NICs). Well, now I’ve uncovered a rumour that IBM is planning on following later this year with blades that will also have CNAs on the motherboard. This is huge! Let me explain why.

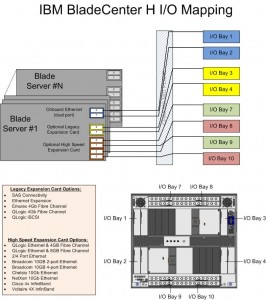

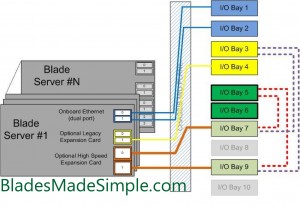

The design of IBM’s BladeCenter E and BladeCenter H has the 1Gb NICs onboard each blade server hard-wired to I/O Bays 1 and 2 – meaning only Ethernet modules can be used in these bays (see the image to the left for details). However, I/O Bays 1 and 2 are for “standard form factor I/O modules” while I/O Bays 7-10 are for “high speed form factor I/O modules”. This means that I/O Bays 1 and 2 cannot handle “high speed” traffic, i.e. converged traffic.

This means that IF IBM comes out with a blade server that has a CNA on the motherboard, either:

a) the blade’s CNA will have to route to I/O Bays 7-10

OR

b) IBM’s going to have to come out with a new BladeCenter chassis that allows the high speed converged traffic from the CNAs to connect to a high speed switch module in Bays 1 and 2.

So let’s think about this. If IBM (and HP for that matter) does put CNAs on the motherboard, is there a need for additional mezzanine/daughter cards? If not, the blade servers could have more real estate for memory, or more processors. And if there are no extra daughter cards, then there’s no need for additional I/O module bays. This means the blade chassis could be smaller and use less power – something every customer would like to have.

I can really see the blade market moving toward this type of design (not surprisingly, very similar to Cisco’s UCS design) – one where only a pair of redundant “modules” is needed to split converged traffic to their respective fabrics. Maybe it’s all a pipe dream, but when it comes true in 18 months, you can say you heard it here first.

Thanks for reading. Let me know your thoughts – leave your comments below.

Blade Networks Announces Industry’s First and Only Fully Integrated FCoE Solution Inside Blade Chassis

BLADE Network Technologies, Inc. (BLADE), “officially” announces today the delivery of the industry’s first and only fully integrated Fibre Channel over Ethernet (FCoE) solution inside a blade chassis. This integration significantly reduces power, cost, space and complexity over external FCoE implementations.

You may recall that I blogged about this the other day (click here to read), however I left off one bit of information. The (Blade Networks) BNT Virtual Fabric 10 Gb Switch Module does not require the QLogic Virtual Fabric Extension Module to function. It will work with an existing Top-of-Rack (TOR) Convergence Switch from Brocade or Cisco to act as a 10Gb switch module, feeding the converged 10Gb link up to the TOR switch. Since it is a switch module, you can connect as few as 1 uplink to your TOR switch, therefore saving connectivity costs, as opposed to a pass-thru option (click here for details on the pass-thru option.)

Yes – this is the same architectural design as the Cisco Nexus 4001i provides as well, however there are a couple of differences:

BNT Virtual Fabric Switch Module (IBM part #46C7191) – 10 x 10Gb Uplinks, $11,199 list (U.S.)

Cisco Nexus 4001i Switch (IBM part #46M6071) – 6 x 10Gb Uplinks, $12,999 list (U.S.)

While BNT provides 4 extra 10Gb uplinks, I can’t really picture anyone using all 10 ports. It does, however, have a lower list price, but I encourage you to check your actual price with your IBM partner, as the actual pricing may be different. Regardless of whether you choose BNT or Cisco to connect into your TOR switch, don’t forget the transceivers! They add much more $$ to the overall cost, and without them you are hosed.
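To put the two list prices above on the same footing, here is a minimal Python sketch that works out the list price per 10Gb uplink. The prices and uplink counts are the ones quoted in this post; the `transceiver_usd` figure in the second function is a made-up placeholder, so plug in your own quote.

```python
# U.S. list prices and uplink counts as quoted above (2010 figures).
modules = {
    "BNT Virtual Fabric (46C7191)": {"uplinks": 10, "list_usd": 11199},
    "Cisco Nexus 4001i (46M6071)": {"uplinks": 6, "list_usd": 12999},
}

def cost_per_uplink(list_usd, uplinks):
    """Module list price divided by its number of 10Gb uplink ports."""
    return list_usd / uplinks

def cost_with_transceivers(list_usd, uplinks_used, transceiver_usd):
    """Module price plus one transceiver per uplink actually cabled.

    transceiver_usd is a hypothetical input, not a price from this post.
    """
    return list_usd + uplinks_used * transceiver_usd

for name, m in modules.items():
    per_uplink = cost_per_uplink(m["list_usd"], m["uplinks"])
    print(f"{name}: ${per_uplink:,.2f} per uplink at list")
```

Since few people will cable every port, the per-uplink figure matters less than the total for the uplinks you actually use, which is what `cost_with_transceivers` is meant to illustrate.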

About the BNT Virtual Fabric 10Gb Switch Module

The BNT Virtual Fabric 10Gb Switch Module includes the following features and functions:

- Form-factor

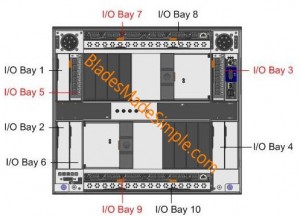

- Single-wide high-speed switch module (fits in IBM BladeCenter H bays #7 and 9.)

- Internal ports

- 14 internal auto-negotiating ports: 1 Gb or 10 Gb to the server blades

- Two internal full-duplex 100 Mbps ports connected to the management module

- External ports

- Up to ten 10 Gb SFP+ ports (also designed to support 1 Gb SFP if required, flexibility of mixing 1 Gb/10 Gb)

- One 10/100/1000 Mb copper RJ-45 used for management or data

- An RS-232 mini-USB connector for serial port that provides an additional means to install software and configure the switch module

- Scalability and performance

- Autosensing 1 Gb/10 Gb internal and external Ethernet ports for bandwidth optimization

To read the extensive list of details about this switch, please visit the IBM Redbook located here.

HP Blades and Infrastructure Software Tech Day 2010 (UPDATED)

On Wednesday I will be headed to the 2010 HP Infrastructure Software & Blades Tech Day, an invitation-only blogger event at the HP Campus in Houston, TX. This event is a day and a half deep dive into the blade server market, key data center trends and client virtualization. We will be with HP technology leaders and business executives who will discuss the company’s business advantages and technical advances. The event will also include customers sharing their own key insights and experiences, plus demos of the products, including an insider’s tour of HP’s Lab facilities.

I’m extremely excited to attend this event and can’t wait to blog about it. (Hopefully HP will not NDA the entire event.) I’m also excited to meet some of the world’s top bloggers. Check out this list of attendees:

Rich Brambley: http://vmetc.com

Greg Knieremen: http://www.storagemonkeys.com/

Chris Evans: http://thestoragearchitect.com

Simon Seagrave: http://techhead.co.uk

John Obeto: http://absolutelywindows.com

Frank Owen: http://techvirtuoso.com

Martin Macleod: http://www.bladewatch.com/

Plus a couple that I left off originally (sorry guys):

Steven Foskett: http://blog.fosketts.net/

Devang Panchigar: http://www.storagenerve.com

Be sure to check back with me on Thursday and Friday for updates from the event, and also follow me on Twitter @kevin_houston (the Twitter hashtag for this event is #hpbladesday).

Disclaimer: airfare, accommodations and some meals are being provided by HP; however, the content being blogged is solely my opinion and does not in any way express the opinions of HP.

IBM’s New Approach to Ethernet/Fibre Traffic

Okay, I’ll be the first to admit when I’m wrong – or when I provide wrong information.

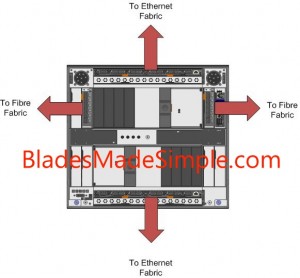

A few days ago, I commented that no one has yet offered the ability to split out Ethernet and Fibre traffic at the chassis level (as opposed to using a top of rack switch.) I quickly found out that I was wrong – IBM now has the ability to separate the Ethernet fabric and the Fibre fabric at the BladeCenter H, so if you are interested grab a cup of coffee and enjoy this read.

First a bit of background. The traditional method of providing Ethernet and Fibre I/O in a blade infrastructure was to integrate 6 Ethernet switches and 2 Fibre switches into the blade chassis, which provides 6 NICs and 2 Fibre HBAs per blade server. This is a costly method and it limits the scalability of a blade server.

A newer method that is becoming more popular is to converge the I/O traffic using a single converged network adapter (CNA) to carry the Ethernet and the Fibre traffic over a single 10Gb connection to a top of rack (TOR) switch, which then sends the Ethernet traffic to the Ethernet fabric and the Fibre traffic to the Fibre fabric. This reduces the number of physical cables coming out of the blade chassis, offers higher bandwidth and reduces the overall switching costs. Up until now, IBM offered two different methods to enable converged traffic:

Method 1: connect a pair of 10Gb Ethernet Pass-Thru modules into the blade chassis, add a CNA on each blade server, then connect the pass thru modules to a top of rack convergence switch from Brocade or Cisco. This method is the least expensive method, however since Pass-Thru modules are being used, a connection is required on the TOR convergence switch for every blade server being connected. This would mean a 14 blade infrastructure would eat up 14 ports on the convergence switch, potentially leaving the switch with very few available ports.

Method #2: connect a pair of IBM Cisco Nexus 4001i switches, add a CNA on each server then connect the Nexus 4001i to a Cisco Nexus 5000 top of rack switch. This method enables you to use as few as 1 uplink connection from the blade chassis to the Nexus 5000 top of rack switch, however it is more costly and you have to invest into another Cisco switch.
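The port math behind the two methods is simple enough to sketch in a few lines of Python. This is only an illustration of the reasoning above: the function names are mine, and the counts are per module path. I am assuming a redundant pair wired to the same TOR switch would double them.

```python
def tor_ports_pass_thru(blades):
    """Pass-thru module: every blade needs its own port on the TOR switch."""
    if blades < 1:
        raise ValueError("need at least one blade")
    return blades

def tor_ports_switch_module(uplinks):
    """Switch module: only the cabled uplinks consume TOR ports (minimum 1)."""
    if uplinks < 1:
        raise ValueError("at least one uplink is required")
    return uplinks

blades = 14  # a full chassis, as in the Method 1 example
print(tor_ports_pass_thru(blades))    # 14
print(tor_ports_switch_module(1))     # 1
```

The trade-off is that the pass-thru route is cheaper inside the chassis but uses up TOR ports quickly, while the switch module costs more up front and keeps the TOR switch free.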

The New Approach

A few weeks ago, IBM announced the “QLogic Virtual Fabric Extension Module” – a device that fits into the IBM BladeCenter H and takes the Fibre traffic from the CNA on a blade server and sends it to the Fibre fabric. This is HUGE! While having a top of rack convergence switch is helpful, you can now remove the need to have a top of rack switch because the I/O traffic is being split out into its respective fabrics at the BladeCenter H.

What’s Needed

I’ll make it simple – here’s a list of components that are needed to make this method work:

- 2 x BNT Virtual Fabric 10 Gb Switch Module – part # 46C7191

- 2 x QLogic Virtual Fabric Extension Module – part # 46M6172

- a QLogic 2-port 10 Gb Converged Network Adapter per blade server – part # 42C1830

- an IBM 8 Gb SFP+ SW Optical Transceiver for each uplink needed to your fibre fabric – part # 44X1964 (note – the QLogic Virtual Fabric Extension Module doesn’t come with any, so you’ll need the same quantity for each module.)
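If you want to turn the list above into quantities for an order, a rough Python sketch might look like this. The part numbers come from the list; the function name and the `fc_uplinks_per_module` parameter are just names I made up for illustration.

```python
def bill_of_materials(blades, fc_uplinks_per_module):
    """Quantities for the redundant design: part description -> count.

    blades: number of blade servers getting a CNA.
    fc_uplinks_per_module: fibre uplinks cabled on EACH Extension Module.
    """
    if blades < 1 or fc_uplinks_per_module < 1:
        raise ValueError("need at least one blade and one fibre uplink")
    return {
        "46C7191 BNT Virtual Fabric 10 Gb Switch Module": 2,
        "46M6172 QLogic Virtual Fabric Extension Module": 2,
        "42C1830 QLogic 2-port 10 Gb CNA": blades,
        # One transceiver per uplink, same quantity on both modules.
        "44X1964 IBM 8 Gb SFP+ SW Optical Transceiver": 2 * fc_uplinks_per_module,
    }

for part, qty in bill_of_materials(blades=14, fc_uplinks_per_module=2).items():
    print(f"{qty:>3} x {part}")
```

This does not count the transceivers needed for the Ethernet uplinks on the BNT switch modules, so treat it as a starting point rather than a complete quote.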

The CNA cards connect to the BNT Virtual Fabric 10 Gb Switch Module in Bays 7 and 9. These switch modules have an internal connector to the QLogic Virtual Fabric Extension Module, located in Bays 3 and 5. The I/O traffic moves from the CNA cards to the BNT switch, which separates the Ethernet traffic and sends it out to the Ethernet fabric while the Fibre traffic routes internally to the QLogic Virtual Fabric Extension Modules. From the Extension Modules, the traffic flows into the Fibre Fabric.
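For those who think better in code, the traffic flow just described can be modelled as a tiny Python function. This is a toy model of the path only (the hop names are my own labels), not anything that runs on the switches.

```python
def traffic_path(traffic_type):
    """Return the hops from the blade to the fabric for one traffic type."""
    hops = ["CNA on blade", "BNT Virtual Fabric switch (Bay 7 or 9)"]
    if traffic_type == "ethernet":
        # Ethernet leaves the chassis straight from the BNT switch uplinks.
        hops.append("Ethernet fabric")
    elif traffic_type == "fibre":
        # Fibre traffic takes the internal connector to the Extension Module.
        hops.append("QLogic Virtual Fabric Extension Module (Bay 3 or 5)")
        hops.append("Fibre fabric")
    else:
        raise ValueError(f"unknown traffic type: {traffic_type}")
    return hops

print(" -> ".join(traffic_path("ethernet")))
print(" -> ".join(traffic_path("fibre")))
```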

It’s important to understand the switches, and how they are connected, too, as this is a new approach for IBM. Previously the Bridge Bays (I/O Bays 5 and 6) really haven’t been used and IBM has never allowed for a card in the CFF-h slot to connect to the switch bay in I/O Bay 3.

There are a few other designs that are acceptable that will still give you the split fabric out of the chassis, however they were not “redundant” so I did not think they were relevant. If you want to read the full IBM Redbook on this offering, head over to IBM’s site.

A few things to note with the maximum redundancy design I mentioned above:

1) The CIOv slots on the HS22 and HS22v cannot be used. This is because I/O bay 3 is being used for the Extension Module, and since the CIOv slot is hard-wired to I/O bays 3 and 4, that will just cause problems – so don’t do it.

2) The BladeCenter E chassis is not supported for this configuration. It doesn’t have any “high speed bays” and quite frankly wasn’t designed to handle high I/O throughput like the BladeCenter H.

3) Only the parts listed above are supported. Don’t try and slip in a Cisco Fibre Switch Module or use the Emulex Virtual Adapter on the blade server – it won’t work. This is a QLogic design and they don’t want anyone else’s toys in their backyard.

That’s it. Let me know what you think by leaving a comment below. Thanks for stopping by!

10 Things That Cisco UCS Policies Can Do (That IBM, Dell or HP Can’t)

ViewYonder.com recently posted a great write-up on some things that Cisco’s UCS can do that IBM, Dell or HP really can’t. You can go to ViewYonder.com to read the full article, but here are 10 things that Cisco’s UCS Policies do:

- Chassis Discovery – allows you to decide how many links you should use from the FEX (2104) to the FI (6100). This affects the path from blades to FI and the oversubscription rate. If you’ve cabled 4, you can just use 2 if you want, or even 1.

- MAC Aging – helps you manage your MAC table. This affects the ability to scale, as bigger MAC tables need more management.

- Autoconfig – when you insert a blade, applies a specific template based on its hardware config and puts it in an organization automatically.

- Inheritance – when you insert a blade, allows you to automatically create a logical version (Service Profile) by copying the UUID, MAC, WWNs etc.

- vHBA Templates – helps you determine how you want _every_ vmhba2 to look (i.e. Fabric, VSAN, QoS, Pin to a border port)

- Dynamic vNICs – helps you determine how to distribute the VIFs on a VIC

- Host Firmware – enables you to determine what firmware to apply to the CNA, the HBA, HBA ROM, BIOS, LSI

- Scrub – provides you with the ability to wipe the local disks on association

- Server Pool Qualification – enables you to determine which hardware configurations live in which pool

- vNIC/vHBA Placement – helps you determine how to distribute VIFs over one or two CNAs
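To see why the Chassis Discovery policy affects the oversubscription rate, here is a small Python sketch. It assumes a chassis with 8 blades, each with one 10Gb path to the FEX, and 10Gb links from the FEX to the FI. Those defaults are my assumptions for illustration, not figures from the ViewYonder article.

```python
def oversubscription(links, blades=8, gbps_per_blade=10, gbps_per_link=10):
    """Ratio of blade-facing bandwidth to FEX-to-FI uplink bandwidth.

    links: number of FEX-to-FI links the policy uses (1, 2 or 4).
    The blade count and link speeds are illustrative assumptions.
    """
    if links not in (1, 2, 4):
        raise ValueError("this sketch only models 1, 2 or 4 links")
    return (blades * gbps_per_blade) / (links * gbps_per_link)

for links in (4, 2, 1):
    print(f"{links} link(s): {oversubscription(links):.0f}:1")
```

Under those assumptions, cabling 4 links but only using 1 takes you from 2:1 to 8:1, which is the kind of trade-off the policy lets you make.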

For more on this topic, visit Steve’s blog at ViewYonder.com. Nice job, Steve!

HP BladeSystem Rumours

I’ve recently posted some rumours about IBM’s upcoming announcements in their blade server line, now it is time to let you know some rumours I’m hearing about HP. NOTE: this is purely speculation, I have no definitive information from HP so this may be false info. That being said – here we go:

Rumour #1: Integration of “CNA” like devices on the motherboard.

As you may be aware, with the introduction of the “G6”, or Generation 6, of HP’s blade servers, HP added “FlexNICs” onto the servers’ motherboards instead of the 2 x 1Gb NICs that are standard on most of the competition’s blades. FlexNICs allow for the user to carve up a 10Gb NIC into 4 virtual NICs when using the Flex-10 Modules inside the chassis. (For a detailed description of Flex-10 technology, check out this HP video.) The idea behind Flex-10 is that you have 10Gb connectivity that allows you to do more with fewer NICs.
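As a rough illustration of the carving idea, here is a Python sketch that checks whether a proposed split of one 10Gb port is valid. The limit of 4 virtual NICs comes from the description above; the rule that the allocations cannot add up to more than 10Gb is my assumption, and the function name is made up.

```python
PORT_GBPS = 10      # one Flex-10 port
MAX_FLEXNICS = 4    # up to 4 virtual NICs per port, per the text above

def valid_flexnic_split(allocations_gbps):
    """True if the list of per-FlexNIC bandwidths fits on one 10Gb port."""
    if not 1 <= len(allocations_gbps) <= MAX_FLEXNICS:
        return False
    if any(a <= 0 for a in allocations_gbps):
        return False
    # Assumption: the FlexNICs share the port, so the total is capped at 10Gb.
    return sum(allocations_gbps) <= PORT_GBPS

print(valid_flexnic_split([4, 4, 1, 1]))     # fits on the port
print(valid_flexnic_split([5, 5, 1]))        # adds up to more than 10Gb
print(valid_flexnic_split([2, 2, 2, 2, 2]))  # too many FlexNICs
```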

SO – what’s next? Rumour has it that the “G7” servers, expected to be announced on March 16, will have an integrated CNA or Converged Network Adapter. With a CNA on the motherboard, both the Ethernet and the Fibre traffic will have a single integrated device to travel over. This is a VERY cool idea because this announcement could lead to a blade server that can eliminate the additional daughter card or mezzanine expansion slots, therefore freeing up valuable real estate for newer Intel CPU architecture.

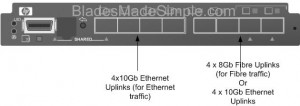

Rumour #2: Next generation Flex-10 Modules will separate Fibre and Network traffic.

Today, HP’s Flex-10 ONLY handles Ethernet traffic. There is no support for FCoE (Fibre Channel over Ethernet), so if you have a Fibre network, then you’ll also have to add a Fibre Switch into your BladeSystem chassis design. If HP does put a CNA onto their next generation blade servers that carries Fibre and Ethernet traffic, wouldn’t it make sense that there would need to be a module in the BladeSystem chassis that would allow for the storage and Ethernet traffic to exit?

I’m hearing that a new version of the Flex-10 Module is coming, very soon, that will allow for the Ethernet AND the Fibre traffic to exit out the switch. (The image to the right shows what it could look like.) The switch would allow for 4 of the uplink ports to go to the Ethernet fabric and the other 4 ports of the 8 port Next Generation Flex-10 switch to either be dedicated to a Fibre fabric OR used for additional 4 ports to the Ethernet fabric.

If this rumour is accurate, it could shake up things in the blade server world. Cisco UCS uses 10Gb Data Center Ethernet (Ethernet plus FCoE); IBM BladeCenter has the ability to do a 10Gb plus Fibre switch fabric (like HP) or it can use a 10Gb Enhanced Ethernet plus FCoE (like Cisco) however no one currently has a device to split the Ethernet and Fibre traffic at the blade chassis. If this rumour is true, then we should see it announced around the same time as the G7 blade server (March 16).

That’s all for now. As I come across more rumours, or information about new announcements, I’ll let you know.

Introducing the IBM HS22v Blade Server

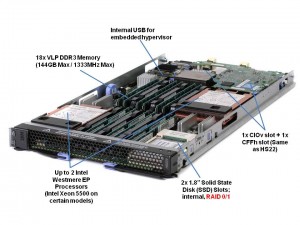

IBM officially announced today a new addition to their blade server line – the HS22v. Modeled after the HS22 blade server, the HS22v is touted by IBM as a “high density, high performance blade optimized for virtualization.” So what makes it so great for virtualization? Let’s take a look.

Memory

One of the big differences between the HS22v and the HS22 is more memory slots. The HS22v comes with 18 x very low profile (VLP) DDR3 memory DIMM slots for a maximum of 144GB RAM. This is a key attribute for a server running virtualization since everyone knows that VMs love memory. It is important to note, though, that the memory will only run at 800MHz when all 18 slots are used. In comparison, if you only had 6 memory DIMMs installed (3 per processor) then the memory would run at 1333MHz, and 12 DIMMs installed (6 per processor) would run at 1066MHz. As a final note on the memory, this server will be able to use both 1.5V and 1.35V memory. The 1.35V will be newer memory that is introduced as the Intel Westmere EP processor becomes available. The big deal about this is that lower voltage memory = lower overall power requirements.
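The speed-versus-capacity trade-off above is easy to capture in a short Python sketch. The three data points (3, 6 and 9 DIMMs per processor) come from this post; how the in-between counts behave, and the 8GB DIMM size (inferred from 144GB across 18 slots), are my assumptions.

```python
def memory_speed_mhz(dimms_per_cpu):
    """Memory speed for a given number of DIMMs per processor.

    Known points from the post: 3 -> 1333, 6 -> 1066, 9 -> 800.
    Treating counts in between as falling into the next tier is an assumption.
    """
    if not 1 <= dimms_per_cpu <= 9:
        raise ValueError("the HS22v has 9 DIMM slots per processor")
    if dimms_per_cpu <= 3:
        return 1333
    if dimms_per_cpu <= 6:
        return 1066
    return 800

def capacity_gb(dimms_per_cpu, cpus=2, dimm_gb=8):
    """Total RAM; dimm_gb=8 is inferred from 144GB / 18 slots."""
    return dimms_per_cpu * cpus * dimm_gb

for n in (3, 6, 9):
    print(f"{n} per CPU: {capacity_gb(n)}GB at {memory_speed_mhz(n)}MHz")
```

So with 8GB DIMMs, you would be choosing between 48GB at full speed and 144GB at the slowest speed, with 96GB in the middle.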

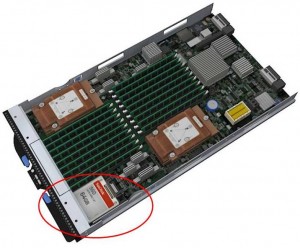

Drives

The second big difference is that the HS22v does not use hot-swap drives like the HS22 does. Instead, it uses 2 x solid state drives (SSD) for local storage. These drives have hardware RAID 0/1 capabilities standard. Although the picture to the right shows a 64GB SSD drive, my understanding is that only 50GB drives will be available as they start to become readily available on March 19, with larger sizes (64GB and 128GB) becoming available in the near future. Another thing to note is that the image shows a single SSD drive; the 2nd drive is located directly beneath.

So – why did IBM go back to using internal drives? For a few reasons:

Reason #1 : in order to get the space to add the extra memory slots, a change had to be made in the design. IBM decided that solid state drives were the best fit.

Reason #2: the SSD design allows the server to run with lower power. It’s well known that SSD drives run at a much lower power draw than physical spinning disks, so using SSD’s will help the HS22v be a more power efficient blade server than the HS22.

Reason #3: a common trend of virtualization hosts, especially VMware ESXi, is to run on integrated USB devices. By using an integrated USB key for your virtualization software, you can eliminate the need for spinning disks, or even SSD’s therefore reducing your overall cost of the server.

Processors

So here’s the sticky area. IBM will be releasing the HS22v with the Intel Xeon 5500 processor first. Later in March, as the Intel Westmere EP (Intel Xeon 5600) is announced, IBM will have models that come with it. IBM will have both Xeon 5500 and Xeon 5600 processor offerings. Why is this? I think for a couple of reasons:

a) the Xeon 5500 and the Xeon 5600 will use the same chipset (motherboard) so it will be easy for IBM to make one server board, and plop in either the Nehalem EP or the Westmere EP

b) simple – IBM wants to get this product into the marketplace sooner rather than later.

Questions

1) Will it fit into the BladeCenter E?

YES – however there may be certain limitations, so I’d recommend you reference the IBM BladeCenter Interoperability Guide for details.

2) Is it certified to run VMware ESX 4?

YES

3) Why didn’t IBM call it HS22XM?

According to IBM, the “XM” name is feature focused while “V” is workload focused – a marketing strategy we’ll probably see more of from IBM in the future.

That’s it for now. If there are any questions you have about the HS22v, let me know in the comments and I’ll try to get some answers.

For more on the IBM HS22v, check out IBM’s web site here.

Check back with me in a few weeks when I’m able to give some more info on what’s coming from IBM!

Cisco Takes Top 8 Core VMware VMmark Server Position

Cisco is getting some (more) recognition with their UCS blade server product, as they recently achieved the top position for “8 Core Server” on VMware’s VMmark benchmark tool. VMmark is the industry’s first (and only credible) virtualization benchmark for x86-based computers. According to the VMmark website, the Cisco UCS B200 blade server reached a score of 25.06 @ 17 tiles. A “tile” is simply a collection of virtual machines (VMs) that are executing a set of diverse workloads designed to represent a natural work environment. The total number of tiles that a server can handle provides a detailed measurement of that server’s consolidation capacity.

Cisco’s Winning Configuration

So – how did Cisco reach the top server spot? Here’s the configuration:

server config:

- 2 x Intel Xeon X5570 Processors

- 96GB of RAM (16 x 8GB)

- 1 x Converged Network Adapter (Cisco UCS M71KR-Q)

storage config:

- EMC CX4-240

- Cisco MDS 9130

- 1154.27GB Used Disk Space

- 1024MB Array Cache

- 41 disks used on 4 enclosures/shelves (1 with 14 disks, 3 with 9 disks)

- 37 LUNs used

  - 17 at 38GB (file server + mail server) over 20 x 73GB SSDs

  - 17 at 15GB (database) + 2 LUNs at 400GB (Misc) over 16 x 450GB 15k disks

  - 1 LUN at 20GB (boot) over 5 x 300GB 15k disks

- RAID 0 for VMs, RAID 5 for VMware ESX 4.0 O/S
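As a quick sanity check on the storage numbers above, this Python sketch adds up the disks and LUNs from the breakdown and compares them with the stated totals (41 disks, 37 LUNs). The dictionary keys are just my own labels.

```python
# Disks per enclosure: 1 shelf with 14 disks, 3 shelves with 9 disks.
enclosures = [14, 9, 9, 9]

# Disks by type, from the LUN breakdown.
disks_by_type = {"73GB SSD": 20, "450GB 15k": 16, "300GB 15k": 5}

# LUN counts by purpose.
luns = {"file + mail (38GB)": 17, "database (15GB)": 17, "misc (400GB)": 2, "boot (20GB)": 1}

total_disks_by_shelf = sum(enclosures)
total_disks_by_type = sum(disks_by_type.values())
total_luns = sum(luns.values())

print(total_disks_by_shelf, total_disks_by_type, total_luns)
```

Both ways of counting disks agree with the 41 listed, and the LUNs add up to the 37 listed, so the published configuration is at least internally consistent.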

While first place on the VMmark page (8 cores) shows Fujitsu’s RX300, it’s important to note that it was reached using Intel’s W5590 processor – a processor that is designed for “workstations” – not servers. Second place, of server processors, currently shows HP’s BL490 with 24.54 (@ 17 tiles)

Thanks to Omar Sultan (@omarsultan) for Tweeting about this and to Harris Sussman for blogging about it.