Today Dell announced PowerEdge FX, a new server architecture that combines characteristics of both rack servers and blade servers. In this post I’ll give you a first look at the platform. First off, according to Dell’s Product Development team, this platform was not designed to replace Dell’s blade server portfolio. Instead, it was created to help bridge the gap between blade servers and rack servers. As you look at this new infrastructure, you may ask, is it really a “blade server”? I’ve always claimed that if it shares power, cooling and networking, it’s a “blade server”; however, I’ll let you form your own opinion.

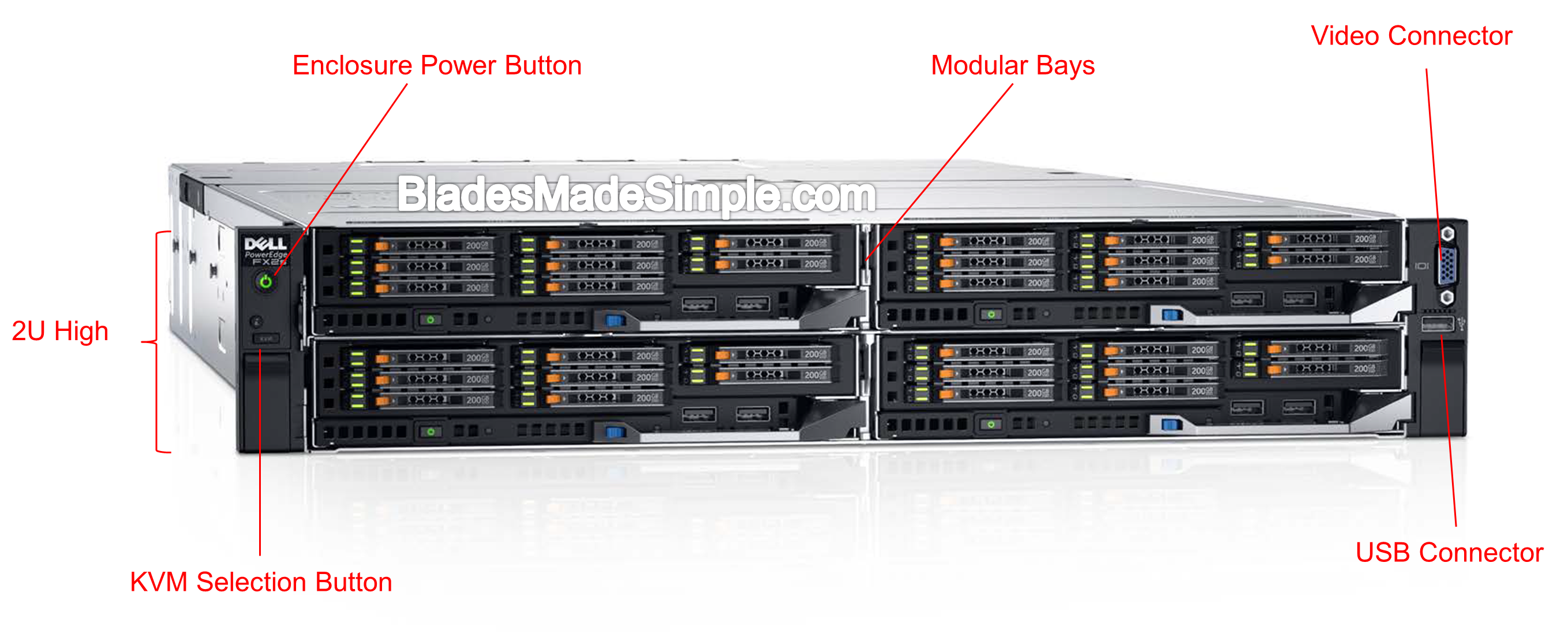

Dell’s PowerEdge FX architecture combines compute, storage and networking in just 2U of rack space. You can manage all the resources from a single console, or manage each server individually (1-to-1) as you would with traditional rack servers. The FX architecture consists of a 2U chassis with PCIe slots, server sleds and integrated networking.

FX Chassis

The base of the architecture is the 2U chassis designed to hold 1 to 8 sleds, depending upon the type of sled used.

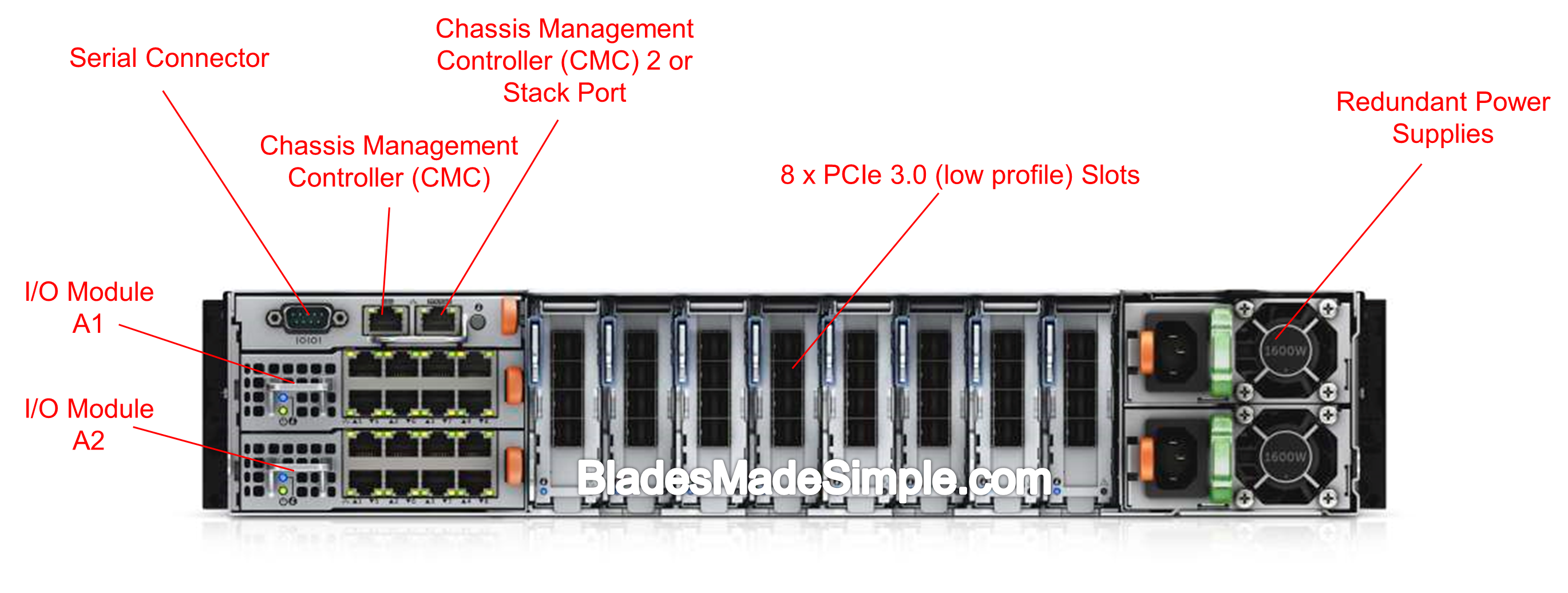

The FX2s chassis comes with 8 x PCIe Gen-3 low profile slots, a chassis management controller (CMC), redundant power supplies and two I/O modules. The advantage of PCIe slots is that they offer the flexibility to use whatever type of card a particular server requires instead of being tied to a particular “fabric” as in traditional blade chassis. While there will be a list of “supported” cards for these low profile slots, I imagine any low profile card would work. (Note, the image below shows 10GbE SFP+ cards.) You will probably see the names “FX2” and “FX2s” for the chassis; these are not typos. There will be two versions of the chassis: the PowerEdge FX2s, which contains PCIe slots (shown below), and the PowerEdge FX2, which has no PCIe slots. When you read about the FM120x4 servers below you’ll understand why there are two chassis offerings.

If you are familiar with the Dell PowerEdge M1000e blade chassis or the Dell PowerEdge VRTX, then the CMC found on the FX2 chassis should be easy to navigate. The FX2’s CMC allows a user to connect to a single IP address and manage the sleds, the I/O modules and the PCIe slots. There is a second CMC port that can be used either to stack to another FX2 chassis or as a second CMC management port for redundancy. If a user doesn’t want to manage via a CMC, each server can be managed via its built-in iDRAC (Integrated Dell Remote Access Controller). By managing the servers via the CMC, you’ll get alerts through the CMC instead of from each individual server, and you can do one-to-many tasks like updating firmware or assigning server profiles. An added benefit is that the CMC has the ability to manage up to 20 PowerEdge FX2 chassis.

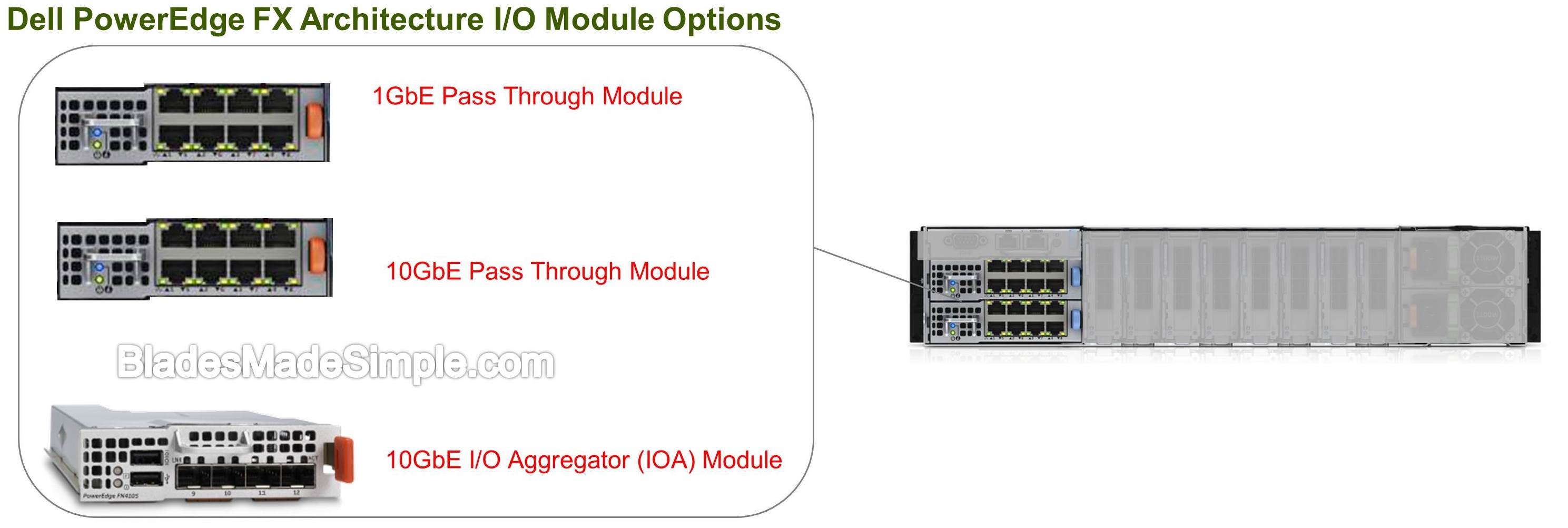

I/O Module

The I/O Module bays shown above are connected to the ports of the NICs that sit on each server’s Network Daughter Card (NDC). On a dual-port card, one port goes to A1 and the other goes to A2, providing redundancy. There will be several options to choose from for the I/O Modules: both 1GbE and 10GbE pass-through modules and a 10GbE I/O Aggregator. The pass-through modules provide non-switching connectivity, so each on-board NIC port of the server is connected directly to a top-of-rack switch. While this module is typically less expensive, it requires one Ethernet cable per NIC port that needs to be active.

In comparison, the 10GbE I/O Aggregator (officially known as the Dell PowerEdge FN I/O Aggregator) is similar to the Dell PowerEdge M I/O Aggregator available on the Dell PowerEdge M1000e chassis. It provides internal Layer 2 switching between the 8 internal 10GbE ports and 4 external 10GbE ports. This module allows all the internal NICs to be activated with as few as one connection per module to the top-of-rack environment.
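To make the cabling difference concrete, here is a rough sketch that counts the minimum number of top-of-rack cables for each module type. The function name and the four-server example are my own illustration, not anything from Dell; it assumes dual-port NDCs with one port landing on each of the two I/O modules, as described above.

```python
def uplink_cables(active_ports_on_module: int, module: str) -> int:
    """Minimum top-of-rack cables needed for ONE I/O module bay.

    Illustrative helper only (hypothetical name), based on the
    behaviour described in the article.
    """
    if active_ports_on_module == 0:
        return 0
    if module == "pass-through":
        # Non-switching: every active internal NIC port needs its own cable.
        return active_ports_on_module
    if module == "aggregator":
        # Internal Layer 2 switching: one uplink can serve all internal ports.
        return 1
    raise ValueError(f"unknown module type: {module}")


# Assumed example: four half-width servers with dual-port NDCs,
# so each of the two modules (A1 and A2) sees one port per server.
servers = 4
modules = 2
pass_through_total = modules * uplink_cables(servers, "pass-through")
aggregator_total = modules * uplink_cables(servers, "aggregator")

print(f"pass-through: {pass_through_total} cables")
print(f"aggregator:   {aggregator_total} cables (minimum)")
```

In practice you would likely run more than one uplink per aggregator for bandwidth and resiliency; the figure here is only the minimum needed to light up every internal NIC.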

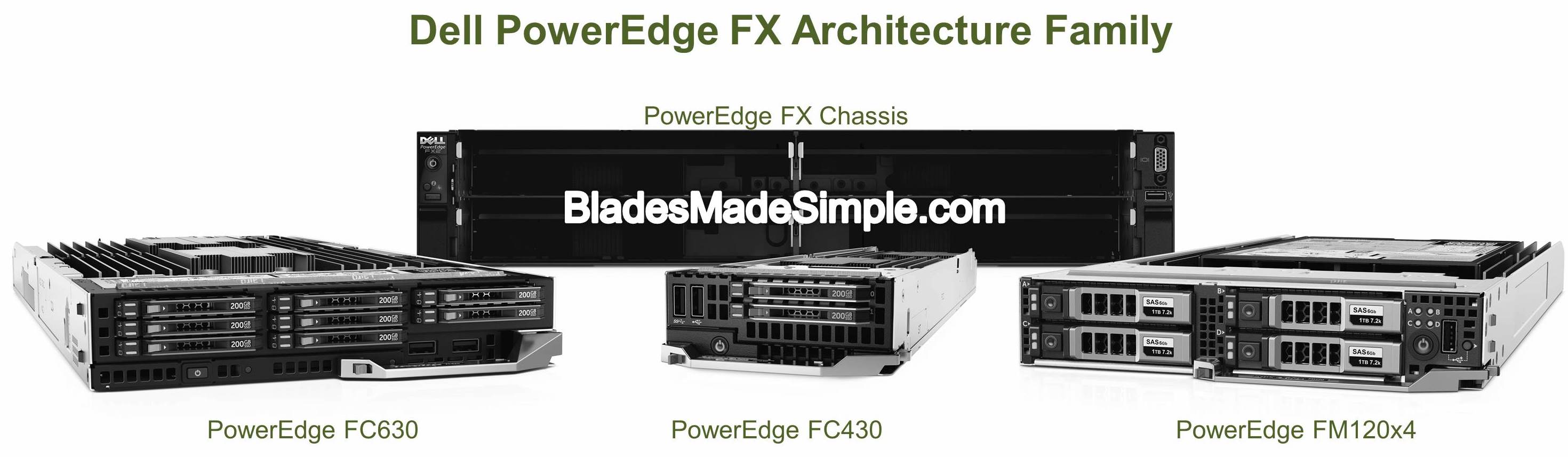

Servers

The PowerEdge FC630 is a dual-CPU server supporting Intel Xeon E5-2600 v3 processors, with up to 24 DDR4 DIMM slots, access to 2 x PCIe slots and up to 8 x 1.8″ SSD drives (a 2 x 2.5″ drive option is also available). This server is half-width, so you can fit up to 4 within a single Dell PowerEdge FX2s chassis, providing up to 144 cores, 96 DIMMs and 56TB of local storage within 2U. From a density perspective, 1 x FX2 chassis with 4 x Dell PowerEdge FC630 servers is twice as dense as the 1U Dell PowerEdge R630, and the FC630 is nearly identical to the R630 in design. This platform is ideal for most enterprise applications including virtualization.

The PowerEdge FC430 is a quarter-width server that holds up to 2 x Intel Xeon E5-2600 v3 CPUs (up to 14 cores each), up to 8 memory DIMMs, 2 x 1.8″ SSD drives (1 on the model with the optional front InfiniBand mezzanine), a dual-port 1/10GbE LOM and access to 1 PCIe slot. A fully loaded FX2s chassis with 8 x FC430 servers can provide up to 224 CPU cores, 64 DIMMs and up to 16 drives. This platform is ideal for workloads like web serving, virtualization, and dedicated hosting.

The PowerEdge FC830 will be released in Q1 2015 and is planned to be a full-width server that will hold up to 4 CPUs (next generation Intel Xeon E5-4600 series) and 48 DIMMs. The FX2s chassis will hold 2 x FC830s.

The PowerEdge FM120x4 is a half-width sled that uses a System-on-Chip (SoC) design and holds four individual single-socket microservers. Each FM120 microserver is powered by a single Intel Atom processor C2000 with up to eight cores, two DIMMs of memory, one 2.5-inch front-access hard drive or two 1.8-inch SSDs, and an integrated 1GbE NIC on the SoC. PCIe connectivity is not supported for the FM120x4. A fully loaded FX2 chassis holds 4 x FM120x4 sleds for a total of 16 microservers, offering impressive density. Using eight-core processors, that works out to 128 cores and 32 DIMMs of memory in a single 2U FX2 chassis. Ideal workloads for this platform include web services and batch data analytics.
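The per-chassis density figures quoted for the compute sleds all come from the same multiplication, which the sketch below spells out. The `Sled` class and its field names are my own illustration. The per-node values are taken from the descriptions above, except the 18 cores per socket for the FC630, which I am inferring from the 144-core total rather than quoting from a spec sheet.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Sled:
    """Illustrative model of one compute sled type (hypothetical names)."""
    name: str
    sleds_per_chassis: int     # how many sleds fit in one 2U chassis
    nodes_per_sled: int        # servers (or microservers) per sled
    sockets_per_node: int
    max_cores_per_socket: int
    dimms_per_node: int

    def chassis_totals(self):
        """Return (nodes, cores, dimms) for a fully loaded chassis."""
        nodes = self.sleds_per_chassis * self.nodes_per_sled
        cores = nodes * self.sockets_per_node * self.max_cores_per_socket
        dimms = nodes * self.dimms_per_node
        return nodes, cores, dimms


SLEDS = [
    # 18 cores/socket is inferred from the article's 144-core figure.
    Sled("FC630", 4, 1, 2, 18, 24),
    Sled("FC430", 8, 1, 2, 14, 8),
    Sled("FM120x4", 4, 4, 1, 8, 2),
]

totals = {sled.name: sled.chassis_totals() for sled in SLEDS}

for name, (nodes, cores, dimms) in totals.items():
    print(f"{name:8s} nodes={nodes:2d} cores={cores:3d} dimms={dimms:2d}")
```

The FC830 is left out because its core counts had not been announced at the time of writing.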

The PowerEdge FD332 is a half-width flexible disk sled that will allow up to 16 x 2.5″ hot-plug drives (12Gb/s SAS) to be connected to one or two servers via dual on-board PERC9 RAID controllers. Up to 3 FD332s will be supported per PowerEdge FX2 chassis; however, the FD332 will not support the FM120x4. (Note: if you watch the video below you’ll actually get to see this sled.) To put this into perspective, if you pair a single FC630 with 3 x FD332s, you’d have a 2 CPU server with 24 DIMMs and 48 x 2.5″ drives. That’s the most density you’ll find in a 2U server on the market.
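If you want to sanity-check a sled mix like the FC630 plus 3 x FD332 example, the sketch below treats the chassis as 8 quarter-width units, which follows from it holding 8 quarter-width, 4 half-width or 2 full-width sleds. The names and rules here are my own simplification of what is described above, not Dell's official configuration rules. In particular, it treats any chassis that mixes FD332 and FM120x4 sleds as invalid, which is an assumption on my part.

```python
from typing import Dict, List

# Sled widths in quarter-width units (assumed model: chassis = 8 units).
WIDTH_UNITS = {"FC430": 1, "FC630": 2, "FM120x4": 2, "FD332": 2, "FC830": 4}
CHASSIS_UNITS = 8
MAX_FD332 = 3          # from the article: up to 3 FD332s per chassis
FD332_DRIVES = 16      # from the article: up to 16 x 2.5" drives per FD332


def check_config(sleds: Dict[str, int]) -> List[str]:
    """Return a list of problems with a sled mix (empty list means OK).

    Simplified, hypothetical rules; real supported configurations
    should be confirmed against Dell's documentation.
    """
    problems = []
    used = sum(WIDTH_UNITS[name] * count for name, count in sleds.items())
    if used > CHASSIS_UNITS:
        problems.append(f"needs {used} units, chassis has {CHASSIS_UNITS}")
    if sleds.get("FD332", 0) > MAX_FD332:
        problems.append(f"more than {MAX_FD332} FD332 sleds")
    if sleds.get("FD332", 0) and sleds.get("FM120x4", 0):
        # Assumption: treat any FD332 + FM120x4 mix as unsupported.
        problems.append("FD332 does not support the FM120x4")
    return problems


# The example from the article: one FC630 with three FD332 sleds.
config = {"FC630": 1, "FD332": 3}
problems = check_config(config)
fd332_drives = config["FD332"] * FD332_DRIVES

print("problems:", problems)
print("FD332 drives:", fd332_drives)
```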

The PowerEdge FX architecture including PowerEdge FX2 chassis and initial sleds (FC630, FM120x4) will be available in December 2014 while the FC430, FC830 and FD332 sleds are scheduled to release in Q1 2015.

Spec sheets

Video Overview

Kevin Houston is the founder and Editor-in-Chief of BladesMadeSimple.com. He has over 17 years of experience in the x86 server marketplace. Since 1997 Kevin has worked at several resellers in the Atlanta area, and has a vast array of competitive x86 server knowledge and certifications as well as an in-depth understanding of VMware and Citrix virtualization. Kevin works for Dell as a Server Sales Engineer covering the Global Enterprise market.

Hi Kevin,

Very informative. However, after studying the FC430 and FX2 chassis documentation, I came to know that there is only one midplane in the chassis, and likewise a single midplane connector on each server. A failure of that single midplane connector may result in failure of the entire system.

Source:

1. PowerEdge FX SOURCEBOOK 2 4 Updated June 25 2015 / Page # 123.

2. PowerEdge-FX-Architecture-Technical-Guide / Page # 59/60

Please comment………….

Thanks for the comment. The FX2 chassis does have a midplane, like all of the other modular systems on the market. It is a “passive” component, so failure is unlikely; however, if it needed to be serviced (e.g. due to a lightning strike) it would require that all of the servers be powered down and removed. There is a really nice Dell video of the midplane at https://www.youtube.com/watch?v=rAzE2mg2aso (check out the 2:09 mark). One interesting advantage of the connectors, as you’ll see in the video, is that the pins reside on the server, not the midplane, so if a server were jammed in, any pin damage would be on the server side only. I hope this helps.

As a side note to your comment, the SOURCEBOOK is a Dell internal paper and not designed for use outside of Dell. It’s a dynamic document constantly changing so it’s not recommended that you reference it unless you work at Dell and have access to the most current version. Thanks, again, for the comment.

Dear Kevin,

Many thanks for the reply. As you rightly said, it is passive and there is only one, but it carries both the primary and secondary paths. If it fails we will be completely disconnected.

Please reply.

A passive midplane means no active components. As with ANY modular system, if it has to be replaced then you will have an outage. If your application is not designed to handle an outage then a modular design may not be the best recommendation.

Thanks, watched the video and it seems not a big deal.

Hello,

So we cannot share the FD332 with three servers?

What about three ESXi hosts and shared storage?

Thanks

Nice article, thank you. Is it wise to replace M1000e with FX2 chassis if we are using just 4 or 5 Blades? What are the advantages/disadvantages of FX2 over M1000e chassis?

Thanks in advance.
