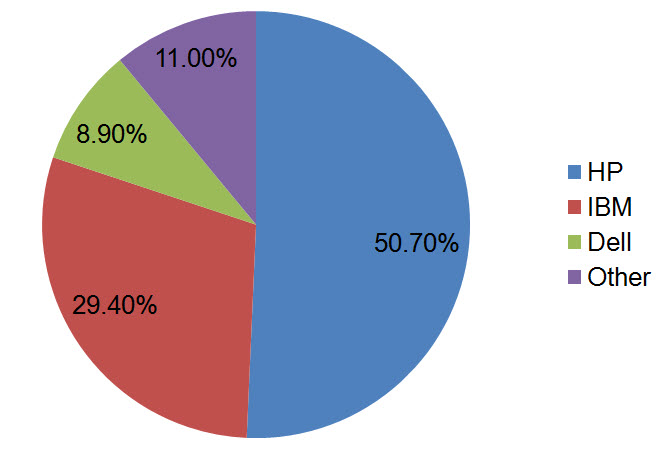

IDC reported on Wednesday that blade server sales returned to quarterly revenue growth in Q3 2009, with factory revenues increasing 1.2% year over year. However, shipments declined 14.0% year over year. Overall, blade servers accounted for $1.4 billion in Q3 2009, or 13.6% of overall server revenue. Of the top 5 OEM blade manufacturers, IBM experienced the strongest blade growth, gaining 6.0 points of market share. However, HP still holds the largest share for Q3 2009 with 50.7% of revenue, followed by IBM with 29.4% and Dell in 3rd place with a lowly 8.9%.

According to Jed Scaramella, senior research analyst in IDC's Datacenter and Enterprise Server group, "Customers are leveraging blade technologies to optimize their environments in response to the pressure of the economic downturn and tighter budgets. Blade technologies provide IT organizations the capability to simplify their IT while improving asset utilization, IT flexibility, and energy efficiency. For the second consecutive quarter, the blade segment increased in revenue on a quarter-to-quarter basis, while simultaneously increasing their average sales value (ASV). This was driven by next generation processors (Intel Nehalem) and a greater amount of memory, which customers are utilizing for more virtualization deployments. IDC sees virtualization and blades are closely associated technologies that drive dynamic IT for the future datacenter."

Cisco, EMC and VMware Announcement – My Thoughts

By now I’m sure you’ve read about, heard about or seen a Tweet about the announcement that Cisco, EMC and VMware have come together and created the Virtual Computing Environment coalition. So what does this announcement really mean? Here are my thoughts:

Greater Cooperation and Compatibility

Since these 3 top IT giants are working together, I expect to see greater cooperation among all three vendors, which will lead to a better understanding of what each vendor is offering. More important, though, is that we’ll have reference architectures that can serve as a starting point for designing a robust datacenter. This will help validate that an “optimized datacenter” is a solution every customer should consider.

Technology Validation

With the introduction of Intel’s Xeon 5500 processor earlier this year and the announcement of the Nehalem EX coming early in Q1 2010, putting more and more virtual machines onto a single host server is becoming more common. The processor and memory are no longer the bottleneck – now it’s the I/O. With the introduction of Converged Network Adapters (CNAs), servers now have access to Converged Enhanced Ethernet (CEE), also called DataCenter Ethernet (DCE), which provides up to 10Gb of bandwidth running at 80% efficiency without dropping packets. With this lossless Ethernet, I/O is no longer the bottleneck.
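The “lossless” part comes from pause-based flow control: when a receiver’s buffer fills past a threshold, it tells the sender to stop rather than dropping frames. The toy Python model below illustrates that idea only. The tick-based timing, the `xoff`/`xon` thresholds and the buffer sizes are illustrative assumptions, and the model does not cover per-priority pausing, cable delay, or the 80% efficiency figure.

```python
# Toy model of pause-based ("lossless") Ethernet flow control.
# Assumptions: time advances in ticks, the sender always has frames to send,
# and a pause/resume decision takes effect on the next tick. Real CEE/DCE
# pauses per traffic priority and must budget for cable delay; this does not.

def simulate(ticks, link_rate, drain_rate, buffer_size,
             pause_enabled, xoff=30, xon=10):
    """Count dropped and delivered frames for one sender and one receive buffer."""
    if pause_enabled and not (xon < xoff and xoff + link_rate <= buffer_size):
        # Headroom rule for this model: an unpaused sender always sees
        # queue < xoff, so one more tick of frames must still fit.
        raise ValueError("need xon < xoff and xoff + link_rate <= buffer_size")
    queue = dropped = delivered = 0
    paused = False
    for _ in range(ticks):
        if not paused:
            queue += link_rate                 # sender transmits a tick's worth
            if queue > buffer_size:            # buffer full: tail-drop the excess
                dropped += queue - buffer_size
                queue = buffer_size
        served = min(queue, drain_rate)        # receiver processes frames
        queue -= served
        delivered += served
        if pause_enabled:
            if queue >= xoff:
                paused = True                  # high-water mark: ask sender to pause
            elif queue <= xon:
                paused = False                 # low-water mark: let sender resume
    return {"dropped": dropped, "delivered": delivered}


lossy = simulate(100, link_rate=10, drain_rate=8, buffer_size=40, pause_enabled=False)
lossless = simulate(100, link_rate=10, drain_rate=8, buffer_size=40, pause_enabled=True)
print(lossy, lossless)
```

With these numbers the run without pause starts dropping frames once the 40-frame buffer fills, while the run with pause drops none and still delivers the same total, because the receiver never runs dry. The gap between `xoff` and `xon` keeps the sender from flapping between paused and unpaused on every tick.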

VMware offers the top selling virtualization software, so it makes sense they would be a good fit for this solution.

Cisco’s Unified Computing System can connect a server running a CNA to an Interconnect switch that splits the data out into Ethernet and storage traffic. It also uses a building-block design that makes it easy to add new servers – a key message in the Coalition announcement.
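One way a switch can split converged traffic is by EtherType: FCoE frames carry EtherType 0x8906 and FIP control frames carry 0x8914, so they can be handed to the Fibre Channel side while everything else is switched as ordinary Ethernet. The Python sketch below shows only that classification step, assuming standard FCoE framing with an optional 802.1Q VLAN tag. `classify` and `make_frame` are hypothetical helpers, not a description of the UCS 6100’s internals.

```python
import struct

ETH_P_8021Q = 0x8100  # 802.1Q VLAN tag
ETH_P_FCOE = 0x8906   # Fibre Channel over Ethernet
ETH_P_FIP = 0x8914    # FCoE Initialization Protocol


def classify(frame: bytes) -> str:
    """Return 'storage' for FCoE/FIP frames and 'ethernet' for everything else."""
    if len(frame) < 14:
        raise ValueError("frame shorter than an Ethernet header")
    (ethertype,) = struct.unpack_from("!H", frame, 12)  # after dst + src MACs
    if ethertype == ETH_P_8021Q:
        if len(frame) < 18:
            raise ValueError("truncated 802.1Q tag")
        (ethertype,) = struct.unpack_from("!H", frame, 16)  # inner EtherType
    return "storage" if ethertype in (ETH_P_FCOE, ETH_P_FIP) else "ethernet"


def make_frame(ethertype: int, vlan=None, payload: bytes = b"") -> bytes:
    """Build a minimal test frame (no padding, no FCS, priority bits left at 0)."""
    dst = bytes.fromhex("0efc00000001")  # arbitrary example MAC addresses
    src = bytes.fromhex("001b21000002")
    tag = b"" if vlan is None else struct.pack("!HH", ETH_P_8021Q, vlan & 0x0FFF)
    return dst + src + tag + struct.pack("!H", ethertype) + payload


print(classify(make_frame(0x0800)))               # IPv4 frame: ethernet
print(classify(make_frame(ETH_P_FCOE, vlan=100)))  # tagged FCoE frame: storage
```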

EMC offers a storage platform that can take the storage traffic from the Cisco UCS 6120XP Interconnect Switch, and EMC has a vested interest in VMware and Cisco, so this marriage of 3 top IT vendors is a great fit.

Announcement of Vblock™ Infrastructure Packages

According to the announcement, the Vblock Infrastructure Packages “will provide customers with a fundamentally better approach to streamlining and optimizing IT strategies around private clouds.” The packages will be fully integrated, tested and validated, and will combine best-in-class virtualization, networking, computing, storage, security, and management technologies from Cisco, EMC and VMware with end-to-end vendor accountability. My thought on these packages is that they are really nothing new: Cisco’s UCS, VMware vSphere and EMC’s storage have all been around. The biggest message from this announcement is that there will soon be “bundles” that simplify customers’ solutions. Will that take away from Solution Providers’ ability to implement unique solutions? I don’t think so. Although this announcement does not introduce any new products, it does mark the beginning of an interesting relationship between 3 top IT giants, and I think it will definitely change the industry. It will be interesting to see what follows.

UPDATE – click here to check out a 3D model of the Vblock architecture.

Cisco’s Unified Computing System Management Software

Cisco’s own Omar Sultan and Brian Schwarz recently blogged about Cisco’s Unified Computing System (UCS) Manager software and offered up a pair of videos demonstrating its capabilities. In my opinion, the management software is the magic that is going to push Cisco out of the Visionaries quadrant of Gartner’s Magic Quadrant for Blade Servers and into the Leaders quadrant.

The Cisco UCS Manager is the centralized management interface that integrates the entire set of Cisco Unified Computing System components. The management software participates not only in UCS blade server provisioning, but also in device discovery, inventory, configuration, diagnostics, monitoring, fault detection, auditing, and statistics collection.

On Omar’s Cisco blog, located at http://blogs.cisco.com/datacenter, Omar and Brian posted two videos. Part 1 offers a general overview of the management software, whereas Part 2 highlights the capabilities of profiles.

I encourage you to check out the videos – they did a great job with them.

Cisco's New Virtualized Adapter (aka "Palo")

Previously known as “Palo”, Cisco’s virtualized adapter allows a server to split its 10Gb pipes into numerous virtual pipes (see below), such as multiple NICs or multiple Fibre Channel HBAs. Although the card shown in the image to the left is a normal PCIe card, the card will initially launch in the Cisco UCS blade server.

So, What’s the Big Deal?

When you look at server workloads, their needs vary – web servers need a pair of NICs, whereas database servers may need 4+ NICs and 2+ HBAs. Because the 10Gb pipe can be split into virtual devices, you can set up profiles inside Cisco’s UCS Manager that match a specific server’s needs. For example, a server could be used for VMware VDI (6 NICs and 2 HBAs) during the day, then repurposed at night as a computational server needing only 4 NICs.
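To make the day/night example concrete, here is a minimal Python sketch that models a profile as a count of virtual NICs and HBAs and re-applies a different profile to the same blade. `Profile`, `Blade` and `apply` are hypothetical names; this is not UCS Manager’s object model or API, and the per-blade device limit is simply a parameter.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Profile:
    """Hypothetical stand-in for a UCS Manager profile (not its real schema)."""
    name: str
    nics: int
    hbas: int

    @property
    def virtual_devices(self) -> int:
        return self.nics + self.hbas


# The two workloads from the example above.
VDI_DAY = Profile("vdi-day", nics=6, hbas=2)
COMPUTE_NIGHT = Profile("compute-night", nics=4, hbas=0)


class Blade:
    def __init__(self, slot: int, max_virtual_devices: int):
        self.slot = slot
        self.max_virtual_devices = max_virtual_devices
        self.profile: Optional[Profile] = None

    def apply(self, profile: Profile) -> None:
        """Re-carve the adapter's 10Gb pipe into the profile's NICs and HBAs."""
        if profile.virtual_devices > self.max_virtual_devices:
            raise ValueError(
                f"{profile.name} needs {profile.virtual_devices} virtual devices; "
                f"slot {self.slot} allows {self.max_virtual_devices}"
            )
        self.profile = profile


blade = Blade(slot=1, max_virtual_devices=13)
blade.apply(VDI_DAY)        # daytime: 6 NICs + 2 HBAs
blade.apply(COMPUTE_NIGHT)  # nighttime: 4 NICs only
print(blade.profile.name, blade.profile.virtual_devices)
```

Keeping the profile as data, separate from the blade, is what lets the same hardware be repurposed without recabling; the limit check matters because, as the next paragraph explains, the usable number of virtual devices depends on the uplinks.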

Also note that although the image shows 128 virtual devices, that is only the theoretical limit. In reality, the number of virtual devices depends on the number of connections to the Fabric Interconnects. As I previously posted, each server chassis has a pair of 4-port Fabric Extenders (aka FEX) that uplink to the UCS 6100 Fabric Interconnect. If only 1 of the 4 FEX ports is uplinked to the UCS 6100, then only 13 virtual devices will be available. If 2 FEX ports are uplinked, 28 virtual devices will be available, and if all 4 FEX ports are uplinked, 58 virtual devices will be available.
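The three figures above happen to fit one pattern: 15 virtual devices per uplinked FEX port, minus 2 (15 × 1 − 2 = 13, 15 × 2 − 2 = 28, 15 × 4 − 2 = 58). The Python sketch below simply encodes that fit, restricted to the 1, 2 and 4-uplink cases the post lists. Treat the formula as an assumption reverse-engineered from these numbers rather than a published Cisco rule, and `available_virtual_devices` as a hypothetical helper.

```python
# Uplink counts per Fabric Extender (FEX) covered by the figures in the post.
SUPPORTED_UPLINKS = (1, 2, 4)


def available_virtual_devices(fex_uplinks: int) -> int:
    """Virtual devices implied by the post's figures: 15 per uplink, minus 2.

    Assumption: this linear fit reproduces the quoted 13/28/58 numbers;
    it is not taken from Cisco documentation.
    """
    if fex_uplinks not in SUPPORTED_UPLINKS:
        raise ValueError(f"expected one of {SUPPORTED_UPLINKS}, got {fex_uplinks}")
    return 15 * fex_uplinks - 2


for n in SUPPORTED_UPLINKS:
    print(n, available_virtual_devices(n))  # 1 13, then 2 28, then 4 58
```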

Will the ability to carve up your 10Gb pipes into smaller ones make a difference? It’s hard to tell. I guess we’ll see when this card starts to ship in December of 2009.

Cisco's UCS Software

eWeek recently posted snapshots of Cisco’s Unified Computing System (UCS) Software on their site: http://www.eweek.com/c/a/IT-Infrastructure/LABS-GALLERY-Cisco-UCS-Unified-Computing-System-Software-199462/?kc=rss

Take a good look at the software, because it is the reason this blade system will be successful: it treats the physical blades as a resource – just CPUs, memory and I/O. “What” the server should be and “how” the server should act are defined in the UCS Management software. It shows you the physical layout of the blades connected to the UCS 6100 Interconnect, it shows the configurations of the blades in the attached UCS 5108 chassis, it sets the boot order of the blades, and more. Quite frankly, there are too many features to mention, and I don’t want to steal eWeek’s thunder, so take a few minutes to browse the gallery linked above.
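The “blades as a resource” idea can be sketched as follows: the server’s definition (its name and boot order) lives in software and gets bound to whichever free blade has enough CPU cores and memory. Everything here (`PhysicalBlade`, `ServerDefinition`, `place`, the slot names and sizes) is a hypothetical Python illustration, not how UCS Manager actually selects blades.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PhysicalBlade:
    """A blade reduced to raw resources: just CPU cores and memory."""
    slot: str
    cores: int
    memory_gb: int
    assigned: Optional[str] = None              # definition currently running here
    boot_order: List[str] = field(default_factory=list)


@dataclass
class ServerDefinition:
    """Hypothetical record of what the server is and how it should act."""
    name: str
    min_cores: int
    min_memory_gb: int
    boot_order: List[str] = field(default_factory=lambda: ["san", "lan"])


def place(defn: ServerDefinition, pool: List[PhysicalBlade]) -> PhysicalBlade:
    """Bind the definition to the first free blade that meets its needs."""
    for blade in pool:
        if (blade.assigned is None
                and blade.cores >= defn.min_cores
                and blade.memory_gb >= defn.min_memory_gb):
            blade.assigned = defn.name
            blade.boot_order = list(defn.boot_order)  # boot order follows the definition
            return blade
    raise LookupError(f"no free blade can host {defn.name}")


pool = [PhysicalBlade("chassis1/slot1", cores=8, memory_gb=24),
        PhysicalBlade("chassis1/slot2", cores=8, memory_gb=48)]
db = ServerDefinition("db01", min_cores=8, min_memory_gb=32, boot_order=["san"])
print(place(db, pool).slot)  # chassis1/slot2: slot1 lacks the memory
```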

How IBM's BladeCenter works with Cisco Nexus 5000

Other than Cisco’s UCS offering, IBM is currently the only blade vendor that offers a Converged Network Adapter (CNA) for its blade servers. The 2-port CNA sits in a PCI Express slot on the server and is mapped to the high-speed bays, with CNA port #1 going to High Speed Bay #7 and CNA port #2 going to High Speed Bay #9. Here’s an overview of the IBM BladeCenter H I/O architecture (click to open a larger image):

Since the CNAs are only connected to I/O Bays 7 and 9, those are the only bays that need a “switch” for the converged traffic to leave the chassis. At this time, the only way to get the converged traffic out of the IBM BladeCenter H is through a 10Gb “pass-thru” module. A pass-thru module is not a switch; it simply passes the signal through to the next layer, in this case the Cisco Nexus 5000.

10 Gb Ethernet Pass-thru Module for IBM BladeCenter

The pass-thru module is relatively inexpensive; however, it requires a connection to the Nexus 5000 for every server that has a CNA installed. As a reminder, the IBM BladeCenter H can hold up to 14 servers with CNAs installed, which would require 14 of the 20 ports on a Nexus 5010. That is a small price to pay, however, to gain the 80% efficiency that 10Gb Datacenter Ethernet (or Converged Enhanced Ethernet) offers. The overall architecture for the IBM blade server with CNA + IBM BladeCenter H + Cisco Nexus 5000 would look like this (click to open a larger image):

Hopefully, when IBM announces its Cisco Nexus 4000 switch for the IBM BladeCenter H later this month, it will provide connectivity to CNAs on the IBM blade server and help reduce the number of connections required to the Cisco Nexus 5000 from 14 to perhaps 6 ;)
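The connection math above is easy to check. Assuming every CNA port and every uplink runs at 10Gb, this Python sketch compares the pass-thru case (one Nexus 5010 port per server) with the hoped-for 6-uplink switch. `uplink_summary` is a hypothetical helper, and the 6-uplink figure is the post’s guess, not an announced spec.

```python
from fractions import Fraction

NEXUS_5010_PORTS = 20  # 10Gb ports on a Nexus 5010, per the post


def uplink_summary(servers: int, uplinks: int) -> dict:
    """Nexus 5010 ports used by one chassis I/O bay, plus its oversubscription."""
    if not 1 <= uplinks <= NEXUS_5010_PORTS:
        raise ValueError(f"uplinks must be between 1 and {NEXUS_5010_PORTS}")
    return {
        "ports_used": uplinks,
        "ports_free": NEXUS_5010_PORTS - uplinks,
        # Server-facing 10Gb links per 10Gb uplink (1 means no oversubscription).
        "oversubscription": Fraction(servers, uplinks),
    }


pass_thru = uplink_summary(servers=14, uplinks=14)  # one cable per CNA port
switched = uplink_summary(servers=14, uplinks=6)    # hoped-for 6 uplinks
print(pass_thru)
print(switched)
```

With 14 servers sharing 6 uplinks, the ratio is 7/3, or roughly 2.33:1 oversubscription, in exchange for freeing 8 more ports on the Nexus 5010.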

Cisco Announces Nexus 4000 Switch for Blade Chassis

Cisco is announcing the release of the Nexus 4000 switch today. It is designed to work in “other” blade vendors’ chassis, although Cisco isn’t announcing which vendors. My gut says that Dell and IBM will OEM it, but HP will stick with the ProCurve line it announced a few weeks ago. Here’s what I know about the Nexus 4000 switch:

1) It will aggregate 1Gb links into 10Gb uplinks. To me, this means that it will not be compatible with Converged Network Adapters (CNAs). From this description, it seems to be just the Cisco Nexus 2000 in a blade form factor: simply a “fabric extender” that lets all of the traffic flow into the Nexus 5000 switch.

2) It will run on the Nexus OS (NX-OS). This is key because it gives users a seamless environment across their servers and their Nexus switch infrastructure.

3) The Cisco Nexus 4000 will provide a “cost effective transition from multiple 1GbE links to a lossless 10GbE for virtualized environments.” This statement confuses me. Does it mean that the Cisco Nexus 4000 switch will work with 1Gb NICs as well as 10Gb CNAs, or is it just saying that traditional 1Gb NICs will be able to connect into a lossless unified fabric?

Cisco is holding a live broadcast at 10 am PST today, but I just reviewed the slide deck, and it covers this announcement at a VERY high level. Perhaps they are going to let each vendor (Dell and IBM) provide details once those vendors officially announce their switches. When they do, I’ll post the details here.