I wanted to post a few more rumours before I head out to HP in Houston for “HP Blades and Infrastructure Software Tech Day 2010”, so that it doesn’t appear that I got the info from HP. NOTE: this is purely speculation. I have no definitive information from HP, so this may be false info.

First off – the HP Rumour:

I’ve caught wind of a secret that may be truth, may be fiction, but I hope to find out for sure from the HP blade team in Houston. The rumour is that HP’s development team currently has a Cisco Nexus Blade Switch Module for the HP BladeSystem in their lab, and they are currently testing it out.

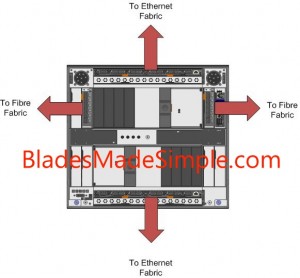

Now, this seems far-fetched, especially with the news of Cisco severing partner ties with HP. However, it seems that news tidbit was talking only about products made by Cisco but sold with the HP label (OEM). HP will continue to sell Cisco Catalyst switches for the HP BladeSystem and even Cisco-branded Nexus switches with HP part numbers (see this HP site for details). I have some doubt about this rumour of a Cisco Nexus switch that would go inside the HP BladeSystem, simply because I am 99% sure that HP is announcing a Flex10 type of BladeSystem switch that will allow converged traffic to be split out, with the Ethernet traffic going to the Ethernet fabric and the Fibre Channel traffic going to the Fibre Channel fabric (check out this rumour blog I posted a few days ago for details). Guess only time will tell.

The IBM Rumour:

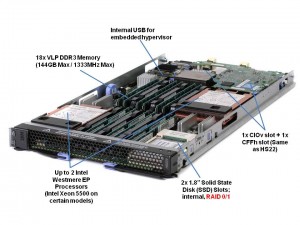

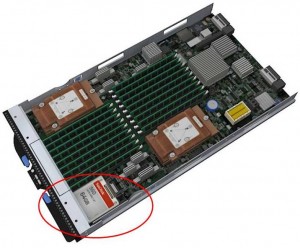

A few days ago I posted a rumour blog discussing the rumour that HP’s next generation of blades will add Converged Network Adapters (CNAs) to the motherboard (in lieu of the 1Gb or Flex10 NICs). Well, now I’ve uncovered a rumour that IBM is planning to follow later this year with blades that will also have CNAs on the motherboard. This is huge! Let me explain why.

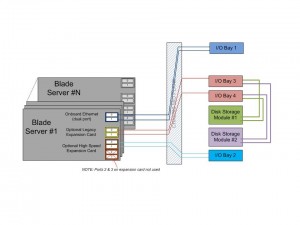

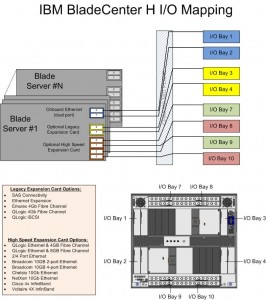

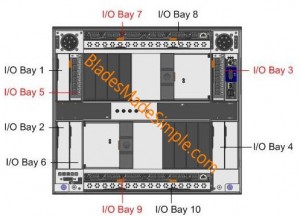

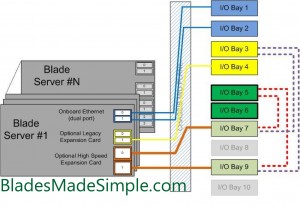

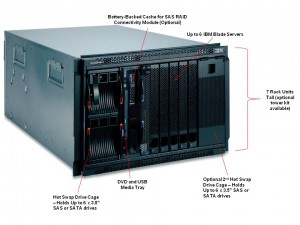

The design of IBM’s BladeCenter E and BladeCenter H has the 1Gb NICs onboard each blade server hard-wired to I/O Bays 1 and 2, meaning only Ethernet modules can be used in these bays (see the image to the left for details). However, I/O Bays 1 and 2 are for “standard form factor I/O modules” while I/O Bays 7-10 are for “high speed form factor I/O modules”. This means that I/O Bays 1 and 2 cannot handle “high speed” traffic, i.e. converged traffic.

This means that IF IBM comes out with a blade server that has a CNA on the motherboard, either:

a) the blade’s CNA will have to route to I/O Bays 7-10

OR

b) IBM’s going to have to come out with a new BladeCenter chassis that allows the high speed converged traffic from the CNAs to connect to a high speed switch module in Bays 1 and 2.
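To make the two options concrete, here is a minimal sketch of the bay constraint in Python. It models only what is described in this post (Bays 1 and 2 as standard form factor, Bays 7-10 as high speed); the class name, the `high_speed` label and the helper functions are made up for illustration and are not taken from any IBM documentation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class IOBay:
    number: int
    form_factor: str  # "standard" or "high_speed" (labels are assumptions)


# Bay layout as described in this post only; other bays are left out
# because the post does not discuss them.
BAYS_AS_DESCRIBED = [IOBay(1, "standard"), IOBay(2, "standard")] + [
    IOBay(n, "high_speed") for n in range(7, 11)
]


def converged_capable_bays(bays):
    """Return the bay numbers that could carry converged (high speed) traffic."""
    return [bay.number for bay in bays if bay.form_factor == "high_speed"]


def cna_wiring_works(bays, wired_to):
    """True if every bay the onboard CNA is wired to can carry converged traffic."""
    capable = set(converged_capable_bays(bays))
    return set(wired_to) <= capable


# Today's onboard NICs go to Bays 1 and 2, which would not work for a CNA.
print(cna_wiring_works(BAYS_AS_DESCRIBED, wired_to=(1, 2)))
# Option (a): route the onboard CNA to two of the high speed bays instead.
print(cna_wiring_works(BAYS_AS_DESCRIBED, wired_to=(7, 9)))
```

Option (b) would amount to changing the bay table itself, so that Bays 1 and 2 become high speed in a new chassis.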

So let’s think about this. If IBM (and HP for that matter) does put CNAs on the motherboard, is there a need for additional mezzanine/daughter cards? If not, the blade servers could have more real estate for memory, or more processors. And if there are no extra daughter cards, then there’s no need for additional I/O module bays. This means the blade chassis could be smaller and use less power – something every customer would like to have.

I can really see the blade market moving toward this type of design (not surprisingly, very similar to Cisco’s UCS design) – one where only a pair of redundant “modules” is needed to split converged traffic to the respective fabrics. Maybe it’s all a pipe dream, but if it comes true in 18 months, you can say you heard it here first.

Thanks for reading. Let me know your thoughts – leave your comments below.

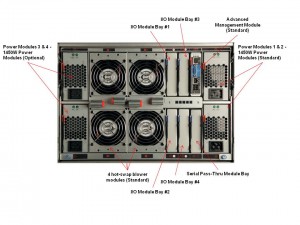

I love pictures – so I’ve attached an image of the BladeCenter S, as seen from the back. A few key points to make note of: