In the wake of the Cisco, EMC and VMware announcement, HP today is formally announcing the HP Converged Infrastructure. You can read the full details of this design on HP’s website, but I wanted to try to simplify it here:

The HP Converged Infrastructure is made up of four core areas:

- HP Infrastructure Operating Environment

- HP FlexFabric

- HP Virtual Resource Pools

- HP Data Center Smart Grid

According to HP, achieving the benefits of a “converged infrastructure” requires the following core attributes:

- Virtualized pools of servers, storage, networking

- Resiliency built into the hardware, software, and operating environment

- Orchestration through highly automated resources to deliver an application aligned according to policies

- Optimized to support widely changing workloads and different applications and usage models

- Modular components built on open standards to more easily upgrade systems and scale capacity

Let’s take a peek into each of the core areas that make up the HP Converged Infrastructure.

HP Infrastructure Operating Environment


This element of the converged infrastructure provides a shared services management engine that adapts and provisions the infrastructure. The goal of this core area is to expedite delivery and provisioning of the datacenter’s infrastructure.

The HP Infrastructure Operating Environment consists of two parts. The first is HP Dynamics, a command center that lets you continuously analyze and optimize your infrastructure. The second is HP Insight Control, HP’s existing server management software.

HP FlexFabric

HP defines this core area as a “next-generation, highly scalable data center fabric architecture and a technology layer in the HP Converged Infrastructure.” The goal of HP FlexFabric is to create a highly scalable, flat network domain. Within that domain, administrators can easily provision networks on demand to meet the requirements of virtual machines.

HP FlexFabric is made up of HP’s ProCurve networking line and its Virtual Connect technology. Beyond these familiar network components, it also includes the HP ProCurve Data Center Connection Manager as a fundamental component that provides automated network provisioning.

HP Virtual Resource Pools

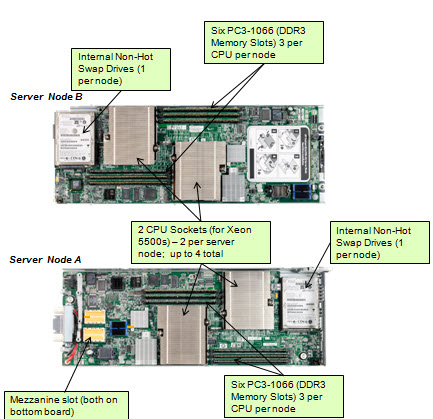

This core area is designed to allow for a virtualized collection of storage, servers and networking that can be shared, repurposed and provisioned as needed.

Most of HP’s enterprise products fit into this core area:

- HP 9000 and HP Integrity servers use HP Global Workload Manager to provision workloads.

- HP ProLiant servers can use VMware or Microsoft virtualization technologies.

- The HP StorageWorks SAN Virtualization Services Platform (SVSP) enables network-based (SAN) virtualization of heterogeneous disk arrays.

HP Data Center Smart Grid

The goal of this last core area of the HP Converged Infrastructure is to “create an intelligent, energy-aware environment across IT and facilities to optimize and reduce energy use, reclaiming facility capacity and reducing energy costs.”

HP approaches this core area with several products. The ProLiant G6 server line offers a “sea of sensors” that helps manage power and cooling consumption. HP also offers the Performance Optimized Datacenter (POD), a container-based datacenter designed to optimize power and cooling. Finally, HP uses its Insight Control software to manage HP Thermal Logic technologies and to control peaks and valleys in server power consumption.

Summary

In summary, HP’s Converged Infrastructure follows what many other vendors are doing: taking existing products and technologies and remarketing them under a more coherent message. Only time will tell whether this approach will succeed in growing HP’s business.

