News Flash: Cisco is now selling servers!

Okay – perhaps this isn’t news anymore, but the reality is Cisco has been getting a lot of press lately – from their overwhelming presence at VMworld 2009 to their ongoing cat fight with HP. Since I work for a Solutions Provider that sells HP, IBM and now Cisco blade servers, I figured it might be good to “try” to put together a comparison between Cisco and IBM. Why IBM? Simply because at this time, they are the only blade vendor that offers a Converged Network Adapter (CNA) that will work with the Cisco Nexus 5000 line. Dell and HP do not yet offer a CNA for their blade server lines, so IBM is the closest we can come to Cisco’s offering. I don’t plan on spending time educating you on blades, because if you are interested in this topic, you’ve probably already done your homework. My goal with this post is to show the pros (+) and cons (-) of each vendor’s blade offering, based on my personal, neutral observation.

Chassis Variety / Choice: winner in this category is IBM.

IBM currently offers 5 types of blade chassis: BladeCenter S, BladeCenter E, BladeCenter H, BladeCenter T and BladeCenter HT. Each IBM blade chassis has its own niche: the BladeCenter S is designed for small or remote offices and has local storage capabilities, whereas the BladeCenter HT is designed for Telco environments, with options for NEBS compliant features including DC power. At this time, Cisco offers only a single blade chassis (the 5108).

IBM BladeCenter H

Cisco UCS 5108

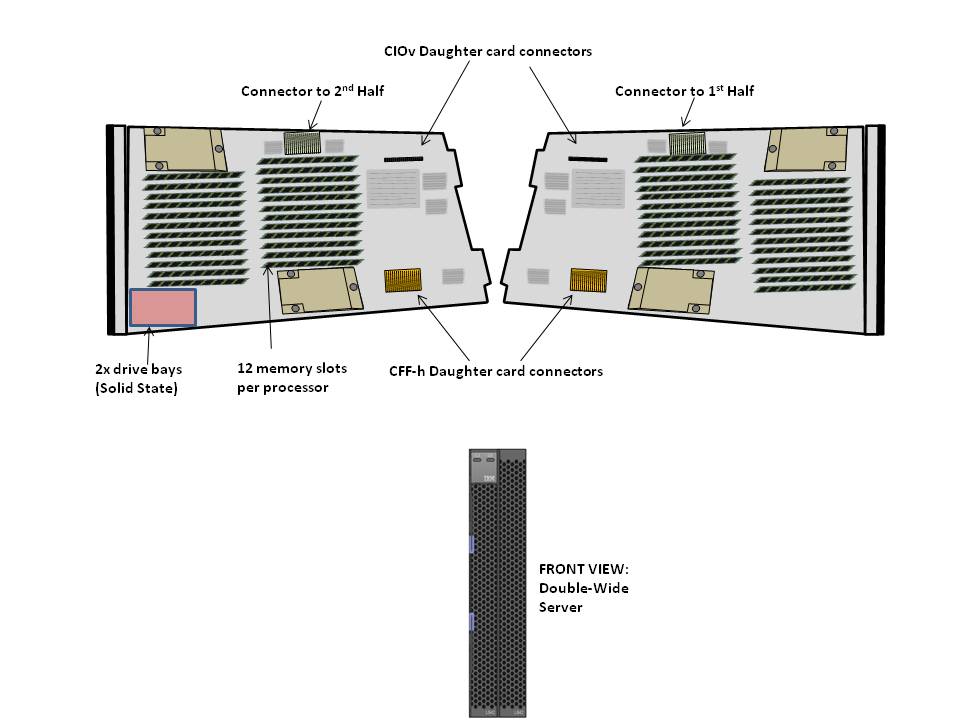

Server Density and Server Offerings: winner in this category is IBM.

IBM’s BladeCenter E and BladeCenter H chassis offer up to 14 blade servers with servers using Intel, AMD and Power PC processors. In comparison, Cisco’s 5108 chassis offers up to 8 server slots and currently offers servers with Intel Xeon processors. As an honorable mention, Cisco does offer a “full width” blade (Cisco UCS B250 server) that provides up to 384GB of RAM in a single blade server across 48 memory slots, which lets you reach higher memory capacities at a lower price point.
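To put numbers on the density and memory figures above, here is a minimal Python sketch of the arithmetic. The function names are my own (not anything from Cisco or IBM), the 28-server example is made up, and the chassis count assumes single-slot blades, so a full width B250 would take more room than this shows.

```python
def dimm_size_gb(total_memory_gb: int, dimm_slots: int) -> float:
    """Average DIMM size needed to reach a total with every slot filled."""
    return total_memory_gb / dimm_slots


def chassis_needed(servers: int, slots_per_chassis: int) -> int:
    """Chassis required to house single-slot blades, rounding up."""
    return -(-servers // slots_per_chassis)


# Cisco UCS B250: 384 GB spread across 48 memory slots
print(dimm_size_gb(384, 48))

# Hypothetical 28-server environment (my example, not from the vendors)
print(chassis_needed(28, 14))  # BladeCenter E or H, 14 blades per chassis
print(chassis_needed(28, 8))   # UCS 5108, 8 slots per chassis
```

The first result is why the B250 can hit a large total with smaller, cheaper DIMMs: 48 slots means each DIMM only has to be 8 GB.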

Management / Scalability: winner in this category is Cisco.

This is where Cisco is changing the blade server game. The traditional blade server infrastructure calls for each blade chassis to have its own dedicated management module to gain access to the chassis’ environmentals and to remote control the blade servers. As you grow your blade chassis environment, you end up managing multiple chassis, each through its own management module. Beyond easing that burden, the management software that runs on the Cisco 6100 series lets users manage server service profiles that consist of things like MAC addresses, NIC firmware, BIOS firmware, WWN addresses and HBA firmware (just to name a few).
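One way to picture a service profile is as a bundle of identity and firmware settings that belongs to a server role rather than to a piece of hardware. The sketch below is purely illustrative: the class names, fields and the `move_profile` helper are hypothetical, the address and version strings are made-up sample values, and none of this is the UCS management software's actual API.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class ServiceProfile:
    """Hypothetical model of the items the post lists in a service profile."""
    name: str
    mac_addresses: Tuple[str, ...]
    wwn_addresses: Tuple[str, ...]
    nic_firmware: str
    hba_firmware: str
    bios_firmware: str


@dataclass
class BladeSlot:
    """A physical slot in a chassis; it holds at most one profile."""
    chassis: int
    slot: int
    profile: Optional[ServiceProfile] = None


def move_profile(source: BladeSlot, target: BladeSlot) -> None:
    """Re-associate a profile with another slot (illustrative only)."""
    if source.profile is None:
        raise ValueError("source slot has no profile to move")
    if target.profile is not None:
        raise ValueError("target slot already has a profile")
    target.profile, source.profile = source.profile, None


# Sample values below are invented for the example.
web01 = ServiceProfile(
    name="web01",
    mac_addresses=("00:00:5E:00:53:01", "00:00:5E:00:53:02"),
    wwn_addresses=("20:00:00:00:5E:00:53:01",),
    nic_firmware="example-1.0",
    hba_firmware="example-1.0",
    bios_firmware="example-1.0",
)
old_slot = BladeSlot(chassis=1, slot=3, profile=web01)
new_slot = BladeSlot(chassis=2, slot=1)
move_profile(old_slot, new_slot)
print(new_slot.profile.name, "now lives in chassis", new_slot.chassis)
```

The point of the design is that the addresses and firmware levels travel with the profile, so the blade itself stays a generic building block.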

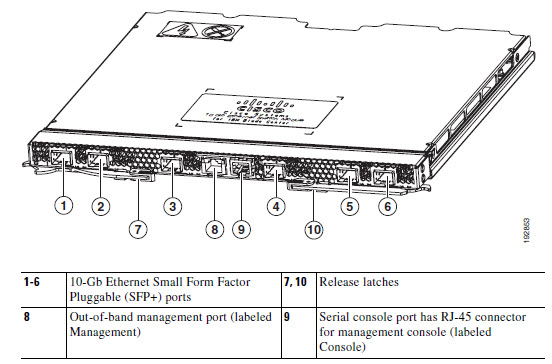

Cisco UCS 6100 Series Fabric Interconnect

With Cisco’s UCS 6100 Series Fabric Interconnects, you are able to manage up to 40 blade chassis with a single pair of redundant UCS 6140XP Fabric Interconnects (40 ports each).

If you are familiar with the Cisco Nexus 5000 product, then understanding the role of the Cisco UCS 6100 Fabric Interconnect should be easy. The UCS 6100 Series Fabric Interconnect does for the Cisco UCS servers what Nexus does for other servers: it unifies the fabric. HOWEVER, it’s important to note the UCS 6100 Series Fabric Interconnect is NOT a Cisco Nexus 5000. The UCS 6100 Series Fabric Interconnect is only compatible with the UCS servers.

Cisco UCS I/O Connectivity Diagram (UCS 5108 Chassis with 2 x 6120 Fabric Interconnects)

If you have other servers with CNAs, then you’ll need to use the Cisco Nexus 5000.

The diagram on the right shows a single connection from the FEX to the UCS 6120XP, however the FEX has 4 uplinks, so if you want (need) more throughput, you can have it. This design provides each half-width Cisco B200 server with 2 CNA ports with redundant pathways. If you are satisfied with using a single FEX connection per chassis, then you can scale up to 20 blade chassis with a Cisco UCS 6120 Fabric Interconnect, or 40 chassis with the Cisco UCS 6140 Fabric Interconnect. As hinted in the previous section, the management software for all connected UCS chassis resides in the redundant Cisco UCS 6100 Series Fabric Interconnects. This design offers a highly scalable infrastructure that lets you scale simply by dropping in a chassis and connecting the FEX to the 6100 switch. (Kind of like Lego blocks.)
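The trade-off between throughput and scale is simple division, so here is a rough Python sketch of it. The function and constant names are mine, and the sketch assumes every port on the fabric interconnect is available for chassis connections (the same simplification the 20 and 40 chassis figures make), so a real design that reserves ports for upstream links would land lower.

```python
# Port counts as quoted in this post
FABRIC_PORTS = {"6120": 20, "6140": 40}
BLADES_PER_CHASSIS = 8   # half-width slots in a UCS 5108
MAX_FEX_UPLINKS = 4      # uplinks available on each FEX


def max_chassis(model: str, uplinks_per_fex: int = 1) -> int:
    """Chassis one fabric interconnect can take at a given uplink count."""
    if not 1 <= uplinks_per_fex <= MAX_FEX_UPLINKS:
        raise ValueError("a FEX has between 1 and 4 uplinks")
    return FABRIC_PORTS[model] // uplinks_per_fex


def max_half_width_blades(model: str, uplinks_per_fex: int = 1) -> int:
    """Half-width blades reachable behind one fabric interconnect."""
    return max_chassis(model, uplinks_per_fex) * BLADES_PER_CHASSIS


for model in ("6120", "6140"):
    for uplinks in (1, 4):
        print(model, uplinks,
              max_chassis(model, uplinks),
              max_half_width_blades(model, uplinks))
```

With one uplink per FEX you get the 20 and 40 chassis quoted above; use all 4 uplinks and the same switches top out at 5 and 10 chassis.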

On the flip side, while this architecture is simple, it’s also limited. There is currently no way to add additional I/O to an individual server. You get 2 x CNA ports per Cisco B200 server or 4 x CNA ports per Cisco B250 server.

As previously mentioned, IBM has a strategy that is VERY similar to the Cisco UCS strategy using the Cisco Nexus 5000 product line. IBM’s solution consists of:

BladeCenter H Diagram with Nexus 5010 (using 10Gb Passthru Modules)

Until IBM and Cisco design a Cisco Nexus switch that integrates into the IBM BladeCenter H chassis, using a 10Gb pass-thru module is the best option to get true Data Center Ethernet (or Converged Enhanced Ethernet) from the server to the Nexus switch. The performance of the IBM solution should equal the Cisco UCS design, since it’s just passing the signal through, however the IBM solution is going to need a lot more cabling. Passing signals through means NO cable consolidation – for every server you’re going to need a connection to the Nexus 5000. For a fully populated IBM BladeCenter H chassis, you’ll need 14 connections to the Cisco Nexus 5000. If you are using the Cisco Nexus 5010 (20 ports), you’ll eat up all but 6 ports. Add a 2nd IBM BladeCenter chassis and you’re buying more Cisco Nexus switches. Not quite the scalable design that the Cisco UCS offers.
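The port math above can be sketched in a few lines of Python. The names are mine, and the sketch counts one pass-thru connection per server and ignores any Nexus ports you would set aside for uplinks, just as the figures in this post do.

```python
import math

SERVERS_PER_BCH = 14     # fully populated BladeCenter H
NEXUS_5010_PORTS = 20    # port count quoted in this post


def nexus_ports_needed(chassis: int) -> int:
    """One pass-thru connection per server, so no cable consolidation."""
    return chassis * SERVERS_PER_BCH


def nexus_switches_needed(chassis: int,
                          ports_per_switch: int = NEXUS_5010_PORTS) -> int:
    """Switches required to terminate every pass-thru connection."""
    return math.ceil(nexus_ports_needed(chassis) / ports_per_switch)


def spare_ports(chassis: int,
                ports_per_switch: int = NEXUS_5010_PORTS) -> int:
    """Ports left over once every server is cabled."""
    total = nexus_switches_needed(chassis, ports_per_switch) * ports_per_switch
    return total - nexus_ports_needed(chassis)


for count in (1, 2, 3):
    print(count, nexus_ports_needed(count),
          nexus_switches_needed(count), spare_ports(count))
```

One chassis fits on a single Nexus 5010 with 6 ports to spare, while the second chassis pushes you to 28 connections and a second switch.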

IBM offers a 10Gb Ethernet Switch Option from BNT (Blade Networks) that will work with converged switches like the Nexus 5000, but at this time that upgrade is not available. Once it does become available, it would reduce the connectivity requirements down to a single cable, but adding a switch between the blade chassis and the Nexus switch could bring additional management complications. That is yet to be seen.

IBM’s BladeCenter H (BCH) does offer something that Cisco doesn’t – additional I/O expansion. Since this solution uses two of the high speed bays in the BCH, bays 1, 2, 3 & 4 remain available. Bays 1 & 2 are mapped to the onboard NICs on each server, and bays 3 & 4 are mapped to the 1st expansion card on each server. This means that 2 additional NICs and 2 additional HBAs (or NICs) could be added alongside the 2 CNAs on each server. Based on this, IBM potentially offers more I/O scalability.
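Tallying the ports per server makes the I/O difference easier to see. This is just a small Python sketch using the counts quoted in this post; the dictionary layout and names are my own.

```python
# Ports available to a single blade, per the figures in this post
IBM_BCH_PORTS = {
    "onboard NICs (bays 1 & 2)": 2,
    "1st expansion card (bays 3 & 4)": 2,
    "CNAs (high speed bays)": 2,
}
CISCO_PORTS = {"B200": 2, "B250": 4}  # CNA ports only


def total_ports(ports_by_source: dict) -> int:
    """Sum the I/O ports a single server can present."""
    return sum(ports_by_source.values())


print("IBM BladeCenter H blade:", total_ports(IBM_BCH_PORTS))
for model, ports in CISCO_PORTS.items():
    print("Cisco", model + ":", ports)
```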

And the Winner Is…

It depends. I love the concept of the Cisco UCS platform. Servers are seen as processors and memory – building blocks that are centrally managed. Easy to scale, easy to size. However, is it for the average datacenter that only needs 5 servers with high I/O? Probably not. I see the Cisco UCS as a great platform for datacenters with more than 14 servers needing high I/O bandwidth (like virtualization servers or database servers). If your datacenter doesn’t need that type of scalability, then perhaps going with IBM’s BladeCenter solution is the choice for you. Going the IBM route gives you the flexibility to choose from multiple processor types and the ability to scale into a unified solution in the future. While ideal for scalability, the IBM solution is currently more complex and potentially more expensive than the Cisco UCS solution.

Let me know what you think. I welcome any comments.