It occurred to me that I created a reference chart showing which blade server options are available in the market ("Blade Server Comparison – 2018"), but I've never listed the options for blade server chassis. In this post, I'll provide overviews of the blade chassis from Cisco, Dell EMC, HPE and Lenovo. One thing I'm not going to do is give pros and cons for each chassis. The reason is obvious if you have read this blog before: in a nutshell, I work for Dell EMC, so I'm not going to promote or bash any vendor. My goal is to simplify each vendor's offerings and give you one place to get an overview of each blade chassis in the market.

Blade Server Networking Options

If you are new to blade servers, you may find there are quite a few options to consider with regard to managing your Ethernet traffic. Some vendors promote traditional integrated switching, while others promote extending the fabric to a Top of Rack (ToR) device. Each method has its own benefits, so let me explain what those are. Before I get started: although I work for Dell, this blog post is designed to be an unbiased review of the network options available from many blade server vendors.

Cisco Launches UCS B200 M3

On March 8, Cisco launched the UCS B200 M3 blade server. The half-height blade uses processors from Intel's newly announced Xeon E5 family, and competes with the Dell M620, HP BL460c Gen8, and IBM HS23 server blades.

Rumour? New 8 Port Cisco Fabric Extender for UCS

I recently heard a rumour that Cisco was coming out with an 8 port Fabric Extender (FEX) for the UCS 5108, so I thought I'd take some time to see what this would look like. NOTE: this is purely speculation; I have no definitive information from Cisco, so this may be false.

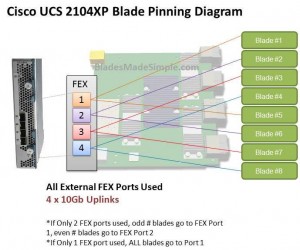

Before we discuss the 8 port FEX, let's take a look at the 4 port UCS 2140XP FEX and how the blade servers connect, or "pin", to it. The diagram below shows a single FEX. A single UCS 2140XP FEX has 4 x 10Gb uplinks to the 6100 Fabric Interconnect Module. The UCS 5108 chassis has 2 FEX per chassis, so each server has a 10Gb connection per FEX. However, as you can see, each server shares that 10Gb connection with another blade server. I'm not an I/O guy, so I can't say whether having 2 servers connected to the same 10Gb uplink port would cause problems, but simple logic tells me that two items competing for the same resource "could" cause contention. If you decide to connect only 2 of the 4 external FEX ports, then all of the odd-numbered blade servers connect to port 1 and all of the even-numbered blades connect to port 2. Now you are looking at 4 servers contending for 1 uplink port. Of course, if you connect only 1 external uplink, then all 8 servers use 1 uplink port.
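To make the sharing concrete, here is a minimal sketch of the pinning described above. It assumes blade slots are assigned to uplink ports round-robin (slot s goes to port ((s - 1) mod n) + 1 for n cabled uplinks), which reproduces the behavior in the text: with 4 uplinks each port serves 2 blades, with 2 uplinks odd slots land on port 1 and even slots on port 2, and with 1 uplink all 8 blades share it. The function name `pin_slots` is hypothetical and not part of any Cisco tool.

```python
def pin_slots(num_uplinks: int, num_slots: int = 8) -> dict[int, list[int]]:
    """Return {uplink port: [blade slots pinned to it]} for one FEX.

    Assumption: slots are pinned round-robin, slot s -> port ((s - 1) % num_uplinks) + 1.
    """
    if num_uplinks not in (1, 2, 4):
        raise ValueError("the 4 port FEX is cabled with 1, 2 or 4 uplinks")
    pins: dict[int, list[int]] = {port: [] for port in range(1, num_uplinks + 1)}
    for slot in range(1, num_slots + 1):
        pins[(slot - 1) % num_uplinks + 1].append(slot)
    return pins


# Show how many blades contend for each 10Gb uplink port as cabling is reduced.
for links in (4, 2, 1):
    print(f"{links} uplink(s): {8 // links} blades per 10Gb port -> {pin_slots(links)}")
```

Since each chassis has 2 FEX, the same mapping applies independently on each fabric.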

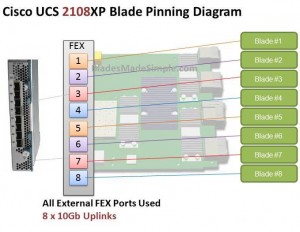

Introducing the 8 Port Fabric Extender (FEX)

I've looked around and can't confirm whether this product is really coming, but I've heard a rumour that there is going to be an 8 port version of the UCS 2100 series Fabric Extender. I'd imagine it would be the UCS 2180XP Fabric Extender, and the diagram below shows what I picture it would look like. The biggest advantage I see in this design is that each server would have a dedicated uplink port to the Fabric Interconnect. That being said, if the existing 20 and 40 port Fabric Interconnects remain, this 8 port FEX design would quickly eat up the available ports on the Fabric Interconnect switches, since the FEX ports connect directly to the Fabric Interconnect ports. So, does this mean there is also a larger 6100 series Fabric Interconnect on the way? I don't know, but it definitely seems possible.
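The port-consumption concern is simple division. The sketch below assumes each chassis's 2 FEX are split across a pair of Fabric Interconnects (one FEX per FI), so each FI sees one FEX per chassis, and that every FEX uplink is cabled. The `reserved_ports` parameter is a hypothetical allowance for FI ports set aside for upstream LAN connections; the real number depends on the design.

```python
def max_chassis(fi_ports: int, fex_uplinks: int, reserved_ports: int = 0) -> int:
    """Chassis a single Fabric Interconnect can serve with fully cabled FEX.

    Assumptions: one FEX per chassis lands on this FI, and reserved_ports
    (hypothetical) are kept back for upstream uplinks.
    """
    usable = fi_ports - reserved_ports
    if usable < 0 or fex_uplinks <= 0:
        raise ValueError("invalid port counts")
    return usable // fex_uplinks


# Compare today's 4 port FEX with the rumoured 8 port FEX on 20 and 40 port FIs.
for fi_ports in (20, 40):
    for uplinks in (4, 8):
        print(f"{fi_ports} port FI, {uplinks} port FEX: {max_chassis(fi_ports, uplinks)} chassis")
```

Under these assumptions, doubling the FEX uplinks halves the chassis count per Fabric Interconnect, which is why a larger Fabric Interconnect would seem a natural companion product.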

What do you think of this rumoured new offering? Does having a 1:1 blade server to uplink port matter or is this just more

Cisco's New Virtualized Adapter (aka "Palo")

Previously known as "Palo", Cisco's virtualized adapter allows a server to split its 10Gb pipes into numerous virtual pipes (see below), such as multiple NICs or multiple Fibre Channel HBAs. Although the card shown in the image to the left is a normal PCIe card, the card will initially launch in the Cisco UCS blade server.

So, What’s the Big Deal?

When you look at server workloads, their needs vary: web servers need a pair of NICs, whereas database servers may need 4+ NICs and 2+ HBAs. Because the 10Gb pipe can be split into virtual devices, you can set up profiles in Cisco's UCS Manager that match a specific server's needs. An example would be a server used for VMware VDI (6 NICs and 2 HBAs) during the day that is repurposed at night as a computational server needing only 4 NICs.
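As a rough illustration of the day/night example, the sketch below models a profile as a count of virtual NICs and HBAs carved from the adapter. `AdapterProfile` and `profile_for_hour` are hypothetical names; this is not UCS Manager's API, and the 08:00 to 19:59 "day" window is an arbitrary assumption.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AdapterProfile:
    """Hypothetical stand-in for a server profile: virtual devices carved from the 10Gb pipe."""
    name: str
    vnics: int
    vhbas: int

    @property
    def virtual_devices(self) -> int:
        # Each virtual NIC and virtual HBA consumes one virtual device on the adapter.
        return self.vnics + self.vhbas


VDI_DAY = AdapterProfile("vmware-vdi", vnics=6, vhbas=2)
COMPUTE_NIGHT = AdapterProfile("compute", vnics=4, vhbas=0)


def profile_for_hour(hour: int) -> AdapterProfile:
    """Pick the profile for an hour of the day (assumed day window: 08:00-19:59)."""
    if not 0 <= hour <= 23:
        raise ValueError("hour must be 0-23")
    return VDI_DAY if 8 <= hour < 20 else COMPUTE_NIGHT


for hour in (10, 2):
    p = profile_for_hour(hour)
    print(f"{hour:02d}:00 -> {p.name}: {p.vnics} NICs, {p.vhbas} HBAs")
```

The point of the design is that the hardware stays the same; only the profile applied to it changes.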

Note that although the image shows 128 virtual devices, that is only the theoretical limit. In reality, the number of virtual devices depends on the number of connections to the Fabric Interconnects. As I posted previously, the server chassis has a pair of 4 port Fabric Extenders (aka FEX) that uplink to the UCS 6100 Fabric Interconnect. If only 1 of the 4 ports is uplinked to the UCS 6100, then only 13 virtual devices will be available. If 2 FEX ports are uplinked, then 28 virtual devices will be available. If all 4 FEX uplink ports are used, then 58 virtual devices will be available.
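The three quoted figures happen to follow a simple pattern: 15 virtual devices per uplink, minus 2 (15 x 1 - 2 = 13, 15 x 2 - 2 = 28, 15 x 4 - 2 = 58). The sketch below checks that fit. It is an arithmetic observation on these three data points only, not a documented Cisco formula, so it deliberately refuses uplink counts the post doesn't cover.

```python
# Virtual-device counts quoted above, keyed by FEX uplinks cabled to the UCS 6100.
QUOTED_VIRTUAL_DEVICES = {1: 13, 2: 28, 4: 58}
THEORETICAL_LIMIT = 128


def virtual_devices(uplinks: int) -> int:
    """Observed fit to the quoted figures (15 per uplink, minus 2); not an official formula."""
    if uplinks not in QUOTED_VIRTUAL_DEVICES:
        raise ValueError("only 1, 2 or 4 uplinks are covered by the quoted figures")
    return 15 * uplinks - 2


# Confirm the fit reproduces every quoted value and show the gap to the theoretical limit.
for n, quoted in QUOTED_VIRTUAL_DEVICES.items():
    assert virtual_devices(n) == quoted
    print(f"{n} uplink(s): {quoted} virtual devices ({THEORETICAL_LIMIT - quoted} below the limit)")
```

Even fully cabled, the adapter would expose well under half of its theoretical 128 devices.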

Will the ability to carve up your 10Gb pipes into smaller ones make a difference? It’s hard to tell. I guess we’ll see when this card starts to ship in December of 2009.