(UPDATED 10/28/09 with new links to full article)

I received a Tweet from @HPITOps linking to Gartner’s first-ever “Magic Quadrant” for blade servers. The Magic Quadrant is a tool that Gartner put together to help people easily see where manufacturers rank, based on certain criteria. As the success of blade servers continues to grow, the demand for blades increases. You can read the complete Gartner paper at http://h20195.www2.hp.com/v2/getdocument.aspx?docname=4AA3-0100ENW.pdf, but I wanted to touch on a few highlights.

Key Points

- Blades are less than 15% of the server marketplace today.

- HP and IBM make up 70% of the blade market share.

- HP, IBM and Dell are classified as “Leaders” in the blade marketplace, and Cisco is listed as a “Visionary.”

What Gartner Says About Cisco, Dell, HP and IBM

Cisco

Cisco announced their entry into the blade server marketplace in early 2009 and began shipping their first product within the past few weeks. Gartner’s report says, “Cisco’s Unified Computing System (UCS) is highly innovative and is particularly targeted at highly integrated and virtualized enterprise requirements.” Gartner currently places Cisco in the “visionaries” quadrant. The report comments that Cisco’s strengths are:

- they have a global presence in “most data centers”

- differentiated blade design

- they have a cross-selling opportunity across their huge install base

- they have strong relationships with virtualization and integration vendors

As part of the report, Gartner also mentions some negative points (aka “Cautions”) about Cisco to consider:

- Lack of blade server install base

- limited blade portfolio

- limited hardware certification by operating system and application software vendors

Obviously these Cautions are based on Cisco’s newness to the marketplace, so let’s wait 6 months and check back on what Gartner thinks.

Dell

No stranger to the blade marketplace, Dell continues to produce new servers and new designs. While Dell has a fantastic marketing department, they are still nowhere close to the market share that IBM and HP split. In spite of this, Gartner still classifies Dell in the “leaders” quadrant. According to the report, “Dell offers Intel and AMD Opteron blade servers that are well-engineered, enterprise-class platforms that fit well alongside the rest of Dell’s x86 server portfolio, which has seen the company grow its market share steadily through the past 18 months.”

The report lists Dell’s strengths as:

- having a cross-selling opportunity to sell blades to their existing server, desktop and notebook customers

- aggressive pricing policies

- focused innovation in areas like cooling and virtual I/O

Dell’s “cautions” are reported as:

- having a limited portfolio that is targeted toward enterprise needs

- a history of “patchy commitment” to their blade platforms

It will be interesting to see where Dell takes their blade model. It’s easy to have a low-price model on entry-level rack servers, but in a blade server infrastructure, where standardization is key and integrated switches are a necessity, keeping the lowest pricing may get tough.

IBM

IBM has been in the blade server marketplace since 2002, with a wide variety of server and chassis offerings. Gartner placed IBM in the “leaders” quadrant as well, although they place IBM much higher and farther to the right than Dell, signifying a “greater ability to execute” and a “more complete vision.” While IBM once had the lead in blade server market share, they’ve since handed that over to HP. Gartner reports, “IBM is putting new initiatives in place to regain market share, including supply chain enhancements, dedicated sales resources and new channel programs.”

The report lists IBM’s strengths as:

- strong global market share

- cross-selling opportunities into existing IBM System x, System i, System p and System z customers

- broad set of chassis options that address specialized needs (like DC power & NEBS compliance for Telco) as well as Departmental / Enterprise

- blade server offerings for x86 and Power Processors

- strong record of management tools

- innovation around cooling and specialized workloads

Gartner only lists one “caution” for IBM and that is their loss of market share to HP since 2007.

HP

Gartner places HP farthest to the right in the October 2009 Magic Quadrant, so I’ll classify HP as the #1 “leader.” Gartner’s report says, “Since the 2006 introduction of its latest blade generation, HP has recaptured market leadership and now sells more blade servers than the rest of the market combined.” Ironically, Gartner’s list of HP’s strengths is nearly identical to IBM’s:

- global blade market leader

- cross-selling opportunities into existing HP server, laptop and desktop customers

- broad set of chassis options that address Departmental and Enterprise needs

- blade server offerings for x86 and Itanium Processors

- strong record of management tools

- innovation around cooling and virtual I/O

Gartner lists only one “caution” for HP: their portfolio, as extensive as it may be, could be considered too complex, and it could be too close to HP’s alternative, modular, rack-based offering.

Gartner’s report goes on to discuss niche players like Fujitsu, NEC and Hitachi, so if you are interested in reading about them, check out the full report at http://h20195.www2.hp.com/v2/getdocument.aspx?docname=4AA3-0100ENW.pdf.

All in all, Gartner’s report reaffirms that HP, IBM and Dell are the market leaders, for now, with Cisco coming up behind them.

Feel free to comment on this post and let me know what you think.


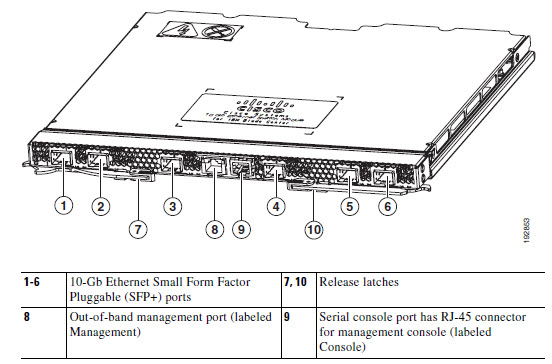

) like multiple NICs or multiple Fibre Channel HBAs. Although the card shown in the image to the left is a normal PCIe card, the initial launch of the card will be in the Cisco UCS blade server.
