Intel is scheduled to “officially” announce the details of its Nehalem EX CPU platform today. Although the details have been out for quite a while, I wanted to highlight some key points.

Intel Xeon 7500 Chipset

This chipset will be the flagship replacement for the existing Xeon 7400 architecture. Enhancements include:

• Nehalem microarchitecture

• 8 cores per CPU

• 24MB shared L3 cache

• 4 memory buffers per CPU

• 16 DIMM slots per CPU, for a total of 64 DIMM slots supporting up to 1 terabyte of memory (across 4 CPUs)

• 72 PCIe Gen2 lanes

• Scaling from 2 to 256 sockets

• Intel Virtualization Technology

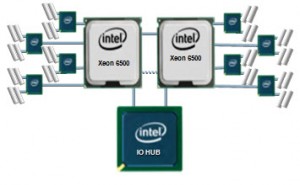

Intel Xeon 6500 Chipset

Perhaps the coolest addition to Intel's Nehalem EX announcement is the ability for certain vendors to cut the architecture in half and deliver the same quality of horsepower across 2 CPUs. The Xeon 6500 chipset will offer 2 CPUs, each with the same qualities as its bigger brother, the Xeon 7500 chipset. See below for details on both offerings.
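To put the idea of cutting the architecture in half into numbers, here is a small Python sketch that scales the per-CPU figures from the Xeon 7500 feature list (8 cores, 24MB L3 cache) by socket count. It assumes, as described above, that each Xeon 6500 CPU carries the same per-CPU resources as a Xeon 7500 CPU, and that Hyper-Threading provides two threads per core. The `CpuSpec` class and `platform_totals` function are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuSpec:
    """Per-CPU figures from the Xeon 7500 feature list (hypothetical helper)."""
    cores: int = 8
    l3_cache_mb: int = 24
    threads_per_core: int = 2  # assumption: Hyper-Threading enabled, 2 threads per core


def platform_totals(cpu: CpuSpec, sockets: int) -> dict:
    """Scale per-CPU resources by the number of populated sockets."""
    if sockets < 1:
        raise ValueError("sockets must be >= 1")
    cores = cpu.cores * sockets
    return {
        "sockets": sockets,
        "cores": cores,
        "threads": cores * cpu.threads_per_core,
        # Each CPU has its own 24MB L3; this is a sum across sockets, not one shared pool.
        "l3_cache_mb": cpu.l3_cache_mb * sockets,
    }


nehalem_ex = CpuSpec()
for name, sockets in (("Xeon 7500 (4 CPUs)", 4), ("Xeon 6500 (2 CPUs)", 2)):
    print(name, platform_totals(nehalem_ex, sockets))
```

With these assumptions, a 4-CPU Xeon 7500 server works out to 32 cores and 64 threads, while the 2-CPU Xeon 6500 configuration is exactly half of that.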

Additional Features

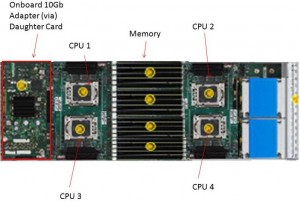

Since the Xeon 6500/7500 chipsets are based on the familiar Nehalem microarchitecture, certain well-known features are available. Both Turbo Boost and Hyper-Threading have been added to the platform and will give users better performance in their high-end servers (shown left to right below).

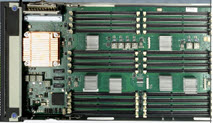

Memory

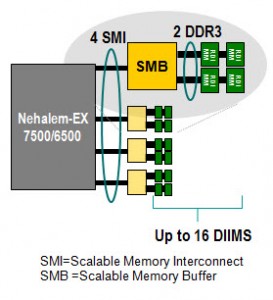

Probably the biggest win among the features Intel is bringing with the Nehalem EX announcement is support for more memory and bigger memory pipes. Each CPU will have four high-speed Scalable Memory Interconnects (SMIs) that serve as the highways between the memory and the CPU. As with the existing Nehalem architecture, each CPU has a dedicated memory controller that provides access to the memory. In the Nehalem EX design, each of the 4 pathways has a Scalable Memory Buffer (SMB) that provides access to 4 memory DIMMs. In total, each CPU will have access to 16 DIMMs across 4 pathways, so by simple math, a server with 4 CPUs can hold up to 64 memory DIMMs. Some other key facts:

• It will support DDR3 DIMMs of up to 16GB

• It will support up to 1TB of memory with 16GB DIMMs

• It will support registered DDR3 DIMMs at speeds up to 1066MHz, in single-rank, dual-rank and quad-rank flavors
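The 64-DIMM count and the 1TB ceiling follow directly from the memory topology described above. The Python sketch below multiplies it out: SMI links per CPU, DIMMs behind each Scalable Memory Buffer, CPUs per server, and DIMM size. The function name `max_memory` and its parameters are hypothetical; the defaults are the figures quoted in this post.

```python
def max_memory(smi_links_per_cpu: int = 4,
               dimms_per_smb: int = 4,
               cpus: int = 4,
               dimm_size_gb: int = 16) -> tuple[int, int]:
    """Return (total DIMM slots, maximum memory in GB) for a Nehalem EX server.

    Each SMI link leads to one Scalable Memory Buffer (SMB), and each SMB
    serves `dimms_per_smb` DIMMs, so slots scale as links x DIMMs x CPUs.
    """
    dimms_per_cpu = smi_links_per_cpu * dimms_per_smb  # 4 x 4 = 16 DIMMs per CPU
    total_dimms = dimms_per_cpu * cpus                 # 16 x 4 = 64 DIMMs per server
    return total_dimms, total_dimms * dimm_size_gb     # 64 x 16GB = 1024GB


slots, gb = max_memory()
print(f"{slots} DIMM slots, {gb} GB ({gb / 1024:g} TB)")
```

Running it prints `64 DIMM slots, 1024 GB (1 TB)`, matching the 1TB figure above. Changing `cpus` or `dimm_size_gb` shows how the ceiling moves with smaller configurations or smaller DIMMs.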

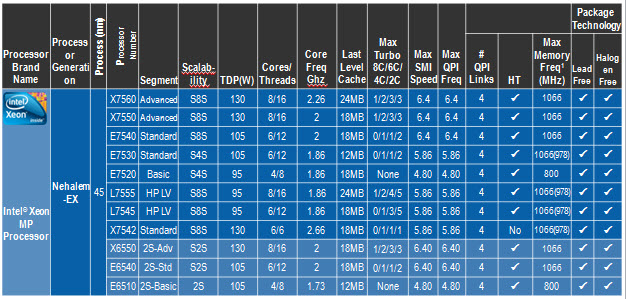

Another important note is that the actual system memory speed will depend on the specific processor's capabilities (see the reference table below for the maximum SMI link speed of each CPU):

• 6.4GT/s SMI link speed: capable of running memory speeds up to 1066MHz

• 5.86GT/s SMI link speed: capable of running memory speeds up to 978MHz

• 4.8GT/s SMI link speed: capable of running memory speeds up to 800MHz
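The three link-speed tiers above can be captured as a simple lookup table. As a hedged illustration, the sketch below returns the highest memory speed a processor's SMI link supports and, assuming the system runs memory at the lower of the CPU's ceiling and the DIMM's rated speed, the resulting effective speed. The function names are hypothetical, and the table holds only the three link speeds listed here.

```python
# Max memory speed (MHz) supported at each SMI link speed (GT/s), per the list above.
# Keys are matched exactly, so pass the same literal values (6.4, 5.86, 4.8).
SMI_TO_MAX_MEMORY_MHZ = {
    6.4: 1066,
    5.86: 978,
    4.8: 800,
}


def max_memory_speed(smi_link_gts: float) -> int:
    """Look up the highest memory speed a given SMI link speed can drive."""
    try:
        return SMI_TO_MAX_MEMORY_MHZ[smi_link_gts]
    except KeyError:
        raise ValueError(f"unknown SMI link speed: {smi_link_gts} GT/s") from None


def effective_memory_speed(smi_link_gts: float, dimm_rated_mhz: int) -> int:
    """Assumption: memory runs at the lower of the CPU's ceiling and the DIMM's rating."""
    return min(max_memory_speed(smi_link_gts), dimm_rated_mhz)


# A 1066MHz DIMM in a system whose CPU tops out at a 4.8GT/s SMI link runs at 800MHz.
print(effective_memory_speed(4.8, 1066))
```

The practical takeaway is the one in the text: buying faster DIMMs does not help if the chosen processor's SMI link speed caps memory at a lower speed.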

Here’s a great chart from Intel comparing the features of the individual CPU offerings:

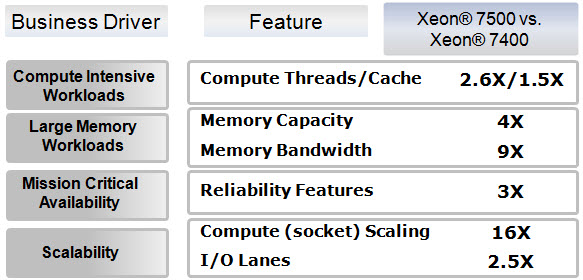

Finally, take a look at some comparisons between the Nehalem EX (Xeon 7500) and the previous generation, Xeon 7400:

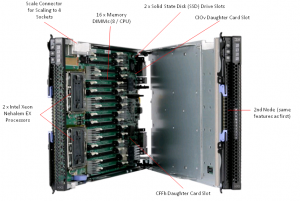

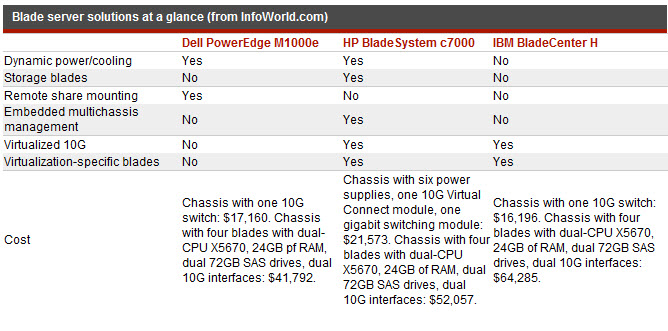

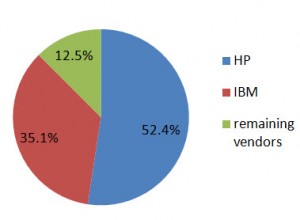

That’s it for now. Check back later for more specific details on Dell, HP, IBM and Cisco’s new Nehalem EX blade servers.

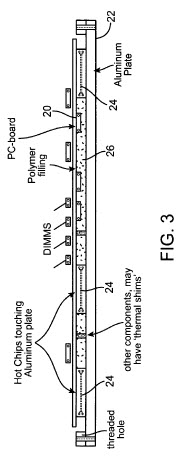

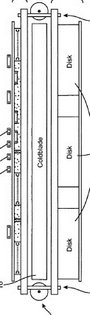

United States Patent #7552758, titled “Method for high-density packaging and cooling of high-powered compute and storage server blades” (published 6/29/2009) may be IBM’s clever way of disguising a method to liquid cool blade servers.

According to the patent, the invention is “ It appears to be a “double-wide” enclosure that will allow for 8 disk drives to be inserted.

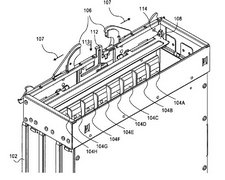

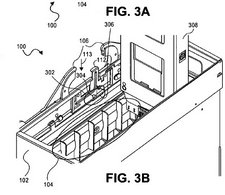

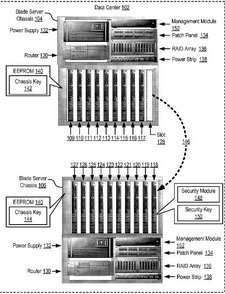

The final invention that I uncovered is very mysterious to me. Titled “Securing Blade Servers in a Data Center,” patent application #20100024001 shows a new concept from IBM that places a blade server chassis, a router, a patch panel, a RAID array, a power strip and blade servers all inside a single enclosure, or “Data Center.” An important note is that this device is not yet an approved patent; it is still a patent application. Filed on 7/25/2008 and published as a patent application on 1/28/2010, this patent application lists an abstract description of, “

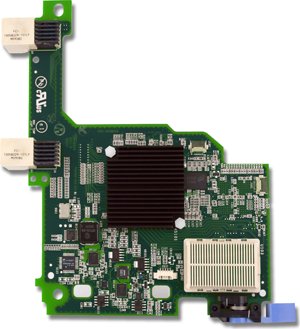

A few weeks ago, IBM and Emulex announced a new blade server adapter for the IBM BladeCenter and IBM System x line, called the “Emulex Virtual Fabric Adapter for IBM BladeCenter” (IBM part #49Y4235). Frequent readers may recall that I had a “so what” attitude when I
