Wow. HP continues its “blade everything” campaign from 2007 with a new offering today – HP Integrity Superdome 2 on blade servers. Touting a message of “Mission-Critical Converged Infrastructure,” HP is combining the mission-critical Superdome 2 architecture with its successful, scalable blade architecture.

Behind the Scenes at the InfoWorld Blade Shoot-Out

Daniel Bowers, at HP, recently posted some behind-the-scenes info on what HP used for the InfoWorld Blade Shoot-Out that I posted on a few weeks ago, in which HP’s blade design took 1st place. According to Daniel, HP’s final config consisted of:

- a c7000 enclosure

- 4 ProLiant BL460c G6 server blades (loaded with VMware ESX) built with 6-core Xeon processors and 8GB LVDIMMs

- 2 additional BL460c G6’s with StorageWorks SB40c storage blades for shared storage

- a Virtual Connect Flex-10 module

- a 4Gb fibre switch

Jump over to Daniel’s HP blog to read the full article, including how HP almost didn’t compete in the Shoot-Out and how they controlled another c7000 enclosure 3,000 miles away (Hawaii to Houston).

Daniel’s HP blog is located at http://www.communities.hp.com/online/blogs/eyeonblades/archive/2010/04/13/behind-the-scenes-at-the-infoworld-blade-shoot-out.aspx

HP Blades Helping Make Happy Feet 2 and Mad Max 4

Chalk yet another win up for HP.

It was reported last week on www.itnews.com.au that digital production house Dr. D. Studios is in the early stages of building a supercomputer grid cluster for rendering the animated feature film Happy Feet 2 and the visual effects in Fury Road, the long-anticipated fourth film in the Mad Max series. The supercomputer grid is based on HP BL490c G6 blade servers housed within an APC HACS pod; it is already running in excess of 1,000 cores and is expected to reach over 6,000 cores during peak rendering by mid-2011.

This cluster boasted 4096 cores, taking it into the top 100 on the list of Top 500 supercomputers in the world in 2007 (it now sits at 447).

According to Doctor D infrastructure engineering manager James Bourne, “High density compute clusters provide an interesting engineering exercise for all parties involved. Over the last few years the drive to virtualise is causing data centres to move down a medium density path.”

Check out the full article, including video at:

http://www.itnews.com.au/News/169048,video-building-a-supercomputer-for-happy-feet-2-mad-max-4.aspx

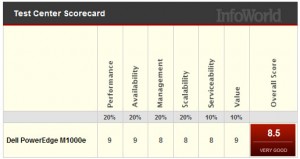

Blade Server Shoot-Out (Dell/HP/IBM) – InfoWorld.com

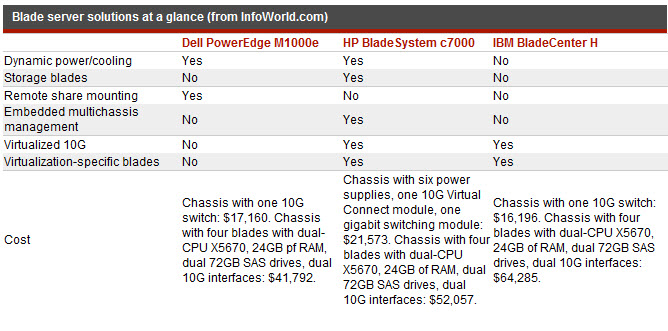

InfoWorld.com posted on 3/22/2010 the results of a blade server shoot-out between Dell, HP, IBM and Super Micro. I’ll save you some time and help summarize the results of Dell, HP and IBM.

The Contenders

Dell, HP and IBM each provided blade servers with the Intel Xeon X5670 2.93GHz CPUs and at least 24GB of RAM in each blade.

The Tests

InfoWorld designed a custom suite of VMware tests as well as several real-world performance tests. The VMware tests were composed of:

- a single large-scale custom LAMP application

- a load-balancer running Nginx

- four Apache Web servers

- two MySQL servers

InfoWorld designed the VMware workloads to mimic a real-world Web app usage model that included a weighted mix of static and dynamic content and randomized database updates, inserts, and deletes, with the load generated at specific concurrency levels, starting at 50 concurrent connections and ramping up to 200. InfoWorld ran the VMware tests first on one blade server, then across two blades. Each blade under test ran VMware ESX 4 and was controlled by a dedicated vCenter instance.
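To make the "weighted mix at stepped concurrency" idea concrete, here is a minimal sketch of how such a load schedule could be generated. The request categories come from the description above, but the weights and the step size of 50 between 50 and 200 connections are hypothetical placeholders; InfoWorld didn't publish its actual ratios or steps, and this is not their harness.

```python
import random

# Hypothetical weights: the article only says the mix was "weighted",
# so these numbers are placeholders, not InfoWorld's real ratios.
REQUEST_MIX = {
    "static": 0.50,
    "dynamic": 0.30,
    "db_update": 0.10,
    "db_insert": 0.05,
    "db_delete": 0.05,
}


def concurrency_steps(start=50, stop=200, step=50):
    """Concurrency levels to test, inclusive of both ends (step size assumed)."""
    return list(range(start, stop + 1, step))


def sample_requests(n, rng):
    """Draw n request types according to the weighted mix."""
    kinds = list(REQUEST_MIX)
    weights = [REQUEST_MIX[k] for k in kinds]
    return rng.choices(kinds, weights=weights, k=n)


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so repeated runs draw the same mix
    for level in concurrency_steps():
        batch = sample_requests(level, rng)
        counts = {k: batch.count(k) for k in REQUEST_MIX}
        print(level, counts)
```

Each concurrency level gets its own batch of requests drawn from the same weighted distribution, so the ratio of static to database work stays roughly constant while the load ramps up.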

The other real-world tests included several common single-threaded tasks run simultaneously at levels that met and exceeded the logical CPU count on each blade, going all the way up to an 8x oversubscription of physical cores. These tests included:

- LAME MP3 conversions of 155MB WAV files

- MP4-to-FLV video conversions of 155MB video files

- gzip and bzip2 compression tests

- MD5 sum tests
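For the blades in this shoot-out (two six-core X5670s each), the arithmetic behind "met and eclipsed the logical CPU count ... up to 8x oversubscription of physical cores" works out as below. This is a minimal sketch, assuming Hyper-Threading was enabled; the step between levels is a hypothetical choice, and the MD5/gzip/bzip2 functions are stand-ins for InfoWorld's jobs, not their actual scripts.

```python
import bz2
import gzip
import hashlib
from concurrent.futures import ProcessPoolExecutor

# Blade configuration from the shoot-out: two six-core Xeon X5670 CPUs.
SOCKETS = 2
CORES_PER_SOCKET = 6
THREADS_PER_CORE = 2  # assumes Hyper-Threading was enabled

PHYSICAL_CORES = SOCKETS * CORES_PER_SOCKET       # 12
LOGICAL_CPUS = PHYSICAL_CORES * THREADS_PER_CORE  # 24
MAX_TASKS = 8 * PHYSICAL_CORES                    # 8x oversubscription = 96


def task_levels(step=PHYSICAL_CORES):
    """Concurrent-task counts from the logical CPU count up to 8x physical cores.

    The step size is a hypothetical choice; the article gives only the endpoints.
    """
    return list(range(LOGICAL_CPUS, MAX_TASKS + 1, step))


# Single-threaded jobs standing in for the MD5, gzip and bzip2 tests.
def md5_task(data: bytes) -> str:
    return hashlib.md5(data).hexdigest()


def gzip_task(data: bytes) -> int:
    return len(gzip.compress(data))


def bzip2_task(data: bytes) -> int:
    return len(bz2.compress(data))


def run_level(n_tasks, func, data):
    """Submit n_tasks copies of a job to a pool of n_tasks worker processes."""
    with ProcessPoolExecutor(max_workers=n_tasks) as pool:
        return list(pool.map(func, [data] * n_tasks))
```

So the levels run from 24 simultaneous tasks (one per logical CPU) to 96 (eight per physical core). To actually launch a level, call something like `run_level(24, gzip_task, data)` from under an `if __name__ == "__main__":` guard so worker processes can be spawned safely; on Windows, `ProcessPoolExecutor` only allows up to 61 workers, so the top levels would need to be split.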

The Results

Dell

Dell did very well, coming in at 2nd in overall scoring. The blades used in this test were Dell PowerEdge M610 units, each with two 2.93GHz Intel Westmere X5670 CPUs, 24GB of DDR3 RAM, and two Intel 10G interfaces to two Dell PowerConnect 8024 10G switches in the I/O slots on the back of the chassis.

Some key points made in the article about Dell:

- Dell does not offer a lot of “blade options.” There are several models available, but they are the same type of blades with different CPUs. Dell does not currently offer any storage blades or virtualization-centric blades.

- Dell’s 10Gb design does not offer any virtualized network I/O. The 10G pipe to each blade is just that, a raw 10G interface. No virtual NICs.

- The new CMC (Chassis Management Controller) is a highly functional and attractive management tool that offers new capabilities, such as pushing BIOS updates and RAID controller firmware updates to multiple blades at once.

- Dell has implemented more efficient dynamic power and cooling features in the M1000e chassis, such as the ability to shut down power supplies when their power isn’t needed, or to ramp the fans up and down depending on the load and where that load is located.

According to the article, “Dell offers lots of punch in the M1000e and has really brushed up the embedded management tools. As the lowest-priced solution…the M1000e has the best price/performance ratio and is a great value.”

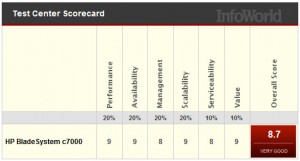

HP

Coming in at 1st place, HP continues to shine in blade leadership. HP’s test equipment consisted of a c7000 with nine BL460c blades, each running two 2.93GHz Intel Xeon X5670 (Westmere-EP) CPUs and 96GB of RAM, as well as embedded 10G NICs and a dual 1G mezzanine card. As an important note, HP was the only server vendor with 10G NICs on the motherboard. Some key points made in the article about HP:

- With the 10G NICs standard on the newest blade server models, InfoWorld says “it’s clear that HP sees 10G as the rule now, not the exception.”

- HP’s embedded Onboard Administrator offers detailed information on all chassis components from end to end. For example, HP’s management console can provide exact temperatures of every chassis or blade component.

- HP’s console cannot push global BIOS and firmware updates (unlike Dell’s CMC), nor can it power up or down more than one blade at a time.

- HP offers “multichassis management” – the ability to daisy-chain several chassis together and log into any of them from the same screen as well as manage them. This appears to be a unique feature to HP.

- The HP c7000 chassis also has power-control features, such as dynamic power saving options that automatically turn off power supplies when system energy requirements are low, or that increase fan airflow only to the blades that need it.

InfoWorld’s final thoughts on HP: “the HP c7000 isn’t perfect, but it is a strong mix of reasonable price and high performance, and it easily has the most options among the blade system we reviewed.”

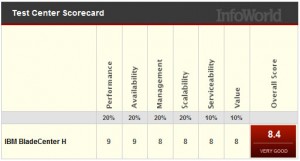

IBM

Finally, IBM came in at 3rd place, missing a tie with Dell by a small fraction. Surprisingly, I was unable to find the details of IBM’s test configuration. I’m not sure if I’m just missing it or if InfoWorld left out the information, but I know IBM’s blade servers had the same Intel Xeon X5670 CPUs that Dell and HP used. Some of the points that InfoWorld mentioned about IBM’s BladeCenter H offering:

- IBM’s pricing is higher.

- IBM’s chassis only holds 14 servers whereas HP can hold 32 servers (using BL2x220c servers) and Dell holds 16 servers.

- IBM’s chassis doesn’t offer a heads-up display (as HP and Dell do).

- IBM had the only redundant internal power and I/O connectors on each blade. It is important to note that the lack of redundant power and I/O connectors is why HP and Dell’s densities are higher. If you want redundant connections on each blade with HP and Dell, you’ll need to use their “full-height” servers, which decrease HP and Dell’s overall capacity to 8 servers per chassis.

- IBM’s Management Module is lacking graphical features – there’s no graphical representation of the chassis or any images. From personal experience, IBM’s management module looks like it’s stuck in the ’90s – very text based.

- The IBM BladeCenter H lacks dynamic power and cooling capabilities. Instead of using smaller, independent regional fans for cooling, IBM uses two blowers. Because of this, IBM lacks the ability to reduce cooling in specific areas that Dell and HP offer.

InfoWorld summarizes the IBM results by saying, “if you don’t mind losing two blade slots per chassis but need some extra redundancy, then the IBM BladeCenter H might be just the ticket.”

Overall, each vendor has its own pros and cons, and InfoWorld does a great job summarizing the benefits of each offering. Please make sure to visit the InfoWorld article and read all of the details of their blade server shoot-out.

Cisco, IBM and HP Update Blade Portfolio with Westmere Processor

Intel officially announced the Xeon 5600 processor, code-named “Westmere,” today. Cisco, HP and IBM also announced blade servers built on the new processor. The Intel Xeon 5600 offers:

- 32nm process technology with 50% more threads and cache

- Improved energy efficiency with support for 1.35V low power memory

There will be 4-core and 6-core offerings. This processor also provides the option of Hyper-Threading, so you could have up to 8 or 12 threads per processor, or 16 and 24 in a dual-CPU system. This will be a huge advantage for multithreaded applications, like virtualization. Here’s a look at what each vendor has come out with:
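The thread counts above are simple multiplication: cores per CPU, times sockets, times two when Hyper-Threading is on. A minimal sketch of that arithmetic (the function name is just illustrative):

```python
def hardware_threads(cores_per_cpu: int, sockets: int = 1, hyperthreading: bool = True) -> int:
    """Hardware threads visible to the OS: cores x sockets x (2 with Hyper-Threading)."""
    return cores_per_cpu * sockets * (2 if hyperthreading else 1)


if __name__ == "__main__":
    for cores in (4, 6):
        print(f"{cores}-core Xeon 5600: "
              f"{hardware_threads(cores)} threads per CPU, "
              f"{hardware_threads(cores, sockets=2)} in a dual-CPU system")
```

Running it prints 8 and 16 threads for the 4-core parts and 12 and 24 for the 6-core parts. Going from a 4-core to a 6-core part is also where the "50% more threads" figure comes from (12 / 8 = 1.5).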

Cisco

The B200 M2 provides Cisco users with the current Xeon 5600 processors. It looks like Cisco will be offering a choice of the following processors: Intel Xeon X5670, X5650, E5640, E5620, L5640, or E5506 (the E5506 is actually a Xeon 5500-series part). Because Cisco’s model is a “built-to-order” design, I can’t really provide any part numbers, but knowing which processors are available should help.

HP

HP is starting off with the Intel Xeon 5600 by bumping their existing G6 models to include the Xeon 5600 processor. The look, feel, and options of the blade servers will remain the same; the only difference will be the new processor. According to HP, “the HP ProLiant G6 platform, based on Intel Xeon 5600 processors, includes the HP ProLiant BL280c, BL2x220c, BL460c and BL490c server blades and HP ProLiant WS460c G6 workstation blade for organizations requiring high density and performance in a compact form factor. The latest HP ProLiant G6 platforms will be available worldwide on March 29.” It appears that HP is waiting until March 29 to provide details on their Westmere blade offerings, so don’t go looking for part numbers or pricing on their website.

IBM

IBM is continuing to stay ahead of the game with details about their product offerings. They’ve refreshed their HS22 and HS22v blade servers:

HS22

7870ECU – Express HS22, 2x Xeon 4C X5560 95W 2.80GHz/1333MHz/8MB L2, 4x2GB, O/Bay 2.5in SAS, SR MR10ie

7870G4U – HS22, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870GCU – HS22, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 2.5in SAS, Broadcom 10Gb Gen2 2-port

7870H2U – HS22, Xeon 6C X5650 95W 2.66GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870H4U – HS22, Xeon 6C X5670 95W 2.93GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870H5U – HS22, Xeon 4C X5667 95W 3.06GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870HAU – HS22, Xeon 6C X5650 95W 2.66GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS, Emulex Virtual Fabric Adapter

7870N2U – HS22, Xeon 6C L5640 60W 2.26GHz/1333MHz/12MB, 3x2GB, O/Bay 2.5in SAS

7870EGU – Express HS22, 2x Xeon 4C E5630 80W 2.53GHz/1066MHz/12MB, 6x2GB, O/Bay 2.5in SAS

HS22V

7871G2U – HS22V, Xeon 4C E5620 80W 2.40GHz/1066MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871G4U – HS22V, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871GDU – HS22V, Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871H4U – HS22V, Xeon 6C X5670 95W 2.93GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871H5U – HS22V, Xeon 4C X5667 95W 3.06GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871HAU – HS22V, Xeon 6C X5650 95W 2.66GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871N2U – HS22V, Xeon 6C L5640 60W 2.26GHz/1333MHz/12MB, 3x2GB, O/Bay 1.8in SAS

7871EGU – Express HS22V, 2x Xeon 4C E5640 80W 2.66GHz/1066MHz/12MB, 6x2GB, O/Bay 1.8in SAS

7871EHU – Express HS22V, 2x Xeon 6C X5660 95W 2.80GHz/1333MHz/12MB, 6x4GB, O/Bay 1.8in SAS
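IBM's part descriptions pack a lot into one line. Here's a minimal sketch that decodes them, assuming my reading of the notation: "6C" is cores per CPU, "95W" is the CPU's TDP, "2.93GHz/1333MHz/12MB" is core clock / maximum memory speed / cache, a "2x" prefix means two CPUs are installed (otherwise one), and "3x2GB" means three 2GB DIMMs. The class and function names are hypothetical, and the "O/Bay" drive-bay field is left alone.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Assumed reading of IBM's description format, e.g.
# "2x Xeon 6C X5660 95W 2.80GHz/1333MHz/12MB":
# CPU count, cores per CPU, model, TDP, core clock / max memory speed / cache.
CPU_RE = re.compile(
    r"(?:(?P<cpus>\d+)x )?Xeon (?P<cores>\d+)C (?P<model>[A-Z]\d{4}) "
    r"(?P<tdp>\d+)W (?P<ghz>[\d.]+)GHz/(?P<mem_mhz>\d+)MHz/(?P<cache_mb>\d+)MB"
)
# "3x2GB" is read as three 2GB DIMMs.
MEM_RE = re.compile(r"(?P<count>\d+)x(?P<size_gb>\d+)GB")


@dataclass
class BladeSku:
    part_number: str
    model: str
    cpus: int
    cores_per_cpu: int
    cpu_model: str
    tdp_watts: int
    clock_ghz: float
    mem_mhz: int
    cache_mb: int
    memory_gb: int


def parse_sku(line: str) -> Optional[BladeSku]:
    """Parse 'PART – MODEL, CPU..., MEMORY, ...'; return None if the line doesn't fit."""
    # Split on the first dash (en-dash or hyphen) between part number and description.
    parts = re.split(r"\s*[–-]\s*", line.strip(), maxsplit=1)
    if len(parts) != 2:
        return None
    part_number, desc = parts
    cpu = CPU_RE.search(desc)
    mem = MEM_RE.search(desc)
    if cpu is None or mem is None:
        return None
    return BladeSku(
        part_number=part_number,
        model=desc.split(",")[0].strip(),
        cpus=int(cpu["cpus"] or 1),  # no "2x" prefix: assume one CPU installed
        cores_per_cpu=int(cpu["cores"]),
        cpu_model=cpu["model"],
        tdp_watts=int(cpu["tdp"]),
        clock_ghz=float(cpu["ghz"]),
        mem_mhz=int(cpu["mem_mhz"]),
        cache_mb=int(cpu["cache_mb"]),
        memory_gb=int(mem["count"]) * int(mem["size_gb"]),
    )
```

Under these assumptions, the 7871EHU line decodes to an Express HS22V with two 6-core X5660 CPUs and 24GB of memory (6 x 4GB), while the 7870H4U line decodes to an HS22 with one 6-core X5670 and 6GB (3 x 2GB).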

I could not find any information on what Dell will be offering from a blade server perspective, so if you have information (that is not confidential), feel free to send it my way.

4 Socket Blade Servers Density: Vendor Comparison

IMPORTANT NOTE – I updated this blog post on Feb. 28, 2011 with better details. To view the updated blog post, please go to:

https://bladesmadesimple.com/2011/02/4-socket-blade-servers-density-vendor-comparison-2011/

Original Post (March 10, 2010):

With the Intel Nehalem EX processor a couple of weeks away, I wonder what impact it will have on the blade server market. I’ve been talking about IBM’s HX5 blade server for several months now, so it is very clear that the blade server vendors will be developing blades with some iteration of the Xeon 7500 processor. In fact, several people have confirmed to me on Twitter that HP, Dell and even Cisco will be offering a 4-socket blade after Intel officially announces it on March 30. For today’s post, I wanted to take a look at how the 4-socket blade space will impact the overall capacity of a blade server environment. NOTE: this is purely speculation; I have no definitive information from any of these vendors that is not already public.

Cisco

The Cisco UCS 5108 chassis holds 8 “half-width” B200 blade servers or 4 “full-width” B250 blade servers, so when guessing at what design Cisco will use for a 4-socket Intel Xeon 7500 (Nehalem EX) architecture, I have to place my bet on the full-width form factor. Why? Simply because there is more real estate. The Cisco B250 M1 blade server is known for its large memory capacity; however, Cisco could sacrifice some of that extra memory space for a 4-socket “Cisco B350” blade. This would pose a problem for customers wanting to fill a complete rack with these servers, as it would only allow for a total of 28 servers in a 42U rack (7 chassis x 4 servers per chassis).

On the other hand, Cisco is in a unique position in that its half-width form factor also has extra real estate, because it doesn’t have 2 daughter card slots like its competitors. Perhaps Cisco could create a half-width blade with 4 CPUs (a B300?). With a 42U rack and a half-width design, you would be able to get a maximum of 56 blade servers (7 chassis x 8 servers per chassis).

Dell

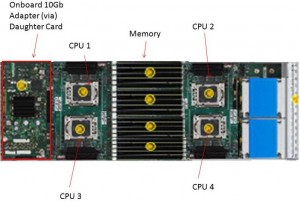

The 10U M1000e chassis from Dell can currently handle 16 “half-height” blade servers or 8 “full-height” blade servers. I don’t foresee any way that Dell would be able to put 4 CPUs into a half-height blade. There just isn’t enough room. To do this, they would have to sacrifice something, like memory slots or a daughter card expansion slot, which just doesn’t seem worth it. Therefore, I predict that Dell’s 4-socket blade will be a full-height blade server, probably named the PowerEdge M910. With this assumption, you would be able to get 32 blade servers in a 42U rack (4 chassis x 8 blades).

HP

Similar to Dell, HP’s 10U BladeSystem c7000 chassis can currently handle 16 “half-height” blade servers or 8 “full-height” blade servers. I don’t foresee any way that HP would be able to put 4 CPUs into a half-height blade. There just isn’t enough room. To do this, they would have to sacrifice something, like memory slots or a daughter card expansion slot, which just doesn’t seem worth it. Therefore, I predict that HP’s 4-socket blade will be a full-height blade server, probably named the ProLiant BL680 G7 (yes, they’ll skip G6). With this assumption, you would be able to get 32 blade servers in a 42U rack (4 chassis x 8 blades).

IBM

Finally, IBM’s 9U BladeCenter H chassis offers up 14 servers. IBM has one server size, called a “single-wide.” IBM will also have the ability to combine servers to form a “double-wide,” which is what the newly announced IBM BladeCenter HX5 requires. Double-wide blade servers reduce the IBM BladeCenter’s capacity to 7 servers per chassis. This means that you would be able to put 28 four-socket IBM HX5 blade servers into a 42U rack (4 chassis x 7 servers each).

Summary

In a tie for 1st place at 32 blade servers per 42U rack, Dell and HP would have the highest 4-socket blade density based on their existing full-height blade designs. IBM and Cisco would tie for 3rd place with 28 blade servers per 42U rack. However, IF Cisco (or HP and Dell, for that matter) were able to magically redesign their half-height servers to hold 4 CPUs, they would take 1st place for blade density: 56 servers per rack for Cisco, or 64 for HP and Dell (4 chassis x 16 blades).
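The per-rack numbers in this post all come from the same calculation: how many whole chassis fit in 42U, times 4-socket blades per chassis. Here's a minimal sketch of that arithmetic. The chassis heights are 10U (Dell, HP), 9U (IBM) and 6U for Cisco's UCS 5108 (implied by the 7-chassis-per-rack figure above); the half-width/half-height rows are the hypothetical redesigns, and the sketch ignores rack space needed for top-of-rack switches, PDUs or cabling.

```python
RACK_U = 42

# (chassis height in U, 4-socket blades per chassis), following the guesses above.
# Rows marked "hypothetical" are redesigns no vendor has announced.
LAYOUTS = {
    "Cisco UCS 5108, full-width": (6, 4),
    "Cisco UCS 5108, half-width (hypothetical)": (6, 8),
    "Dell M1000e, full-height": (10, 8),
    "HP c7000, full-height": (10, 8),
    "Dell/HP, half-height (hypothetical)": (10, 16),
    "IBM BladeCenter H, double-wide HX5": (9, 7),
}


def per_rack(chassis_u: int, blades_per_chassis: int, rack_u: int = RACK_U):
    """Return (whole chassis that fit in the rack, blades in the rack)."""
    chassis = rack_u // chassis_u
    return chassis, chassis * blades_per_chassis


if __name__ == "__main__":
    for name, (height, blades) in LAYOUTS.items():
        chassis, total = per_rack(height, blades)
        print(f"{name}: {chassis} chassis x {blades} = {total} blades")
```

Note that a 6U chassis fills a 42U rack exactly (7 x 6U), while the 10U and 9U chassis leave 2U and 6U unused, respectively, which is part of why Cisco's half-width design looks so dense on paper.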

Yes, I know that there is a slim chance that anyone would fill up a rack with 4-socket servers; however, I thought this would be a good comparison to make. What are your thoughts? Let me know in the comments below.

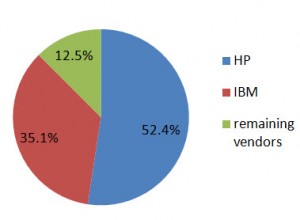

IDC Q4 2009 Report: Blade Servers STILL Growing, HP STILL Leading in Share

IDC reported on February 24, 2010 that blade server sales returned to quarterly revenue growth in Q4 2009, with factory revenues increasing 30.9% year over year (vs. 1.2% in Q3). Q4 also brought the first year-over-year increase in shipments in 2009, at 8.3%. Overall, blade servers accounted for $1.8 billion in Q4 2009 (up from $1.3 billion in Q3), which represented 13.9% of overall server revenue. IDC also reported that more than 87% of all blade revenue in Q4 2009 was driven by x86 systems, where blades now represent 21.4% of all x86 server revenue.
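Those percentages imply a few totals that IDC didn't state outright. Here's a back-of-the-envelope sketch of that arithmetic. These derived values are my own rough estimates, not IDC figures: the inputs are already rounded, and the x86 estimate uses exactly 87% even though IDC said "more than 87%."

```python
def implied_total(part: float, share_pct: float) -> float:
    """If `part` is share_pct% of some total, return that total."""
    return part / (share_pct / 100.0)


BLADE_REVENUE_Q4 = 1.8    # $B, Q4 2009 (IDC)
BLADE_REVENUE_Q3 = 1.3    # $B, Q3 2009 (IDC)
X86_SHARE_OF_BLADES = 87  # percent; IDC says "more than 87%"

# Blades were 13.9% of all server revenue -> implied total server market.
total_server = implied_total(BLADE_REVENUE_Q4, 13.9)
# x86 blade revenue, then x86 server market (blades = 21.4% of x86 servers).
x86_blades = BLADE_REVENUE_Q4 * X86_SHARE_OF_BLADES / 100
x86_server = implied_total(x86_blades, 21.4)
# Quarter-over-quarter blade revenue growth, Q3 -> Q4.
sequential_growth = (BLADE_REVENUE_Q4 / BLADE_REVENUE_Q3 - 1) * 100

if __name__ == "__main__":
    print(f"Implied total server revenue: ${total_server:.1f}B")
    print(f"Implied x86 server revenue:   ${x86_server:.1f}B")
    print(f"Blade revenue growth Q3->Q4:  {sequential_growth:.0f}%")
```

Roughly, that works out to about $12.9 billion in total server revenue for the quarter, about $7.3 billion of it x86, and blade revenue up about 38% from Q3 to Q4.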

While the press release did not provide details of the market share for all of the top 5 blade vendors, they did provide data for the following:

#1 market share: HP with 52.4%

#2 market share: IBM, whose market share grew 5.7% from Q3 to 35.1%

As an important note, according to IDC, IBM significantly outperformed the market with year-over-year revenue growth of 64.1%.

According to Jed Scaramella, senior research analyst in IDC's Datacenter and Enterprise Server group, "Blades remained a bright spot in the server vendors’ portfolios. They were able to grow blade revenue throughout the year while maintaining their average selling prices. Customers recognize the benefits extend beyond consolidation and density, and are leveraging the platform to deliver a dynamic IT environment. Vendors consider blades strategic to their business due to the strong loyalty customers develop for their blade vendor as well as the higher level of pull-through revenue associated with blades."

HP Tech Day (#hpbladesday) – Final Thoughts (REVISED)

(revised 5/4/2010)

First, I’d like to thank HP for inviting me to HP Tech Day in Houston. I’m honored that I was chosen and hope that I’m invited back, even after my challenging questions about the Tolly Report. It was a fun-packed day and a half, and while it was a great event, I won’t miss having to hashtag (#hpbladesday) all my tweets. I figured I’d use this last day to offer up my final thoughts, for what they are worth.

Blogger Attendees

As some of you may know, I’m still the rookie of this blogging community, especially in this group of invitees, so I didn’t have a history with anyone in the group except Rich Brambley of http://vmetc.com. However, this did not matter, as they all welcomed me as if I were one of their own. In fact, they even treated me to a practical joke, letting me walk around HP’s Factory Express tour for half an hour with a ProLiant DL180 G6 sticker on my back (thanks to Stephen and Greg for that one). Yes, that’s me in the picture.

All jokes aside, these bloggers were top class, and they offer up some great blogs, so if you don’t check them out daily, please make sure to visit them. Here’s the list of attendees and their sites:

Rich Brambley: http://vmetc.com

Greg Knieriemen: http://www.storagemonkeys.com/ and http://iKnerd.com

Also check out Greg’s notorious podcast, “Infosmack” (if you like it, make sure to subscribe via iTunes)

Chris Evans: http://thestoragearchitect.com

Simon Seagrave: http://techhead.co.uk

John Obeto: http://absolutelywindows.com

(don’t mention VMware or Linux to him, he’s all Microsoft)

Frank Owen: http://techvirtuoso.com

Martin Macleod: http://www.bladewatch.com/

Stephen Foskett: http://gestaltit.com/ and http://blog.fosketts.net/

Devang Panchigar: http://www.storagenerve.com

A special thanks to the extensive HP team who participated in the blogging efforts as well.

HP Demos and Factory Express Tour

I think I got the most out of this event from the live demos and the Factory Express tour. These are things that you can read about, but until you see them in person, you can’t appreciate the value that HP brings to the table, through their product design and through their services.

The image on the left shows the MDS600 storage shelf, something that I’ve read about many times, but until I saw it, I didn’t realize how cool, and useful, it was: 70 drives in a 5U space. That’s huge. Seeing things like this, live and in person, is what these HP Tech Days need to be about: hands-on, live demos and tours of what makes HP tick.

The Factory Express tour was really cool. I think we should have been allowed to work the line for an hour along with the HP employees. On this tour we saw how customized HP server builds go from being an order to being a solution. Workers like the one in the picture on the right typically build 30 servers a day, depending on the type of server. The entire process involves testing and 100% audits to ensure accuracy.

My words won’t do HP Factory Express justice, so check out this video from YouTube:

For a full list of my pictures taken during this event, please check out:

http://tweetphoto.com/user/kevin_houston

http://picasaweb.google.com/101667790492270812102/HPTechDay2010#

Feedback to the HP team for future events:

1) Keep the blogger group small

2) Keep it to HP demos and presentations (no partners, please)

3) More time on hands-on, live demos and tours. This is where the magic is.

4) Try and do this at least once a quarter. HP’s doing a great job building their social media teams, and this event goes a long way in creating that buzz.

Thanks again, HP, and to Ivy Worldwide (http://www.ivyworldwide.com) for doing a great job. I hope to attend again!

Disclaimer: airfare, accommodations and meals are being provided by HP; however, the content being blogged is solely my opinion and does not in any way express the opinions of HP.

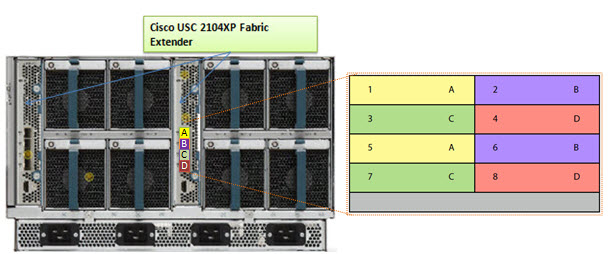

Tolly Report: HP Flex-10 vs Cisco UCS (Network Bandwidth Scalability Comparison)

Tolly.com announced on 2/25/2010 a new test report that compares network bandwidth scalability between the HP BladeSystem c7000 with BL460c G6 servers and the Cisco UCS 5100 with B200 servers, and the results were interesting. The report tested 6 HP blades with a single Flex-10 module vs. 6 Cisco blades using their Fabric Extender plus a single Fabric Interconnect. I’m not going to try to restate what the report says (for that, you can download it directly); instead, I’m going to highlight the results. It is important to note that the report was “commissioned by Hewlett-Packard Dev. Co, L.P.”

Result #1: HP BladeSystem C7000 with a Flex-10 Module Tested to have More Aggregate Server Throughput (Gbps) over the Cisco UCS with a Fabric Extender connected to a Fabric Interconnect in a Physical-to-Physical Comparison

>When 4 physical servers were tested, Cisco achieved an aggregate throughput of 36.59 Gbps vs. HP’s 35.83 Gbps (WINNER: Cisco)

>When 6 physical servers were tested, Cisco achieved an aggregate throughput of 27.37 Gbps vs HP achieving 53.65 Gbps – a difference of 26.28 Gbps (WINNER: HP)

Result #2: HP BladeSystem C7000 with a Flex-10 Module Tested to have More Aggregate Server Throughput (Gbps) over the Cisco UCS with a Fabric Extender connected to a Fabric Interconnect in a Virtual-to-Virtual Comparison

>Testing 2 servers, each running 8 Red Hat Linux virtual machines on VMware, showed that HP achieved an aggregate throughput of 16.42 Gbps vs. Cisco UCS’s 16.70 Gbps (WINNER: Cisco).

These results were obtained with the 2 x Cisco B200 blade servers each mapped to a dedicated 10Gb uplink port on the Fabric Extender (FEX). When the 2 x Cisco B200 blade servers were configured to share the same 10Gb uplink port on the FEX, the aggregate throughput on the Cisco UCS decreased to 9.10 Gbps.

A few points to note about these findings:

a) the HP Flex-10 Module has 8 x 10Gb uplinks whereas the Cisco Fabric Extender (FEX) has 4 x 10Gb uplinks

b) Cisco’s FEX design maps the 8 blade servers onto the 4 external FEX ports at a 2:1 ratio (2 blades per external FEX port). The current Cisco UCS design requires the servers to be “pinned,” or permanently assigned, to their respective FEX uplink. This works well with 4 blade servers, but with more than 4 blade servers, each uplink’s traffic is shared between two servers, which could cause bandwidth contention.

Furthermore, it’s important to understand that the design of the UCS blade infrastructure does not allow communication to go from Server 1 to Server 2 without leaving the FEX, connecting to the Fabric Interconnect (top of the picture), and then returning to the FEX and connecting to the server. This design is the potential cause of the decrease in aggregate throughput from 16.70Gbps to 9.10Gbps shown above.
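To see why static pinning matters once you pass 4 blades, here's a minimal sketch of the contention math. It assumes a round-robin slot-to-uplink mapping (slots 1 and 5 share uplink 1, 2 and 6 share uplink 2, and so on) and that blades sharing an uplink split its 10Gb evenly. Those are simplifying assumptions on my part, not Cisco's documented behavior, and the model ignores protocol overhead and the extra hop through the Fabric Interconnect.

```python
from collections import Counter

FEX_UPLINKS = 4
UPLINK_GBPS = 10.0


def pinned_uplink(slot: int, uplinks: int = FEX_UPLINKS) -> int:
    """Static pinning of blade slot (1-8) to a FEX uplink (1-uplinks); round-robin assumed."""
    return (slot - 1) % uplinks + 1


def fair_share_gbps(active_slots, uplinks: int = FEX_UPLINKS, link_gbps: float = UPLINK_GBPS):
    """Bandwidth each active blade gets if every blade tries to saturate its pinned uplink."""
    active_slots = list(active_slots)
    per_link = Counter(pinned_uplink(s, uplinks) for s in active_slots)
    return {s: link_gbps / per_link[pinned_uplink(s, uplinks)] for s in active_slots}


if __name__ == "__main__":
    for slots in ([1, 2], [1, 5], range(1, 9)):
        shares = fair_share_gbps(slots)
        print(list(slots), shares, "aggregate:", sum(shares.values()))
```

With blades on separate uplinks (slots 1 and 2), the ceiling is 20 Gbps aggregate; pin both to the same uplink (slots 1 and 5) and the ceiling drops to 10 Gbps, which lines up with the 9.10 Gbps Tolly measured in the shared-uplink case. With all 8 slots busy, each blade gets at most 5 Gbps under these assumptions.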

One of the “Bottom Line” conclusions from this report states, “throughput degradation on the Cisco UCS caused by bandwidth contention is a cause of concern for customers considering the use of UCS in a virtual server environment”; however, I encourage you to take a few minutes, download the full report from the Tolly.com website and draw your own conclusions.

Let me know your thoughts about this report – leave a comment below.

Disclaimer: This report was brought to my attention while attending the HP Tech Day event, where airfare, accommodations and meals are being provided by HP; however, the content being blogged is solely my opinion and does not in any way express the opinions of HP.

HP Tech Day – Day 1 Recap

Wow – the first day of HP Tech Day 2010 was jam-packed with meetings, presentations and good information. Unfortunately, it appears there won’t be any confidential, earth-shattering news to report, but it has still been a great event to attend.

My favorite part of the day was going to the HP BladeSystem demo, where we not only got to get our hands on the blade servers, but we got to see what the mid-plane and power bus looks like outside the chassis.

Kudos to James Singer, HP Blade engineer, who did a great job talking about the HP BladeSystem and all it offers. My only advice to the HP events team is to double the time we get with the blades next time. (Isn’t that why we were here?)

Since I spent most of the day Tweeting what was going on, I figured it would be easiest to just list my tweets throughout the day. If you have any questions about any of this, let me know.

My tweets from 2/25/2010 (latest to earliest):

Q&A from HP StorageWorks CTO, Paul Perez

- “the era of spindles for IOPS will be over soon.” Paul Perez, CTO HP StorageWorks

- CTO Perez said Memristors (http://tinyurl.com/39f6br) are the next major evolution in storage – in next 2 or 3 years

- CTO Perez views Solid State (Drives) as an extension of main memory.

- HP StorageWorks CTO, Paul Perez, now discussing HP StorageWorks X9000 Network Storage System (formerly known as IBRIX)

- @SFoskett is grilling the CTO of HP StorageWorks

- Paul Perez – CTO of StorageWorks is now in the room

Competitive Discussion

- Kudos to Gary Thome, Chief Architect at HP, for not wanting to bash any vendor during the competitive blade session

- Cool – we have a first look at a Tolly report comparing HP BladeSystem Flex-10 vs Cisco UCS…

- @fowen Yes – a 10Gb, a CNA and a virtual adapter. Cisco doesn’t have anything “on the motherboard” though.

- RT @fowen: HP is the only vendor (currently) who can embed 10GB nics in Blades @hpbladeday AND Cisco…

- Wish HP allowed more time for deep dive into their blades at #hpbladesday. We’re rushing through in 20 min content that needs an hour.

- Dell’s M1000e blade chassis has the blade connector pins on the server side. This causes a lot of issues as pins bend

- I’m going to have to bite my tongue on this competitive discussion between blade vendors…

- Mentioning HP’s presence in Gartner’s Magic Quadrant (see my previous post on this here) –> http://tinyurl.com/ydbsnan

- Fun – now we get to hear how HP blades are better than IBM, Cisco and Dell

HP BladeSystem Matrix Demo

- Brian Jacquol and Ute Albert are demo’ing the HP BladeSystem Matrix

- RT @Connect_WW: Dan Bowers’ Early Afternoon Update at HP Blades Tech Day #hpbladesday http://bit.ly/9lxga0

Insight Software Demo

- Whoops – previous picture was “Tom Turicchi” not John Schmitz

- John Schmitz, HP, demonstrates HP Insight Software http://tinyurl.com/yjnu3o9

- HP Insight Control comes with “Data Center Power Control” which allows you to define rules for power control inside your DC

- HP Insight Control = “Essential Management”; HP Insight Dynamics = “Advanced Management”

- Live #hpBladesday Tweet Feed can be seen at http://tinyurl.com/ygcaq2a

BladeSystem in the Lab

- c7000 Power Bus (rear) http://tinyurl.com/yjy3kwy #hpbladesday complete list of pics can be found @ http://tinyurl.com/yl465v9

- HP c7000 Power Bus (front) http://tinyurl.com/yfwg88t #hpbladesday (one more pic coming…)

- HP c7000 Midplane (rear) http://tinyurl.com/yhozte6

- HP BladeSystem C7000 Midplane (front) http://tinyurl.com/ylbr9rd

- BladeSystem lab was friggin awesome. Pics to follow

- 23 power “steppings” on each BladeSystem fan

- 4 fan zones in a HP BladeSystem allows for fans to spin at different rates. – controlled by the Onboard Administrator

- The HP BladeSystem cooling fan design came from Ducted Electric Jet Fans (from hobby planes) http://tinyurl.com/yhug94w

- Check out the HP SB40c Storage Blade with the cover off : http://tinyurl.com/yj6xode

- James Singer – talking about HP BladeSystem power (http://tinyurl.com/ykfhbb2)

- DPS takes total loads and pushes on fewer supplies which maximizes the power efficiency

- DPS – Dynamic Power Saver dynamically turns power supplies off based on the server loads (HP exclusive technology)

- HP BladeSystem power supplies are 94% efficient

- HP’s hot-pluggable equipment is not purple, it’s “port wine”

- Here’s the HP BladeSystem C3500 (1/2 of a C7000) http://tinyurl.com/yhbpddt

- In BladeSystem demo with James Singer (HP). Very cool. They’ve got a C3500 (C7000 cut in half.) Picture will come later.

- Having lunch with Dan Bowers (HP marketing) and Gary Thome – talking about enhancements needed for ProLiant support materials
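The Dynamic Power Saver idea from the lab tweets (concentrate the load on fewer power supplies so each runs in its efficient range, and switch the rest off) can be sketched as below. This is an illustration of the concept only, not HP's actual algorithm: the per-supply rating of 2250W, the 75% "efficient" load point and the single spare are hypothetical values. The six supply bays match the c7000 enclosure, and the 94% efficiency figure is the one quoted during the demo.

```python
import math

PSU_WATTS = 2250            # hypothetical per-supply output rating
PSU_BAYS = 6                # a c7000 enclosure has six power supply bays
TARGET_UTILIZATION = 0.75   # hypothetical "efficient" load point per supply
EFFICIENCY = 0.94           # efficiency figure quoted at HP Tech Day


def active_supplies(load_watts: float, spares: int = 1) -> int:
    """Fewest supplies that keep each at or below the target load, plus spares (capped at bays)."""
    needed = max(1, math.ceil(load_watts / (PSU_WATTS * TARGET_UTILIZATION)))
    return min(PSU_BAYS, needed + spares)


def wall_watts(load_watts: float, efficiency: float = EFFICIENCY) -> float:
    """AC input power drawn to deliver load_watts of DC output at the given efficiency."""
    return load_watts / efficiency


if __name__ == "__main__":
    for load in (1500, 5000, 9000):
        print(f"{load} W load -> {active_supplies(load)} active supplies, "
              f"~{wall_watts(load):.0f} W from the wall")
```

Under these assumptions, a lightly loaded enclosure (1,500W) would run on just 2 of its 6 supplies, and the spare count stands in for whatever redundancy mode is configured.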

Virtual Connect

- HP’s published a “Virtual Connect for Dummies” Want a copy? Go to http://tinyurl.com/yhubgld

- New blog posting, HP Tech Day – Live TweetFeed – http://tinyurl.com/yegm9a5

- Next presenter – Mike Kendall – Virtual Connect and Network Convergence

ISB Overview and Data Center Trends 2010

- check out all my previous HP posts at http://tinyurl.com/yzx3hx6

- BladeSystem midplane doesn’t require transceivers, so it’s easy to run 10Gb at same cost as 1Gb

- BladeSystem was designed for 10Gb (with even higher in mind.)

- RT @SFoskett: Spot the secret “G” (for @GestaltIT?) in this #HPBladesDay Nth Generation slide! http://twitpic.com/159q23

- If Cisco wants to be like HP, they’d have to buy Lenovo, Canon and Dunder Mifflin

- discussed how HP blades were used in Avatar (see my post on this here )–> http://tinyurl.com/yl32xud

- HP’s Virtual Client Infra. Solutions design allows you to build “bricks” of servers and storage to serve 1000’s of virtual PCs

- Power capping is built into HP hardware (it’s not in the software.)

- Power Capping is a key technology in the HP Thermal Logic design.

- HP’s Thermal Logic technology allows you to actively manage power over time.