Unless you’ve been hiding in a cave in Eastern Europe, you know by now that the New Orleans Saints are headed to the Super Bowl. According to IBM, this is all due to the Saints having an IBM BladeCenter S running their business. Okay, well, I’m sure there are other reasons, like having stellar talent, but let’s take a look at what IBM did for the Saints.

Other than the obvious threat of having to relocate or evacuate due to the weather, the Saints’ constant travel required them to search for a portable IT solution that would make it easier to quickly set up operations in another city. The Saints were a long-time IBM customer, so they looked at the IBM BladeCenter S for this solution, and it worked great. (I’m going to review the BladeCenter S below, so keep reading.) The Saints consolidated 20 physical servers onto the BladeCenter S, virtualizing the environment with VMware. Although the specific configuration of their blade environment is not disclosed, IBM reports that the Saints are using 1 terabyte of built-in storage, which lets them go on the road with the essential files (scouting reports, financial apps, player stats, etc.) and tools the coaches and staff need. In fact, in the IBM Case Study video, the Assistant Director of IT for the New Orleans Saints, Jody Barbier, says, “The Blade Center S definitely can make the trip with us if we go to the Super Bowl.” I guess we’ll see. Be looking for the IBM Marketing engine to jump on this bandwagon in the next few days.

A Look at the IBM BladeCenter S

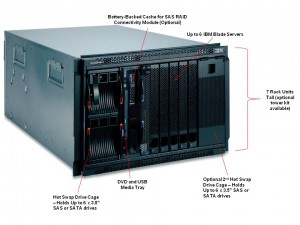

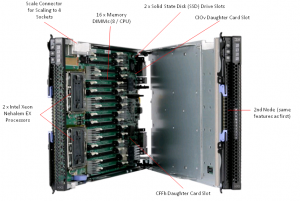

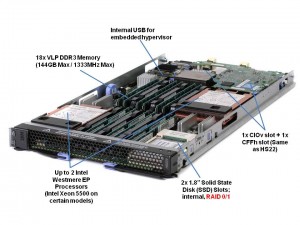

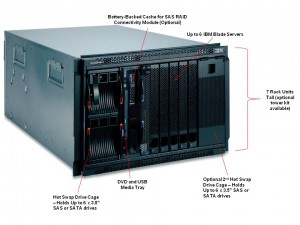

The IBM BladeCenter S is a 7U-high chassis that can hold 6 blade servers and up to 12 disk drives, held in Disk Storage Modules located on the left and right of the blade server bays. The chassis can either assign disk drives to an individual blade server or create a RAID volume and allow all of the servers to access the data. As of this writing, the drive options for the Disk Storage Module are: 146GB, 300GB and 450GB SAS; 750GB and 1TB Near-Line SAS; and 750GB and 1TB SATA. Depending on your application needs, you could have up to 12TB of local storage for 6 servers. That’s pretty impressive, but wait, there’s more! As I reported a few weeks ago, there is a substantial rumour of a forthcoming option to use 2.5″ drives. This would allow up to 24 drives (12 per Disk Storage Module). Although that would provide more spindles, the current capacities of 2.5″ drives aren’t quite up to those of the 3.5″ drives. Again, that’s just “rumour” – IBM has not disclosed whether that option is coming (but it is…)
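To make the storage math concrete, here is a minimal Python sketch of the "up to 12TB" figure. It assumes decimal units (1TB = 1000GB), every bay filled with the same drive, and raw capacity before any RAID overhead. The names `DRIVE_OPTIONS_GB` and `max_raw_capacity_gb` are my own for illustration, and the 12-drives-per-module constant reflects the rumoured 2.5″ layout, not anything IBM has confirmed.

```python
# Drive sizes listed above, in decimal GB (assumption: 1 TB = 1000 GB).
DRIVE_OPTIONS_GB = {
    "146GB SAS": 146,
    "300GB SAS": 300,
    "450GB SAS": 450,
    "750GB Near-Line SAS": 750,
    "1TB Near-Line SAS": 1000,
    "750GB SATA": 750,
    "1TB SATA": 1000,
}

# Chassis layout from the post: 2 Disk Storage Modules, 12 drives in total.
MODULES = 2
DRIVES_PER_MODULE_35 = 6   # current 3.5" layout (12 drives / 2 modules)
DRIVES_PER_MODULE_25 = 12  # rumoured 2.5" layout, not confirmed by IBM


def max_raw_capacity_gb(drive_gb, drives_per_module=DRIVES_PER_MODULE_35):
    """Raw capacity with every bay holding the same drive, ignoring RAID overhead."""
    return drive_gb * drives_per_module * MODULES


if __name__ == "__main__":
    for name, size_gb in DRIVE_OPTIONS_GB.items():
        total_tb = max_raw_capacity_gb(size_gb) / 1000
        print(f"{name}: {total_tb:.2f} TB raw across 12 bays")
```

Filling all 12 bays with 1TB drives gives the 12TB figure; usable space will be lower once you carve out a RAID volume.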

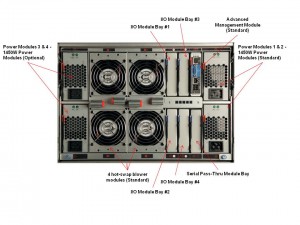

IBM BladeCenter – Rear View

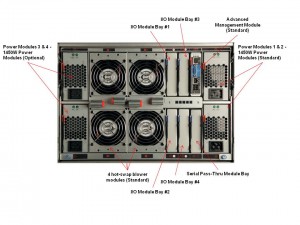

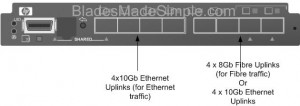

I love pictures – so I’ve attached an image of the BladeCenter S, as seen from the back. A few key points to make note of:

110v Capable – yes, this can run on the average office power. That’s the idea behind it. If you have a small closet or an area near a desk, you can plug this bad boy in. That being said, I always recommend calculating the power with IBM’s Power Configurator to make sure your design doesn’t exceed what 110v can handle. Yes, this box will run on 220v as well. Also, the power supplies are auto-sensing so there’s no worry about having to buy different power supplies based on your needs.

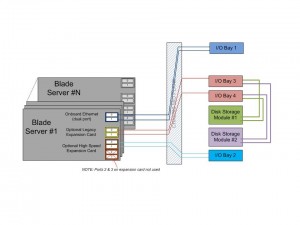

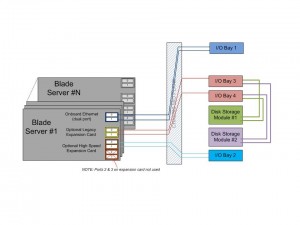

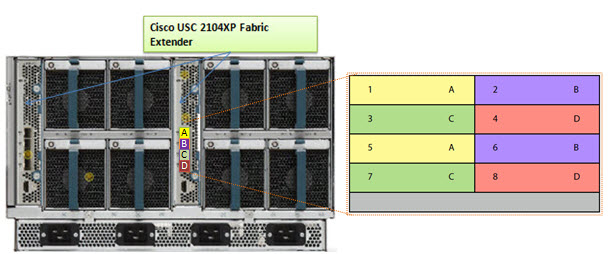

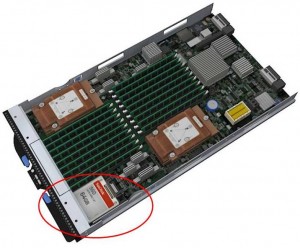

I/O Modules – if you are familiar with the IBM BladeCenter or IBM BladeCenter H I/O architecture, you’ll know that the design is redundant, with dual paths. With the IBM BladeCenter S, this isn’t the case. The onboard network adapters (NICs) are both mapped to the I/O module in Bay #1. The expansion card is mapped to Bays #3 and #4, and the high-speed card slot (CFF-h) is mapped to I/O Bay #2. Yes, this design makes I/O Bays 1 and 2 single points of failure (since both paths connect into the same module bay); however, when you look at the typical small office or branch office environment that the IBM BladeCenter S is designed for, you’ll realize that they very rarely have redundant network fabrics – so this is no different.
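If it helps to see the mapping as data, here is a small Python sketch of the bay assignments described above. The dictionary `IO_MAP` and the helper `single_path_bays` are hypothetical names of mine, not anything from IBM’s tooling; the sketch simply flags any bay that is the only path for an adapter.

```python
# Adapter-to-bay mapping as described in this post (not an IBM data format).
IO_MAP = {
    "onboard NICs": {1},           # both NICs land on I/O Bay 1
    "expansion card": {3, 4},      # one path to each of Bays 3 and 4
    "CFF-h high-speed card": {2},  # both paths land on I/O Bay 2
}


def single_path_bays(io_map):
    """Return the bays that are the sole path for at least one adapter."""
    spof = set()
    for bays in io_map.values():
        if len(bays) == 1:
            spof |= bays
    return sorted(spof)


if __name__ == "__main__":
    print("Single points of failure: bays", single_path_bays(IO_MAP))
```

Only the expansion card gets two distinct bays, which is why Bays 1 and 2 are the ones to watch.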

Another key point here is that I/O Bays 3 and 4 are connected to the Disk Storage Modules mentioned above. In order for a blade server to access the external disks in the Disk Storage Module bays, the blade server must:

a) have a SAS Expansion or Connectivity card installed in the expansion card slot

b) have 1 or 2 SAS Connectivity or RAID modules attached in Bays 3 and 4

This means that there is currently no way to use the local drives (in the Disk Storage Modules) and have external access to a fibre storage array.
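The two conditions above can be written as a quick check. This is only an illustrative Python sketch: the string labels (`"sas"`, `"sas_connectivity"`, `"sas_raid"`, `"fibre_channel"`) and the function name are made up for the example and do not correspond to IBM part names.

```python
# Hypothetical labels for the SAS modules that can sit in I/O Bays 3 and 4.
SAS_MODULES = {"sas_connectivity", "sas_raid"}


def can_reach_disk_storage(expansion_card, bay3_module, bay4_module):
    """True if a blade meets both conditions (a) and (b) described above.

    expansion_card: label for the card in the blade's expansion slot, or None.
    bay3_module / bay4_module: label for the module in each bay, or None.
    """
    has_sas_card = expansion_card == "sas"                                      # (a)
    has_sas_module = bay3_module in SAS_MODULES or bay4_module in SAS_MODULES   # (b)
    return has_sas_card and has_sas_module


if __name__ == "__main__":
    print(can_reach_disk_storage("sas", "sas_raid", None))
    print(can_reach_disk_storage("fibre_channel", "sas_raid", "sas_raid"))
```

Because the blade’s single expansion slot and Bays 3 and 4 are all taken up by SAS in the passing case, there is nowhere left to put a fibre card, which is the limitation noted above.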

BladeCenter S Office Enablement Kit

Finally – I wanted to show you the optional Office Enablement Kit. This is an 11U enclosure that is based on IBM’s NetBay 11. It has security doors and special acoustics and air filtration to suit office environments. The Kit features:

* an acoustical module (to lower the sound of the environment). Check out this YouTube video for details.

* a locking door

* 4U of extra space (for other devices)

* wheels

There is also an optional Air Contaminant Filter that helps keep the IBM BladeCenter S functional in dusty environments (e.g. shops or production floors).

If the BladeCenter S is going to be used in an environment without a rack (e.g. a broom closet) or in a mobile environment (e.g. going to the Super Bowl), the Office Enablement Kit is a necessary addition.

So, hopefully, you can now see the value that the New Orleans Saints saw in the IBM BladeCenter S for their flexible, mobile IT needs. Good luck in the Super Bowl, Saints. I know that IBM will be rooting for you.

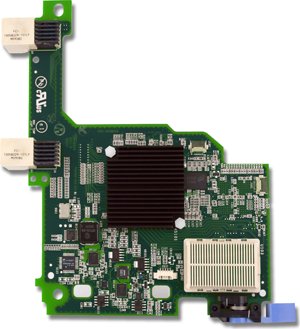
