IBM is once again striving to increase market share by offering customers the chance to get a “free” IBM BladeCenter chassis. The last time they promoted a free chassis was in November, so this year they kicked in the promo effective July 5, 2011. The promotion is for a free chassis without any purchase; however, a chassis without any blades or switches is just a metal box. Regardless, this promotion is a great way to help offset some of the implementation cost of your blade server project. Continue reading


Tag Archives: BladeCenter

IBM Blades on the Battlefield

You have probably heard of IBM’s ruggedized BladeCenter offering, the BladeCenter T and HT but did you know there was another IBM blade server offering that meets MIL-SPEC requirements that is not sold by IBM? Continue reading

Virtual I/O on IBM BladeCenter (IBM Virtual Fabric Adapter by Emulex)

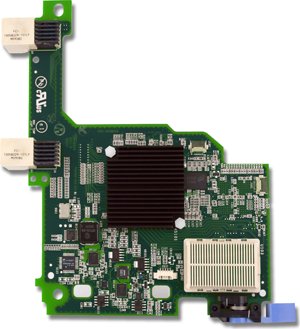

A few weeks ago, IBM and Emulex announced a new blade server adapter for the IBM BladeCenter and IBM System x line, called the "Emulex Virtual Fabric Adapter for IBM BladeCenter" (IBM part # 49Y4235). Frequent readers may recall that I had a "so what" attitude when I blogged about it in October, and that was because I didn't get it. I didn't get what the big deal was with being able to take a 10Gb pipe and carve it up into 4 "virtual NICs". HP's been doing this for a long time with their FlexNICs (check out VirtualKennth's blog for great detail on this technology), so I didn't see the value in what IBM and Emulex were trying to do. But now I understand. Before I get into this, let me remind you of what this adapter is. The Emulex Virtual Fabric Adapter (CFFh) for IBM BladeCenter is a dual-port 10 Gb Ethernet card that supports 1 Gbps or 10 Gbps traffic, or up to eight virtual NIC devices.


This adapter hopes to address three key I/O issues:

1. Need for more than two ports per server, with 6-8 recommended for virtualization

2. Need for more than 1Gb bandwidth, but can't support full 10Gb today

3. Need to prepare for network convergence in the future

"1, 2, 3, 4"

I recently attended an IBM/Emulex partner event, and Emulex presented a unique way to understand the value of the Emulex Virtual Fabric Adapter via the term "1, 2, 3, 4." Let me explain:

"1" – Emulex uses a single chip architecture for these adapters. (As a non-I/O guy, I'm not sure of why this matters – I welcome your comments.)

"2" – Supports two platforms: rack and blade (Easy enough to understand, but this also emphasizes that a majority of the new IBM System x servers announced this week will have the Virtual Fabric Adapter "standard")

"3" – Emulex will have three product models for IBM (one for blade servers, one for the rack servers and one integrated into the new eX5 servers)

"4" – There are four modes of operation:

- Legacy 1Gb Ethernet

- 10Gb Ethernet

- Fibre Channel over Ethernet (FCoE)…via software entitlement ($$)

- iSCSI Hardware Acceleration…via software entitlement ($$)

This last part is the key to the reason I think this product could be of substantial value. The adapter enables a user to begin with traditional Ethernet, then grow into 10Gb, FCoE or iSCSI without any physical change – all they need to do is buy a license (for the FCoE or iSCSI).

Modes of operation

The expansion card has two modes of operation: standard physical port mode (pNIC) and virtual NIC (vNIC) mode.

In vNIC mode, each physical port appears to the blade server as four virtual NICs with a default bandwidth of 2.5 Gbps per vNIC. Bandwidth for each vNIC can be configured from 100 Mbps to 10 Gbps, up to a maximum of 10 Gb per virtual port.

In pNIC mode, the expansion card can operate as a standard 10 Gbps or 1 Gbps 2-port Ethernet expansion card.

As previously mentioned, a future entitlement purchase will allow for up to two FCoE ports or two iSCSI ports. The FCoE and iSCSI ports can be used in combination with up to six Ethernet ports in vNIC mode, up to a maximum of eight total virtual ports.
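To make the vNIC numbers a little more concrete, here is a rough Python sketch that sanity-checks a bandwidth plan for one physical port. The limits (4 vNICs per port, 100 Mbps to 10 Gbps each, 2.5 Gbps default) come from the description above. The rule that the vNICs on a port should not add up to more than 10 Gb is my own assumption, and `check_port_plan` is a made-up helper name, not anything from IBM or Emulex tooling.

```python
# Figures from the post: 4 vNICs per physical port, 2.5 Gbps default,
# each vNIC configurable from 100 Mbps to 10 Gbps.
VNICS_PER_PORT = 4
MIN_MBPS = 100
MAX_MBPS = 10_000
DEFAULT_MBPS = 2_500
# Assumption (not stated in the post): the vNICs sharing one physical port
# should not be allocated more than the port's 10 Gb line rate in total.
PORT_CAPACITY_MBPS = 10_000


def check_port_plan(vnic_mbps):
    """Return a list of problems found in one physical port's vNIC plan."""
    problems = []
    if len(vnic_mbps) > VNICS_PER_PORT:
        problems.append(
            f"{len(vnic_mbps)} vNICs requested, port exposes {VNICS_PER_PORT}"
        )
    for index, mbps in enumerate(vnic_mbps):
        if not MIN_MBPS <= mbps <= MAX_MBPS:
            problems.append(
                f"vNIC {index}: {mbps} Mbps is outside {MIN_MBPS}-{MAX_MBPS} Mbps"
            )
    total = sum(vnic_mbps)
    if total > PORT_CAPACITY_MBPS:
        problems.append(f"total {total} Mbps exceeds {PORT_CAPACITY_MBPS} Mbps")
    return problems


# The default layout: four vNICs at 2.5 Gbps each fills the port exactly.
default_plan = [DEFAULT_MBPS] * VNICS_PER_PORT
print(check_port_plan(default_plan))

# A plan with one undersized vNIC and an oversubscribed port.
for problem in check_port_plan([200, 5_000, 5_000, 50]):
    print(problem)
```

The default plan comes back clean, while the second plan is flagged twice: once for the 50 Mbps vNIC and once for the oversubscribed total.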

Mode and IBM switch compatibility:

- vNIC: works with the BNT Virtual Fabric Switch

- pNIC: works with BNT, IBM Pass-Thru, Cisco Nexus

- FCoE: BNT or Cisco Nexus

- iSCSI Acceleration: all IBM 10GbE switches

I really think the "one card can do all" concept works really well for the IBM BladeCenter design, and I think we'll start seeing more and more customers move toward this single card concept.

Comparison to HP Flex-10

I'll be the first to admit, I'm not a network or storage guy, so I'm not really qualified to compare this offering to HP's Flex-10, however IBM has created a very clever video that does some comparisons. Take a few minutes to watch and let me know your thoughts.


IBM BladeCenter Rumours

I recently heard some rumours about IBM’s BladeCenter products that I thought I would share – but FIRST let me be clear: this is purely speculation, I have no definitive information from IBM so this may be false info, but my source is pretty credible, so…

4 Socket Nehalem EX Blade

A few weeks ago I posted my speculation on IBM’s announcement that they WILL have a 4 socket blade based on the upcoming Intel Nehalem EX processor (https://bladesmadesimple.com/2009/09/ibm-announces-4-socket-intel-blade-server/), so today I got a bit of an update on this server.

Rumour 1: It appears IBM may call it the HS43 (not HS42 like I first thought.) I’m not sure why IBM would skip the “HS42” nomenclature, but I guess it doesn’t really matter. This is rumoured to be released in March 2010.

Rumour 2: It seems that I was right in that the 4 socket offering will be a double-wide server; however, it appears IBM is working with Intel to provide a 2 socket Intel Nehalem EX blade as the foundation of the HS43. This means that you could start with a 2 socket blade, then “snap on” a second to make it a 4 socket offering, but wait, there’s more… It seems that IBM is going to enable these blade servers to grow to up to 8 sockets by snapping 4 x 2 socket servers together. If my earlier speculations (https://bladesmadesimple.com/2009/09/ibm-announces-4-socket-intel-blade-server/) are accurate and each 2 socket blade module has 12 DIMMs per CPU (24 per module), this means you could have an 8 socket, 64 core, 96 DIMM server with 1.5TB of RAM (using 16GB per DIMM slot), all in a single BladeCenter chassis. This, of course, would take up 4 blade server slots. Now the obvious question around this bit of news is WHY would anyone do this? The current BladeCenter H only holds 14 servers, so you would only be able to get 3 of these monster servers into a chassis. Feel free to offer up some comments on what you think about this.
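For anyone who wants to check the math behind that 8 socket figure, here is a quick Python sketch. Every input is a rumoured or speculated number (8 cores per socket, 12 DIMM slots per CPU, 16GB DIMMs, one chassis slot per 2 socket module), and `scaled_server` is just an illustrative helper name.

```python
# All inputs are rumoured or speculated figures, not confirmed specs.
SOCKETS_PER_MODULE = 2
CORES_PER_SOCKET = 8        # speculated for Nehalem EX
DIMMS_PER_SOCKET = 12       # speculated: 12 memory slots per CPU
GB_PER_DIMM = 16
SLOTS_IN_BLADECENTER_H = 14


def scaled_server(modules):
    """Totals for a server built by snapping together 2 socket modules."""
    sockets = modules * SOCKETS_PER_MODULE
    dimms = sockets * DIMMS_PER_SOCKET
    return {
        "sockets": sockets,
        "cores": sockets * CORES_PER_SOCKET,
        "dimms": dimms,
        "ram_gb": dimms * GB_PER_DIMM,
        # Each module is assumed to occupy one chassis slot.
        "servers_per_chassis": SLOTS_IN_BLADECENTER_H // modules,
    }


print(scaled_server(4))
```

With four modules this works out to 8 sockets, 64 cores, 96 DIMMs and 1,536GB (1.5TB) of RAM, with room for 3 such servers in a 14 slot BladeCenter H.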

Rumour 3: IBM’s BladeCenter S chassis currently uses local drives that are 3.5″. The industry is obviously moving to smaller 2.5″ drives, so it’s only natural that the BladeCenter S drive cage will need to be updated to provide 2.5″ drives. Rumour is that this is coming in April 2010 and it will offer up to 24 x 2.5″ SAS or SATA drives.

Rumour 4: What’s missing from the BladeCenter S right now that HP currently offers? A tape drive. Rumour has it that IBM will be adding a “TS Family” tape drive offering to the BladeCenter S in upcoming months. This makes total sense and is much needed. Customers buying the BladeCenter S are typically smaller offices or branch offices, so using a local backup device is a critical component to ensuring data protection. I’m not sure if this will be in the form of taking up a blade slot (like HP’s model) or if it will be a replacement for one of the 2 drive cages. I would imagine it will be the latter since the BladeCenter S architecture allows for all servers to connect to the drive cages, but we’ll see.

That’s all I have. I’ll continue to keep you updated as I hear rumours or news.

IBM BladeCenter HS22 Delivers Best SPECweb2005 Score Ever Achieved by a Blade Server

According to IBM’s System x and BladeCenter x86 Server Blog, the IBM BladeCenter HS22 server has posted the best SPECweb2005 score ever from a blade server. With a SPECweb2005 supermetric score of 75,155, IBM has reached a mark that no other blade has achieved to date. The SPECweb2005 benchmark is designed to be a neutral benchmark for evaluating the performance of web servers. According to the IBM blog, the score is derived from three different workloads:


- SPECweb2005_Banking – 109,200 simultaneous sessions

- SPECweb2005_Ecommerce – 134,472 simultaneous sessions

- SPECweb2005_Support – 64,064 simultaneous sessions

The HS22 achieved these results using two Quad-Core Intel Xeon Processor X5570 (2.93GHz with 256KB L2 cache per core and 8MB L3 cache per processor—2 processors/8 cores/8 threads). The HS22 was also configured with 96GB of memory, the Red Hat Enterprise Linux® 5.4 operating system, IBM J9 Java® Virtual Machine, 64-bit Accoria Rock Web Server 1.4.9 (x86_64) HTTPS software, and Accoria Rock JSP/Servlet Container 1.3.2 (x86_64).

It’s important to note that these results have not yet been “approved” by SPEC, the group that posts the results, but as soon as they are, they’ll be published at http://www.spec.org/osg/web2005

The IBM HS22 is IBM’s most popular blade server with the following specs:

- up to 2 x Intel 5500 Processors

- 12 memory slots for a current maximum of 96GB of RAM

- 2 hot swap hard drive slots capable of running RAID 1 (SAS or SATA)

- 2 PCI Express connectors for I/O expansion cards (NICs, Fibre HBAs, 10Gb Ethernet, CNA, etc)

- Internal USB slot for running VMware ESXi

- Remote management

- Redundant connectivity

IBM Announces Emulex Virtual Fabric Adapter for BladeCenter…So?

Emulex and IBM announced today the availability of a new Emulex expansion card for blade servers that allows for up to 8 virtual NICs to be assigned for each physical adapter. The “Emulex Virtual Fabric Adapter for IBM BladeCenter (IBM part # 49Y4235)” is a CFF-H expansion card based on industry-standard PCIe architecture that can operate as a “Virtual NIC Fabric Adapter” or as a dual-port 10 Gb or 1 Gb Ethernet card.


When operating as a Virtual NIC (vNIC), each of the 2 physical ports appears to the blade server as 4 virtual NICs for a total of 8 virtual NICs per card. According to IBM, the default bandwidth for each vNIC is 2.5 Gbps. The cool feature about this mode is that the bandwidth for each vNIC can be configured from 100 Mbps to 10 Gbps, up to a maximum of 10 Gb per virtual port. The one catch with this mode is that it ONLY operates with the BNT Virtual Fabric 10Gb Switch Module, which provides independent control for each vNIC. This means no connection to Cisco Nexus…yet. According to Emulex, firmware updates coming later (Q1 2010??) will allow this adapter to handle FCoE and iSCSI as a feature upgrade. Not sure if that means compatibility with Cisco Nexus 5000 or not. We’ll have to wait and see.

When used as a normal Ethernet Adapter (10Gb or 1Gb), aka “pNIC mode”, the card is viewed as a standard 10 Gbps or 1 Gbps 2-port Ethernet expansion card. The big difference here is that it will work with any available 10 Gb switch or 10 Gb pass-thru module installed in I/O module bays 7 and 9.

So What?

I’ve known about this adapter since VMworld, but I haven’t blogged about it because I just don’t see a lot of value. HP has had this functionality for over a year now in their VirtualConnect Flex-10 offering, so this technology is nothing new. Yes, it would be nice to set up a NIC in VMware ESX that only uses 200Mb of a pipe, but what’s the difference between having a fake NIC that “thinks” it’s only able to use 200Mb and a big fat 10Gb pipe for all of your I/O traffic? I’m just not sure, but am open to any comments or thoughts.


REVEALED: IBM's Nexus 4000 Switch: 4001I (Updated)

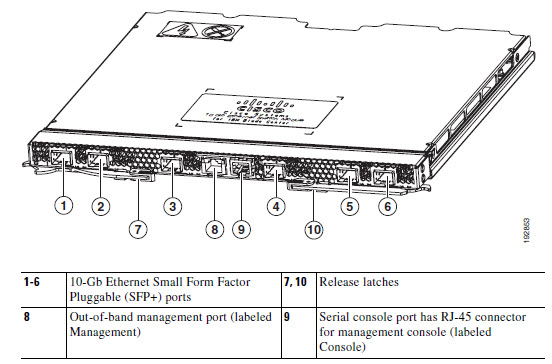

Finally – information on the soon-to-be-released Cisco Nexus 4000 switch for IBM BladeCenter. Apparently IBM is officially calling their version “Cisco Nexus Switch Module 4001I for the IBM BladeCenter.” I’m not sure if it’s “officially” announced yet, but I’ve uncovered some details. Here is a summary of the Cisco Nexus Switch Module 4001I for the IBM BladeCenter:

- Six external 10-Gb Ethernet ports for uplink

- 14 internal XAUI ports for connection to the server blades in the chassis

- One 10/100/1000BASE-T RJ-45 copper management port for out-of-band management link (this port is available on the front panel next to the console port)

- One external RS-232 serial console port (this port is available on the front panel and uses an RJ-45 connector)

More tidbits of info:

- The switch will be capable of forwarding Ethernet and FCoE packets at wire rate speed.

- The six external ports will be SFP+ (no surprise) and they’ll support 10GBASE-SR SFP+, 10GBASE-LR SFP+, 10GBASE-CU SFP+ and GE-SFP.

- Internal port speeds can run at 1 Gb or 10Gb (and can be set to auto-negotiate); full duplex

- Internal ports will be able to forward Layer-2 packets at wire rate speed.

- The switch will work in the IBM BladeCenter “high-speed bays” (bays 7, 8, 9 and 10); however at this time, the available Converged Network Adapters (CNAs) for the IBM blade servers will only work with Nexus 4001I’s located in bays 7 and 9.

There is also mention of a “Nexus 4005I” from IBM, but I can’t find anything on that. I do not believe that IBM has announced this product, so the information provided is based on documentation from Cisco’s web site. I expect announcement to come in the next 2 weeks, though, with availability probably following in November just in time for the Christmas rush!

For details on the information mentioned above, please visit the Cisco web site, titled “Cisco Nexus 4001I and 4005I Switch Module for IBM BladeCenter Hardware Installation Guide“.

If you are interested in finding out more about configuring the NX-OS for the Cisco Nexus Switch Module 4001I for the IBM BladeCenter, check out the Cisco Nexus 4001I and 4005I Switch Module for IBM BladeCenter NX-OS Configuration Guide

UPDATE (10/20/09): the IBM part # for the Cisco Nexus 4001I Switch Module will be 46M6071.

UPDATE # 2 (10/20/09, 5:37 PM EST): Found more Cisco links:

Cisco Nexus 4001I Switch Module At A Glance

Cisco Nexus 4001I Switch Module DATA SHEET

New Picture:


How IBM's BladeCenter works with Cisco Nexus 5000

Other than Cisco’s UCS offering, IBM is currently the only blade vendor that offers a Converged Network Adapter (CNA) for the blade server. The 2 port CNA sits on the server in a PCI Express slot and is mapped to the high speed bays, with CNA port #1 going to High Speed Bay #7 and CNA port #2 going to High Speed Bay #9. Here’s an overview of the IBM BladeCenter H I/O Architecture:

Since the CNAs are only switched to I/O Bays 7 and 9, those are the only bays that require a “switch” for the converged traffic to leave the chassis. At this time, the only option to get the converged traffic out of the IBM BladeCenter H is via a 10Gb “pass-thru” module. A pass-thru module is not a switch – it just passes the signal through to the next layer, in this case the Cisco Nexus 5000.

10 Gb Ethernet Pass-thru Module for IBM BladeCenter

The pass-thru module is relatively inexpensive; however, it requires a connection to the Nexus 5000 for every server that has a CNA installed. As a reminder, the IBM BladeCenter H can hold up to 14 servers with CNAs installed, so that would require 14 of the 20 ports on a Nexus 5010. This is a small cost to pay, however, to gain the 80% efficiency that 10Gb Datacenter Ethernet (or Converged Enhanced Ethernet) offers. The overall architecture for the IBM Blade Server with CNA + IBM BladeCenter H + Cisco Nexus 5000 would look like this:

Hopefully when IBM announces their Cisco Nexus 4000 switch for the IBM BladeCenter H later this month, it will provide connectivity to CNAs on the IBM Blade server and it will help reduce the number of connections required to the Cisco Nexus 5000 from 14 to perhaps 6 ;)

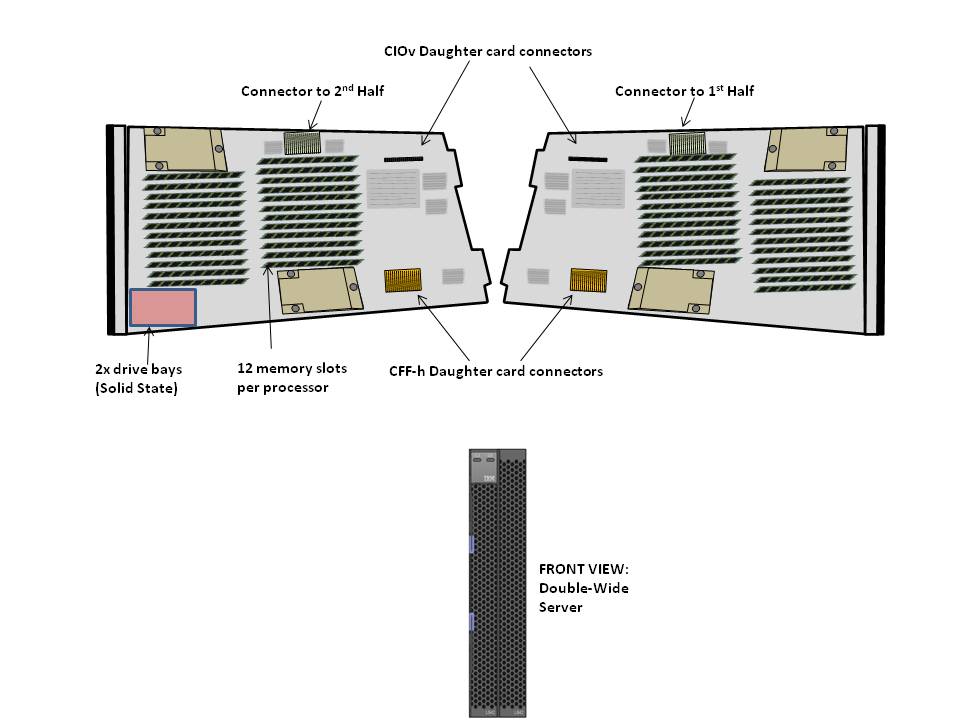

IBM Announces 4 Socket Intel Blade Server-UPDATED

IBM announced last week they will be launching a new blade server modeled with the upcoming 4 socket Intel Nehalem EX. While details have not yet been provided on this new server, I wanted to provide an estimation of what this server could look like, based on previous IBM models. I’ve drawn up what I think it will look like below, but first let me describe it.

“New Server Name”

IBM’s naming schema is pretty straightforward: Intel blades are “HS”, AMD blades are “LS”, Power blades are “JS”. Knowing this, I think the new server will most likely be called an “HS42”. IBM previously had an HS40 and HS41, so calling it an HS42 would make the most sense.

“Size”

With the amount of memory that each CPU will have access to, I don’t see any way for IBM to create a 4 socket blade that wasn’t a “double-wide” form factor. A “double-wide” design means the server is 2 server slots wide, so in a single IBM BladeCenter H chassis, customers would be limited to 7 x HS42’s per chassis.

“Memory”

The Intel Nehalem EX will tentatively support 16 memory slots PER CPU, across 4 memory channels, so a 4 socket server will have 64 memory slots. Each memory channel can hold up to 4 DIMMs. This is great, but this is the MAX for an upcoming Intel Nehalem EX server. I do not expect any blade server vendor to achieve 64 memory slots with 4 CPUs. Since this is the maximum, it makes sense that vendors like IBM will be able to use fewer memory slots. I expect these new servers to have 12 memory slots per CPU (or 3 DIMMs per memory channel). This will still provide 48 memory DIMMs per “HS42” blade server, and with 16GB DIMMs, that would equal 768GB per blade server.
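Here is the slot math spelled out channel by channel as a short Python sketch, comparing the platform maximum with what I expect from a blade. The inputs are the tentative Nehalem EX figures above plus an assumed 16GB DIMM size, and `memory_config` is a hypothetical helper name.

```python
# Tentative/speculated Nehalem EX figures; none are confirmed specs.
SOCKETS = 4
CHANNELS_PER_CPU = 4
GB_PER_DIMM = 16  # assumed DIMM size


def memory_config(dimms_per_channel):
    """Return (slot count, capacity in GB) for a 4 socket server."""
    slots = SOCKETS * CHANNELS_PER_CPU * dimms_per_channel
    return slots, slots * GB_PER_DIMM


platform_max = memory_config(4)    # 4 DIMMs per channel = 16 slots per CPU
expected_blade = memory_config(3)  # 3 DIMMs per channel = 12 slots per CPU
print(platform_max, expected_blade)
```

Dropping from 4 to 3 DIMMs per channel takes the server from 64 slots (1,024GB) down to 48 slots (768GB).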

“CPU”

The “HS42” would have up to 4 x Intel Nehalem EX CPU’s, each with 8 cores, for a total of 32 CPU cores per “HS42” server. HOWEVER, Intel is offering Hyperthreading with this CPU so an 8 core CPU now looks like 16 CPUs.

“Internal Drive Capacity”

I don’t see any way for IBM to have hot-swap drives in this server. There is just not enough real estate. So, I believe they would consider putting in solid state drives (SSDs) toward the front of the server. Will they put them on both sides of the server? Probably not. The role of these drives would be just to provide space for your boot O/S. The data will sit on a storage area network.

“I/O Expansion”

I don’t think that IBM will re-design their existing I/O architecture for the blade servers. Therefore, I expect for each side of the double-wide “HS42” to have a single CIOv and a CFF-h daughter card expansion slot, so a single HS42 would have 4 expansion slots. This is assuming that IBM designs connector pins that interconnect the two halves of the server together that don’t interfere with the card slots (presumably at the upper half of the connections.)

As we come closer to the release date of the Intel Nehalem EX processor later in Q4 of 2009, I expect to hear more definitive details on the announced 4 socket IBM Blade server, so make sure to check back here later this year.

UPDATE (10/6/09): I’m hearing rumors that IBM’s Nehalem EX processor offerings (aka “X5” offerings) will be shipping in Q2 of 2010. Once that is confirmed by IBM, I’ll write a new post.

Cisco UCS vs IBM BladeCenter H

News Flash: Cisco is now selling servers!

Okay, perhaps this isn’t news anymore, but the reality is Cisco has been getting a lot of press lately, from their overwhelming presence at VMworld 2009 to their ongoing cat fight with HP. Since I work for a Solutions Provider that sells HP, IBM and now Cisco blade servers, I figured it might be good to “try” and put together a comparison between Cisco and IBM. Why IBM? Simply because at this time, they are the only blade vendor that offers a Converged Network Adapter (CNA) that will work with the Cisco Nexus 5000 line. At this time Dell and HP do not offer a CNA for their blade server line, so IBM is the closest we can come to Cisco’s offering. I don’t plan on spending time educating you on blades, because if you are interested in this topic, you’ve probably already done your homework. My goal with this post is to show the pros (+) and cons (-) that each vendor has with their blade offering, based on my personal, neutral observation.

Chassis Variety / Choice: winner in this category is IBM.

IBM currently offers 5 types of blade chassis: BladeCenter S, BladeCenter E, BladeCenter H, BladeCenter T and BladeCenter HT. Each IBM blade chassis has its own niche: the BladeCenter S is designed for small or remote offices with local storage capabilities, whereas the BladeCenter HT is designed for Telco environments with options for NEBS compliant features including DC power. At this time, Cisco only offers a single blade chassis (the 5108).

IBM BladeCenter H

Cisco UCS 5108

Server Density and Server Offerings: winner in this category is IBM. IBM’s BladeCenter E and BladeCenter H chassis offer up to 14 blade servers with servers using Intel, AMD and Power PC processors. In comparison, Cisco’s 5108 chassis offers up to 8 server slots and currently offers servers with Intel Xeon processors. As an honorable mention, Cisco does offer a “full width” blade (Cisco UCS B250 server) that provides up to 384GB of RAM in a single blade server across 48 memory slots, offering the ability to get to higher memory at a lower price point.

Management / Scalability: winner in this category is Cisco.

This is where Cisco is changing the blade server game. The traditional blade server infrastructure calls for each blade chassis to have its own dedicated management module to gain access to the chassis’ environmentals and to remote control the blade servers. As you grow your blade chassis environment, you begin to manage multiple servers. Beyond the ease of management, the management software that the Cisco 6100 series offers provides users with the ability to manage server service profiles that consist of things like MAC Addresses, NIC Firmware, BIOS Firmware, WWN Addresses and HBA Firmware (just to name a few).

Cisco UCS 6100 Series Fabric Interconnect

With Cisco’s UCS 6100 Series Fabric Interconnects, you are able to manage up to 40 blade chassis with a single pair of redundant UCS 6140XP (consisting of 40 ports.)

If you are familiar with the Cisco Nexus 5000 product, then understanding the role of the Cisco UCS 6100 Fabric Interconnect should be easy. The UCS 6100 Series Fabric Interconnect does for the Cisco UCS servers what Nexus does for other servers: unifies the fabric. HOWEVER, it’s important to note the UCS 6100 Series Fabric Interconnect is NOT a Cisco Nexus 5000. The UCS 6100 Series Fabric Interconnect is only compatible with the UCS servers.

Cisco UCS I/O Connectivity Diagram (UCS 5108 Chassis with 2 x 6120 Fabric Interconnects)

If you have other servers, with CNAs, then you’ll need to use the Cisco Nexus 5000.

The diagram on the right shows a single connection from the FEX to the UCS 6120XP; however, the FEX has 4 uplinks, so if you want (need) more throughput, you can have it. This design provides each half-wide Cisco B200 server with the ability to have 2 CNA ports with redundant pathways. If you are satisfied with using a single FEX connection per chassis, then you have the ability to scale up to 20 x blade chassis with a Cisco UCS 6120 Fabric Interconnect, or 40 chassis with the Cisco UCS 6140 Fabric Interconnect. As hinted in the previous section, the management software for all connected UCS chassis resides in the redundant Cisco UCS 6100 Series Fabric Interconnects. This design offers a highly scalable infrastructure that enables you to scale simply by dropping in a chassis and connecting the FEX to the 6100 switch. (Kind of like Lego blocks.)
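The trade-off between throughput per chassis and the number of chassis you can hang off one fabric interconnect can be sketched in a few lines of Python. This is a simplification under my own assumptions: the 40 port count for the 6140XP is mentioned above, the 20 port count for the 6120XP is inferred from the 20 chassis figure, and I am pretending every port is used for chassis uplinks with none set aside for upstream LAN or SAN connections. `max_chassis` is a made-up helper name.

```python
# Port counts per fabric interconnect. 40 ports for the 6140XP is from the
# post; 20 ports for the 6120XP is inferred from the "20 x blade chassis"
# figure and is an assumption here.
FABRIC_INTERCONNECT_PORTS = {"6120XP": 20, "6140XP": 40}
FEX_UPLINKS_MAX = 4  # each FEX has 4 uplinks, per the post


def max_chassis(model, uplinks_per_fex):
    """Chassis supported if every port carries a FEX uplink (simplified)."""
    if not 1 <= uplinks_per_fex <= FEX_UPLINKS_MAX:
        raise ValueError(f"uplinks_per_fex must be 1-{FEX_UPLINKS_MAX}")
    return FABRIC_INTERCONNECT_PORTS[model] // uplinks_per_fex


for model in FABRIC_INTERCONNECT_PORTS:
    for uplinks in (1, 2, 4):
        print(model, uplinks, "uplink(s):", max_chassis(model, uplinks), "chassis")
```

With one uplink per FEX you get the 20 and 40 chassis numbers quoted above; cabling all 4 uplinks drops that to 5 and 10 chassis in exchange for more bandwidth per chassis.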

On the flip side, while this architecture is simple, it’s also limited. There is currently no way to add additional I/O to an individual server. You get 2 x CNA ports per Cisco B200 server or 4 x CNA ports per Cisco B250 server.

As previously mentioned, IBM has a strategy that is VERY similar to the Cisco UCS strategy using the Cisco Nexus 5000 product line. IBM’s solution consists of:

- IBM BladeCenter H Chassis

- 10Gb Pass-Thru Module

- CNAs on the blade servers

BladeCenter H Diagram with Nexus 5010 (using 10Gb Passthru Modules)

Until IBM and Cisco design a Cisco Nexus switch that integrates into the IBM BladeCenter H chassis, using a 10Gb pass-thru module is the best option to get true DataCenter Ethernet (or Converged Enhanced Ethernet) from the server to the Nexus switch. The performance for the IBM solution should equal the Cisco UCS design, since it’s just passing the signal through; however, the connectivity is going to be more with the IBM solution. Passing signals through means NO cable consolidation: for every server you’re going to need a connection to the Nexus 5000. For a fully populated IBM BladeCenter H chassis, you’ll need 14 connections to the Cisco Nexus 5000. If you are using the Cisco 5010 (20 ports) you’ll eat up all but 6 ports. Add a 2nd IBM BladeCenter chassis and you’re buying more Cisco Nexus switches. Not quite the scalable design that the Cisco UCS offers.
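To see how quickly pass-thru cabling eats Nexus ports, here is a small Python sketch of the arithmetic. It assumes one link per blade per pass-thru module (the same count used above), that all 20 ports on a Nexus 5010 are free for server links, and it looks at one side of the fabric only. `nexus_ports_needed` is a hypothetical helper name.

```python
import math

NEXUS_5010_PORTS = 20     # port count quoted in the post
BLADES_PER_CHASSIS = 14   # fully populated BladeCenter H


def nexus_ports_needed(chassis_count, blades_per_chassis=BLADES_PER_CHASSIS):
    """Return (links, switches, spare ports) for pass-thru connectivity.

    Assumes one 10Gb link per blade and that every switch port is usable
    for server links, which ignores any uplinks the switch itself needs.
    """
    links = chassis_count * blades_per_chassis
    switches = math.ceil(links / NEXUS_5010_PORTS)
    spare = switches * NEXUS_5010_PORTS - links
    return links, switches, spare


for chassis in (1, 2, 3):
    links, switches, spare = nexus_ports_needed(chassis)
    print(f"{chassis} chassis: {links} links, {switches} switch(es), {spare} spare ports")
```

One chassis leaves 6 spare ports on a single 5010, and the second chassis already forces a second switch.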

IBM offers a 10Gb Ethernet Switch Option from BNT (Blade Networks) that will work with converged switches like the Nexus 5000, but at this time that upgrade is not available. Once it does become available, it would reduce the connectivity requirements down to a single cable, but, adding a switch between the blade chassis and the Nexus switch could bring additional management complications. That is yet to be seen.

IBM’s BladeCenter H (BCH) does offer something that Cisco doesn’t – additional I/O expansion. Since this solution uses two of the high speed bays in the BCH, bays 1, 2, 3 & 4 remain available. Bays 1 & 2 are mapped to the onboard NICs on each server, and bays 3&4 are mapped to the 1st expansion card on each server. This means that 2 additional NICs and 2 additional HBAs (or NICs) could be added in conjunction with the 2 CNAs on each server. Based on this, IBM potentially offers more I/O scalability.

And the Winner Is…

It depends. I love the concept of the Cisco UCS platform. Servers are seen as processors and memory: building blocks that are centrally managed. Easy to scale, easy to size. However, is it for the average datacenter that only needs 5 servers with high I/O? Probably not. I see the Cisco UCS as a great platform for datacenters with more than 14 servers needing high I/O bandwidth (like a virtualization server or database server). If your datacenter doesn’t need that type of scalability, then perhaps going with IBM’s BladeCenter solution is the choice for you. Going the IBM route gives you flexibility to choose from multiple processor types and gives you the ability to scale into a unified solution in the future. While ideal for scalability, the IBM solution is currently more complex and potentially more expensive than the Cisco UCS solution.

Let me know what you think. I welcome any comments.