(updated 1/13/2010 – see bottom of blog for updates)

Eric Gray at www.vcritical.com blogged today about the benefits of using a flash-based device, like an SD card, for loading VMware ESXi, so I thought I would take a few minutes to touch on the topic.

As Eric mentions, probably the biggest benefit of running VMware ESXi from an embedded device is that you don’t need local drives, which lowers the power and cooling requirements of your blade server. While he mentions HP in his blog, both HP and Dell offer SD slots in their blade servers – so let’s take a look:

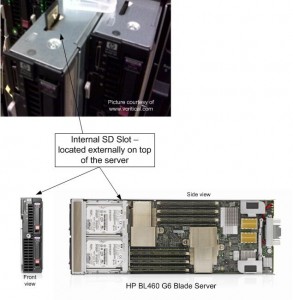

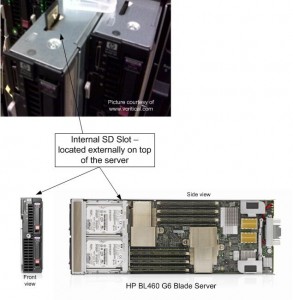

HP

HP currently offers these SD slots in their BL460 G6 and BL490 G6 blade servers. As you can see from the picture on the left (thanks again to Eric at vCritical.com), HP lets you access the SD slot from the top of the blade server. This makes it fairly convenient to access, although once the image is installed on the SD card, it’s probably not ever coming out. HP’s QuickSpecs for the BL460 G6 list an “HP 4GB SD Flash Media” that has a current list price of $70; however, I have been unable to find any documentation that says you MUST use this SD card, so if you want to try your own personal SD card first, good luck. It is important to note that, unlike Dell, HP does not currently offer VMware ESXi, or any other virtualization vendor’s software, pre-installed on an SD card.

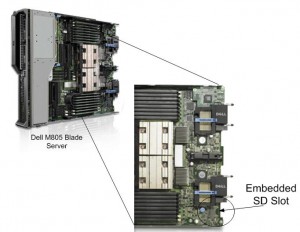

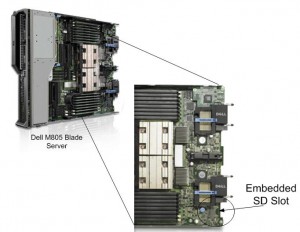

Dell

Dell has been offering SD slots on select servers for quite a while. In fact, I can remember seeing it at VMworld 2008. Everyone else was showing “embedded hypervisors” on USB keys while Dell was using an SD card. I don’t know that I have a personal preference of USB vs SD, but the point is that Dell was ahead of the game on this one.

Dell currently offers their SD slot only on their M805 and M905 blade servers. These are full-height servers, which could be considered good candidates for virtualization due to their redundant connectivity, high memory capacity and high I/O (but that’s for another blog post.)

Dell chose to place the SD slots on the bottom rear of their blade servers. I’m not sure I agree with the placement, because if you need to access the card, for whatever reason, you have to pull the server completely out of the chassis to service it. It’s a small thing, but it adds time and complexity to the serviceability of the server.

An advantage that Dell has over HP is that they offer VMware ESXi 4 pre-loaded on the SD card upon delivery. Per the Dell website, an SD card with ESXi 4 (basic, not Standard or Enterprise) is available for $99. It’s listed as “VMware ESXi v4.0 with VI4, 4CPU, Embedded, Trial, No Subsc, SD,NoMedia”. Yes, it’s considered a “trial” and it’s the basic version with no bells or whistles; however, it is pre-loaded, which saves time. There are additional options to upgrade ESXi to either Standard or Enterprise as well (for additional cost, of course).

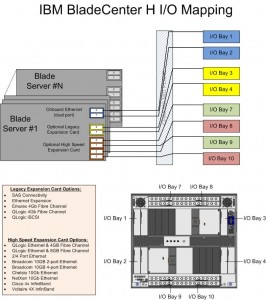

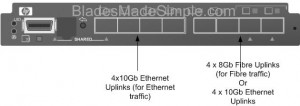

It is important to note that this discussion was only about SD slots. All of the blade server vendors, including IBM, have incorporated internal USB slots into their blade servers, so even if a specific server does not have an SD slot, there is still the ability to load the hypervisor onto a USB key (where supported).

1/13/2010 UPDATE – SD slots are also available on the BL280 G6 and BL685 G6.

There is also an HP Advisory discouraging use of an internal USB key for embedded virtualization. Check it out at:

http://h20000.www2.hp.com/bizsupport/TechSupport/Document.jsp?objectID=c01957637&lang=en&cc=us&taskId=101&prodSeriesId=3948609&prodTypeId=3709945